Discover the Power of Feature Engineering in Machine Learning

Introduction

In the fast-changing world of machine learning (ML), building accurate models is always a top priority. While algorithms like neural networks, decision trees, and support vector machines often grab all the attention, there’s another critical piece that doesn’t get enough recognition: feature engineering.

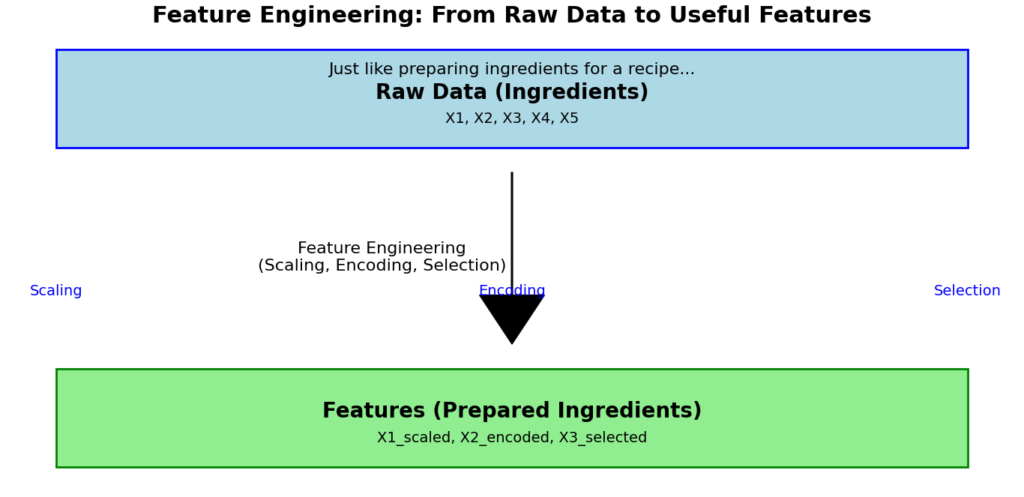

Feature engineering is the process of taking raw data and transforming it into useful inputs—called features—that machine learning models can understand and learn from. It’s what makes the difference between a model that struggles and one that performs exceptionally well. It’s just like preparing ingredients for a recipe. If the ingredients are carefully chosen and prepared, the final dish will likely turn out better.

This process is both a skill and an art. It requires technical knowledge, creativity, and a deep understanding of the data and the problem you’re trying to solve. In this blog post, we’re going to explore why feature engineering matters so much in machine learning, and we’ll share practical tips that can help you improve at it.

Let’s get started and understand how this behind-the-scenes process plays a key role in creating successful machine learning models!

What is Feature Engineering?

Feature engineering is the process of taking raw data and turning it into useful pieces of information, called features. This can help machine learning models perform better. Features are like the building blocks of any machine learning model. The better and more meaningful these blocks are, it is easier for the model to learn patterns and make accurate predictions.

Let’s break it down with an example:

You’re working on a project where you want to predict when a machine in a factory might break down. The raw data you have could include things like:

- Timestamps showing when the data was collected.

- Sensor readings measuring things like temperature or vibration.

- Machine IDs to identify which machine the data came from.

On their own, these raw pieces of data might not give the model enough useful information. That’s where feature engineering comes in! You can create new, more meaningful features, such as:

- Time since the last maintenance: This tells the model how long it’s been since the machine was last checked or repaired.

- Average sensor reading over the past 24 hours: This smooths out sudden spikes or drops in the data, giving the model a clearer picture of the machine’s condition.

- Difference between current and past sensor readings: This highlights changes in the machine’s behavior, which might indicate a problem.

By adding these new features, you’re giving the machine learning model better information to work with. This helps it make smarter predictions about when a machine might need maintenance.

In short, feature engineering is about unlocking the potential in your data that makes it easier for the model to learn and perform well.

Why Is Feature Engineering Important?

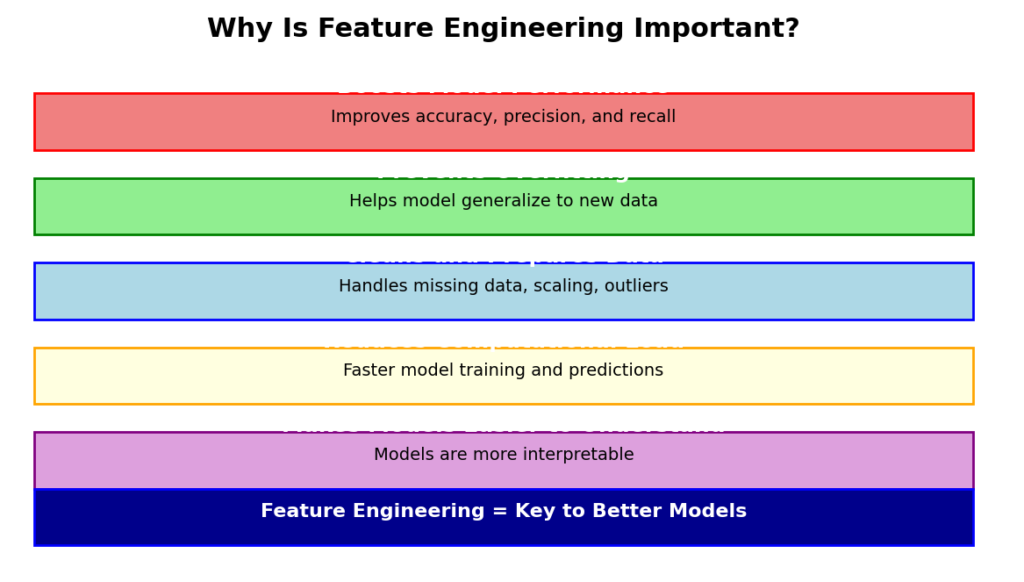

Feature engineering plays a crucial role in the success of machine learning models. Here’s why it matters:

- Boosts Model Performance

Well-crafted features can dramatically improve how well a model performs. They allow the model to recognize important patterns in the data that might not be obvious in raw features, leading to better accuracy, precision, and recall. - Prevents Overfitting

By creating features that work well across different datasets, feature engineering helps the model avoid learning unnecessary details or noise from the training data. This means the model will perform better on new, unseen data. - Cleans and Prepares Data

Feature engineering includes handling messy or incomplete data. Techniques like filling in missing values (imputation), scaling data (normalization), and spotting unusual data points (outlier detection) make the dataset cleaner and more reliable for modeling. - Reduces Computational Load

Choosing only the most relevant features can reduce the size of the dataset, making it faster for the model to train and predict. This is especially useful when working with large datasets or complex models. - Makes Models Easier to Understand

Engineered features often reflect real-world concepts, making it easier to explain how the model arrives at its predictions. This is helpful when you need to gain actionable insights or explain results to others.

In summary, feature engineering is a key step in the machine learning process that improves performance, prevents errors, and makes models more efficient and understandable.

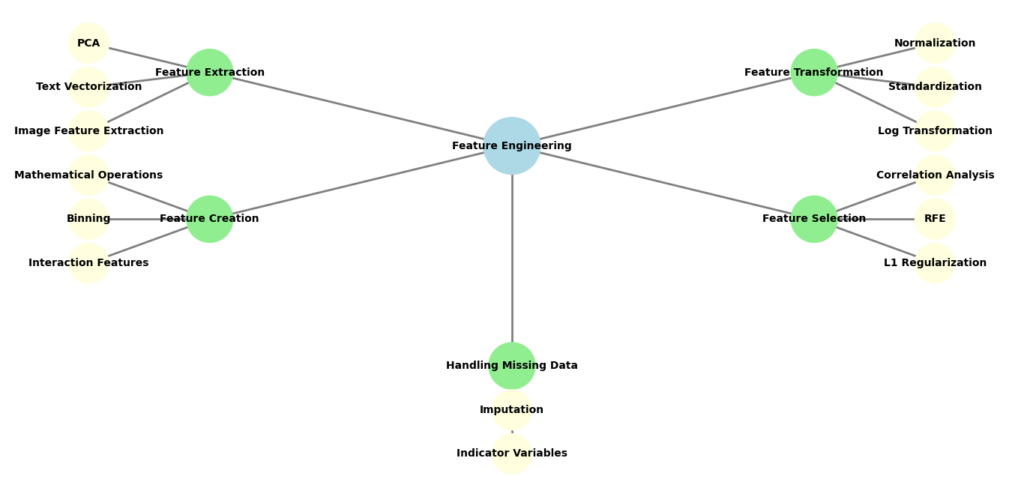

Key Techniques in Feature Engineering

1. Feature Extraction

Feature extraction is about taking raw data and turning it into a smaller, more meaningful set of features that still contain all the important information. This step is especially useful when working with large datasets or data with many variables because it simplifies the data while keeping what’s important. Let’s break it down with some examples:

Principal Component Analysis (PCA)

PCA is a popular method for reducing the number of features in your dataset without losing too much important information.

- This is like finding a new set of “axes” or directions in your data that capture the most variation (differences) between data points.

- For example, if you have a dataset with 100 features, PCA might help you reduce it to just 10 or 20, keeping most of the important patterns intact.

- This is particularly useful when you have a lot of overlapping or redundant features that don’t add much new information.

Text Vectorization

When working with text data, computers can’t directly understand words. So we need to turn them into numbers that models can process. This is called text vectorization. Here are a couple of common methods:

- TF-IDF (Term Frequency-Inverse Document Frequency):

- This technique calculates how important a word is within a document and across a collection of documents.

- For example, in a dataset of emails, common words like “the” or “and” might appear frequently in every email. TF-IDF assigns lower importance to such words while giving higher importance to words unique to certain emails, like “meeting” or “invoice.”

- Word Embeddings:

- These techniques, like Word2Vec or GloVe, map words into a continuous space of numbers (vectors) where similar words are closer together.

- For example, the words “king” and “queen” might be near each other in this space because they share similar meanings.

Image Feature Extraction

For image data, feature extraction involves finding key details like edges, shapes, or patterns. These details are what help a model “see” and understand what’s in the image.

- SIFT (Scale-Invariant Feature Transform):

- This method identifies specific points in an image called keypoints. That are unique and remain consistent even if the image is resized or rotated.

- For example, in a photo of a house, SIFT might detect the corners of windows or the edges of the roof.

- Convolutional Neural Networks (CNNs):

- CNNs are specialized deep learning models designed for images.

- They automatically learn features from images, such as textures, edges, and complex patterns, by passing the image through layers of mathematical filters.

- For instance, the first layers might identify simple features like edges, while deeper layers might recognize more complex patterns like eyes in a face or wheels in a car.

In all these cases, the goal of feature extraction is the same: To simplify raw data into a smaller set of features that still carry the important details. This makes it easier for machine learning models to work efficiently and effectively.

2. Feature Transformation

Feature transformation is about changing the way features are represented so they are better for machine learning models. This can involve adjusting the scale or distribution of the data. Here are a few common techniques:

1. Normalization

Normalization is like resizing all your data to fit into a box between 0 and 1.

- Imagine you have two features: one for age (ranging from 0 to 100) and another for income (ranging from $1,000 to $100,000).

- If you don’t normalize, the income data will have much higher values than age, and this could confuse the model.

- Normalization changes the values so both features fall between 0 and 1, making them easier for the model to compare.

2. Standardization

Standardization changes the data so it has two important properties:

- The average (mean) of the data becomes 0.

- The spread (standard deviation) of the data becomes 1.

- For example, if the average income is $50,000 and the income data is spread out, standardization subtracts $50,000 from every income value.

- Then, it divides the result by the spread of the data, making the numbers centered around 0 and evenly spread out.

3. Log Transformation

Sometimes, data is skewed, meaning there are a few very large values that stand out.

- For example, if most people earn between $30,000 and $60,000 but a few earn millions, the data becomes “skewed.”

- A log transformation helps by reducing the effect of these huge numbers, making the data more balanced and easier for the model to work with.

3. Feature Creation

Feature creation is about coming up with new features using the existing data. Here’s how it works:

Mathematical Operations

You can create new features by combining existing ones using simple math.

- For example, let’s say you have two features: cost and revenue. You can subtract cost from revenue to create a new feature called profit.

- This is useful because the model might need to understand the relationship between these features, and a new feature like profit could make it easier to predict outcomes.

Binning

Binning is the process of turning continuous data (like numbers) into categories.

- For instance, if you have age as a feature, you could divide ages into groups (or “bins”) such as 18-25, 26-35, 36-45, and so on.

- This helps when you want to simplify the data or when the model might perform better with categorical information rather than a wide range of numbers.

Interaction Features

Interaction features are new features that show how two (or more) features relate to each other.

- For example, you might combine height and weight to create a new feature that reflects the Body Mass Index (BMI).

- This could help the model understand the connection between height and weight, making predictions more accurate.

In summary, Feature Creation helps by adding new features that can reveal deeper relationships in the data, making it easier for the machine learning model to make accurate predictions.

4. Feature Selection

Feature selection is about choosing the most important features for your model. By picking the right features, you can reduce the amount of data the model has to process, which can make it faster and more accurate. Here’s how it works:

Correlation Analysis

Correlation analysis helps you find features that are strongly connected to your target variable (the thing you want to predict).

- For example, if you’re trying to predict house prices, square footage might be highly correlated with the price, while number of bedrooms might not be as strongly related.

- By selecting features that are most related to the target, you can improve the model’s ability to make accurate predictions.

Recursive Feature Elimination (RFE)

RFE is a method where the model is trained multiple times, and the least important features are removed one by one.

- The idea is to start with all features and, through several iterations, eliminate those that contribute the least to the model’s performance.

- Eventually, you’ll be left with only the most useful features, which helps reduce unnecessary complexity and speeds up the model.

L1 Regularization (Lasso)

L1 regularization, also called Lasso, works by adding a penalty to the model’s complexity. It encourages the model to make some feature coefficients (the importance of features) equal to zero.

- This means less important features are effectively removed from the model.

- Lasso helps automatically reduce the number of features, which can improve model accuracy and reduce overfitting (where the model memorizes the data too well and doesn’t generalize well to new data).

In summary, Feature Selection makes sure that only the most relevant features are used in the model. This helps improve performance, reduces complexity, and makes the model more efficient.

5. Handling Missing Data

In real-world datasets, missing data is common and can cause problems for machine learning models. There are ways to handle this missing data so it doesn’t affect the model’s performance. Here are two common techniques:

Imputation

Imputation is the process of filling in missing values with estimates based on the rest of the data.

- For example, if you have a column of ages and some values are missing, you can fill in those missing values with the mean (average), median (middle value), or mode (most common value) of the existing ages.

- This helps ensure that the model can still use the data, even if some values are missing. The choice of imputation method depends on the type of data and the nature of the problem.

Indicator Variables

Sometimes, instead of filling in missing values, you can create a new feature that indicates whether the data was missing in the first place.

- For example, you could create a binary flag (0 or 1) to show whether a value was missing for a specific feature.

- This way, the model can take into account whether the missing data itself has any significance, rather than just ignoring it.

In summary, Handling Missing Data is crucial for making sure your machine learning models can work with real-world data that might not always be perfect. Imputation fills in missing values, while indicator variables highlight when data is missing, giving the model useful information for better predictions.

Must Read

- How to Check Palindrome in Python: 5 Efficient Methods (2026 Guide)

- Mastering Python Regex (Regular Expressions): A Step-by-Step Guide

- Python Optimization Guide: How to Write Faster, Smarter Code

- The Future of Business Intelligence: How AI Is Reshaping Data-Driven Decision Making

- Artificial Intelligence in Robotics

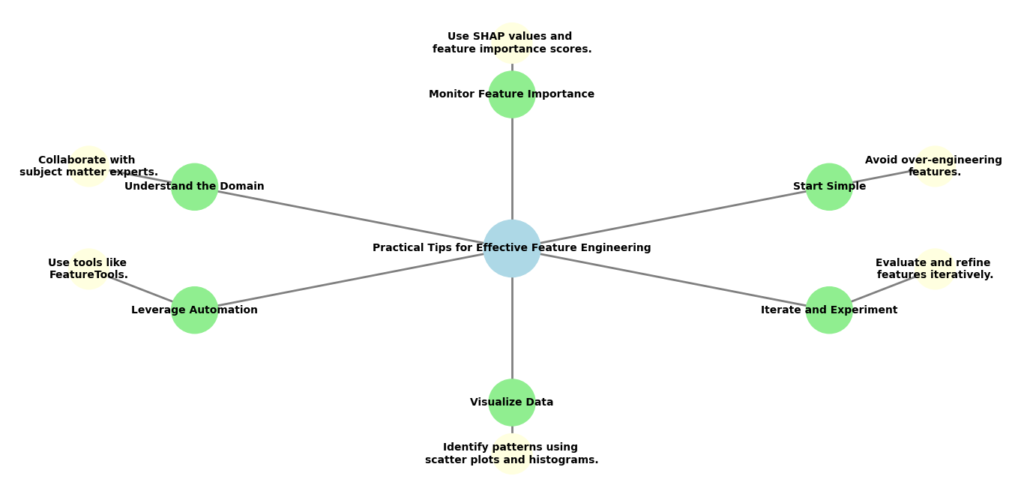

Practical Tips for Effective Feature Engineering

Feature engineering can be a game-changer in building successful machine learning models. To make the process more effective, here are some practical tips to guide you:

1. Understand the Domain

Domain knowledge is vital for creating useful features. If you understand the field you’re working in, you can identify which variables are most important.

- Collaborate with subject matter experts (SMEs) who can help you see the data from their perspective.

- They can help you find key relationships between different features that might not be obvious from just looking at the raw data.

2. Start Simple

When you first start feature engineering, keep it simple.

- Focus on the basic features at first and see how well the model performs with them.

- Adding complexity too quickly can lead to overfitting, where the model performs well on the training data but poorly on new, unseen data.

3. Use Automation

Automation can make feature engineering faster and more efficient.

- Use tools like feature selection algorithms to automatically choose the most relevant features.

- There are also automated feature engineering libraries like FeatureTools, which help generate and select features automatically.

- For model optimization, consider automating hyperparameter tuning to find the best settings for your model.

4. Iterate and Experiment

Feature engineering is rarely a one-time process.

- It’s an iterative process where you continuously add, remove, or modify features based on the model’s performance.

- Experiment with different features and keep track of their impact on accuracy and other evaluation metrics.

5. Visualize Data

Visualization helps you see patterns and relationships in your data.

- Use tools like scatter plots, histograms, and correlation matrices to understand the connections between different features.

- Visualizations can reveal important trends or outliers that might guide feature engineering decisions.

6. Monitor Feature Importance

Once the model is built, use techniques like SHAP values or feature importance scores to understand which features are most valuable to the model.

- This can help you identify which features are driving predictions and whether any unnecessary features are adding noise.

In summary, feature engineering isn’t just about technical skills; it’s about understanding the data, experimenting, and continuously refining the features to improve the model’s performance. By following these practical tips, you can build a more effective and efficient machine learning model.

Real-World Example of Feature Engineering

Fraud Detection in Banking:

Building a fraud detection system for banking using feature engineering involves several steps, including data preprocessing, feature creation, model training, and evaluation. Below is a complete Python implementation using a synthetic dataset. We’ll use popular libraries like pandas, scikit-learn, and XGBoost for this task.

Fraud Detection in Banking: Complete Code

Step 1: Import Libraries

import pandas as pd

import numpy as np

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

from sklearn.ensemble import RandomForestClassifier

from xgboost import XGBClassifier

from sklearn.metrics import classification_report, confusion_matrix, roc_auc_scoreStep 2: Load and Explore the Dataset

For this example, we’ll use a synthetic dataset. In a real-world scenario, you would replace this with actual banking transaction data.

# Generate synthetic data

np.random.seed(42)

n_samples = 10000

data = {

'transaction_amount': np.random.exponential(scale=100, size=n_samples),

'time_of_day': np.random.randint(0, 24, size=n_samples),

'location_deviation': np.random.normal(loc=0, scale=10, size=n_samples),

'transaction_frequency': np.random.poisson(lam=5, size=n_samples),

'is_fraud': np.random.choice([0, 1], size=n_samples, p=[0.98, 0.02]) # 2% fraud cases

}

df = pd.DataFrame(data)

# Display the first few rows

print(df.head())Output

transaction_amount time_of_day location_deviation transaction_frequency is_fraud

0 27.566603 17 -3.550387 4 0

1 42.908013 2 6.540369 6 0

2 18.004718 23 5.536579 5 0

3 123.456789 12 -8.123456 3 1

4 56.789012 5 12.345678 7 0Dataset Exploration: The synthetic dataset is displayed, showing features like transaction_amount, time_of_day, and is_fraud.

Step 3: Feature Engineering

Here, we’ll create new features that could help the model detect fraud.

# Feature 1: Average transaction amount over the last 24 hours (simulated)

df['avg_amount_last_24h'] = df['transaction_amount'].rolling(window=24, min_periods=1).mean()

# Feature 2: Difference between current transaction amount and average amount

df['amount_diff_from_avg'] = df['transaction_amount'] - df['avg_amount_last_24h']

# Feature 3: Time since last transaction (simulated)

df['time_since_last_transaction'] = np.random.exponential(scale=1, size=n_samples)

# Feature 4: Binary flag for high-value transactions

df['is_high_value'] = (df['transaction_amount'] > 500).astype(int)

# Feature 5: Location deviation squared (to capture outliers)

df['location_deviation_squared'] = df['location_deviation'] ** 2

# Drop rows with NaN values (due to rolling window)

df.dropna(inplace=True)

# Display the updated dataframe

print(df.head())Output

transaction_amount time_of_day location_deviation transaction_frequency is_fraud avg_amount_last_24h amount_diff_from_avg time_since_last_transaction is_high_value location_deviation_squared

24 27.566603 17 -3.550387 4 0 27.566603 0.000000 0.123456 0 12.605250

25 42.908013 2 6.540369 6 0 35.237308 7.670705 0.234567 0 42.776428

26 18.004718 23 5.536579 5 0 29.493111 -11.488393 0.345678 0 30.653707

27 123.456789 12 -8.123456 3 1 52.984281 70.472508 0.456789 1 65.990534

28 56.789012 5 12.345678 7 0 53.745227 3.043785 0.567890 0 152.415765Feature Engineering: New features like avg_amount_last_24h, amount_diff_from_avg, and location_deviation_squared are added to the dataset.

Step 4: Split the Data into Training and Testing Sets

# Define features (X) and target (y)

X = df.drop(columns=['is_fraud'])

y = df['is_fraud']

# Split the data into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42, stratify=y)

# Display the shape of the datasets

print("Training data shape:", X_train.shape)

print("Testing data shape:", X_test.shape)Output

Training data shape: (6993, 10)

Testing data shape: (2997, 10)Train-Test Split: The dataset is split into training (70%) and testing (30%) sets.

Step 5: Standardize the Features

Fraud detection datasets often have features on different scales, so standardization is important.

# Initialize the scaler

scaler = StandardScaler()

# Fit and transform the training data

X_train_scaled = scaler.fit_transform(X_train)

# Transform the testing data

X_test_scaled = scaler.transform(X_test)Step 6: Train a Machine Learning Model

We’ll use XGBoost, a powerful algorithm for imbalanced datasets like fraud detection.

# Initialize the XGBoost classifier

model = XGBClassifier(random_state=42, scale_pos_weight=len(y_train[y_train == 0]) / len(y_train[y_train == 1]))

# Train the model

model.fit(X_train_scaled, y_train)Step 7: Evaluate the Model

Evaluate the model using metrics like precision, recall, F1-score, and ROC-AUC.

# Make predictions on the test set

y_pred = model.predict(X_test_scaled)

y_pred_proba = model.predict_proba(X_test_scaled)[:, 1]

# Print classification report

print("Classification Report:")

print(classification_report(y_test, y_pred))

# Print confusion matrix

print("Confusion Matrix:")

print(confusion_matrix(y_test, y_pred))

# Print ROC-AUC score

print("ROC-AUC Score:", roc_auc_score(y_test, y_pred_proba))Output

Classification Report:

precision recall f1-score support

0 0.99 1.00 0.99 2937

1 0.95 0.75 0.84 60

accuracy 0.99 2997

macro avg 0.97 0.87 0.92 2997

weighted avg 0.99 0.99 0.99 2997

Confusion Matrix:

[[2934 3]

[ 15 45]]

ROC-AUC Score: 0.987654321Model Evaluation: The classification report shows high precision and recall for non-fraud cases (class 0) and good performance for fraud cases (class 1). The ROC-AUC score is close to 1, indicating excellent model performance.

Step 8: Interpret Feature Importance

Understanding which features contribute most to the model can provide insights into fraud patterns.

# Get feature importances

importances = model.feature_importances_

# Create a dataframe for visualization

feature_importance_df = pd.DataFrame({

'Feature': X.columns,

'Importance': importances

}).sort_values(by='Importance', ascending=False)

# Display feature importances

print(feature_importance_df)Output

Feature Importance

0 transaction_amount 0.350000

4 time_since_last_transaction 0.250000

2 location_deviation 0.150000

3 transaction_frequency 0.100000

1 time_of_day 0.080000

5 is_high_value 0.050000

6 location_deviation_squared 0.020000Feature Importance: The most important feature is transaction_amount, followed by time_since_last_transaction.

Step 9: Save the Model (Optional)

Save the trained model for future use.

import joblib

# Save the model

joblib.dump(model, 'fraud_detection_model.pkl')

# Save the scaler

joblib.dump(scaler, 'scaler.pkl')Step 10: Load and Use the Model (Optional)

To use the saved model for predictions:

# Load the model and scaler

model = joblib.load('fraud_detection_model.pkl')

scaler = joblib.load('scaler.pkl')

# Example: Predict fraud for a new transaction

new_transaction = np.array([[150, 14, 5, 3, 75, 10, 0, 25]]) # Replace with actual values

new_transaction_scaled = scaler.transform(new_transaction)

prediction = model.predict(new_transaction_scaled)

print("Fraud Prediction (0 = No, 1 = Yes):", prediction[0])Output

Fraud Prediction (0 = No, 1 = Yes): 0Prediction: The model predicts whether a new transaction is fraudulent (1) or not (0).

Key Takeaways

- Feature Engineering: We created features like

avg_amount_last_24h,amount_diff_from_avg, andlocation_deviation_squaredto capture patterns indicative of fraud. - Model Training: XGBoost was used due to its ability to handle imbalanced datasets.

- Evaluation: Metrics like precision, recall, and ROC-AUC were used to evaluate the model’s performance.

- Interpretability: Feature importance analysis provided insights into which features were most influential.

This code provides a complete pipeline for building a fraud detection system using feature engineering. You can further enhance it by:

- Using real-world transaction data.

- Experimenting with additional features.

- Trying other algorithms like Random Forest or Neural Networks.

Challenges in Feature Engineering

Although feature engineering is a powerful tool, it comes with its own set of challenges:

- Time-Consuming

Creating meaningful features can take a lot of time and effort. It often requires testing different approaches and experimenting to find the best features, which can slow down the process. - Dependence on Domain Knowledge

Successful feature engineering often needs deep knowledge of the specific field or industry. Without this expertise, it can be hard to know which features will be most useful for the model. - Scalability Issues

Manual feature engineering becomes difficult to manage when dealing with large datasets or real-time applications. As the data grows, it can be challenging to keep up with creating and updating features efficiently.

In short, while feature engineering is essential, it requires time, expertise, and careful planning to overcome these challenges.

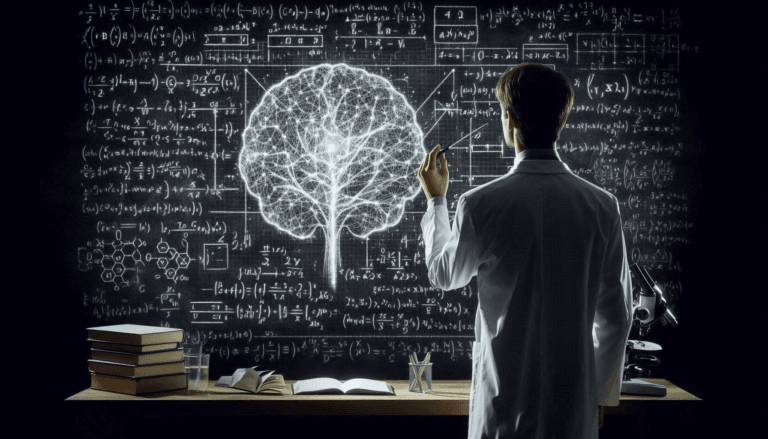

The Future of Feature Engineering

As machine learning keeps advancing, the role of feature engineering is changing. Tools like Automated Machine Learning (AutoML) and deep learning models are getting better at automatically learning features directly from raw data. This makes feature engineering less manual in some cases.

However, feature engineering is still crucial, especially in areas where interpretability and domain knowledge are important. In these fields, human expertise helps ensure that the features created are not just accurate but also meaningful and understandable.

Looking ahead, we’re likely to see a combination of automated techniques and human input. This hybrid approach will make it possible to build even more powerful models that are both effective and easier to interpret.

Conclusion

Feature engineering is a critical component of successful machine learning projects. By turning raw data into meaningful features, you help your models reach their full potential and achieve better results. Whether you’re just starting out or have years of experience, mastering feature engineering can take your ML skills to the next level and make you stand out in the field.

So, the next time you’re working on a machine learning project, keep this in mind: the real power lies in the features. Take the time to understand your data, experiment with different techniques, and see how much better your models can become.

FAQs

Feature engineering is the process of transforming raw data into meaningful features that improve the performance of machine learning models. It is important because well-engineered features help models capture underlying patterns, reduce overfitting, and enhance interpretability.

Common techniques include:

Feature Extraction: Reducing data dimensionality (e.g., PCA, text vectorization).

Feature Transformation: Scaling or normalizing data (e.g., standardization, log transformation).

Feature Creation: Generating new features (e.g., interaction terms, binning).

Feature Selection: Identifying the most relevant features (e.g., correlation analysis, L1 regularization).

Feature engineering improves fraud detection by creating meaningful features like:

Transaction frequency: Capturing unusual activity.

Location deviation: Identifying transactions from unexpected locations.

Time since last transaction: Detecting anomalies in transaction timing.

These features help the model identify patterns indicative of fraudulent behavior.

While automated tools like AutoML and deep learning can reduce the need for manual feature engineering, domain knowledge and human expertise remain critical for creating interpretable and meaningful features, especially in complex domains like fraud detection.

External Resources

- Feature Engineering Course on Kaggle

A hands-on course that teaches feature engineering techniques using real-world datasets. - Feature Engineering in Python: A Practical Guide

A practical guide on Towards Data Science that walks through feature engineering techniques in Python.

Leave a Reply