The Most Popular Neural Network Architectures You Should Know

Introduction

Neural networks are a key part of artificial intelligence (AI), helping machines learn from data and make decisions in a way loosely inspired by how our brains work. These networks are used in many areas, such as image recognition, language processing, and self-driving cars. There are many different types of neural network architectures, and each one is suited to specific kinds of problems. Knowing which architecture to use for a particular task is important because it helps you get the best results. In this blog post, we’ll go over the most popular neural network architectures and explain how to choose the right one for your project.

Why Neural Networks Are Important in AI and Machine Learning

Neural networks are central to modern AI and machine learning. Rather than following hand-written rules, they learn from data to make decisions. Here’s why they matter:

- Recognizing Patterns:

Neural networks are very good at finding patterns in data. This lets them recognize images, understand speech, and process language, tasks that are hard to solve with hand-written rules. By spotting these patterns, they can make sense of complex information and perform these tasks accurately.

- Getting Better Over Time:

Instead of being explicitly programmed, a neural network learns by adjusting its internal weights to reduce its errors on training examples. In general, more (and more varied) training data leads to better performance, which is why this ability to learn from data is so important for machine learning.

- How Neural Networks Are Used:

- Healthcare: Neural networks help with analyzing medical images and predicting diseases. They can spot problems in images like X-rays or MRIs and help predict health issues early.

- Finance: In the financial world, neural networks are used to detect fraud and to forecast stock prices. Better fraud detection helps make financial systems safer.

- Self-Driving Cars: Neural networks help self-driving cars make decisions, like avoiding obstacles and following traffic rules. This is crucial for driving safely.

Neural networks are changing industries by helping machines learn from data, improve over time, and make smart decisions. This is opening up exciting new possibilities in AI and machine learning.

Watch and Learn: Neural Networks Explained in Minutes

If you prefer a visual explanation, this video breaks down CNNs, RNNs, and GANs in just a few minutes. Perfect for beginners and experts alike!

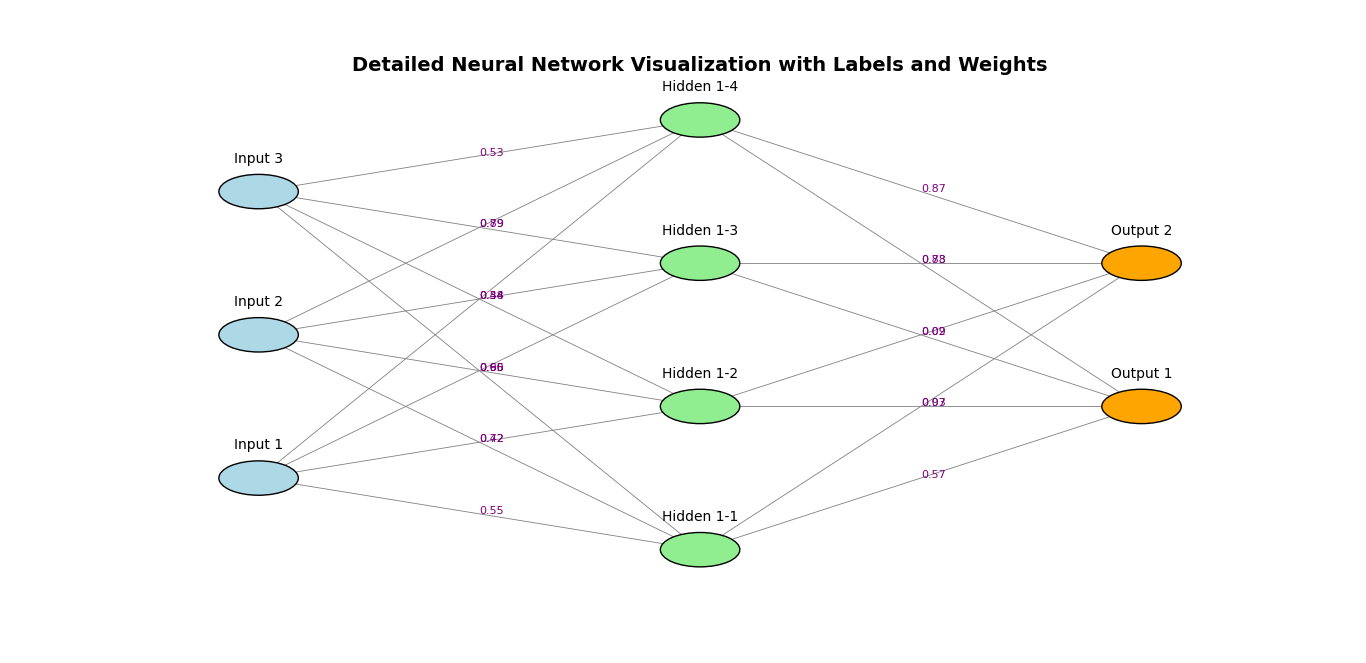

Artificial Neural Networks (ANNs)

An Artificial Neural Network (ANN) is a model inspired by how the brain processes information. It is made up of interconnected neurons that work together to solve complex problems. ANNs are the backbone of deep learning, a key area of machine learning that has transformed many AI applications.

Definition and Components of ANNs

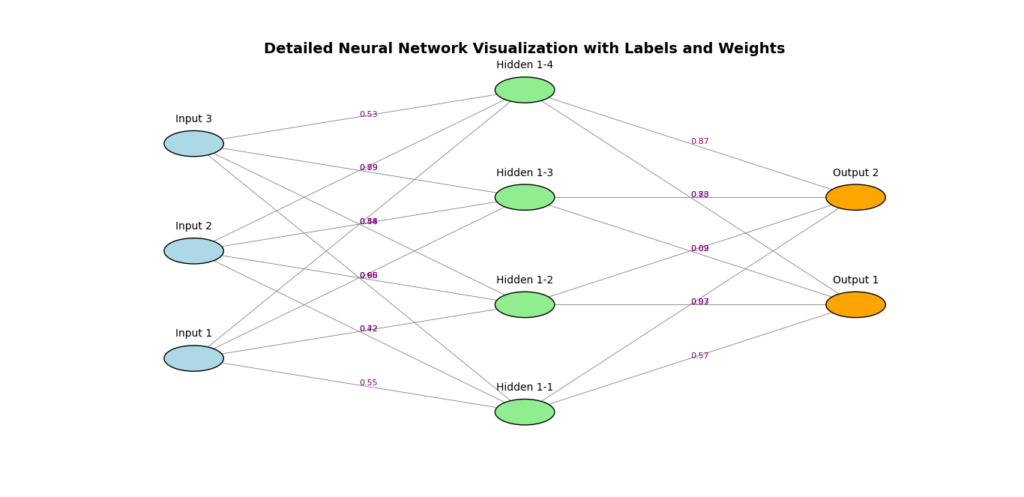

At the heart of every ANN are several important components:

- Neurons:

These are the fundamental units of a neural network. Each neuron receives inputs, multiplies each one by a weight, adds a bias, passes the result through an activation function, and sends the output to neurons in the next layer. The weights are what the network adjusts as it learns patterns from the data.

- Layers:

Neural networks are built in layers of neurons:

- Input Layer: This is where the data enters the network.

- Hidden Layers: These layers sit between the input and output layers. They process information and help the network learn from the data.

- Output Layer: The final layer that gives the network’s predictions or results.

- Activation Functions:

Activation functions transform a neuron’s weighted input into its output. They introduce non-linearity, which lets the network learn complex patterns; without them, a stack of layers would behave like a single linear model. Common activation functions include:

- Sigmoid: Outputs values between 0 and 1, commonly used in the output layer for binary classification tasks.

- ReLU (Rectified Linear Unit): Outputs the input directly if it’s positive, and zero if negative. It is cheap to compute and helps reduce the vanishing-gradient problem, which makes it a common default in deep networks.

- Tanh: Similar to sigmoid, but the output range is between -1 and 1.
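To make these components concrete, here is a minimal sketch in plain Python of a single neuron: a weighted sum of its inputs plus a bias, passed through one of the three activation functions listed above. The input, weight, and bias values are arbitrary illustrative numbers, not taken from any trained model.

```python
import math

def sigmoid(z):
    """Squash z into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Pass positive values through unchanged; clip negatives to zero."""
    return max(0.0, z)

def tanh(z):
    """Squash z into the range (-1, 1)."""
    return math.tanh(z)

def neuron(inputs, weights, bias, activation):
    """One artificial neuron: weighted sum of the inputs plus a bias, then an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)

# Illustrative values only; training would normally choose the weights and bias.
inputs = [1.0, 2.0]
weights = [0.5, 0.25]
bias = -0.5

for activation in (sigmoid, relu, tanh):
    print(activation.__name__, round(neuron(inputs, weights, bias, activation), 4))
```

Here the weighted sum is 0.5, so each activation simply reshapes that same number into its own output range.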

ANNs are central to modern AI, enabling machines to recognize patterns, improve over time, and make complex decisions.
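How does a network actually learn its weights? The sketch below uses one simple, assumed setup: a single sigmoid neuron trained with gradient descent on the four rows of the logical OR truth table. After each prediction it nudges its weights to reduce the error, which is the same basic idea that larger networks apply across many layers. The learning rate and number of epochs are illustrative choices, not recommended values.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Logical OR truth table: ((x1, x2), target)
OR_DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 1.0)]

def train_neuron(data, lr=0.5, epochs=2000):
    """Train one sigmoid neuron with stochastic gradient descent on cross-entropy loss."""
    w1, w2, b = 0.0, 0.0, 0.0
    for _ in range(epochs):
        for (x1, x2), target in data:
            prediction = sigmoid(w1 * x1 + w2 * x2 + b)
            # For sigmoid + binary cross-entropy, d(loss)/d(weighted sum) = prediction - target.
            error = prediction - target
            w1 -= lr * error * x1
            w2 -= lr * error * x2
            b -= lr * error
    return w1, w2, b

def predict(params, x1, x2):
    w1, w2, b = params
    return sigmoid(w1 * x1 + w2 * x2 + b)

params = train_neuron(OR_DATA)
for (x1, x2), target in OR_DATA:
    print((x1, x2), "target", target, "prediction", round(predict(params, x1, x2), 3))
```

A single neuron can only separate classes with a straight line, which is enough for OR; problems that are not linearly separable are where hidden layers become necessary.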

Convolutional Neural Networks (CNNs)

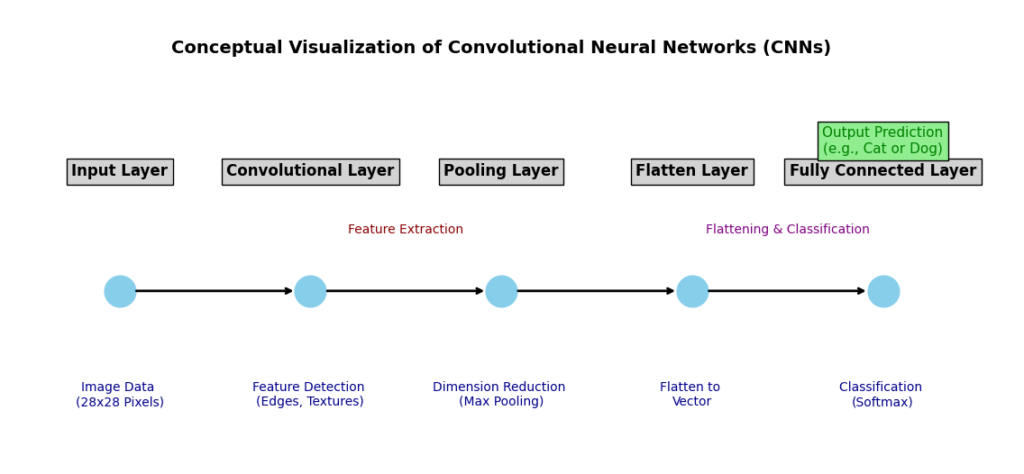

Convolutional Neural Networks (CNNs) are specialized neural networks designed to process visual data like images and videos. They work by identifying patterns such as edges, shapes, and textures, which helps in tasks like image classification and object detection. CNNs are a key part of AI systems, especially in applications that involve visual recognition.

How Convolutional Layers Work

At the heart of CNNs are convolutional layers, which perform a process called convolution. Here’s a simple breakdown:

- Filters (Kernels): Imagine placing a small grid of numbers (a filter) over part of an image. The filter responds to a particular pattern, such as an edge or a color. Filters are typically small, like 3×3 or 5×5, and their values are learned during training.

- Sliding Window Process: The filter moves (slides) across the image in small steps (called strides). At each step, it multiplies its values with the corresponding image pixel values and adds them up. This creates a feature map that highlights where certain patterns are present.

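- Padding: Extra pixels (usually zeros) are sometimes added around the image edges so that the filter can also be centered on border pixels. This is known as padding, and it also controls the size of the feature map: for example, a 3×3 filter with one pixel of padding and a stride of 1 produces an output the same size as the input.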
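The sliding-window steps above can be sketched in plain Python as follows. It is a teaching version, not how libraries implement it: a single-channel image, a square kernel, zero padding, and a hand-picked vertical-edge filter (a trained CNN would learn its own filter values). Like most deep-learning libraries, it computes cross-correlation (the kernel is not flipped), which is what CNN layers call convolution.

```python
def conv2d(image, kernel, stride=1, padding=0):
    """Slide a square kernel over a single-channel image and return the feature map."""
    k = len(kernel)
    h, w = len(image), len(image[0])

    # Zero-pad the image on all four sides.
    padded = [[0.0] * (w + 2 * padding) for _ in range(h + 2 * padding)]
    for i in range(h):
        for j in range(w):
            padded[i + padding][j + padding] = image[i][j]

    # Output size: (input + 2 * padding - kernel) // stride + 1
    out_h = (h + 2 * padding - k) // stride + 1
    out_w = (w + 2 * padding - k) // stride + 1

    feature_map = []
    for oi in range(out_h):
        row = []
        for oj in range(out_w):
            top, left = oi * stride, oj * stride
            # Multiply the window element-wise by the kernel and add everything up.
            total = 0.0
            for di in range(k):
                for dj in range(k):
                    total += padded[top + di][left + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map

# A 3x6 image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 0, 1, 1, 1] for _ in range(3)]
# Hand-picked filter that responds to dark-to-bright vertical edges.
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

print(conv2d(image, vertical_edge))  # [[0.0, 3.0, 3.0, 0.0]]
same = conv2d(image, vertical_edge, padding=1)
print(len(same), "x", len(same[0]))  # 3 x 6: padding=1 keeps the input size
```

The feature map is non-zero only where the window straddles the boundary between dark and bright pixels, which is exactly where the edge pattern is present.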

By detecting patterns at different locations, CNNs build an understanding of image features layer by layer. The early layers detect simple patterns (like edges), while deeper layers identify more complex features (like faces or objects).

How CNNs Are Used in Image and Video Recognition

CNNs are incredibly powerful for tasks involving visual data:

- Image Classification: In tasks like recognizing whether a picture contains a dog or a cat, CNNs analyze the image’s patterns and decide its category.

- Object Detection: CNNs not only recognize objects but also determine their locations within an image, drawing boxes around them.

- Video Recognition: By analyzing individual frames of a video, CNNs can detect actions, track objects, or identify scenes.

Structure of a CNN (CNN Architecture)

A typical CNN consists of the following layers:

- Convolutional Layer: Detects patterns in the image by applying filters.

- Pooling Layer: Reduces the size of feature maps while keeping important information. This helps make the network faster and less sensitive to small changes in the input image.

- Fully Connected Layer: Combines the features learned in previous layers to make predictions.
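A rough sketch of the last two layer types, again in plain Python: 2×2 max pooling with stride 2 (a common choice, assumed here), followed by flattening the result and passing it through a fully connected layer. The dense-layer weights are made-up numbers standing in for values a real CNN would learn.

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Keep only the largest value in each size x size window."""
    out_h = (len(feature_map) - size) // stride + 1
    out_w = (len(feature_map[0]) - size) // stride + 1
    return [
        [
            max(feature_map[i * stride + di][j * stride + dj]
                for di in range(size) for dj in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def flatten(feature_map):
    """Turn a 2-D feature map into one long list of features."""
    return [value for row in feature_map for value in row]

def dense(inputs, weights, biases):
    """Fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

feature_map = [[1, 2, 3, 4],
               [5, 6, 7, 8],
               [9, 10, 11, 12],
               [13, 14, 15, 16]]

pooled = max_pool2d(feature_map)
print(pooled)  # [[6, 8], [14, 16]]

features = flatten(pooled)
# Hypothetical weights for two output classes; a trained CNN would learn these.
scores = dense(features, weights=[[0.1, 0.1, 0.1, 0.1], [-0.1, 0.0, 0.0, 0.1]], biases=[0.0, 0.0])
print(scores)
```

Pooling shrinks the 4×4 map to 2×2 while keeping the strongest responses, so the fully connected layer has fewer inputs to combine.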

CNNs are particularly popular in tasks like medical image analysis, self-driving cars, and facial recognition systems. They can efficiently handle large amounts of visual data, making them important for image classification and other applications in AI and machine learning.

Recurrent Neural Networks (RNNs)

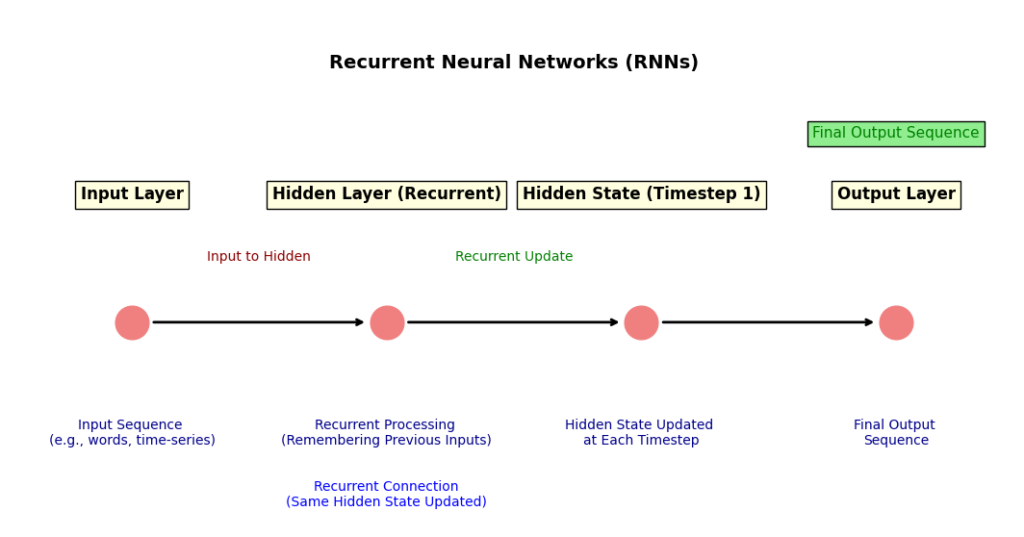

Recurrent Neural Networks (RNNs) are neural networks built to handle sequential data, where the order of the inputs matters. Unlike feedforward networks, which process each input independently, RNNs carry a hidden state (a kind of memory) from one step to the next, which makes them well suited to tasks involving text, speech, or time-series data.

How RNNs Work

The unique feature of RNNs is their ability to connect information across different points in a sequence:

- Sequential Processing: Suppose you’re analyzing the sentence “I love to read books.” A feedforward network that sees one word at a time has no knowledge of the words that came before it. An RNN, by contrast, carries information about earlier words forward while processing the current word, which helps it capture the flow and meaning of the sentence.

- Memory Mechanism: Each step of the sequence produces a hidden state, which captures important information from earlier steps. This hidden state is passed to the next step, helping the network maintain context.
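The hidden-state update can be sketched as a vanilla (Elman) RNN, where each step computes h_t = tanh(W_xh·x_t + W_hh·h_{t-1} + b). The weights and the one-number-per-step input encoding below are hypothetical; a real model would learn the weights and feed in word embeddings. Running two sequences that differ only in their first element shows that the earlier input still affects the final hidden state.

```python
import math

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a vanilla RNN over a sequence and return the hidden state after each step.

    Each step computes h_t = tanh(W_xh @ x_t + W_hh @ h_{t-1} + b_h).
    """
    hidden_size = len(b_h)
    h = [0.0] * hidden_size  # h_0: no context yet
    states = []
    for x in inputs:
        # The new hidden state mixes the current input with the previous hidden state.
        h = [
            math.tanh(
                sum(W_xh[i][j] * x[j] for j in range(len(x)))
                + sum(W_hh[i][j] * h[j] for j in range(hidden_size))
                + b_h[i]
            )
            for i in range(hidden_size)
        ]
        states.append(h)
    return states

# Hypothetical weights: 1 input feature, 2 hidden units.
W_xh = [[0.5], [-0.3]]
W_hh = [[0.4, 0.1], [0.2, 0.3]]
b_h = [0.0, 0.0]

# Two sequences that differ only in their first element.
seq_a = [[1.0], [0.0], [0.0]]
seq_b = [[-1.0], [0.0], [0.0]]
final_a = rnn_forward(seq_a, W_xh, W_hh, b_h)[-1]
final_b = rnn_forward(seq_b, W_xh, W_hh, b_h)[-1]
print(final_a, final_b)  # different: the first input is still "remembered"
```

The same weights are reused at every step, which is what lets an RNN handle sequences of any length.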

However, standard RNNs often struggle to remember information across long sequences because of a problem called vanishing gradients: during training, the error signal shrinks as it is propagated back through many time steps. To overcome this, variants such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs) were developed. They use gates that learn what to keep, update, or forget, which lets them retain critical information over longer periods.
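To see why gradients vanish, consider a scalar RNN h_t = tanh(w·h_{t-1} + x). By the chain rule, the gradient of the last hidden state with respect to the first is a product with one factor w·(1 − h_t²) per step. The sketch below assumes w = 0.5 and a constant input x = 0.5 purely for illustration, and shows that product shrinking toward zero as the sequence gets longer, so early steps barely influence learning. LSTM and GRU gating are not shown.

```python
import math

def grad_through_time(w, steps, x=0.5):
    """Gradient of the final hidden state h_T with respect to h_0 in a scalar RNN.

    The RNN is h_t = tanh(w * h_{t-1} + x). Since d tanh(a)/da = 1 - tanh(a)**2,
    the chain rule gives dh_T/dh_0 = product over t of w * (1 - h_t**2).
    """
    h = 0.0
    grad = 1.0
    for _ in range(steps):
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)  # one chain-rule factor per time step
    return grad

for steps in (1, 5, 10, 20, 50):
    print(f"{steps:>2} steps: dh_T/dh_0 = {grad_through_time(0.5, steps):.2e}")
```

Because every factor here is below 0.5, the gradient after 50 steps is smaller than 0.5 to the 50th power, far too small to carry a useful training signal.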

Why RNNs Are Important

RNNs are important because they can handle tasks where context and sequence are crucial:

- Natural Language Processing (NLP):

- Language Modeling: Predicting the next word in a sentence, like when your phone suggests the next word while typing.

- Sentiment Analysis: Analyzing a customer’s review to detect whether it’s positive, negative, or neutral.

- Text Translation: Translating a paragraph from English to French while keeping the original meaning intact.

- Time Series Prediction:

- Stock Market Forecasting: Analyzing past stock prices to predict future market trends.

- Weather Forecasting: Understanding previous weather patterns to predict upcoming conditions.

- IoT Monitoring: Detecting unusual patterns in sensor data to predict equipment failure.

Example in NLP: Predicting the Next Word

Imagine you’re training an RNN to predict the next word in a sentence:

- Input: “I am going to the…”

- The RNN processes “I,” updates its hidden state, then moves to “am,” and so on.

- By the time it processes “the,” it has enough context to predict a reasonable next word, such as “store,” “beach,” or “market.”

Because the RNN remembers earlier words, its prediction aligns with the sentence’s overall meaning.

Generative Adversarial Networks (GANs)

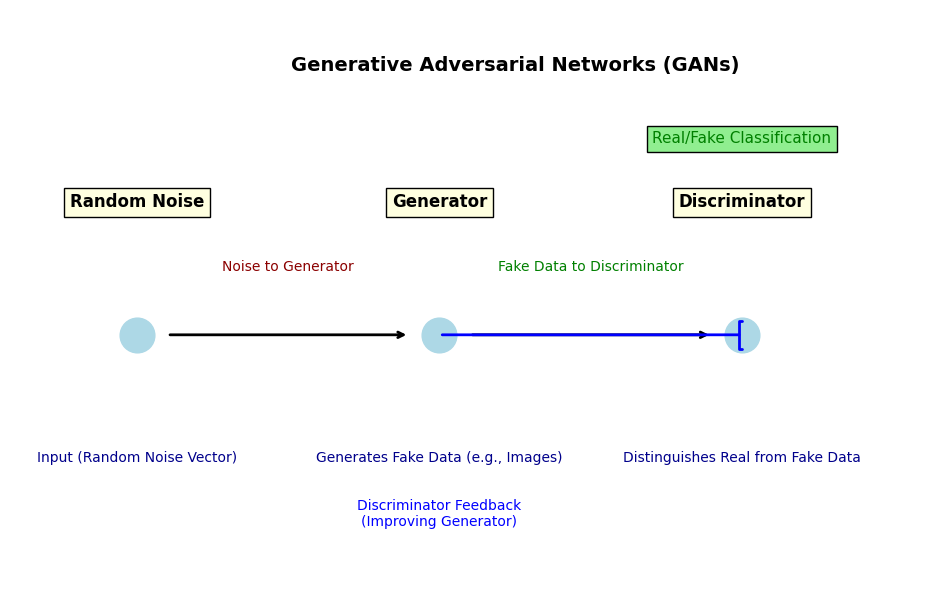

Generative Adversarial Networks (GANs) are a type of neural network that helps computers create new data that looks real. They are made up of two main parts: the Generator and the Discriminator. These two work like rivals, constantly trying to outsmart each other.

How GANs Work: Generator vs Discriminator

- Generator:

- The Generator makes fake data, such as images or sounds.

- Its goal is to create data so realistic that the Discriminator can’t tell it’s fake.

- Discriminator:

- The Discriminator checks whether the data is real or fake.

- It gets better at spotting fake data as it learns from the Generator’s attempts.

Both parts keep improving through this competition. The Generator learns to make better fakes, and the Discriminator becomes better at identifying them. This back-and-forth process helps GANs produce impressive results.
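The competition can be written as two loss functions, following the formulation in the original GAN paper: the Discriminator minimizes binary cross-entropy on real and generated samples, and the Generator here uses the common “non-saturating” loss, −log D(G(z)). This minimal sketch works only on the Discriminator’s output probabilities; the networks that produce them are left out, and the example probabilities are made up.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the Discriminator.

    d_real: D's probabilities that real samples are real (it wants these near 1)
    d_fake: D's probabilities that generated samples are real (it wants these near 0)
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating Generator loss: it wants D to call its fakes real (D(G(z)) near 1)."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Made-up discriminator outputs for two situations.
confused = ([0.5, 0.5], [0.5, 0.5])      # D cannot tell real from fake
confident = ([0.95, 0.9], [0.05, 0.1])   # D easily spots the fakes

for name, (d_real, d_fake) in [("confused", confused), ("confident", confident)]:
    print(name,
          "D loss:", round(discriminator_loss(d_real, d_fake), 3),
          "G loss:", round(generator_loss(d_fake), 3))
```

When the Discriminator is confident, its loss is low and the Generator’s loss is high, so the Generator’s gradient pushes it to produce more convincing samples; the two losses pull in opposite directions, which is the rivalry described above.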

Everyday Uses of GANs

GANs are used in many areas, including:

- Creating Images:

- GANs can make realistic-looking pictures from scratch, often used for design, advertisements, or video games.

- Deepfake Videos:

- GANs power deepfake technology, where someone’s face is swapped into a video.

- While there are concerns about misuse, this technology is also useful in film editing and dubbing.

- Art and Creativity:

- Artists use GANs to create AI-generated artwork.

- Tools like Artbreeder let users make unique images by adjusting different settings.

Example: GANs for Handwritten Number Generation

Let’s understand how GANs might work when creating images of numbers:

- Starting Point:

- The Generator creates random, messy images that look nothing like actual numbers.

- Training Process:

- The Discriminator compares these images with real handwritten numbers from a dataset.

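- Its feedback tells the Generator which changes would make the images look more real; in practice, this feedback is the gradient of the Discriminator’s output.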

- The Generator adjusts and tries again, improving each time.

- Final Result:

- After training, the Generator produces images that look just like real handwritten numbers, even though they were created by a computer.

GANs have changed how AI creates data. They make realistic images, videos, and sounds that benefit industries from art to technology. Through the teamwork of the Generator and Discriminator, GANs push AI creativity to new levels.

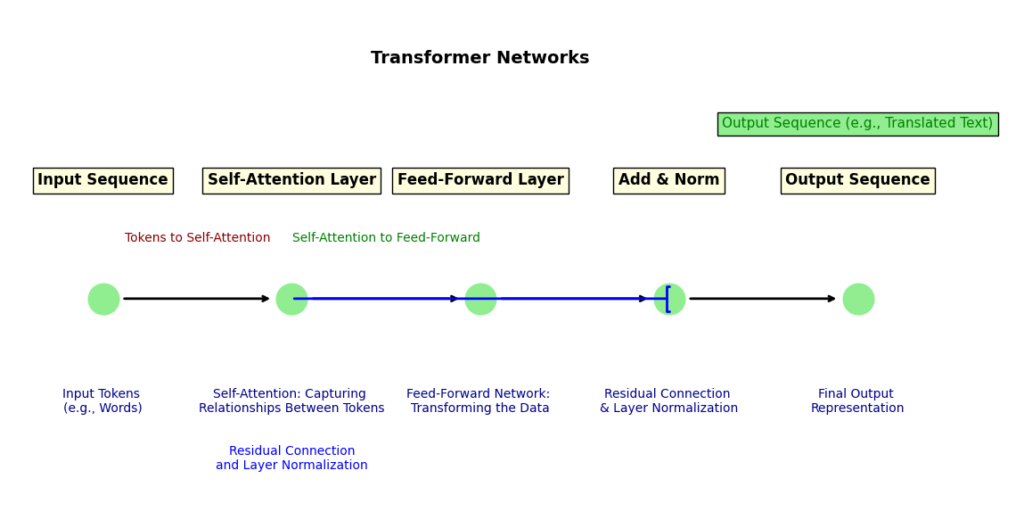

Transformer Networks

Transformer Networks are special types of neural networks that understand and process text better than older models. They have become important for tasks in Natural Language Processing (NLP) like language translation, text generation, and question answering.

Why Transformers Matter for NLP

Older sequence models, like RNNs, read text one word at a time. This is slow to train because the steps cannot run in parallel, and it makes it hard to keep track of connections between words that are far apart in long sentences. Transformers look at all the words at once and model how every word relates to every other word. This makes them faster to train and better at capturing long-range context.

Here are common tasks where transformers are used:

- Language Translation: Converting text from one language to another (like translating English to French).

- Text Generation: Creating meaningful text based on a prompt (like what AI assistants do).

- Summarization: Turning a long article into a shorter version while keeping the key points.

- Sentiment Analysis: Detecting the tone of a text, such as whether a review is positive or negative.

Key Components of Transformers

1. Attention Mechanism:

Transformers use attention to decide how much each word should focus on every other word when building its representation.

- Example: In the sentence, “The teacher who wore a blue dress taught math,” attention helps the model link “teacher” with “taught math” and give less weight to extra details like “blue dress.”

- This makes it easier to understand the main idea.

2. Self-Attention:

Each word in a sentence pays attention to every other word in the same sentence.

- Example: In “John gave Mary a gift, and she smiled,” the word “she” refers to Mary.

The self-attention feature helps the model connect “she” to the right person without confusion.
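Both ideas rest on scaled dot-product attention. In this minimal sketch each token is represented by a small vector; each token’s dot-product scores against all tokens are divided by √d and passed through a softmax, and the output is a weighted average of the token vectors. As a simplification, it skips the learned query, key, and value projections (W_Q, W_K, W_V) that a real transformer applies, and the 2-D token vectors are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(X):
    """Scaled dot-product self-attention over a list of token vectors X.

    Simplification: a real transformer first multiplies X by learned matrices
    W_Q, W_K and W_V to get queries, keys and values; here all three are X itself.
    Returns (outputs, weights), where weights[i][j] is how much token i attends to token j.
    """
    d = len(X[0])
    outputs, weights = [], []
    for query in X:
        # Compare this token with every token (including itself), scaled by sqrt(d).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in X]
        w = softmax(scores)
        weights.append(w)
        # The new representation is a weighted average of all token vectors.
        outputs.append([sum(w[j] * X[j][c] for j in range(len(X))) for c in range(d)])
    return outputs, weights

# Hypothetical 2-D vectors for three tokens; tokens 0 and 2 point in similar directions.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.1]]
outputs, weights = self_attention(tokens)
for i, row in enumerate(weights):
    print(f"token {i} attends:", [round(w, 2) for w in row])
```

Token 0 puts more weight on token 2 than on token 1 because their vectors are more similar; in a trained model, the learned projections decide what “similar” means (for example, linking “she” to “Mary”).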

How Transformers Work in Real Applications

BERT (Bidirectional Encoder Representations from Transformers):

- Looks at the context on both sides of each word (left and right) at the same time, rather than reading in only one direction.

- Example: It helps search engines understand complex search queries like “cheapest flight with baggage included.”

GPT (Generative Pre-trained Transformer):

- Specializes in creating meaningful text based on what you type.

- Example: When you type “write a thank-you note,” GPT generates a complete and thoughtful response.
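One concrete difference between the two models is which words each position may attend to. BERT-style encoders let every token see the whole sentence, while GPT-style decoders use a causal mask so each token sees only itself and earlier tokens, which is what lets them generate text left to right. The sketch below builds both masks and applies them to equal raw scores, so the mask is the only thing changing the weights; the function names are illustrative.

```python
import math

def attention_mask(n, causal):
    """allowed[i][j] is True if position i may attend to position j.

    causal=False: every token sees every token (BERT-style, bidirectional)
    causal=True:  token i sees only tokens 0..i (GPT-style, left to right)
    """
    return [[(j <= i) if causal else True for j in range(n)] for i in range(n)]

def masked_softmax(scores, allowed):
    """Softmax over the allowed positions; masked positions get weight 0."""
    m = max(s for s, ok in zip(scores, allowed) if ok)
    exps = [math.exp(s - m) if ok else 0.0 for s, ok in zip(scores, allowed)]
    total = sum(exps)
    return [e / total for e in exps]

# Equal raw scores for a 3-token sequence, so only the mask changes the weights.
scores = [0.0, 0.0, 0.0]
for causal in (False, True):
    label = "GPT-style causal" if causal else "BERT-style bidirectional"
    print(label)
    for i, allowed in enumerate(attention_mask(3, causal)):
        print(f"  token {i}:", [round(w, 2) for w in masked_softmax(scores, allowed)])
```

With the causal mask, the first token can only attend to itself, the second splits its attention over two tokens, and so on; nothing “leaks” from future words.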

Transformers have changed the way AI understands text. By using attention and self-attention mechanisms, they make reading, understanding, and generating text more accurate and meaningful.

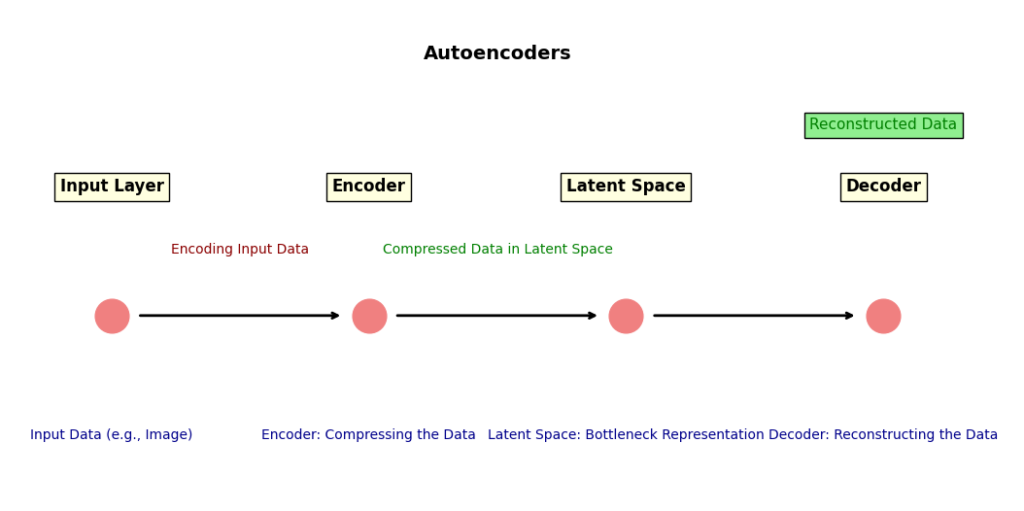

Autoencoders

Autoencoders are neural networks trained to reproduce their own input. They do this by squeezing the information into a smaller representation and then trying to rebuild the original data from it. Because the compressed form cannot hold everything, the network is forced to keep only the most important features. This helps in tasks like compressing data, removing noise, and finding unusual patterns.

How Autoencoders Work

An autoencoder has two main parts:

- Encoder: This part compresses the input data into a smaller, simpler representation.

- Example: Reducing the number of features in an image while keeping its important details.

- Decoder: This part takes the compressed data and tries to reconstruct the original input as accurately as possible.

- Example: Creating a clean version of a noisy image.

Together, the encoder and decoder form a system that learns to recreate data from fewer pieces.

Unsupervised Learning Capabilities

Autoencoders do not require labeled data. They learn patterns on their own by analyzing the input data. This makes them great for tasks where you don’t have predefined labels, such as:

- Feature Extraction: Finding useful patterns in raw data.

- Dimensionality Reduction: Simplifying datasets with many features, similar to Principal Component Analysis (PCA).

- Data Reconstruction: Rebuilding images or other data from limited information.
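As a small, assumed example of this unsupervised learning, the sketch below trains a linear autoencoder with a one-number bottleneck on unlabeled 2-D points that all lie on the line x2 = 2·x1. It uses full-batch gradient descent on the mean squared reconstruction error, with a fixed starting point and learning rate chosen for illustration. With a linear encoder and decoder like this, the learned code spans the same direction PCA would find; real autoencoders add non-linear layers.

```python
def train_linear_autoencoder(data, lr=0.01, epochs=2000):
    """Train a tiny linear autoencoder: 2 inputs -> 1-number code -> 2 outputs.

    Encoder: z = e[0]*x1 + e[1]*x2      Decoder: x_hat = (d[0]*z, d[1]*z)
    Loss: mean squared reconstruction error, minimized by full-batch gradient descent.
    Only the inputs are used; there are no labels.
    """
    e = [0.1, 0.3]  # arbitrary small starting weights (an assumption)
    d = [0.2, 0.1]
    n = len(data)
    for _ in range(epochs):
        grad_e, grad_d = [0.0, 0.0], [0.0, 0.0]
        for x in data:
            z = e[0] * x[0] + e[1] * x[1]             # encode
            r = [x[k] - d[k] * z for k in range(2)]   # reconstruction error
            back = d[0] * r[0] + d[1] * r[1]          # error passed back to the code
            for k in range(2):
                grad_d[k] += -2.0 * r[k] * z / n
                grad_e[k] += -2.0 * back * x[k] / n
        for k in range(2):
            d[k] -= lr * grad_d[k]
            e[k] -= lr * grad_e[k]
    return e, d

def reconstruction_mse(data, e, d):
    """Mean squared error between each point and its reconstruction."""
    total = 0.0
    for x in data:
        z = e[0] * x[0] + e[1] * x[1]
        total += (x[0] - d[0] * z) ** 2 + (x[1] - d[1] * z) ** 2
    return total / len(data)

# Unlabeled 2-D points that all lie on the line x2 = 2 * x1.
data = [(t, 2.0 * t) for t in (-2.0, -1.0, -0.5, 0.5, 1.0, 2.0)]

print("before training:", round(reconstruction_mse(data, [0.1, 0.3], [0.2, 0.1]), 3))
e, d = train_linear_autoencoder(data)
print("after training: ", reconstruction_mse(data, e, d))
```

Two numbers per point are compressed into one, yet the reconstruction becomes nearly exact, because the network has discovered on its own that the data really varies along a single direction.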

Key Applications of Autoencoders

- Data Compression: Compressing large files (like images) while keeping the most important details.

- Example: Saving memory space for storing large datasets.

- Denoising Data: Removing noise from images, audio, or other signals.

- Example: Cleaning up blurry or noisy pictures in photography apps.

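- Anomaly Detection: Spotting unusual patterns in data. An autoencoder trained only on normal data reconstructs normal inputs well but struggles with unusual ones, so a high reconstruction error signals a possible anomaly. This is useful in industries like finance and cybersecurity.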

- Example: Detecting fraudulent credit card transactions or unusual network activity.
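The anomaly-detection idea can be sketched with reconstruction error. The weights below are hypothetical: they stand in for an autoencoder trained on “normal” points lying on the line x2 = 2·x1, and the threshold of 0.1 is an assumption (in practice it is usually set from the errors observed on normal data). Points that fit the learned pattern reconstruct almost perfectly, while a point off the line cannot be rebuilt from its one-number code.

```python
import math

# Hypothetical trained weights: they stand in for an autoencoder trained on "normal"
# points that lie on the line x2 = 2 * x1 (unit direction (1, 2) / sqrt(5)).
U = (1.0 / math.sqrt(5.0), 2.0 / math.sqrt(5.0))
THRESHOLD = 0.1  # assumption; in practice chosen from errors on normal data

def reconstruction_error(x):
    """Squared distance between a point and its reconstruction."""
    z = U[0] * x[0] + U[1] * x[1]   # encode: 2 numbers -> 1
    x_hat = (U[0] * z, U[1] * z)    # decode: 1 number -> 2
    return (x[0] - x_hat[0]) ** 2 + (x[1] - x_hat[1]) ** 2

for point in [(1.0, 2.0), (-0.5, -1.0), (2.0, -1.0)]:
    error = reconstruction_error(point)
    label = "anomaly" if error > THRESHOLD else "normal"
    print(point, f"error={error:.3f}", label)
```

The same pattern scales up: train on normal transactions or network traffic, then flag inputs whose reconstruction error is unusually high.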

Autoencoders have become an important tool for anomaly detection, data compression, and cleaning noisy information. Their ability to learn without needing labeled data makes them versatile in many AI applications.

Conclusion: The Future of Neural Network Architectures

The field of neural network architectures is evolving rapidly, with exciting new trends and innovations shaping the future. As technology progresses, neural networks are becoming more powerful, efficient, and adaptable. Here are some of the emerging trends to keep an eye on:

Emerging Trends and Innovations in Neural Network Research

- Transformer Evolution:

Transformers, already widely used in natural language processing (NLP), are expanding into other fields like computer vision and speech recognition. Because they process all positions of an input in parallel and scale well with more data and compute, they are central to many recent AI advances.

- Neural Architecture Search (NAS):

Researchers are exploring automated methods to design neural networks. NAS searches over possible architectures to find ones that perform well on a specific task without manual tweaking. This could lead to more efficient and specialized networks.

- Spiking Neural Networks (SNNs):

Inspired by the human brain, SNNs communicate through discrete spikes over time instead of continuous values. This more brain-like style of processing could make them very energy-efficient on specialized (neuromorphic) hardware, and they might help create AI that mimics human learning and cognition more closely.

- Energy-Efficient Networks:

With the rise in the use of AI, the need for energy-efficient networks is growing. Researchers are working to reduce the computational cost of training and running deep neural networks, making AI more accessible and sustainable.

- Integration of Multiple Modalities:

AI models are beginning to combine multiple types of data (like images, text, and speech). Future neural networks will likely integrate these different data types for better performance across various tasks.

Encouraging Experimentation and Learning

As neural networks continue to advance, it’s important to experiment and learn from different types of architectures. AI is a field that thrives on innovation, and even small changes to existing models can lead to significant improvements. Whether you’re a researcher, developer, or hobbyist, exploring new ideas and techniques will help move the field forward.

Unlock the Power of Neural Networks: A Comprehensive Guide to Deep Learning with Python

To explore neural networks and deep learning in more detail, I highly recommend reading my book: “Neural Networks and Deep Learning with Python: A Practical Approach” by Emmimal P. Alexander.

This comprehensive guide takes you on a journey through both foundational and advanced neural network concepts. It’s designed to help beginners, students, and research scholars understand how neural networks work and their impact across industries. Here’s what you can expect to find inside:

What’s Inside the Book:

- Essentials of Neural Networks:

From basic perceptrons to multi-layer networks, the book breaks down the key building blocks of how neural networks learn and operate.

- Deep Learning Architectures:

Explore deep learning models like CNNs, RNNs, and GANs. See how they shape innovations in areas like computer vision, NLP, and generative models.

- Hands-On Python Code & Exercises:

The book includes practical Python code examples and exercises, designed for active learning. You’ll gain the skills to create your own models and implement the techniques discussed.

- Advanced Topics & Mathematical Foundations:

Learn complex concepts such as backpropagation, gradient descent, and activation functions in a way that’s approachable for everyone, with intuitive diagrams and step-by-step formulas.

- Visual Aids:

Diagrams help you understand difficult concepts and make them easier to grasp, ensuring you stay engaged and follow along.

Topics Covered:

- Reinforcement Learning:

The book explores cutting-edge AI techniques, including reinforcement learning, so you can build intelligent agents that learn and make decisions based on their environment.

- Optimization Techniques:

Learn about model optimization, including hyperparameter tuning, and advanced algorithms like Adam and RMSprop, which enhance your models’ performance.

- Code Examples for Libraries:

The book uses popular libraries such as TensorFlow, PyTorch, and Keras, enabling you to experiment with real-world neural network implementations.

Is This Book For You?

Yes, it is! Whether you are a beginner or someone looking to explore advanced topics, this guide will walk you through the complexities of neural networks with clear, understandable explanations and real-world examples.

Get Your Copy:

- Kindle Edition: Click Here

- Hardcover: Click Here

- Paperback: Click Here

Whether you’re building deep learning models for image classification, sentiment analysis, or exploring new AI innovations, this book will guide you step by step toward mastering neural networks.

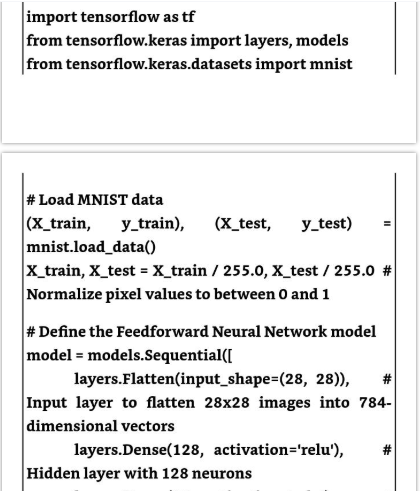

Sneak Peek Into the Book: Screenshots of Key Concepts

Here’s a glimpse of some of the exciting content from “Neural Networks and Deep Learning with Python: A Practical Approach”. These screenshots highlight key concepts, Python code examples, and insightful diagrams to help you visualize the material covered throughout the book.

Understanding the First Neuron Model: A Glimpse into Neural Networks

Screenshot of the First Neuron Model

Implementing a Feedforward Neural Network with MNIST Dataset

Screenshot of Feedforward Neural Network Code Using MNIST Dataset
