How to Get Started with LightRAG: The Simple, Fast Alternative to GraphRAG

Introduction to LightRAG

Building tools for retrieval-augmented generation (RAG) can be tricky, especially when the process is too complex or resource-heavy. Many people rely on GraphRAG, which is powerful but difficult to manage. Its complexity and high demands often discourage developers from using it. That’s why LightRAG was created. It is a faster, simpler, and more efficient alternative. LightRAG focuses on making RAG workflows simple while still delivering excellent results. You don’t need to deal with complicated setups or worry about excessive resource usage.

In this blog post, you’ll learn how to get started with LightRAG. We’ll explain its architecture, compare it with GraphRAG, and provide easy-to-follow coding examples. Whether you’re building a chatbot, a recommendation engine, or a knowledge retrieval system, this guide will help you use LightRAG effectively.

What is LightRAG?

LightRAG is a simpler and faster framework for tasks related to retrieval-augmented generation (RAG). RAG combines information retrieval with natural language generation, which helps systems generate accurate responses by pulling in relevant data.

Unlike RAG systems such as GraphRAG, which use complex graphs to organize and connect data, LightRAG relies on simpler methods. It uses lightweight techniques to index and retrieve information, which makes it quicker to run, easier to set up, and able to scale to large amounts of data.

In short, LightRAG is a more efficient and accessible way to build RAG-based systems without the complexity or heavy resource requirements of alternatives.

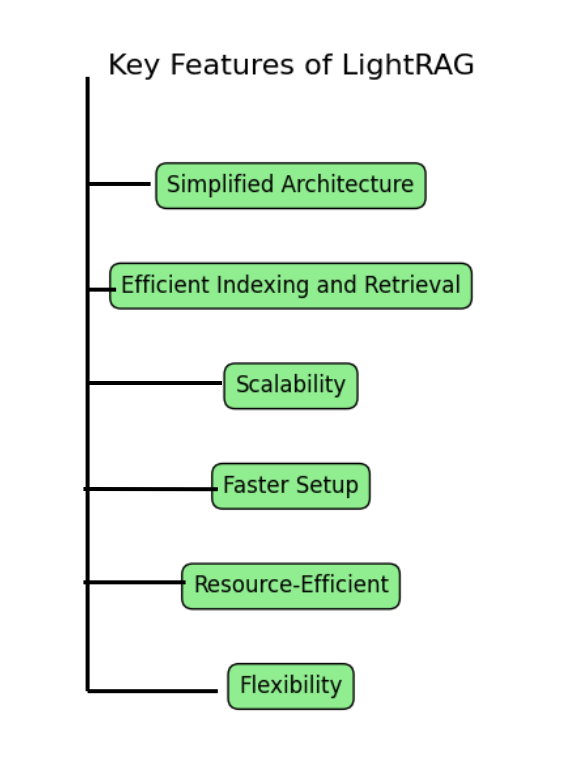

Key Features of LightRAG:

Here are the key features of LightRAG:

- Simplified Architecture: LightRAG uses a simple design, making it easier to implement than more complex RAG frameworks like GraphRAG.

- Efficient Indexing and Retrieval: It focuses on lightweight indexing and retrieval techniques. This helps speed up the process of finding and organizing relevant data.

- Scalability: LightRAG can handle large datasets effectively, allowing it to scale smoothly as your project grows.

- Faster Setup: With a less complex system, you can get started quickly without needing extensive configuration or understanding of complicated structures.

- Resource-Efficient: It’s optimized for performance, meaning it requires fewer resources, making it accessible for a wider range of projects and users.

- Flexibility: It’s adaptable for different use cases, from chatbots to recommendation systems, while still maintaining great performance.

These features make LightRAG an attractive option for developers looking for an efficient and easy-to-use tool for RAG tasks.

Why Choose LightRAG Over GraphRAG?

While GraphRAG is a powerful tool, it comes with some significant challenges:

- Complexity: The graph-based structures used in GraphRAG require advanced knowledge and careful tuning, making it harder for many developers to get started.

- Resource-Intensive: GraphRAG demands a lot of memory and computing power, which can be a barrier, especially for projects with limited resources.

- Slow Retrieval: Traversing large graphs to retrieve data can be slow, which becomes a problem when working with big datasets.

LightRAG addresses these issues by:

- Simplifying the Architecture: LightRAG offers a much simpler design, making it easier to understand and implement.

- Reducing Resource Requirements: It uses lightweight indexing and retrieval techniques, which require fewer resources and work well even on machines with limited capacity.

- Delivering Faster Retrieval Times: LightRAG is optimized for speed, allowing for quicker data retrieval, even with large datasets.

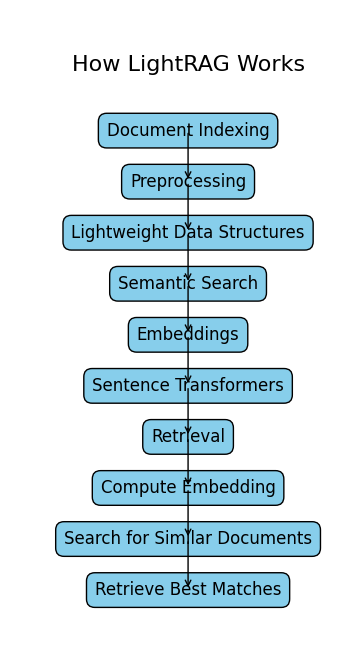

How LightRAG Works

LightRAG is built to be simple, fast, and efficient. Let’s break down how it works, step by step:

1. Document Indexing

Before you can use LightRAG for search, you need to index your documents. Indexing is like organizing your files so that they are easy to search later.

- Preprocessing: First, the documents are prepared for indexing. This step might involve cleaning up the data (removing unnecessary characters or formatting) so that the system can process it better.

- Lightweight Data Structures: Instead of using heavy, complex structures, LightRAG uses efficient tools like FAISS (Facebook AI Similarity Search) or Annoy (Approximate Nearest Neighbors Oh Yeah). These tools help create a compact index that allows the system to quickly find and compare documents based on their content.
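To make the preprocessing step concrete, here is a minimal sketch using only the Python standard library. The `preprocess` function and the sample strings are illustrative assumptions, not part of LightRAG; the exact cleanup you need depends on your data.

```python
import re
import unicodedata


def preprocess(text: str) -> str:
    """Normalize Unicode, drop control characters, and collapse whitespace."""
    # NFKC folds compatibility characters (e.g. non-breaking spaces) into plain forms.
    text = unicodedata.normalize("NFKC", text)
    # Keep printable characters and whitespace; drop control characters such as \x00.
    text = "".join(ch for ch in text if ch.isprintable() or ch.isspace())
    # Collapse runs of spaces, tabs, and newlines into single spaces.
    return re.sub(r"\s+", " ", text).strip()


# Hypothetical raw documents with typical extraction noise.
raw_docs = [
    "  Artificial\tIntelligence\n is transforming   industries. ",
    "Data\x00 science\u00a0basics",
]
cleaned = [preprocess(doc) for doc in raw_docs]

for doc in cleaned:
    print(repr(doc))
```

Cleaning the text before it is embedded keeps stray formatting from influencing which documents look similar.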

2. Semantic Search

Once the documents are indexed, LightRAG can search through them intelligently using something called semantic search.

- Embeddings: To make sense of the documents, LightRAG transforms the text into embeddings. An embedding is just a way to represent words or entire sentences as vectors (numbers arranged in a certain way). These vectors capture the meaning of the words in a mathematical form.

- Sentence Transformers: To create these vectors, LightRAG typically uses a tool like Sentence Transformers, which turns sentences or documents into embeddings. These embeddings allow LightRAG to understand the context of the text, rather than just looking at exact matches of words.
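To see how vectors can capture "closeness in meaning", here is a small sketch of cosine similarity, a measure commonly used to compare embeddings. The three-dimensional vectors are made up for illustration; a real embedding model such as one from Sentence Transformers produces vectors with hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # Convention for empty vectors: treat as unrelated.
    return dot / (norm_a * norm_b)


# Hypothetical embeddings, hand-written for this example only.
cat = [0.9, 0.1, 0.0]
kitten = [0.8, 0.2, 0.1]
invoice = [0.0, 0.1, 0.9]

related = cosine_similarity(cat, kitten)
unrelated = cosine_similarity(cat, invoice)

print(f"cat vs kitten:  {related:.3f}")
print(f"cat vs invoice: {unrelated:.3f}")
```

Vectors that point in nearly the same direction score close to 1, while unrelated ones score close to 0.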

3. Retrieval

When you give LightRAG a query (a question or search request), it works like this:

- Compute the Embedding: LightRAG first converts your query into an embedding (just like it did with the documents).

- Search for Similar Documents: Then, it compares the query’s embedding with the embeddings of the indexed documents to find the most relevant or similar documents.

- Retrieve the Best Matches: LightRAG fetches the documents that are closest in meaning to your query, even if the exact words don’t match. This makes the search semantic — it looks for meaning, not just keywords.
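The three retrieval steps above can be sketched in plain Python. This is not LightRAG's implementation: the `embed` function below uses simple word counts as a stand-in for a real embedding model, so it only matches shared words, whereas a real model would also place paraphrases close together. All function names are assumptions made for this example.

```python
import heapq
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, indexed, top_k=2):
    """Embed the query, score every indexed document, and keep the top_k."""
    query_vec = embed(query)
    scored = ((cosine(query_vec, vec), doc) for doc, vec in indexed)
    best = heapq.nlargest(top_k, scored, key=lambda pair: pair[0])
    return [doc for _, doc in best]


documents = [
    {"title": "Introduction to AI", "content": "Artificial Intelligence is transforming industries by enabling machines to perform tasks that typically require human intelligence."},
    {"title": "Data Science Basics", "content": "Data science involves analyzing and interpreting complex data to extract meaningful insights and support decision-making."},
    {"title": "Machine Learning 101", "content": "Machine learning is a subset of AI that focuses on training algorithms to learn patterns from data and make predictions or decisions without being explicitly programmed."},
]

# Index once: pair each document with its precomputed vector.
indexed = [(doc, embed(doc["content"])) for doc in documents]

titles = [doc["title"] for doc in retrieve("What is machine learning?", indexed)]
print(titles)
```

Swapping `embed` for a real embedding model keeps the rest of the flow unchanged, which is why the same three steps apply regardless of the model you choose.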

In Summary:

- Indexing makes documents easy to search.

- Semantic search uses embeddings to understand the meaning of text.

- Retrieval finds the most relevant documents by comparing the query’s meaning to the indexed documents’ meanings.

By using this process, LightRAG can quickly and accurately retrieve information from large sets of documents based on the context and meaning of your queries.


How to Get Started with LightRAG

Let’s walk through the steps to set up and use LightRAG in your project. We’ll cover installation, data preparation, indexing, retrieval, and integration with language models.

Setting Up Your Environment for LightRAG

Before you start working with LightRAG, make sure you have all the necessary libraries installed. Here’s how you can get everything set up:

Step 1: Install LightRAG

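To install LightRAG, use pip. Package names on PyPI can differ from project names, so confirm the name below against the official repository's README before installing:

    pip install lightrag

Step 2: Prepare Your Data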

LightRAG works with text-based datasets to retrieve and generate information. For this example, let’s assume you have a collection of documents stored in a JSON file, where each document contains a title and content field.

Here’s how you can prepare your data:

Example Data Setup:

    import json

    # Sample data: A list of documents
    data = [
        {"title": "Introduction to AI", "content": "Artificial Intelligence is transforming industries by enabling machines to perform tasks that typically require human intelligence."},
        {"title": "Data Science Basics", "content": "Data science involves analyzing and interpreting complex data to extract meaningful insights and support decision-making."},
        {"title": "Machine Learning 101", "content": "Machine learning is a subset of AI that focuses on training algorithms to learn patterns from data and make predictions or decisions without being explicitly programmed."},
    ]

    # Save the sample data to a JSON file
    with open("documents.json", "w") as f:
        json.dump(data, f)

Explanation:

- Data Structure: The data is stored as a list of dictionaries, where each dictionary represents a document with a title and content.

- Saving Data: The json.dump() function writes the data into a file named documents.json. You can replace this file with your own text data.

Now, you have a JSON file containing your dataset, ready to be processed and used with LightRAG!
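Before indexing your own data, it can help to check that every entry has the fields the later steps rely on. The sketch below is one way to do that; `load_documents` and `REQUIRED_FIELDS` are hypothetical names introduced for this example, and the file is written to a temporary directory so the snippet runs on its own.

```python
import json
import tempfile
from pathlib import Path

REQUIRED_FIELDS = ("title", "content")


def load_documents(path):
    """Load a JSON list of documents and check each has non-empty text fields."""
    with open(path, encoding="utf-8") as f:
        docs = json.load(f)
    if not isinstance(docs, list):
        raise ValueError("expected a JSON list of documents")
    for i, doc in enumerate(docs):
        for field in REQUIRED_FIELDS:
            value = doc.get(field) if isinstance(doc, dict) else None
            if not isinstance(value, str) or not value.strip():
                raise ValueError(f"document {i} has no usable '{field}' field")
    return docs


# Write a small sample file to a temporary directory, then load it back.
with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "documents.json"
    sample = [{"title": "Introduction to AI", "content": "Artificial Intelligence is transforming industries."}]
    path.write_text(json.dumps(sample), encoding="utf-8")
    documents = load_documents(path)

print(f"Loaded {len(documents)} document(s)")
```

Failing early on a malformed entry is usually easier to debug than a confusing error later during indexing.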

Step 3: Index Your Data

LightRAG uses an efficient indexing mechanism to enable fast data retrieval. Here’s how you can index your dataset:

Code to Index Your Data:

    from lightrag import LightRAG

    # Initialize LightRAG
    rag = LightRAG()

    # Load and index documents from the JSON file
    rag.index_documents("documents.json")

    print("Documents indexed successfully!")

Explanation:

- Initialize LightRAG: We create an instance of the LightRAG class to interact with the framework.

- Index Documents: The index_documents() method loads the documents from the documents.json file and indexes them for fast retrieval.

- Confirmation: A print statement confirms that the documents have been indexed successfully.

Now, your data is indexed and ready for efficient retrieval, making it easier to search through documents later in the process!
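To make concrete what an index does, here is a minimal sketch of a flat (brute-force) vector index in pure Python. It is conceptually similar to an exact flat inner-product index in FAISS, without any of its optimizations, and it is not how LightRAG is implemented internally. The `FlatIndex` class, the document ids, and the vectors are all assumptions made for illustration.

```python
import math


class FlatIndex:
    """Stores unit-length vectors so that search is a plain dot product."""

    def __init__(self):
        self._ids = []
        self._vectors = []

    def add(self, doc_id, vector):
        norm = math.sqrt(sum(x * x for x in vector))
        if norm == 0:
            raise ValueError("cannot index a zero vector")
        self._ids.append(doc_id)
        # Normalize once at indexing time instead of on every query.
        self._vectors.append([x / norm for x in vector])

    def search(self, query, top_k=1):
        norm = math.sqrt(sum(x * x for x in query))
        if norm == 0:
            return []
        q = [x / norm for x in query]
        scored = [
            (sum(a * b for a, b in zip(q, vec)), doc_id)
            for doc_id, vec in zip(self._ids, self._vectors)
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [(doc_id, score) for score, doc_id in scored[:top_k]]


index = FlatIndex()
# Hypothetical 3-dimensional embeddings for three documents.
index.add("ai-intro", [0.9, 0.1, 0.0])
index.add("ds-basics", [0.1, 0.9, 0.1])
index.add("ml-101", [0.7, 0.3, 0.6])

hits = index.search([0.6, 0.2, 0.7], top_k=2)
for doc_id, score in hits:
    print(f"{doc_id}: {score:.3f}")
```

The key idea is that the expensive work (embedding and normalizing documents) happens once, so each query only pays for the comparison step. Approximate indexes trade a little accuracy to make that comparison faster on large collections.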

Step 4: Perform Retrieval

Once your data is indexed, you can retrieve relevant documents based on a query. LightRAG uses semantic search to understand the context of the query and find the most relevant results.

Code to Perform Retrieval:

    # Query the indexed data
    query = "What is machine learning?"
    results = rag.retrieve(query, top_k=2)  # Retrieve top 2 results

    # Display results
    for result in results:
        print(f"Title: {result['title']}")
        print(f"Content: {result['content']}")
        print("-" * 50)

Explanation:

- Query: You define the query you want to search for. In this example, the query is “What is machine learning?”

- Retrieve Results: The rag.retrieve() method fetches the top 2 results (you can adjust top_k to retrieve more or fewer results).

- Display Results: The results are printed out, showing the title and content of the retrieved documents.

Output:

    Title: Machine Learning 101
    Content: Machine learning is a subset of AI that focuses on training algorithms to learn patterns from data and make predictions or decisions without being explicitly programmed.
    --------------------------------------------------
    Title: Introduction to AI
    Content: Artificial Intelligence is transforming industries by enabling machines to perform tasks that typically require human intelligence.
    --------------------------------------------------

With semantic search, LightRAG returns the most relevant documents based on the context of your query, making it an efficient tool for information retrieval!

Step 5: Integrate with a Language Model (Optional)

LightRAG can be combined with a language model like GPT to generate more sophisticated responses based on the retrieved documents. Here’s how you can integrate LightRAG with OpenAI’s GPT:

Code to Integrate with OpenAI GPT:

    from openai import OpenAI

    # Set up the OpenAI client (openai>=1.0); in real projects, prefer the OPENAI_API_KEY environment variable
    client = OpenAI(api_key="your-openai-api-key")

    # Generate a response using GPT
    def generate_response(query, context):
        prompt = f"Context: {context}\n\nQuestion: {query}\nAnswer:"
        # The legacy Completion endpoint and text-davinci-003 have been retired, so use chat completions
        response = client.chat.completions.create(
            model="gpt-4o-mini",  # You can choose a different model if needed
            messages=[{"role": "user", "content": prompt}],
            max_tokens=150,
        )
        return response.choices[0].message.content.strip()

    # Retrieve and generate a response
    query = "What is machine learning?"
    results = rag.retrieve(query, top_k=1)
    context = results[0]['content']
    answer = generate_response(query, context)
    print(f"Answer: {answer}")

Explanation:

- Set Up OpenAI API: First, you need to set up your OpenAI API key.

- Generate a Response: The generate_response() function creates a prompt using the retrieved context and query, then sends the prompt to OpenAI’s GPT to generate a response.

- Retrieve Context: The rag.retrieve() method is used to retrieve the most relevant document (top 1 in this case).

- Generate and Print Answer: Finally, the answer is generated by GPT and printed.

Output:

Answer: Machine learning is a subset of AI that focuses on training algorithms to learn patterns from data and make predictions or decisions without being explicitly programmed.

This integration allows you to combine the power of LightRAG for information retrieval with GPT’s ability to generate coherent answers, creating a robust system for answering complex queries!
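If you retrieve more than one document, you need to decide how much context to send to the language model. The sketch below shows one simple approach: add documents in rank order until a character budget is reached. The `build_prompt` function and its `max_context_chars` parameter are hypothetical, and a production system would more likely budget by tokens than by characters.

```python
def build_prompt(query, documents, max_context_chars=500):
    """Combine retrieved documents into one prompt, respecting a size budget."""
    parts = []
    used = 0
    for doc in documents:
        snippet = f"[{doc['title']}] {doc['content']}"
        # Stop before the context grows past the budget.
        if used + len(snippet) > max_context_chars:
            break
        parts.append(snippet)
        used += len(snippet)
    context = "\n".join(parts)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Hypothetical retrieval results, ordered from most to least relevant.
retrieved = [
    {"title": "Machine Learning 101", "content": "Machine learning is a subset of AI."},
    {"title": "Introduction to AI", "content": "Artificial Intelligence is transforming industries."},
]

full_prompt = build_prompt("What is machine learning?", retrieved)
short_prompt = build_prompt("What is machine learning?", retrieved, max_context_chars=100)

print(full_prompt)
```

Because documents are added in rank order, a tight budget drops the least relevant context first.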

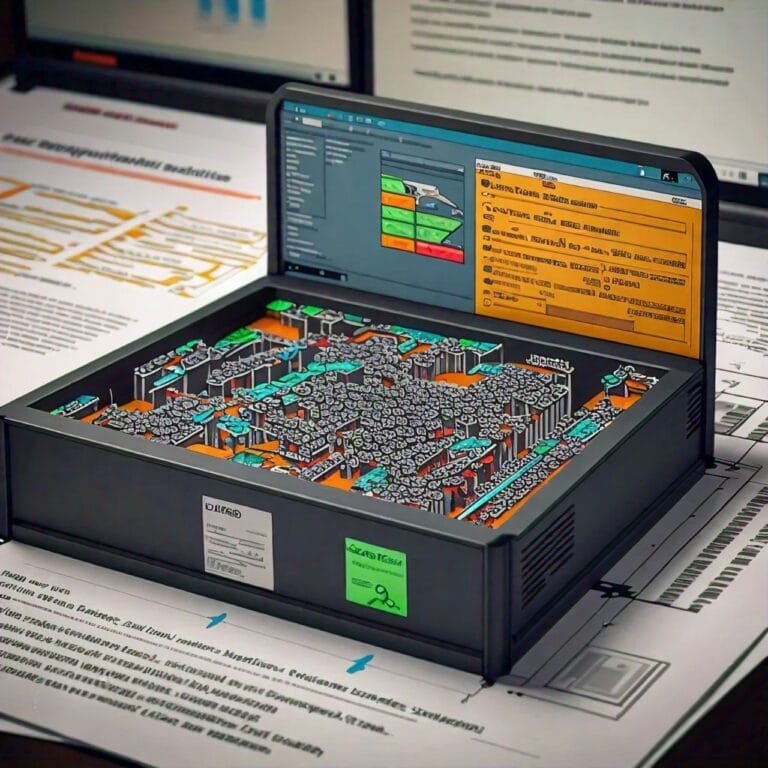

Comparison with GraphRAG

| Feature | LightRAG | GraphRAG |

|---|---|---|

| Complexity | Simple and lightweight | Complex graph-based structures |

| Speed | Faster retrieval times | Slower due to graph traversals |

| Scalability | Handles large datasets efficiently | Struggles with large datasets |

| Ease of Use | Intuitive API, easy to implement | Steeper learning curve |

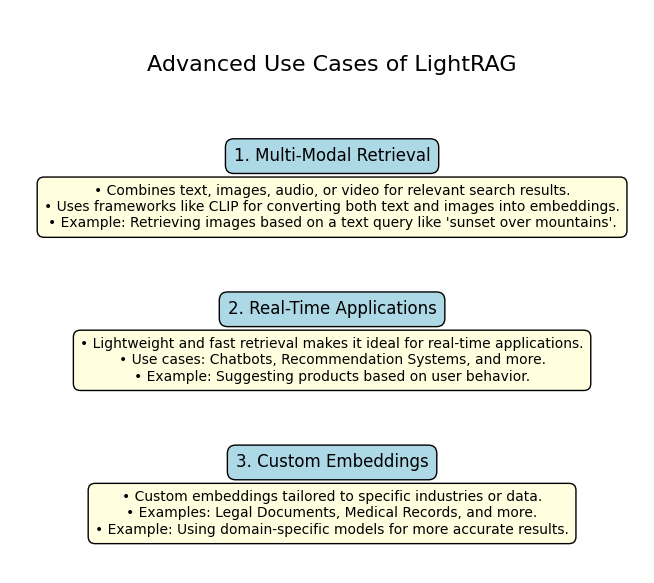

Advanced Use Cases of LightRAG

LightRAG is a flexible framework that can handle more than just basic text-based search. Let’s explore some advanced ways it can be used:

1. Multi-Modal Retrieval

- What is Multi-Modal Data? Multi-modal data combines different types of information, like text, images, audio, or videos. For example, searching for a product could involve both text (product description) and images (product photo).

- How Does LightRAG Handle It? LightRAG can work with frameworks like CLIP (Contrastive Language–Image Pretraining). CLIP is a powerful tool that converts both text and images into embeddings, allowing them to be compared directly.

- Example Use Case: If you have a dataset of images and their descriptions, LightRAG can retrieve relevant images based on a text query (e.g., “sunset over mountains”) or suggest text-based information for a given image.

2. Real-Time Applications

- Why LightRAG Is Suitable: LightRAG’s lightweight design and fast retrieval mechanism make it ideal for applications that need instant responses.

- Examples:

  - Chatbots: A chatbot powered by LightRAG can retrieve answers or relevant documents in real time to respond to user queries.

  - Recommendation Systems: Suggest personalized products, articles, or movies based on user preferences or behavior in milliseconds.
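For real-time use, one common way to cut latency further is to cache results for repeated queries. The sketch below uses Python's built-in `functools.lru_cache`; `slow_retrieve` is a hypothetical placeholder for whatever retrieval call your application makes, and the normalization step is an assumption about which queries you consider equivalent.

```python
from functools import lru_cache

call_count = 0  # Tracks how often the underlying retrieval actually runs.


def slow_retrieve(query: str) -> tuple:
    """Placeholder for a real retrieval call (hypothetical)."""
    global call_count
    call_count += 1
    return ("Machine Learning 101",)


@lru_cache(maxsize=1024)
def cached_retrieve(query: str) -> tuple:
    # Results are returned as tuples so cached values cannot be mutated by callers.
    return slow_retrieve(query)


def normalize(query: str) -> str:
    """Lowercase and collapse whitespace so trivially different queries share a cache entry."""
    return " ".join(query.lower().split())


first = cached_retrieve(normalize("What is machine learning?"))
second = cached_retrieve(normalize("  what is  MACHINE learning? "))

print(call_count)
print(cached_retrieve.cache_info())
```

Caching only helps when queries repeat, and cached results go stale if the index changes, so clear the cache (for example with `cached_retrieve.cache_clear()`) after re-indexing.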

3. Custom Embeddings

- What Are Custom Embeddings? Custom embeddings are vector representations customized for specific types of data or industries. For example:

  - Legal Documents: Using embeddings trained on legal text.

  - Medical Records: Using embeddings optimized for healthcare-related terms.

- Why Use Them? Pretrained embeddings are general-purpose and might not fully capture the nuances of specialized datasets. Custom embeddings allow LightRAG to deliver more accurate and relevant results.

- How to Use Custom Embeddings: Instead of standard Sentence Transformers, you can plug in a domain-specific model trained on your own dataset. LightRAG will then use these embeddings for indexing and retrieval.
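The idea of plugging in a different embedding model can be sketched by making the embedding function a parameter. Everything below is illustrative: the `Retriever` class is not LightRAG's API, and both embedding functions are toy word-count stand-ins. The "medical" one simply expands a couple of abbreviations to mimic what a domain-trained model would learn.

```python
import math
import re
from collections import Counter
from typing import Callable, Dict

Vector = Dict[str, int]

# Hypothetical domain knowledge: abbreviations mapped to their full terms.
MEDICAL_TERMS = {"mi": "heart attack", "htn": "high blood pressure"}


def general_embed(text: str) -> Vector:
    """General-purpose toy embedding: plain word counts."""
    return dict(Counter(re.findall(r"[a-z]+", text.lower())))


def medical_embed(text: str) -> Vector:
    """Domain-specific toy embedding: expands medical abbreviations first."""
    expanded = []
    for token in re.findall(r"[a-z]+", text.lower()):
        expanded.extend(MEDICAL_TERMS.get(token, token).split())
    return dict(Counter(expanded))


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(count * b.get(token, 0) for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class Retriever:
    """Indexes and searches with whichever embedding function it is given."""

    def __init__(self, embed: Callable[[str], Vector]):
        self.embed = embed
        self.items = []

    def index(self, docs):
        self.items = [(doc, self.embed(doc)) for doc in docs]

    def best(self, query):
        query_vec = self.embed(query)
        scored = [(doc, cosine(query_vec, vec)) for doc, vec in self.items]
        return max(scored, key=lambda pair: pair[1])


docs = ["Patient reports seasonal allergies.", "Patient history of MI and HTN."]

general = Retriever(general_embed)
general.index(docs)
medical = Retriever(medical_embed)
medical.index(docs)

general_doc, general_score = general.best("heart attack")
medical_doc, medical_score = medical.best("heart attack")

print(f"general: {general_score:.2f}")
print(f"medical: {medical_score:.2f} -> {medical_doc}")
```

With the general-purpose function, no document matches the query at all, while the domain-aware one finds the record that mentions "MI". The same embedding function must be used for both indexing and querying, so switching models means re-indexing your documents.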

These advanced use cases show how LightRAG can handle more than just simple text queries. It can scale to multi-modal applications, power real-time systems, and adapt to specialized domains, making it a versatile tool for modern AI-driven projects.

Conclusion

LightRAG stands out as a powerful yet simple solution for developers and data scientists aiming to simplify retrieval-augmented generation (RAG) workflows. Compared to GraphRAG, it offers a lighter, faster, and more scalable alternative without sacrificing functionality.

In this guide, you’ve explored:

- How to set up LightRAG in your environment.

- The steps to index your data efficiently.

- How to retrieve information using semantic search.

- How to integrate LightRAG with language models (e.g., GPT).

Whether you’re building a chatbot, a recommendation engine, or a knowledge retrieval system, LightRAG’s user-friendly design and high performance make it a valuable addition to your toolkit. With its focus on simplicity and speed, LightRAG empowers you to tackle real-world challenges effectively, delivering better results with less complexity.

FAQs

What is LightRAG, and how does it differ from GraphRAG?

LightRAG is a lightweight and efficient framework for retrieval-augmented generation (RAG) tasks. Unlike GraphRAG, which relies on complex graph-based structures, LightRAG uses lightweight indexing and semantic search techniques to deliver faster and simpler retrieval. It is designed to reduce computational overhead while maintaining high performance, making it ideal for developers who need a scalable and easy-to-implement solution.

Can LightRAG handle large datasets?

Yes, LightRAG is designed to handle large datasets efficiently. It uses optimized indexing mechanisms (e.g., FAISS or Annoy) and semantic search techniques to ensure fast retrieval times, even with millions of documents. Its lightweight architecture makes it more scalable than GraphRAG, which can struggle with large datasets due to its graph-based structure.

How do I integrate LightRAG with a language model?

LightRAG can be easily integrated with language models like GPT. After retrieving relevant documents using LightRAG, you can pass the retrieved context along with the user query to a language model (e.g., OpenAI’s GPT) to generate a response. This post includes a step-by-step example of how to do this using the OpenAI API.

Is LightRAG suitable for real-time applications?

Absolutely! LightRAG’s fast retrieval times and efficient indexing make it well-suited for real-time applications like chatbots, recommendation systems, and knowledge bases. Its lightweight design ensures low latency, making it a great choice for applications that require quick responses.

External Resources

1. Official GitHub Repository

- Description: The primary source for LightRAG’s codebase, including installation instructions, usage examples, and updates.

- Link: LightRAG on GitHub

2. Deployment and Usage Guide

- Description: A comprehensive tutorial that guides you through deploying and using LightRAG, complete with hands-on examples and best practices.

- Link: LightRAG Deployment and Usage Guide

3. LearnOpenCV Article

- Description: An in-depth article that discusses LightRAG’s architecture, its advantages over traditional RAG systems, and provides a code walkthrough.

- Link: LightRAG: Simple and Fast Alternative to GraphRAG
