How to Create an Invisible Cloak Using OpenCV

Introduction

Have you ever wanted to disappear like a wizard? What if I told you that you can make your own invisible cloak with no magic required, just some smart programming with OpenCV? In this blog post, I’ll show you how to create a simple invisibility effect using basic computer vision techniques. Whether you’re new to coding or just curious about how tech can create such cool illusions, this guide will walk you through each step. It’s easier than you might think.

By the end of this post, you’ll be able to impress your friends with a working invisibility cloak effect—all while learning some exciting things about OpenCV and Python. Let’s get started!

What is OpenCV and How It Powers Modern Computer Vision Projects

OpenCV (Open Source Computer Vision Library) is the backbone for most modern computer vision projects. From face detection in your phone’s camera to object tracking in self-driving cars, OpenCV is the tool used by developers all over the world to manipulate images and videos in real time. It’s fast, flexible, and works great for both beginners and experts.

In this post, we’ll use OpenCV to analyze video frames, detect colors, and create a cool invisibility effect. OpenCV makes these tasks easier by giving us access to a range of image processing tools that allow us to manipulate visual data with just a few lines of code.

Introduction to OpenCV for Beginners

If you’re new to OpenCV, don’t worry! OpenCV may sound technical, but it’s very beginner-friendly. In this post, you’ll get hands-on practice with OpenCV’s Python bindings. Here’s a sneak peek at what using OpenCV in Python looks like:

    import cv2

    # Initialize webcam feed
    cap = cv2.VideoCapture(0)

    while True:
        ret, frame = cap.read()
        if not ret:  # Stop if the camera did not return a frame
            break
        cv2.imshow("Webcam Feed", frame)
        # Quit when the 'q' key is pressed
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    cap.release()
    cv2.destroyAllWindows()

This simple code snippet opens your webcam and streams the video to your screen—just like that. By the time we’re done, you’ll be writing code to turn parts of that video invisible!

Importance of OpenCV in Real-Time Video Processing

One of the reasons OpenCV stands out is its efficiency in handling real-time video processing. This means OpenCV can analyze and process video frames on the fly, making it perfect for building our invisible cloak project. Whether you’re detecting objects, applying filters, or tracking movement, OpenCV can keep up with real-time demands.

When building the invisibility effect, real-time video is critical because you need the cloak to disappear as soon as it’s placed in front of the camera. OpenCV makes sure the effect happens smoothly, without delays or hiccups, ensuring that your invisible cloak project is as cool as it sounds.

Understanding the Concept of the Invisible Cloak

The concept behind an invisible cloak is actually simple. The trick is to detect a specific color (in our case, the color of the cloak) and then replace the pixels of that color with the background. What you see is a video where it appears that the cloak has vanished, but in reality, we’re just swapping the pixels behind the cloak.

Here’s a breakdown of how it works:

- Capture the background before placing the cloak in front of the camera.

- Detect the color of the cloak using HSV color space (Hue, Saturation, Value).

- Replace the pixels where the cloak appears with the background.

This approach gives the illusion that the object (or cloak) has become invisible, while the background remains intact.
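To make the pixel swap concrete, here’s a minimal sketch that uses only NumPy on a tiny made-up image. The function name replace_cloak_pixels and the 2x2 test arrays are my own illustration, not part of OpenCV; the real project builds the mask from the camera feed, as shown later.

```python
import numpy as np

def replace_cloak_pixels(frame, background, cloak_mask):
    """Return a copy of `frame` with masked pixels taken from `background`.

    frame, background: H x W x 3 uint8 arrays of the same shape.
    cloak_mask: H x W array, non-zero where the cloak was detected.
    """
    # Add a trailing axis so the 2-D mask broadcasts over the 3 color channels.
    is_cloak = cloak_mask[..., np.newaxis] > 0
    return np.where(is_cloak, background, frame)

# Tiny 2x2 example: the live frame is all white, the saved background all black.
frame = np.full((2, 2, 3), 255, dtype=np.uint8)
background = np.zeros((2, 2, 3), dtype=np.uint8)
cloak_mask = np.array([[255, 0], [0, 0]], dtype=np.uint8)  # cloak covers the top-left pixel

result = replace_cloak_pixels(frame, background, cloak_mask)
print(result[0, 0].tolist())  # [0, 0, 0]       -> taken from the background
print(result[1, 1].tolist())  # [255, 255, 255] -> left as the live frame
```

Only the pixel flagged by the mask changes; everything else in the frame passes through untouched.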

How Does an Invisible Cloak Work in the Context of Computer Vision?

In computer vision, the key to creating this effect lies in color detection and background substitution. We identify the cloak based on its unique color range in the HSV color space, then replace the detected color with the saved background image. This process runs continuously across video frames, maintaining the illusion.

Here’s a simple snippet showing how we detect a red cloak (it assumes NumPy has been imported as np and that frame holds the current video frame):

# Convert frame to HSV

hsv = cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)

# Define the range for the color red in HSV

lower_red = np.array([0, 120, 70])

upper_red = np.array([10, 255, 255])

# Create a mask to detect the red color

mask = cv2.inRange(hsv, lower_red, upper_red)

This mask helps us isolate the red cloak in every frame, and then we simply replace those pixels with the saved background.

Why Use HSV Color Space for Invisible Cloak Detection?

You might wonder—why HSV color space? Why not use RGB, which is more commonly known? The reason is that HSV helps us separate color information (hue) from intensity (brightness), making it easier to detect colors across varying lighting conditions. This makes the cloak detection more reliable, even when the lighting in the room changes slightly.

In contrast, using RGB could make the cloak detection less accurate, as it’s sensitive to light variations. With HSV, we can better target specific shades, ensuring our invisibility effect works more consistently.
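You can see this for yourself without a camera. The sketch below uses Python’s built-in colorsys module and two made-up RGB samples (a green cloth in good light and the same cloth at half the brightness) to show that all three RGB numbers change while the hue stays put. The helper name to_hsv_degrees is just for illustration.

```python
import colorsys

def to_hsv_degrees(r, g, b):
    """Convert 0-255 RGB values to (hue in degrees, saturation, value)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return round(h * 360), round(s, 2), round(v, 2)

bright_green = (40, 200, 80)   # a well-lit green cloth (made-up sample values)
shaded_green = (20, 100, 40)   # the same cloth at half the brightness

print(to_hsv_degrees(*bright_green))  # (135, 0.8, 0.78)
print(to_hsv_degrees(*shaded_green))  # (135, 0.8, 0.39)
```

Only the value component drops when the cloth gets darker. Keep in mind that OpenCV stores hue for 8-bit images on a 0 to 179 scale (degrees divided by two), so 135 degrees here corresponds to a hue of about 67 in OpenCV.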

Real-World Applications of Invisibility in AR and VR

While the invisible cloak effect is fun, the concept is more than just a novelty. The same idea, picking out a region by its color and substituting other pixels for it, powers the green screen (chroma key) effects used in video production, where the background is replaced with different visuals. Closely related compositing techniques also show up in augmented reality (AR) and virtual reality (VR).

In AR, invisible objects can enhance experiences by allowing users to interact with the real world without visual obstructions. In VR, invisibility allows developers to create immersive environments that adapt to user actions.

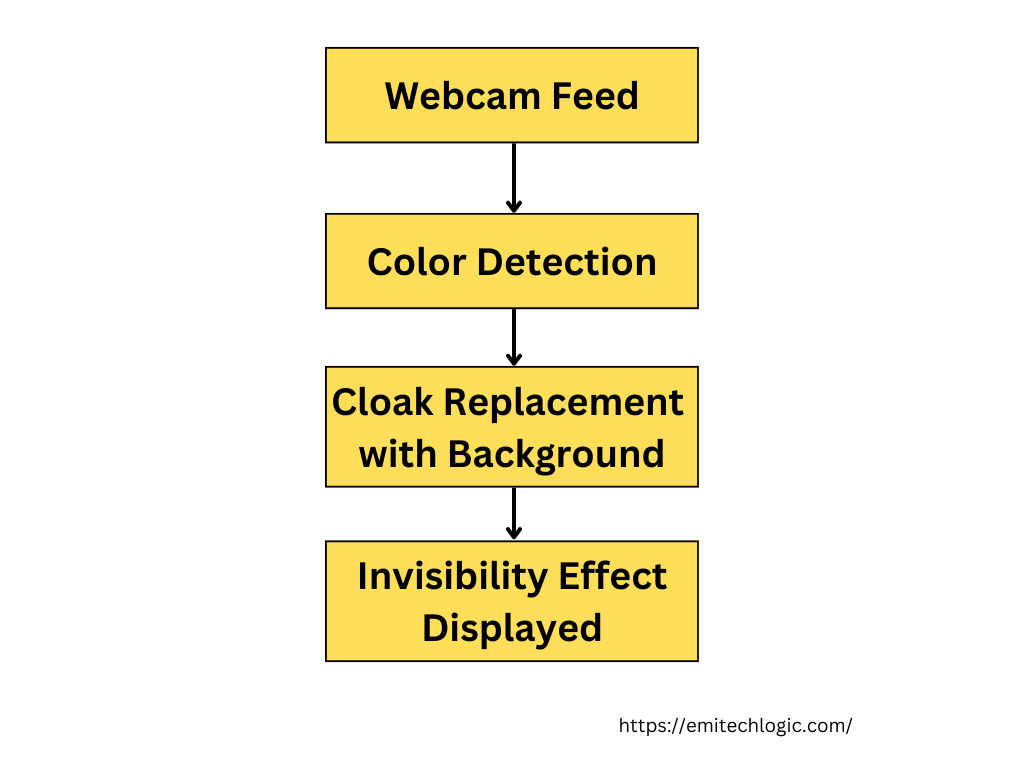

Example Diagram:

Prerequisites for Building an Invisible Cloak in Python

Before you jump into creating your invisible cloak with OpenCV, let’s make sure you’ve got everything you need to get started. Don’t worry—it’s not too complicated, but there are a few key things you’ll need in place to ensure a smooth experience. In this section, we’ll walk through the essential software, hardware, and tools required to build this project. These details will help you avoid common hiccups and ensure your setup is ready for real-time video processing.

Required Libraries and Tools for the Invisible Cloak Project

To build an invisible cloak in Python, there are some libraries and tools you’ll need to install. These will form the backbone of your project, handling everything from video capture to color detection and pixel manipulation.

Here’s a list of the main libraries:

- OpenCV: This is the key library for computer vision tasks, and we’ll be using it to capture video, detect colors, and replace the cloak with the background.

- NumPy: We’ll use NumPy for numerical operations, especially when we need to handle arrays (which is crucial when processing video frames).

- os and sys: Python’s built-in modules for interacting with the operating system and the interpreter. They ship with Python, so no extra installation is required, and the snippets in this post work without them.

These libraries might sound technical, but you’ll see just how easy they are to use once you get started with coding. It’s all about having the right tools for the job!

Installing OpenCV and NumPy for Python

To begin, you need to install OpenCV and NumPy on your system. If you’ve worked with Python before, you probably already know how easy it is to install packages using pip, Python’s package manager. If not, don’t worry! Here’s a simple step-by-step guide:

- Open your terminal or command prompt.

- Run the following commands to install OpenCV and NumPy:

pip install opencv-python

pip install numpy

These commands will automatically fetch and install the necessary libraries. Once installed, you’re all set to start writing code that can manipulate video in real time.
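If you’d like to confirm the install worked before going further, here’s a small sketch that checks whether the modules can be found. It uses only the standard library; the function name missing_packages is my own, and note that the opencv-python package is imported under the name cv2.

```python
import importlib.util

def missing_packages(module_names):
    """Return the subset of `module_names` that cannot be imported here."""
    return [name for name in module_names
            if importlib.util.find_spec(name) is None]

# "cv2" is the import name of the opencv-python package.
print(missing_packages(["cv2", "numpy"]))  # an empty list means both are installed
```

If a name shows up in the list, rerun the matching pip command inside the same Python environment you’ll use to run the project.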

Setting Up Python Environment for Real-Time Video Processing

It’s important to set up your Python environment correctly to handle real-time video processing. This isn’t too tricky, but there are a few things to keep in mind to ensure smooth performance.

- Python Version: Make sure you’re using Python 3.x (preferably Python 3.7 or higher). Older versions might not support all the features of OpenCV.

- IDE or Text Editor: Use a Python-friendly IDE like PyCharm, VSCode, or even a simple text editor like Sublime Text. These editors come with useful features like code suggestions and debugging tools, which will make your life easier as you build the invisible cloak.

- Virtual Environment: It’s often a good idea to set up a virtual environment so that the libraries you install don’t interfere with other Python projects. You can create and activate one with the following commands (the second line is for macOS and Linux; on Windows, run cloak_env\Scripts\activate instead):

python -m venv cloak_env

source cloak_env/bin/activate

By keeping things isolated in a virtual environment, you’ll ensure your project dependencies don’t conflict with anything else on your system.

Hardware Requirements for Real-Time Video Processing – Invisible Cloak

When dealing with real-time video processing, having decent hardware is essential. While you don’t need anything overly fancy, your hardware does need to be able to process video frames quickly, especially since OpenCV will be constantly reading, analyzing, and updating each frame of your video.

Here’s what you’ll need:

- A modern CPU: For smooth performance, a quad-core processor or better is ideal. Many OpenCV operations can make use of multiple cores, and a faster CPU helps with the color conversion and masking work that runs on every frame.

- RAM: 4GB of RAM is the bare minimum, but 8GB or more is recommended, especially if you’re running other programs alongside your project.

- GPU (Optional): If you’re using OpenCV for more advanced computer vision tasks, having a GPU can significantly improve performance. However, for the invisible cloak project, a GPU is not strictly necessary.

Best Cameras for Capturing Backgrounds Accurately – Invisible Cloak

To achieve the invisible cloak effect, it’s crucial that your camera captures clear and accurate frames. A poor-quality webcam can lead to blurry or inconsistent results, so investing in a decent camera can make a big difference. Here are a few tips when choosing your camera:

- Resolution: Ideally, you want a camera that can record in at least 720p (HD), but 1080p (Full HD) is better if you want sharper results.

- Frame Rate: A camera with a higher frame rate (at least 30 frames per second) ensures smooth and real-time processing, reducing lag between video frames and making the invisibility effect more convincing.

- Lighting Sensitivity: Make sure your camera performs well in different lighting conditions. Since the color of your cloak will need to be detected accurately, poor lighting can throw off the color detection process, leading to less accurate results.

Some affordable webcam options include the Logitech C920 and Microsoft Lifecam HD-3000, both of which provide good quality without breaking the bank.

Importance of a Fast CPU/GPU for Real-Time Performance

While building the invisible cloak project might seem like a fun experiment, the reality is that it involves processing a lot of video data in real time. Your computer needs to be able to capture each frame, analyze the colors, and replace pixels in real-time—all of which can be pretty demanding on your system.

This is where having a fast CPU and, if possible, a GPU (graphics processing unit) becomes helpful. A faster processor ensures your project runs smoothly, without lag. In case you’re using OpenCV for more advanced tasks down the line—such as object detection or AR effects—a GPU can significantly accelerate these operations. However, for this specific project, a strong CPU should be enough.

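Example Code: Checking your CPU usage in Python

    import psutil  # Third-party package: pip install psutil

    # Print CPU usage measured over a one-second window
    # (without an interval, the first call returns a meaningless 0.0)
    print(f"Current CPU usage: {psutil.cpu_percent(interval=1)}%")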

This small piece of code can help you keep track of how much CPU your project is using while running. If you notice your CPU usage spiking too high, it might be time to close other programs or consider a hardware upgrade.
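CPU percentage is only half the story; what you really feel as lag is how long each frame takes to process. As a rough guide, a 30 frames-per-second camera leaves about 33 ms per frame. Here’s a minimal timing sketch using only the standard library. The measure_fps helper and the fake workload are placeholders of mine; in the real project you would pass in your own per-frame function and frames.

```python
import time

def measure_fps(process_frame, frames):
    """Run `process_frame` on every item of `frames` and return frames per second."""
    start = time.perf_counter()
    count = 0
    for frame in frames:
        process_frame(frame)
        count += 1
    elapsed = time.perf_counter() - start
    # Guard against a zero elapsed time on very fast runs.
    return count / elapsed if elapsed > 0 else float("inf")

# Stand-in workload: summing small lists instead of processing real video frames.
fake_frames = [list(range(1000)) for _ in range(200)]
fps = measure_fps(sum, fake_frames)
print(f"Processed about {fps:.0f} frames per second")
```

If the number you get for your real processing function is well below your camera’s frame rate, that’s a sign the effect will stutter.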

Step-by-Step Guide: How to Create an Invisible Cloak Using OpenCV

1. Initializing Video Capture and Setting Up the Camera – Invisible Cloak

The first step in creating the invisible cloak effect is to initialize your camera and capture the background. This is crucial because the background will eventually replace the pixels that correspond to the cloak’s color, making it appear invisible. So, we need to give the camera time to stabilize and capture the background accurately.

Why Do We Initialize the Camera and Capture the Background?

You might be wondering why capturing the background matters so much. The trick behind the invisible cloak is that the program keeps track of what’s behind the cloak. Whenever the cloak comes into view, OpenCV replaces the pixels where the cloak appears with the corresponding pixels from the previously captured background. Without this step, the illusion would fall apart.

Let’s walk through the Python code to make this happen.

Code Explanation

    import cv2
    import numpy as np
    import time

    # Initialize the video capture object
    cap = cv2.VideoCapture(0)

    # Give the camera some time to warm up
    time.sleep(3)

    # Capture the background (each read overwrites the previous one,
    # so the last of the 30 frames is the one that is kept)
    for i in range(30):
        ret, background = cap.read()

Explanation of the Code: How to Capture the Background for the Cloak Effect

Let’s break down each part of the code so you understand how it all works:

- import cv2: This imports the OpenCV library, which we’ll be using to handle video capture, color detection, and the cloak effect itself.

- import numpy as np: NumPy is used for handling arrays, which is important because each video frame is represented as an array of pixels.

- cap = cv2.VideoCapture(0): This line initializes the camera. The argument 0 refers to the default webcam on your device. If you have more than one camera, you can change this value to access a different one.

- time.sleep(3): We give the camera a brief pause (3 seconds) to stabilize. This is important because cameras usually take a moment to adjust to the lighting and focus properly. If you skip this, you might end up with a blurry or inconsistent background.

- for i in range(30): ret, background = cap.read(): In this loop, we read 30 frames from the camera. Each read overwrites background, so the last frame is the one we keep. Why 30 frames? The extra reads give the camera time to settle before the frame we actually use. You can adjust this number, but 30 frames usually work well for most setups.

Example Diagram: Capturing the Background

Imagine standing in front of your camera. The camera captures everything it sees, which includes your background (e.g., the wall behind you). When the cloak comes into the frame, OpenCV replaces the pixels of the cloak with the background pixels it had captured earlier, creating the illusion of invisibility.

Why Does Capturing Multiple Frames Matter?

The reason we read several frames instead of just one is to get a stable background. The first frames from a webcam are often too dark or slightly off while auto-exposure and white balance settle, and a single early frame might also catch noise or slight movement. Note that the loop keeps only the last frame it reads; the earlier reads simply give the camera time to settle.
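If you want the extra frames to actually contribute to a cleaner background, one option (not what the capture loop above does) is to combine them with a per-pixel median. Here’s a minimal sketch on synthetic frames; the function name median_background is my own, and with a real camera you would collect the frames from cap.read() in a list first.

```python
import numpy as np

def median_background(frames):
    """Combine several frames of a static scene into one background image."""
    stack = np.stack(frames, axis=0)  # shape: (N, H, W, 3)
    return np.median(stack, axis=0).astype(np.uint8)

# Five synthetic 2x2 gray frames; the third has one noisy (bright) pixel.
frames = [np.full((2, 2, 3), 100, dtype=np.uint8) for _ in range(5)]
frames[2][0, 0] = 255

background = median_background(frames)
print(background[0, 0].tolist())  # [100, 100, 100] -> the outlier is ignored
```

The median discards values that show up in only a few frames, so a brief flicker or sensor noise doesn’t end up baked into the background.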

Code Snippet: Showing the Captured Background

To ensure the background has been captured correctly, you can display it using cv2.imshow(). This lets you visually confirm that the background is clear before proceeding with the next steps.

    # Show the captured background
    cv2.imshow('Captured Background', background)
    cv2.waitKey(0)  # Wait for a key press before continuing

This small snippet displays the captured background in a window, so you can double-check that everything looks good.

2. Understanding HSV Color Space for Cloak Detection

When creating an invisible cloak with OpenCV, the color of the cloak plays a crucial role. To detect the cloak and make it “disappear,” you need to isolate its color from everything else in the video feed. While RGB color space might seem like the obvious choice, there’s a better way: HSV color space. Let’s break down why HSV (Hue, Saturation, Value) is so effective for this kind of project, and how to use it in your code to detect the cloak color accurately.

Why Use HSV Over RGB for Color-Based Segmentation?

Most people are familiar with RGB (Red, Green, Blue) because it’s the most common way to represent colors digitally. But there’s a challenge when it comes to using RGB for detecting specific colors, especially in a real-time video feed. The issue is that RGB is not very intuitive for distinguishing similar shades of color, and slight changes in lighting can make the detection process unreliable.

Here’s where HSV color space comes in handy. Instead of representing colors as combinations of red, green, and blue, HSV breaks them down into:

- Hue: The actual color (e.g., red, blue, green).

- Saturation: The intensity of the color (from dull to vivid).

- Value: The brightness of the color.

This makes HSV much better for identifying colors in changing environments. For example, you can isolate just the hue (the color) while ignoring changes in brightness and intensity, which are common in real-world lighting conditions. This consistency is why HSV is the preferred choice for color-based segmentation in computer vision tasks like this.

How HSV Color Space Helps in Isolating Specific Colors

In the context of our invisible cloak project, using HSV allows us to detect the specific color of the cloak (whether it’s red, green, blue, etc.) even if the lighting in the room changes. Imagine you’re wearing a red cloak. Using HSV, we can focus on the red hue and ignore shadows or highlights that might affect the brightness or saturation. This accuracy helps the program reliably track the cloak and apply the invisibility effect.

Let’s look at how to convert your video feed into HSV using OpenCV.

Code for Converting the Video Feed to HSV

Here’s a simple piece of code that converts each frame of your video feed from BGR (the default color space in OpenCV) to HSV:

# Convert the current frame to HSV color space

hsv = cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)

Code Breakdown:

cv2.cvtColor(frame, cv2.COLOR_BGR2HSV): This line converts the frame from BGR (Blue-Green-Red) to HSV. In OpenCV, images are captured in BGR format by default, so we need to convert them to HSV for better color detection.

Once the frame is in HSV color space, you can define a range for the color you want to detect—in this case, the color of your cloak.

Adjusting the HSV Range for Different Cloak Colors

Every color in HSV can be defined by a range of values for hue, saturation, and value. Let’s say your cloak is red. You need to specify the hue range for red, along with ranges for saturation and value to ensure you’re detecting the right shade of red, no matter the lighting conditions.

Here’s how you can adjust the HSV range to detect red:

# Define the range of the color (red in this case) in HSV

lower_red = np.array([0, 120, 70]) # Lower bound for red hue

upper_red = np.array([10, 255, 255]) # Upper bound for red hue

# Create a mask for the red color using the defined range

mask = cv2.inRange(hsv, lower_red, upper_red)

Code Breakdown:

- lower_red and upper_red: These arrays represent the lower and upper bounds of the red color in HSV. You can adjust these values depending on the color of your cloak. For example, if you’re using a blue cloak, you would need to change the hue values accordingly.

- cv2.inRange(hsv, lower_red, upper_red): This function creates a mask in which the pixels within the defined range (the cloak’s color) are set to white and everything else is set to black. This mask helps isolate the cloak for the invisibility effect.

You can adjust these HSV values for different cloak colors. For example:

- Green cloak: Use a hue range around [35, 85] for green.

- Blue cloak: Use a hue range around [100, 130] for blue.
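To keep these ranges in one place, you could store them in a small lookup table. The sketch below is one way to do it. The hue bounds come from the values quoted in this post; reusing red’s saturation and value limits (120 to 255 and 70 to 255) for green and blue is my assumption, and the names HSV_RANGES and hsv_bounds are made up for the example. Red gets two entries because it sits at both ends of the hue scale, which the next step covers.

```python
import numpy as np

# Hue bounds are the ones quoted in this post. Reusing red's saturation and
# value limits (120-255 and 70-255) for green and blue is an assumption.
HSV_RANGES = {
    "red":   [((0, 120, 70), (10, 255, 255)), ((170, 120, 70), (180, 255, 255))],
    "green": [((35, 120, 70), (85, 255, 255))],
    "blue":  [((100, 120, 70), (130, 255, 255))],
}

def hsv_bounds(color):
    """Return a list of (lower, upper) uint8 arrays for a named cloak color."""
    return [(np.array(lo, dtype=np.uint8), np.array(hi, dtype=np.uint8))
            for lo, hi in HSV_RANGES[color]]

for lower, upper in hsv_bounds("blue"):
    print(lower.tolist(), upper.tolist())  # [100, 120, 70] [130, 255, 255]
```

Each (lower, upper) pair can be passed straight to cv2.inRange. Treat the numbers as starting points; real fabric and lighting usually need some tuning.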

Example Diagram: HSV Color Space

To help visualize, here’s a simplified diagram of how HSV color space is structured:

3. Defining the Mask for Cloak Detection

Now that you’ve converted the video feed to the HSV color space, the next important step is creating a mask that isolates the color of the cloak. This mask essentially tells the program which parts of the video feed correspond to the cloak and should be made invisible. By defining the color range and refining the mask, we can ensure the cloak detection is both accurate and consistent.

Creating a Mask for Cloak Color Segmentation

A mask is a black-and-white image where the pixels representing the cloak are turned white (255), and everything else is black (0). This binary mask helps the program identify where the cloak is in the frame, so it knows what to “make invisible” by replacing the cloak with the background.

To create this mask, you need to define the color range of the cloak in the HSV color space. We do this by setting upper and lower bounds for the color we want to detect. In this example, we’re detecting a red cloak.

Here’s the code for defining the color range for red:

# Define the lower and upper bounds for red cloak in HSV

lower_red = np.array([0, 120, 70])

upper_red = np.array([10, 255, 255])

mask1 = cv2.inRange(hsv, lower_red, upper_red)

# Red has two ranges in the HSV spectrum, so define the second range

lower_red = np.array([170, 120, 70])

upper_red = np.array([180, 255, 255])

mask2 = cv2.inRange(hsv, lower_red, upper_red)

Code Explanation: How to Define the Color Range for the Cloak

- np.array([0, 120, 70]) and np.array([10, 255, 255]): These arrays define the lower and upper bounds of the first red range (hues 0 to 10). The second pair, np.array([170, 120, 70]) and np.array([180, 255, 255]), covers the second red range (hues 170 to 180). We need both because hue is circular and red sits right at the wrap-around point, so it shows up at both ends of OpenCV’s hue scale.

- cv2.inRange(hsv, lower_red, upper_red): This function creates a binary mask where pixels within the specified color range are set to white, and everything else is set to black. The mask generated by this function will highlight the areas in the frame where the cloak appears.

A note from my own testing: when I first tried this on a red cloak, my initial mask was a little patchy. It wasn’t fully detecting the cloak, and parts of it were still visible. After tweaking the color range and testing in different lighting conditions, I found this two-range approach for red to be the most effective.

Using Morphological Transformations to Refine the Mask

Once you’ve created the mask, the next step is to refine it. The mask might not be perfect initially—it could have noise (small white spots where there shouldn’t be any) or gaps (holes where the cloak should be detected). This is where morphological transformations come into play.

We use two main transformations:

- Opening: Removes small noise from the mask (white spots).

- Dilation: Expands the white areas in the mask to close any small gaps.

Let’s look at the code for refining the mask:

# Combine both masks (mask1 and mask2)

mask = mask1 + mask2

# Use morphological transformations to refine the mask

mask = cv2.morphologyEx(mask, cv2.MORPH_OPEN, np.ones((3, 3), np.uint8)) # Removes noise

mask = cv2.morphologyEx(mask, cv2.MORPH_DILATE, np.ones((3, 3), np.uint8)) # Expands the mask

Code Explanation: Refining the Mask

- mask = mask1 + mask2: This combines the two masks we created earlier (since red spans two ranges in HSV) into a single mask. Because the two hue ranges don’t overlap, no pixel is white in both masks, so the addition can’t overflow.

- cv2.morphologyEx(mask, cv2.MORPH_OPEN, np.ones((3, 3), np.uint8)): This line performs an opening operation, which removes small white spots or noise from the mask. The np.ones((3, 3), np.uint8) call creates a small 3×3 kernel that defines the neighborhood around each pixel, and this kernel helps remove noise.

- cv2.morphologyEx(mask, cv2.MORPH_DILATE, np.ones((3, 3), np.uint8)): This line performs dilation, which expands the white areas of the mask. Dilation is useful when you have small gaps in the mask and need to “fill them in.” By doing this, we help keep the cloak’s mask continuous, without breaks or missing spots.

Why Morphological Transformations Are Important

Without these transformations, your mask might look grainy, or parts of the cloak might not be detected properly. For example, noise in the background can produce false positives, small patches of the scene that get replaced even though no cloak is there, while shadows or folds in the cloak can leave gaps where the cloak stays visible. Opening removes the stray patches, and dilation helps give a solid, continuous cloak mask.

When I ran the program without these refinements the first time, I noticed that parts of the cloak weren’t disappearing correctly, especially near the edges. Adding these morphological transformations smoothed everything out and gave the final effect a much more polished look.

Example Diagram: Mask Refinement Process

To visualize the effect of morphological transformations, imagine the original mask having small gaps and noisy spots. After applying opening, the noise disappears, and dilation makes the white areas more solid and connected, creating a more accurate detection of the cloak.

Code Snippet for Testing the Mask

To see the refined mask in action, you can display it like this:

    # Show the refined mask
    cv2.imshow('Refined Cloak Mask', mask)
    cv2.waitKey(1)  # Pause 1 ms so OpenCV can redraw the window (use 0 to wait for a key press)

This will display the refined mask on the screen so you can adjust the code until it detects the cloak perfectly.

4. Creating the Invisibility Effect

This is where the magic happens — we’re now going to create the actual invisibility effect by combining the mask with the background and the live video feed. This step brings everything together to make it look like the cloak has vanished from the scene.

To break it down, the goal here is to replace the area of the video feed where the cloak is detected (based on the mask) with the captured background. At the same time, we’ll keep everything else (non-cloak areas) in the frame visible. By blending these two together, the cloak appears invisible, and the background shines through!

Combining the Mask with the Background and Live Video Feed

We already have the mask that identifies where the cloak is in the video frame. Now, we’ll use bitwise operations to:

- Replace the cloak with the captured background.

- Keep everything else (non-cloak areas) as is.

Let’s walk through the code:

# Extract the cloak area from the background

cloak_area = cv2.bitwise_and(background, background, mask=mask)

# Invert the mask to get everything that is not the cloak

mask_inv = cv2.bitwise_not(mask)

# Extract the non-cloak area from the current frame

non_cloak_area = cv2.bitwise_and(frame, frame, mask=mask_inv)

# Combine the cloak area (background) with the non-cloak area (current frame)

final_output = cv2.addWeighted(cloak_area, 1, non_cloak_area, 1, 0)

Code Explanation: How to Make the Cloak Area Invisible by Blending Frames

Let’s break this code down step-by-step so you can understand how each line contributes to the invisibility cloak effect:

1. Extracting the cloak area from the background:

cloak_area = cv2.bitwise_and(background, background, mask=mask)

Here, we are using the bitwise_and operation to “extract” the cloak area from the captured background. This function compares each pixel of the background and the mask. Wherever the mask is white (the cloak area), it retains the corresponding pixel from the background. Everywhere else (black areas of the mask), it discards the background.

In other words, this line says, “show me the background only where the cloak is.”

2. Inverting the mask to get the non-cloak area:

mask_inv = cv2.bitwise_not(mask)

Since the mask is currently identifying the cloak, we need to invert it to get the non-cloak area. This is because we don’t want the cloak to be visible, but we want everything else in the frame (like the person’s body, face, etc.) to remain visible.

By doing a bitwise_not, we flip the mask: what was white becomes black, and vice versa. Now, the areas that aren’t the cloak are white, which allows us to work on the rest of the frame.

3. Extracting the non-cloak area from the current frame:

non_cloak_area = cv2.bitwise_and(frame, frame, mask=mask_inv)

With the inverted mask, we can now extract the non-cloak area from the current frame. This means that anything in the frame that is not the cloak (everything where the original mask is black, which is where the inverted mask is white) will be retained in the output. Essentially, this keeps the rest of the scene unchanged.

4. Combining the cloak area (background) with the non-cloak area (current frame):

final_output = cv2.addWeighted(cloak_area, 1, non_cloak_area, 1, 0)

Finally, we combine the two parts, the cloak area (background) and the non-cloak area (current frame), using the cv2.addWeighted function. This function computes a weighted sum of the two images. With both weights set to 1, it simply adds them, and because each image is black wherever the other has content, the sum places the two parts side by side without mixing them. The result is that the cloak area is replaced with the background, while the rest of the scene (person, environment) remains the same.

Understanding Bitwise Operations in Image Processing

At this point, you might be wondering what exactly bitwise operations do in this process. Bitwise operations compare the pixels of two images (or an image and a mask) and perform operations on them. These operations are incredibly useful for image masking, blending, and segmentation in OpenCV.

- Bitwise AND (cv2.bitwise_and): This operation keeps only the pixels where both the image and mask are white (or non-zero). It’s perfect for isolating parts of the image, in this case the cloak or the non-cloak areas.

- Bitwise NOT (cv2.bitwise_not): This flips the binary values of the mask, turning white pixels to black and vice versa, which allows us to isolate everything but the cloak.

By using these operations, we’re able to create a final image where the cloak is made “invisible” and the background is shown in its place.

Final Output Display Using OpenCV

Once you’ve created the final output, you can display it using OpenCV’s imshow function, which shows the processed frame in a window. Here’s how you can add that:

    # Display the final output on the screen
    cv2.imshow('Invisible Cloak Effect', final_output)
    cv2.waitKey(1)  # Pause 1 ms so OpenCV can redraw the window (use 0 to wait for a key press)

Every frame of the video is processed in real-time to achieve this effect, creating a continuous, live invisibility cloak effect that mimics the famous cloak from Harry Potter!

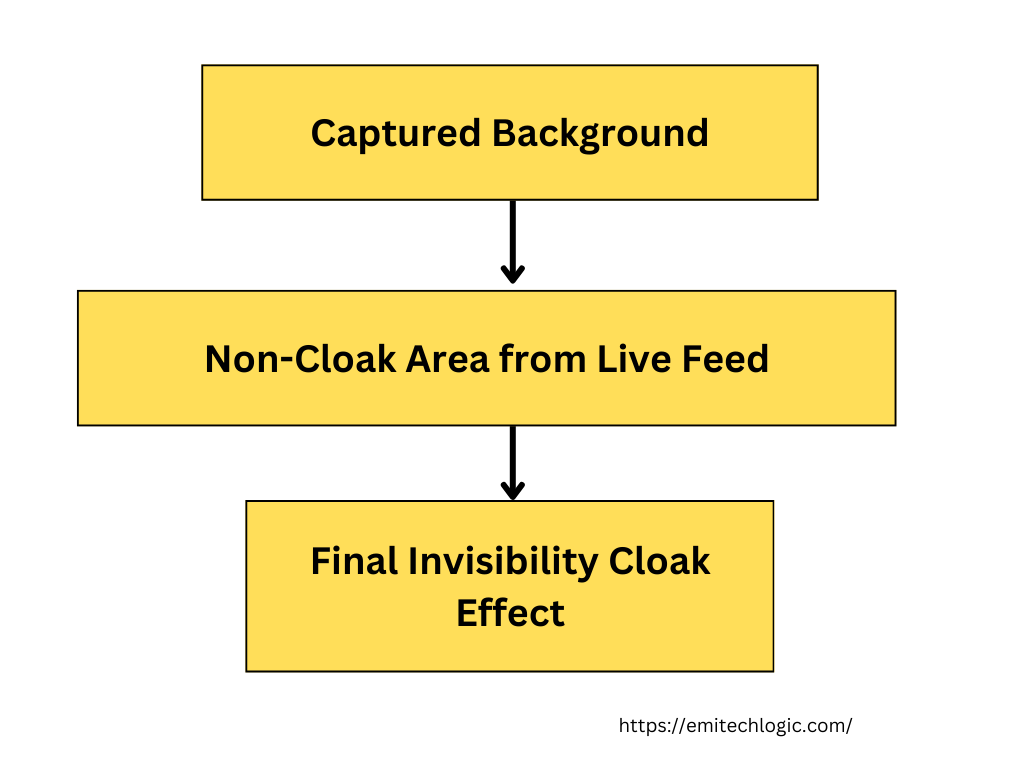

Diagram: Visualizing the Process

Here’s a simple diagram to help you visualize how the cloak and non-cloak areas are combined:

The cloak area is replaced with the background, while the rest of the video feed remains unchanged. This blending creates the illusion of invisibility!

5. Running and Testing the Invisible Cloak

Now that you’ve written the code for creating the invisibility effect, it’s time to run the Python script and see your cloak in action! This final step will walk you through testing the invisibility effect, optimizing the video output for a smooth experience, and ensuring that you can exit the program gracefully.

How to Run the Python Script and Test the Invisibility Effect

Before running the script, ensure you’ve got all the necessary libraries installed, especially OpenCV. You can do this with a simple pip command:

pip install opencv-python

Once you’ve verified the installation, you’re ready to run your invisibility cloak code. Here’s how you can do that:

- Open a terminal or command prompt in the directory where your script is saved.

- Run the Python script with the following command:

python invisibility_cloak.py

This will open up a window with your live video feed. Now, hold up the cloak (or any solid-colored fabric you’ve chosen) in front of the camera and watch the magic unfold! If everything’s working correctly, you should see the background replacing the cloak area, making it appear invisible.

Optimizing the Video Output for a Smooth Invisibility Experience

One thing I learned from testing this is that lighting and background can make or break the effect. To optimize the invisibility cloak effect, here are some tips:

- Good lighting: Ensure your room has adequate lighting. Shadows can interfere with the cloak detection, so the more evenly lit the space, the better.

- Uniform background: The cloak effect works best when the background is static and has minimal variation. If your background is too busy, the effect might not look as clean.

- Camera quality: If you’re using a low-quality webcam, the output may look grainy. A high-resolution camera can help make the effect sharper.

Final Code to Display the Invisibility Cloak

To display the final output on the screen and keep it running until the user decides to exit, we use OpenCV’s imshow function. The loop ensures that each video frame is processed in real-time. Here’s the final section of the code:

    # Show the output (still inside the while loop)

    cv2.imshow("Invisibility Cloak", final_output)

    # Exit the program when 'q' is pressed

    if cv2.waitKey(1) & 0xFF == ord('q'):

        break

# Release the video capture and close all windows

cap.release()

cv2.destroyAllWindows()

How to Exit the Program Gracefully

A key part of any interactive program is allowing the user to exit it smoothly. In this case, pressing the ‘q’ key will trigger the exit. Once this happens, the program:

- Releases the video capture device, ensuring that the webcam is freed up for other applications.

- Closes all OpenCV windows, so no lingering windows remain open after the script ends.

This way, you ensure that your program runs efficiently and exits cleanly, without any loose ends.
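One way to make the cleanup more robust is to wrap the loop in try/finally, so the camera is released even if the stream ends or the processing code raises an error. The sketch below is an assumption-based illustration, not the tutorial's exact loop: run_loop, handle_frame, and FakeCapture are hypothetical names, and FakeCapture only stands in for a webcam so the example runs without hardware.

```python
def run_loop(cap, handle_frame):
    """Process frames until the stream ends or handle_frame returns False."""
    frames_seen = 0
    try:
        while True:
            ret, frame = cap.read()
            if not ret:  # camera unplugged or stream ended
                break
            frames_seen += 1
            if handle_frame(frame) is False:  # e.g. the user pressed 'q'
                break
    finally:
        cap.release()  # runs even if handle_frame raises an exception
    return frames_seen

class FakeCapture:
    """Hypothetical stand-in for cv2.VideoCapture that yields n frames."""
    def __init__(self, n):
        self.remaining = n
        self.released = False

    def read(self):
        if self.remaining == 0:
            return False, None
        self.remaining -= 1
        return True, "frame"

    def release(self):
        self.released = True

cap = FakeCapture(3)
frames_seen = run_loop(cap, lambda frame: True)
print(frames_seen, cap.released)
```

With a real webcam you would pass cv2.VideoCapture(0) as cap, let handle_frame call cv2.imshow and return False when 'q' is pressed, and call cv2.destroyAllWindows() after run_loop returns.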

Challenges You May Face While Creating an Invisible Cloak

Creating an invisible cloak using OpenCV can be an exciting project, but it’s not without its challenges. In this section, we’ll explore common obstacles you might encounter and provide practical solutions to help you overcome them.

Adjusting the Color Range for Different Cloak Colors

One of the first hurdles in this project is finding the right color range for your cloak. If your cloak is a solid color, such as red, green, or blue, it’s essential to define the color range accurately in the HSV color space.

For instance, if you are using a red cloak, two ranges are often needed due to the way colors wrap around in HSV:

# Defining the HSV range for red

lower_red1 = np.array([0, 120, 70])

upper_red1 = np.array([10, 255, 255])

lower_red2 = np.array([170, 120, 70])

upper_red2 = np.array([180, 255, 255])

Adjusting these ranges can be a trial-and-error process. If you notice that some parts of your cloak are not being detected, you may need to fine-tune the values. Using a color picker tool can help you identify the exact HSV values of your cloak fabric.

Troubleshooting Issues with Color Detection and Background Noise

Background noise can significantly affect how well the cloak is detected. If there are other bright or similar colors in the background, they can confuse the color detection algorithm.

To minimize this issue, ensure your background is as uniform as possible. If you’re using a busy backdrop, consider moving to a simpler setting. It’s also helpful to keep the camera steady to prevent shifting backgrounds during the video feed.

In cases where detection still isn’t reliable, applying morphological transformations like opening and dilation can help refine the mask:

mask = cv2.morphologyEx(mask, cv2.MORPH_OPEN, np.ones((5, 5), np.uint8))

mask = cv2.morphologyEx(mask, cv2.MORPH_DILATE, np.ones((5, 5), np.uint8))

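Opening removes small specks of noise from the mask, and dilation then expands the remaining regions slightly so they cover the cloak’s edges and close small gaps, improving detection accuracy.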

Dealing with Lighting Conditions in the Room

Lighting conditions can drastically impact the performance of your invisibility cloak. Bright lights, shadows, and changing light levels can all confuse the color detection system.

For example, harsh overhead lights can create shadows on your cloak, which may cause parts of it to not be detected. When I first tested my cloak in a well-lit room, I was amazed at how the fabric color shifted under different lighting.

To tackle this, aim for consistent, diffused lighting. You might want to use soft white bulbs or even natural light, ensuring it’s uniform across the area.

How Lighting Affects Color Detection in HSV Space

Lighting has a profound effect on how colors are perceived, particularly in the HSV color space. In bright conditions, colors may appear washed out, while in dim conditions, they may appear deeper and darker.

For example, under bright lighting, overexposed parts of the cloak lose saturation (and a strong color cast can shift the hue), causing them to fall outside your predefined color range. Conversely, in dim light, the value channel can drop below your lower bound, so dark folds of the fabric go undetected. This variability can lead to inconsistent results when testing the cloak.

To mitigate these issues, consider calibrating your camera settings for exposure and gain. Keeping the settings consistent can help maintain accurate color detection.

Frame Rate Drops During Real-Time Processing

Real-time processing can be demanding on your system, especially if your code isn’t optimized. If the frame rate drops, the invisibility effect will start to lag and look choppy.

Optimizing your code can help significantly. Here are some strategies:

1. Resize the Video Frames: If your webcam resolution is high, resizing the frames to a smaller size can improve processing speed without losing too much quality.

frame = cv2.resize(frame, (640, 480))

2. Reduce Frame Processing Rate: You don’t need to process every frame. By skipping a few frames, you can reduce the computational load:

frame_count += 1 # frame_count is set to 0 before the loop starts

if frame_count % 2 == 0: # Process every second frame

    pass # your processing code here

3. Use Efficient Data Types: Keep frames and masks in np.uint8, the 8-bit type OpenCV uses for standard color images. Accidentally converting them to a float type makes each array several times larger and slows down processing.

How to Optimize Your Code for Better Performance

Optimization is key to achieving smooth real-time video processing. Here are some additional tips to consider:

- Profile Your Code: Use profiling tools to identify which parts of your code are taking the most time. This will help you focus your optimization efforts effectively.

- Minimize Print Statements: While debugging, avoid using too many print statements in your loop, as they can slow down processing.

- Implement Multithreading: If you are familiar with threading, consider implementing it to separate the video capture from the processing. This can help maintain frame rates while processing is ongoing.
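As an illustration of the multithreading tip, here is a minimal sketch of a reader thread that keeps only the newest frame, so the processing loop never waits on the camera. ThreadedCapture and FakeCamera are hypothetical classes written for this example; FakeCamera stands in for a webcam so the code runs without hardware.

```python
import threading
import time

class ThreadedCapture:
    """Reads frames on a background thread and keeps only the newest one."""
    def __init__(self, source):
        self.source = source  # any object with read() -> (ok, frame)
        self.lock = threading.Lock()
        self.latest = None
        self.running = True
        self.thread = threading.Thread(target=self._reader, daemon=True)
        self.thread.start()

    def _reader(self):
        while self.running:
            ok, frame = self.source.read()
            if not ok:
                break
            with self.lock:
                self.latest = frame  # overwrite: older frames are dropped

    def read(self):
        with self.lock:
            return self.latest  # None until the first frame arrives

    def stop(self):
        self.running = False
        self.thread.join()

class FakeCamera:
    """Hypothetical camera that returns an increasing frame number."""
    def __init__(self):
        self.count = 0

    def read(self):
        time.sleep(0.001)  # pretend each frame takes a moment to arrive
        self.count += 1
        return True, self.count

capture = ThreadedCapture(FakeCamera())
time.sleep(0.05)          # the main loop would do its processing here
newest = capture.read()
capture.stop()
print("newest frame number:", newest)
```

Because cv2.VideoCapture also exposes read() returning a success flag and a frame, the same wrapper should accept cv2.VideoCapture(0) as its source; remember to call release() on the camera after stop().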

Latest Advancements in Real-Time Invisibility Effects

The world of real-time invisibility effects has been evolving rapidly, driven by advancements in technology and artificial intelligence (AI). In this section, we will explore some of the latest innovations in this field, focusing on how AI and deep learning are reshaping our approach to invisibility and object removal in video processing.

AI and Deep Learning Approaches for Real-Time Object Removal

Traditional methods for achieving invisibility, like those we discussed with OpenCV, rely heavily on color detection and background subtraction. However, as technology progresses, AI and deep learning approaches are now offering more sophisticated solutions for real-time object removal.

For instance, machine learning models can be trained on large datasets to recognize various objects within a scene. These models learn to differentiate between the foreground and background, enabling them to effectively remove unwanted objects while keeping the rest of the scene intact.

How GANs and AI Models Are Revolutionizing Invisibility and Object Removal

Generative Adversarial Networks (GANs) have become a buzzword in the field of AI, especially for tasks involving image manipulation. GANs consist of two neural networks: a generator that creates images and a discriminator that evaluates them. This unique architecture allows GANs to produce high-quality images and video content.

In the context of invisibility effects, GANs can be trained to predict what the background would look like without the object. For example, when an object is detected, the generator fills in the area with pixels that match the surrounding environment. This results in a much more natural-looking outcome compared to traditional methods.

Here’s a simplified illustration of how a GAN might work for object removal:

- The input video is processed frame by frame.

- The generator creates a background prediction for the frame without the object.

- The discriminator judges whether the generated image looks like a real frame or a generated one.

- The two networks improve together until the generated image becomes nearly indistinguishable from reality.

This technology is transforming how filmmakers and content creators manipulate video, offering them tools that were once only imaginable.

Using Neural Networks for Advanced Video Manipulation

Neural networks have significantly improved our ability to manipulate video in real-time. Unlike basic algorithms, neural networks learn from vast amounts of data and can recognize complex patterns.

For instance, with tools like TensorFlow or PyTorch, developers can create custom models to handle specific tasks like removing objects or applying effects. These networks can learn to recognize not just the colors but also the shapes and textures of objects, enabling them to remove them intelligently from scenes.

An anecdote I often share is about a project I worked on using a convolutional neural network (CNN) for video processing. The results were astounding—areas where objects had been removed looked completely natural, and it sparked a new level of creativity in how we could present content.

How AI-Based Object Removal Tools Like NVIDIA Maxine Are Changing the Game

NVIDIA has been a frontrunner in integrating AI with video technology. Their Maxine platform offers a suite of AI-powered tools that can perform real-time video enhancements, including object removal.

With Maxine, users can remove backgrounds, enhance video quality, and even apply effects like virtual backgrounds without needing elaborate setups. The platform leverages advanced AI models that run efficiently on GPUs, ensuring high performance and low latency, which is crucial for real-time applications.

What’s particularly exciting about Maxine is its accessibility. You don’t need to be a seasoned programmer to use it. Many creators are now able to harness this power, leading to a surge in innovative video content.

Conclusion: Creating an Invisible Cloak Using OpenCV

In this journey through creating an invisible cloak using OpenCV, we’ve explored the exciting intersection of technology and creativity. From understanding the basics of color detection to implementing complex video processing techniques, each step has unveiled the power of computer vision in bringing imaginative concepts to life.

By using tools like OpenCV, we’ve learned how to capture backgrounds, define color ranges, and blend video feeds to achieve that mesmerizing invisibility effect. The project not only demonstrates the technical aspects but also invites you to engage with a fun and innovative application of programming.

As you embark on your own projects, remember that experimentation is key. Don’t hesitate to tweak color ranges or explore different environments—every small adjustment can lead to surprising results. Whether you’re a beginner or an experienced programmer, the skills gained from this project can serve as a foundation for more advanced endeavors in video processing and beyond.

Thank you for joining me on this fascinating exploration. I hope you’re inspired to continue experimenting with OpenCV and push the boundaries of what’s possible in the realm of computer vision. If you have any questions or want to share your own experiences, feel free to reach out!

External Resources

OpenCV Documentation

- The official OpenCV documentation provides comprehensive guides, tutorials, and API references that can help you understand how to use OpenCV effectively.

OpenCV Tutorials

- This section of the OpenCV site features tutorials on various topics, including color detection and video processing, which are crucial for your project.

FAQs

You will need a computer with Python installed, along with the OpenCV and NumPy libraries. A webcam or camera is also required to capture video and the background.

The effect works by detecting a specific color (like a green cloak) and replacing that area with the captured background. This is achieved through color detection and image processing techniques.

Yes, you can use any color, but it’s essential to adjust the color range in the HSV space to match the cloak color you choose. Experimenting with different colors may require tweaking the color detection parameters.

Yes, but performance may vary. Real-time processing can be demanding, so having a decent CPU/GPU will improve the experience. Lowering the resolution of the video feed can also help with performance.
