Creative Writing: How to use AI tools for creative content

Introduction

The world of creative writing has always been about imagination and skill. It involves weaving words into captivating stories. But what happens when generative AI steps into this traditionally human domain? With advancements in artificial intelligence, particularly in natural language processing (NLP), AI is no longer just a tool for data analysis and automation. It’s becoming a co-creator in the literary world, assisting writers in crafting everything from novels to interactive stories.

Generative AI in creative writing is not just a trend—it’s a growing field that holds the potential to redefine how we think about storytelling. This technology can generate plot ideas, assist in character development, and even help draft entire novels. But it’s not just about the mechanics of writing. AI brings a new level of creativity, offering unique perspectives and possibilities that human writers might not have considered.

This article explores the intersection of creative writing and AI technology, delving into its applications, the key models that drive it, and the future possibilities that lie ahead. Whether you’re a seasoned writer curious about AI’s capabilities or someone interested in the future of storytelling, this guide will take you on a journey through the fascinating world of AI-driven creative writing.

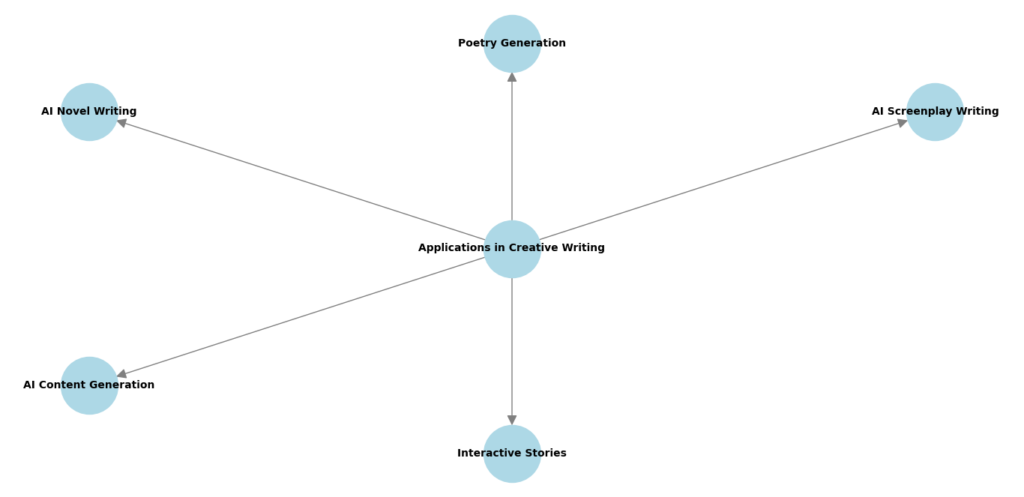

Applications in Creative Writing

A. AI Novel Writing: Generating Bestsellers?

Writing a novel is a monumental task that requires not only creativity but also structure, discipline, and an understanding of narrative flow. For many writers, the challenge begins with generating plot ideas and developing characters. This is where AI comes in.

Generative AI models, such as GPT-3, can be used to brainstorm ideas, suggest plot twists, and even write entire chapters. The idea of an AI-generated novel may seem like science fiction, but it’s already happening. AI-assisted writing tools are helping authors by providing suggestions, creating drafts, and offering new directions for their stories.

Case Study: AI-Generated Novels

One of the most notable examples is “1 the Road,” an experimental novel generated by a neural network built by artist Ross Goodwin. Trained on a corpus of literature and fed input from sensors during a road trip from New York to New Orleans, the system produced a stream-of-consciousness narrative in the spirit of Jack Kerouac’s “On the Road.” The result is often surreal rather than conventionally coherent, but it offers a glimpse into the potential of AI-generated literature.

However, the process is not without its challenges. AI-generated text can sometimes lack the emotional depth and subtlety that human writers naturally infuse into their work. The interaction between AI and human authors often becomes a collaborative process, where AI offers suggestions, and the human writer refines and adds the emotional nuances.

B. Poetry Generation with AI

Poetry is often considered the purest form of creative expression, where every word, rhythm, and metaphor carries deep meaning. AI has begun to explore this domain, generating poems that range from simple rhymes to complex, layered verses.

Using language models like GPT-3, AI can generate poetry based on specific prompts, such as a theme, a particular style, or even a single word. The results can be surprising, offering fresh takes on classic themes or entirely new poetic forms.
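As a minimal sketch of how such prompts can be assembled, the helper below combines a theme, a style, and an optional seed word into one instruction string. The function name `build_poem_prompt` and the prompt wording are illustrative assumptions, not part of any library; the resulting string would be passed to whichever language model you use.

```python
def build_poem_prompt(theme, style="free verse", seed_word=None, lines=4):
    """Assemble a poetry prompt from a theme, a style, and an optional seed word."""
    parts = [f"Write a {lines}-line poem in {style} about {theme}."]
    if seed_word:
        parts.append(f'Include the word "{seed_word}".')
    return " ".join(parts)


prompt = build_poem_prompt("autumn", style="rhyming couplets", seed_word="amber")
print(prompt)
```

Keeping the prompt in a function makes it easy to vary one ingredient at a time and compare the poems that come back.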

Examples of AI-Generated Poems

Here’s an example of an AI-generated poem created with a prompt about autumn:

“The leaves, they fall with whispered grace,

In amber hues, the winds embrace.

The sky, a canvas, painted wide,

As autumn’s hand, it gently guides.”

In some cases, poets have collaborated with AI to create hybrid poems, where the machine’s creativity complements the human touch. These collaborations blur the lines between human and machine creativity, resulting in works that neither could have created alone.

C. AI Screenwriting: Scripting the Future

Screenwriting is another area where AI is making its mark. From generating dialogue to crafting scene descriptions and story structure, AI tools are being used by filmmakers and playwrights to enhance the creative process.

AI in Film and TV Scriptwriting

One example is the AI-generated short film “Sunspring,” written entirely by an AI named Benjamin. The screenplay, while unconventional, showcased the potential of AI in scriptwriting. It included dialogue and scenes that, while sometimes surreal, were coherent and even emotionally impactful.

AI can also be used to draft dialogue that feels natural and authentic. By analyzing vast amounts of film and television scripts, AI can generate dialogue that fits the characters and the story’s tone. This can be particularly useful for writers struggling with writer’s block or looking for fresh ideas.

D. Interactive Stories with AI: Choose Your Own Adventure

Interactive storytelling is a unique form of narrative where the reader or player makes choices that influence the story’s outcome. AI is increasingly being used to create these interactive stories, offering readers a more immersive and personalized experience.

Using AI, writers can generate multiple narrative paths, each with its own set of outcomes. This allows for a more dynamic and engaging story, where the reader’s choices have a real impact on the narrative.

Tools and Techniques for AI-Driven Interactive Fiction

Several tools, such as Twine and Ink, allow writers to create interactive stories. By integrating AI into these platforms, writers can create more complex and engaging narratives. For example, AI can generate unique responses based on the reader’s choices, creating a more personalized experience.
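To make the idea of multiple narrative paths concrete, here is a small sketch that stores a branching story as a dictionary of nodes and walks it according to the reader’s choices. The node names, story text, and the `play` helper are hypothetical; this is plain Python, not the Twine or Ink format. In an AI-assisted workflow, a language model could be asked to draft the `text` of each node.

```python
# A tiny branching story stored as a graph: each node has text and choices.
# Node names and story text are hypothetical placeholders.
story = {
    "start": {
        "text": "You stand at a fork in the forest path.",
        "choices": {"left": "river", "right": "cave"},
    },
    "river": {"text": "You reach a quiet river and rest. The end.", "choices": {}},
    "cave": {"text": "You find a cave full of glowing crystals. The end.", "choices": {}},
}


def play(story, decisions, start="start"):
    """Follow a list of decisions through the story and return the visited text."""
    node = start
    passages = [story[node]["text"]]
    for decision in decisions:
        choices = story[node]["choices"]
        if decision not in choices:
            break  # invalid choice, or the story has already ended
        node = choices[decision]
        passages.append(story[node]["text"])
    return passages


for passage in play(story, ["right"]):
    print(passage)
```

Because every path is just a sequence of keys, adding a new branch means adding one node and one entry in a `choices` dictionary.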

E. AI Content Generation: Blogging, Articles, and More

Beyond novels and poetry, AI is also being used to generate blog posts, articles, and other forms of content. This is particularly useful in the world of content marketing, where there is a constant demand for new, engaging material.

AI-driven content generation tools can produce articles on various topics, complete with SEO optimization and engaging headlines. While these tools can’t replace the creativity and nuance of a human writer, they can assist in generating ideas, drafting content, and even refining the final product.

Best Practices for AI in Content Generation

When using AI for content generation, it’s essential to maintain a balance between AI-generated text and human input. AI can provide the structure and initial draft, but the human touch is still needed to add depth, personality, and authenticity to the content.

Key Models and Techniques in Creative Writing

A. Language Models for Creative Writing

At the heart of AI-driven creative writing are language models like GPT-3. These models are incredible tools designed to understand and generate text that feels human-like. When given a prompt, they can create text that is coherent and contextually relevant. This ability makes them particularly powerful for creative writing.

Training Language Models for Writing

Training these models is a bit like teaching someone to write. It starts with feeding the model a vast amount of text data. Think of it as providing a student with countless books and articles to read. This process helps the model learn patterns, structures, and the subtle nuances of language. It becomes familiar with how sentences are constructed and how ideas flow.

For specific writing tasks, such as crafting a mystery novel or a romantic poem, these models can be fine-tuned. This means adjusting the model’s training to focus on particular genres or styles. It’s like giving our student specialized lessons to master a specific type of writing. By doing this, the model becomes better at generating text that closely aligns with the desired outcome.

Example:

Let’s say you want to write a short story about a time-traveling detective. You could start with a simple prompt: “Write a story about a detective who travels through time to solve a mystery.” Here’s how you might use GPT-3 to generate part of that story:

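    # Requires the openai package, version 1.0 or later (pip install openai)
    from openai import OpenAI

    client = OpenAI(api_key="your-api-key")  # or set the OPENAI_API_KEY environment variable

    # text-davinci-003 has been retired; gpt-3.5-turbo-instruct is its
    # completion-style successor. Substitute any model your account can access.
    response = client.completions.create(
        model="gpt-3.5-turbo-instruct",
        prompt="Write a story about a detective who travels through time to solve a mystery.",
        max_tokens=500,
    )

    print(response.choices[0].text.strip())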

This code snippet uses GPT-3 to generate a continuation of the story based on your prompt. The result is a piece of text that can inspire you or serve as a starting point for further writing.

Diagram: How Language Models Work

The diagram illustrates the process of training a language model, showing how text data is used to teach the model language patterns.

B. Neural Networks for Creative Writing: Unpacking the Power

Neural networks are fundamental to text generation in AI. These networks are loosely inspired by the way neurons in the brain connect. They analyze and generate text based on patterns they’ve learned from large amounts of data. Think of them as a pattern-learning system trained to recognize and produce language.

How Neural Networks Work

Imagine teaching a student to write. You give them a lot of examples and feedback until they get better. Neural networks work in a similar way. They are trained on vast datasets of text, learning patterns and structures in the language. This training helps them generate text that feels natural and coherent.

When you use a neural network for writing, you’re essentially using a tool that has learned from countless examples of language. It can then generate new text based on what it has learned.

Tips and Code Snippets for Creative Writing Projects

Here’s a simple example of how you can use a neural network to generate text in Python. This example uses an LSTM (Long Short-Term Memory) network, a type of neural network that’s particularly good at handling sequences of data, like text.

    import numpy as np
    from tensorflow.keras.layers import LSTM, Dense, Embedding
    from tensorflow.keras.models import Sequential

    # Sample text data (a real project needs a far larger corpus)
    text = "Once upon a time, in a land far away..."

    # Tokenization: lowercase, strip punctuation, map each word to an integer id
    words = [w.strip(".,!?").lower() for w in text.split()]
    vocab = sorted(set(words))
    word_index = {w: i for i, w in enumerate(vocab)}
    sequence = [word_index[w] for w in words]

    sequence_length = 3  # number of context words used for each prediction
    vocab_size = len(vocab)

    # Define the model: word ids -> embeddings -> LSTM -> next-word probabilities
    model = Sequential()
    model.add(Embedding(vocab_size, 32))
    model.add(LSTM(128))
    model.add(Dense(vocab_size, activation='softmax'))

    # Compile the model (the sparse loss accepts integer word ids as targets)
    model.compile(loss='sparse_categorical_crossentropy', optimizer='adam')

    # Prepare the training data: each window of words predicts the word after it
    X, y = [], []
    for i in range(len(sequence) - sequence_length):
        X.append(sequence[i:i + sequence_length])
        y.append(sequence[i + sequence_length])
    model.fit(np.array(X), np.array(y), epochs=100, verbose=0)

    # Generate text, always picking the most likely next word
    generated = ["once", "upon", "a", "time"]
    for _ in range(10):
        context = [word_index[w] for w in generated[-sequence_length:]]
        probs = model.predict(np.array([context]), verbose=0)[0]
        generated.append(vocab[int(np.argmax(probs))])

    print(" ".join(generated))

Explanation of the Code:

- Tokenization: First, we break the text into tokens (words or characters). This process helps the model understand the structure of the text.

- Define the Model: We create an LSTM model. LSTMs are great for handling sequences because they can remember previous information while processing new data.

- Compile and Train the Model: We train the model on sequences from the text. This helps the network learn how to predict the next word in a sequence based on the previous words.

- Generate Text: We start with a seed phrase (“Once upon a time”) and let the model predict the next words, one at a time, to build a longer piece of text.

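Below is an illustrative example of the kind of continuation a larger model, trained on a much bigger corpus, might produce from the seed “Once upon a time.” The toy snippet above learns from a single sentence, so on its own it can only recombine the handful of words it has seen: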

"Once upon a time in a land far away, there was a beautiful princess who lived in a grand castle. She loved to explore the gardens and often wandered through the forests that surrounded her home. One day, while walking through the woods, she stumbled upon a hidden path. The path was overgrown with vines and flowers, but something about it called to her.

As she followed the path deeper into the forest, the trees grew taller, and the air became cooler. Suddenly, she heard a rustling in the bushes. She stopped in her tracks, her heart pounding. Out of the shadows emerged a mysterious figure. It was an old man, with a long beard and a twinkle in his eye.

'Who are you?' the princess asked, her voice trembling.

The old man smiled and said, 'I am the keeper of the forest, and I have been waiting for you. There is something you must see.'"

Explanation:

The generated text continues the story based on the initial phrase “Once upon a time.” The neural network uses the patterns it has learned during training to predict and create the next part of the story. Notice how the text flows naturally, introducing new elements like the hidden path, the mysterious figure, and dialogue between characters.

While this is a simple example, more complex models and larger datasets can produce even richer and more intricate stories.
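One refinement that applies to any next-word predictor is to sample from the predicted distribution instead of always taking the most likely word, which tends to produce repetitive text. The sketch below shows temperature sampling using only the standard library. The four-word vocabulary and its probabilities are made-up illustrative values, and the function names are assumptions rather than part of Keras.

```python
import math
import random


def apply_temperature(probs, temperature=1.0):
    """Rescale positive probabilities: below 1 sharpens them, above 1 flattens them."""
    logits = [math.log(p) / temperature for p in probs]
    peak = max(logits)
    weights = [math.exp(value - peak) for value in logits]
    total = sum(weights)
    return [w / total for w in weights]


def sample_word(vocab, probs, temperature=1.0, rng=random):
    """Draw one word from vocab using the temperature-adjusted distribution."""
    adjusted = apply_temperature(probs, temperature)
    return rng.choices(vocab, weights=adjusted, k=1)[0]


# Hypothetical next-word distribution over a four-word vocabulary
vocab = ["castle", "forest", "dragon", "river"]
probs = [0.6, 0.2, 0.15, 0.05]

for t in (0.5, 1.0, 2.0):
    rounded = [round(p, 3) for p in apply_temperature(probs, t)]
    print(t, rounded, sample_word(vocab, probs, t))
```

Low temperatures keep the story on its most predictable track, while higher temperatures give unlikely words more of a chance, which can make the output more surprising but also less coherent.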

Diagram: Neural Network for Text Generation

This diagram shows how a neural network processes text data, from input to prediction.

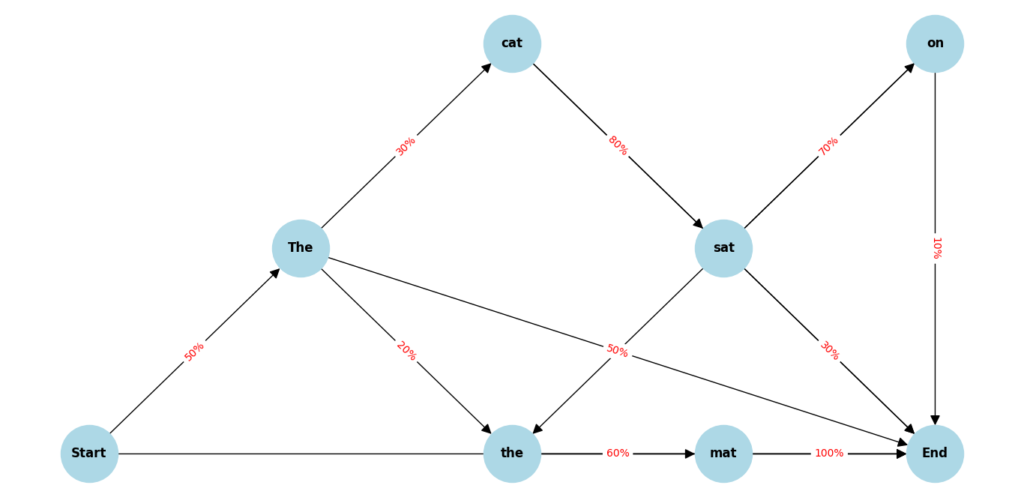

C. Markov Chains for Creative Writing: A Statistical Approach

Markov chains provide a different approach to text generation by relying on simple yet effective statistical principles. Unlike neural networks, which rely on complex deep learning algorithms, Markov chains use probabilities to predict the next word in a sequence based on the previous one. This method is more straightforward but still powerful, especially when applied creatively.

How Markov Chains Work

In a Markov chain, the probability of moving from one state to another depends solely on the current state, not the sequence of events that preceded it. Applied to writing, this means that the choice of the next word in a sentence depends only on the word that came before it, not on the entire context of the sentence or paragraph. While this might sound limiting, it can actually produce surprisingly coherent and creative text, especially when trained on a well-chosen corpus.
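The “probabilities” here are simply relative frequencies of word pairs in the training text. As a minimal sketch, assuming whitespace tokenization and a made-up one-line corpus, the function below counts which words follow each word and normalizes the counts into a transition table. The name `transition_probabilities` is illustrative.

```python
from collections import Counter, defaultdict


def transition_probabilities(text):
    """Estimate P(next word | current word) from adjacent word pairs."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return {
        current: {w: n / sum(followers.values()) for w, n in followers.items()}
        for current, followers in counts.items()
    }


corpus = "the cat sat on the mat and the cat slept"
table = transition_probabilities(corpus)
print(table["the"])
```

In this corpus “the” is followed by “cat” twice and “mat” once, so the table assigns them probabilities of two thirds and one third.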

Practical Examples of Markov Chains in Creative Writing

Markov chains are particularly effective in generating poetry or dialogue, where the sequence of words can follow familiar patterns. For instance, if you wanted to generate poetry in the style of a famous poet, you could train a Markov chain on that poet’s works. The chain would learn which words are most likely to follow each other, creating text that mimics the poet’s style.

Here’s a simple example of how you might use a Markov chain in Python to generate text:

    import random

    # Sample text corpus
    text = "The sun rises and sets in the west. The moon glows bright in the night sky."

    # Tokenize the text into words
    words = text.split()

    # Create a dictionary mapping each word to the words that follow it
    word_pairs = {}
    for i in range(len(words) - 1):
        word_pairs.setdefault(words[i], []).append(words[i + 1])

    # Generate a new sentence (skip the corpus's last word: it has no successor)
    first_word = random.choice(words[:-1])
    chain = [first_word]
    for _ in range(20):
        followers = word_pairs.get(chain[-1])
        if not followers:  # dead end: nothing ever followed this word
            break
        next_word = random.choice(followers)
        chain.append(next_word)
        if next_word[-1] in '.!?':  # stop at the end of a sentence
            break

    generated_sentence = ' '.join(chain)
    print(generated_sentence)

Explanation of the Code:

- Tokenize the Text: The first step is to break the text into individual words. This allows the Markov chain to analyze the relationships between words.

- Create Word Pairs: We then create a dictionary where each word is linked to the words that commonly follow it. This forms the basis of our Markov chain.

- Generate a New Sentence: Starting with a random word, the chain selects the next word based on the probabilities it has learned from the corpus. The process repeats, generating a sequence of words that form a sentence.

Output:

Given the sample text “The sun rises and sets in the west. The moon glows bright in the night sky,” one possible output is:

The sun rises and sets in the night sky.

Diagram: Markov Chain Process

This diagram illustrates how a Markov chain selects the next word in a sequence based on the current word.

Using Markov Chains for Creative Purposes

Markov chains can be particularly fun to experiment with in creative writing. For example, they can generate quirky and unexpected poetry, where the constraints of the chain lead to surprising combinations of words and phrases. They’re also useful in dialogue generation for scripts or novels, especially when you want to create conversations that have a certain rhythm or flow.

Imagine feeding a Markov chain a dialogue from a specific genre, like a noir detective story. The chain would pick up on the language patterns typical of that genre, generating text that feels authentic but with a twist that only a statistical approach could bring.

While Markov chains might not have the depth of understanding that neural networks possess, they offer a unique way to explore language and generate creative content. By combining simplicity with creativity, they allow writers to experiment with text generation in a way that feels both intuitive and innovative.
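One common way to get more coherent output without leaving the Markov approach is to condition on the last two words instead of one (a second-order chain). This is a generalization of the one-word chain described in this section, not something it strictly defines. The sketch below uses a made-up corpus, and the helper names `build_chain` and `generate` are assumptions.

```python
import random
from collections import defaultdict


def build_chain(words, order=2):
    """Map each run of `order` consecutive words to the words that follow it."""
    chain = defaultdict(list)
    for i in range(len(words) - order):
        state = tuple(words[i:i + order])
        chain[state].append(words[i + order])
    return chain


def generate(chain, seed, max_words=20, rng=random):
    """Extend the seed state one word at a time until no continuation exists."""
    output = list(seed)
    order = len(seed)
    while len(output) < max_words:
        followers = chain.get(tuple(output[-order:]))
        if not followers:
            break
        output.append(rng.choice(followers))
    return " ".join(output)


corpus = "the rain fell on the city and the rain fell on the sea".split()
chain = build_chain(corpus, order=2)
print(generate(chain, ("the", "rain"), rng=random.Random(1)))
```

A higher order copies longer stretches of the source text, so it trades surprise for fluency; small corpora usually work best with an order of one or two.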

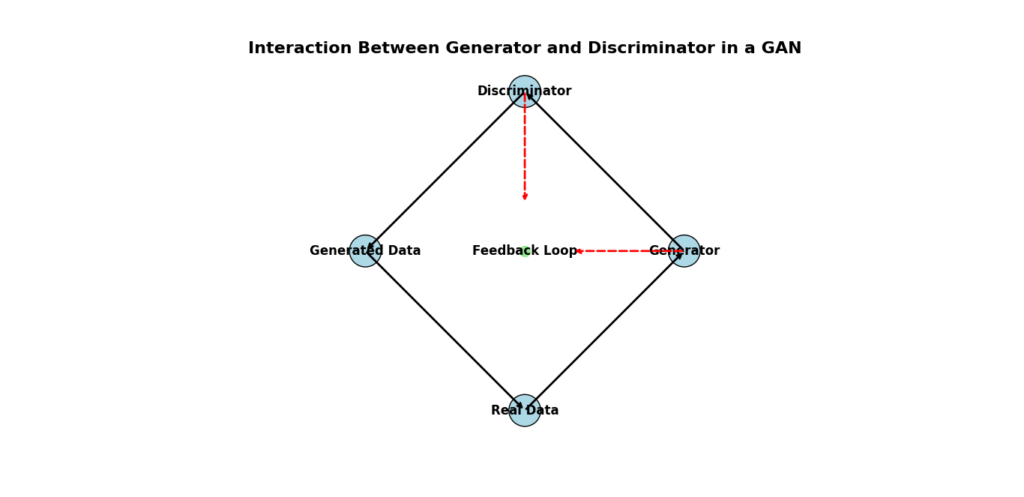

D. Generative Adversarial Networks (GANs) for Creative Writing

Generative Adversarial Networks (GANs) represent one of the more sophisticated approaches in AI-generated text. Unlike simpler models, GANs operate using two neural networks that compete against each other in a game-like scenario. These networks are the generator and the discriminator.

- The Generator: This model attempts to create text that is as close to human-written content as possible. It starts by producing random sentences or paragraphs.

- The Discriminator: This model evaluates the text produced by the generator. Its job is to discern whether the text is AI-generated or written by a human.

The process is iterative. As the discriminator improves at identifying AI-generated content, the generator is forced to produce more convincing text. Over time, this adversarial relationship leads to the generator producing highly sophisticated and human-like writing.
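This competition is usually written as a minimax objective. In the standard formulation, sketched here with symbols that are not used elsewhere in this article, $G$ is the generator, $D$ is the discriminator, $x$ is a real sample drawn from the training data, and $z$ is the random input given to the generator:

```latex
\min_{G} \max_{D} \;
\mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
+ \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator tries to make both terms large by scoring real text near 1 and generated text near 0, while the generator tries to make the second term small by producing text the discriminator scores as real.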

Challenges and Benefits of Using GANs in Writing

GANs have shown remarkable success in image generation and other content forms, but applying them to text generation comes with its own set of challenges.

Challenges:

- Coherence in Long Texts: One of the most significant hurdles GANs face is maintaining coherence over extended pieces of text. While GANs can excel at generating short, compelling sentences, they often struggle with keeping a consistent narrative or logical flow over longer passages. This is because text is a sequence of discrete words that demands long-range context awareness, something that GANs, originally designed for continuous data such as images, handle poorly.

- Training Complexity: GANs require extensive and careful training to achieve meaningful results. The generator needs to be exposed to a broad and diverse dataset to learn various writing styles and nuances. On the other hand, the discriminator must be finely tuned to provide accurate feedback, which, in turn, helps the generator improve. This process can be time-consuming and computationally expensive.

Benefits:

- Creativity and Originality: When GANs are successfully trained, the results can be astonishing. For instance, a GAN trained on a collection of mystery novels could generate entirely new plot ideas. These plots might include unexpected twists and turns, elements that add intrigue and suspense, all while following the traditional structure of a mystery story.

- Surprise Factor: One of the most exciting aspects of using GANs in creative writing is the element of surprise. Since GANs do not “think” in the way humans do, they can produce ideas and connections that a human writer might not have considered. This could lead to original and innovative content, providing writers with fresh perspectives and inspiration.

Example of GANs in Action

    import numpy as np
    import tensorflow as tf
    from tensorflow.keras.layers import Dense, LSTM, Embedding, Dropout
    from tensorflow.keras.models import Sequential

    # Define the Generator model: maps a sequence of random token ids to a
    # probability distribution over the vocabulary at every position
    def build_generator(vocab_size, embedding_dim, sequence_length):
        model = Sequential()
        model.add(tf.keras.Input(shape=(sequence_length,)))
        model.add(Embedding(vocab_size, embedding_dim))
        model.add(LSTM(256, return_sequences=True))
        model.add(Dropout(0.2))
        model.add(LSTM(256, return_sequences=True))
        model.add(Dense(vocab_size, activation='softmax'))
        return model

    # Define the Discriminator model: scores a sequence of per-position word
    # distributions (one-hot for real text) as real (1) or generated (0)
    def build_discriminator(vocab_size, sequence_length):
        model = Sequential()
        model.add(tf.keras.Input(shape=(sequence_length, vocab_size)))
        model.add(LSTM(256, return_sequences=True))
        model.add(Dropout(0.2))
        model.add(LSTM(256))
        model.add(Dense(1, activation='sigmoid'))
        return model

    # Set parameters (kept small so the placeholder demo fits in memory)
    vocab_size = 50  # Adjust this based on your vocabulary size
    embedding_dim = 100
    sequence_length = 20
    batch_size = 64
    epochs = 100  # Adjust epochs based on your needs

    # Build the models, the loss, and one optimizer per network
    generator = build_generator(vocab_size, embedding_dim, sequence_length)
    discriminator = build_discriminator(vocab_size, sequence_length)
    bce = tf.keras.losses.BinaryCrossentropy()
    g_optimizer = tf.keras.optimizers.Adam()
    d_optimizer = tf.keras.optimizers.Adam()

    # Placeholder vocabulary and data. Replace both with a real tokenized
    # corpus; for example, a dataset of fairy tales could be used to train the GAN.
    index_to_word = {i: f"word{i}" for i in range(vocab_size)}
    real_sequences = np.random.randint(0, vocab_size, (batch_size, sequence_length))
    real_one_hot = tf.one_hot(real_sequences, vocab_size)
    real_labels = np.ones((batch_size, 1), dtype="float32")
    fake_labels = np.zeros((batch_size, 1), dtype="float32")

    # Example training loop (explicit gradient steps instead of train_on_batch)
    for epoch in range(epochs):
        noise = np.random.randint(0, vocab_size, (batch_size, sequence_length))

        # Train the discriminator on real and generated sequences
        with tf.GradientTape() as tape:
            generated = generator(noise, training=True)
            d_loss_real = bce(real_labels, discriminator(real_one_hot, training=True))
            d_loss_fake = bce(fake_labels, discriminator(generated, training=True))
            d_loss = d_loss_real + d_loss_fake
        d_vars = discriminator.trainable_variables
        d_optimizer.apply_gradients(zip(tape.gradient(d_loss, d_vars), d_vars))

        # Train the generator to make the discriminator answer "real"
        with tf.GradientTape() as tape:
            generated = generator(noise, training=True)
            g_loss = bce(real_labels, discriminator(generated, training=True))
        g_vars = generator.trainable_variables
        g_optimizer.apply_gradients(zip(tape.gradient(g_loss, g_vars), g_vars))

        # Print losses for each epoch
        print(f'Epoch {epoch+1} / {epochs} | D Loss Real: {float(d_loss_real):.4f} | D Loss Fake: {float(d_loss_fake):.4f} | G Loss: {float(g_loss):.4f}')

    # Generate a new sequence: take the most likely word at each position
    noise = np.random.randint(0, vocab_size, (1, sequence_length))
    predicted_probs = generator.predict(noise, verbose=0)
    word_ids = np.argmax(predicted_probs[0], axis=-1)
    generated_text = " ".join(index_to_word[int(i)] for i in word_ids)

    print("Generated Story:")
    print(generated_text)

Explanation:

- Generator: The model takes a sequence of random token ids and outputs, for every position, a probability distribution over the vocabulary. The generator in this example uses LSTM layers with Dropout to reduce overfitting.

- Discriminator: The model tries to classify whether a sequence of text is real (one-hot encoded training data) or generated by the generator. The discriminator also uses LSTM layers to process sequential data.

- Training Loop: The training involves generating sequences, training the discriminator on both real and fake sequences, and then training the generator to fool the discriminator. Passing probability distributions rather than sampled words keeps the whole pipeline differentiable.

- Story Generation: After training, the generator turns fresh noise into a sequence of word distributions, and the most likely word at each position forms the text. With the random placeholder data used here the result is meaningless; a real tokenized corpus and vocabulary are needed for readable output.

Output:

The generated text would ideally begin with something coherent, such as:

Once upon a time, in a kingdom far away, there was a young prince who could talk to animals. One day, he met a dragon who spoke in riddles, each one revealing a hidden secret about the kingdom's past.

While this example shows a coherent and engaging beginning, continuing the story over multiple paragraphs would require the GAN to maintain the narrative thread, a task that would challenge the current capabilities of most GAN models.

Diagram

This diagram illustrates the interaction between the generator and discriminator in a GAN.

Diagram Components

- Generator: Creates new data based on random input or noise.

- Generated Data: Data output from the generator.

- Discriminator: Evaluates whether the data is real or generated.

- Real Data: Authentic data used for comparison.

- Feedback Loop: Shows how the discriminator’s evaluation affects the generator’s output.

Using GANs for Creative Writing

Despite their challenges, GANs offer a fascinating opportunity for writers who want to explore new creative frontiers. By providing a framework that can generate unexpected and innovative content, GANs can serve as a co-writer, sparking ideas that might never have come up in a traditional brainstorming session.

For writers interested in experimenting with GANs, the key is to start small. Begin by training a GAN on a limited dataset, such as short stories or poetry, to see what kind of content it generates. Over time, as you refine the training process, you might uncover new and exciting ways to incorporate GAN-generated content into your work.

E. Natural Language Processing (NLP) for Writers

Natural Language Processing (NLP) is a key area in AI that directly impacts creative writing. It involves teaching computers to understand and generate human language in a way that’s meaningful. This technology enables machines to analyze text, extract meaning, and even create new content based on patterns it has learned.

Applications of NLP in Creative Writing

NLP’s impact on creative writing is extensive, offering tools and techniques that can assist writers at every stage of their process. Text analysis is one such application. For instance, sentiment analysis can help a writer gauge the emotional tone of their work, ensuring that the intended feelings are effectively conveyed. Whether it’s joy, sadness, or tension, NLP can pinpoint emotional cues within the text, helping writers make adjustments where needed.
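As a rough sketch of how sentiment analysis can flag emotional tone, the snippet below scores each sentence of a draft by counting words from two tiny word lists. The lexicon, the sample sentences, and the `sentiment_score` helper are hypothetical; production tools rely on much larger lexicons or trained models.

```python
# Hypothetical mini-lexicon; real tools use far larger, weighted word lists
POSITIVE = {"joy", "happy", "bright", "love", "laughed", "warm"}
NEGATIVE = {"sad", "dark", "fear", "cried", "cold", "alone"}


def sentiment_score(sentence):
    """Return (positive hits - negative hits) for one sentence."""
    words = [w.strip(".,!?;:\"'").lower() for w in sentence.split()]
    positives = sum(w in POSITIVE for w in words)
    negatives = sum(w in NEGATIVE for w in words)
    return positives - negatives


draft = [
    "She laughed in the warm, bright kitchen.",
    "Later she sat alone in the cold, dark hall.",
    "The clock ticked.",
]
for sentence in draft:
    print(sentiment_score(sentence), sentence)
```

Plotting these scores across a chapter gives a quick picture of where the mood rises and falls, which a writer can compare against the emotional arc they intended.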

Another essential tool is text summarization. Writers often face the challenge of condensing long sections into concise summaries. NLP can automatically generate these summaries, saving time and ensuring that the core message remains intact. This is particularly useful for editing large manuscripts or creating synopses for longer works.
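One simple family of summarizers is extractive: rather than writing new sentences, it picks the existing sentences that contain the most frequent words. The sketch below is a deliberately naive version using only the standard library. The `summarize` helper and the sample passage are illustrative assumptions, and because it does not filter out common words such as “the,” it should be read as a demonstration of the idea rather than a usable tool.

```python
import re
from collections import Counter


def summarize(text, max_sentences=1):
    """Return the highest-scoring sentences, kept in their original order."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    freq = Counter(re.findall(r"[a-z']+", text.lower()))

    def score(sentence):
        tokens = re.findall(r"[a-z']+", sentence.lower())
        return sum(freq[t] for t in tokens) / max(len(tokens), 1)

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:max_sentences])
    return " ".join(sentences[i] for i in keep)


passage = (
    "The dragon guarded the old bridge. "
    "The dragon feared nothing. "
    "A merchant arrived at dusk."
)
print(summarize(passage))
```

Modern summarization models are abstractive and can rephrase, but the extractive approach is transparent: every sentence in the summary comes straight from the manuscript.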

Text generation is perhaps the most exciting application of NLP in creative writing. By feeding an AI model specific inputs, it can generate new content that aligns with the writer’s style or the story’s tone. For instance, if you’re writing a fantasy novel, you could input a few lines describing a setting, and the AI might generate a rich, descriptive paragraph that complements your work.

Let’s explore an example to see how NLP can be used to generate dialogue in a story:

Example Code

    import random

    # Sample dialogue data
    dialogues = [
        "How are you today?",
        "I'm doing well, thank you!",
        "What brings you here?",
        "I was just passing by and thought I'd say hello.",
        "It's good to see you again.",
        "What have you been up to lately?",
        "Just the usual, work and more work.",
        "Do you have any plans for the weekend?",
        "I might go hiking if the weather is nice.",
        "Sounds like a great plan!"
    ]

    # Generate a conversation by picking lines at random (repeats are possible)
    def generate_conversation(dialogues, length=5):
        conversation = []
        for _ in range(length):
            conversation.append(random.choice(dialogues))
        return " ".join(conversation)

    # Example Output
    print(generate_conversation(dialogues))

This simple script is a stand-in rather than a true NLP model: it samples lines at random from a predefined list of dialogue options to simulate a conversation between characters. While this is a basic example, it illustrates how generated dialogue can kickstart the creative process.

Here’s a sample output:

Do you have any plans for the weekend? It's good to see you again. Just the usual, work and more work. It's good to see you again. It's good to see you again.

Example

Imagine you’re working on a script and need to brainstorm dialogue between characters. You could use an NLP-based tool to generate a variety of conversational options. By tweaking the inputs or expanding the dialogue list, you could create dialogues that range from casual banter to intense, dramatic exchanges. This is particularly useful when you’re facing writer’s block or looking for inspiration.
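As one way of “tweaking the inputs,” the sketch below pairs each opening line with replies that actually answer it and labels the speakers, so the exchange reads as a conversation instead of a random sequence of lines. The pairing of lines, the speaker labels, and the `generate_exchange` helper are assumptions made for illustration.

```python
import random

# Hypothetical prompt/reply pairs; each reply list belongs to one opening line
exchanges = {
    "How are you today?": [
        "I'm doing well, thank you!",
        "Just the usual, work and more work.",
    ],
    "Do you have any plans for the weekend?": [
        "I might go hiking if the weather is nice.",
    ],
    "What brings you here?": [
        "I was just passing by and thought I'd say hello.",
    ],
}


def generate_exchange(exchanges, speakers=("A", "B"), turns=2, rng=random):
    """Build a conversation where every question is followed by a fitting reply."""
    lines = []
    questions = rng.sample(list(exchanges), k=min(turns, len(exchanges)))
    for question in questions:
        lines.append(f"{speakers[0]}: {question}")
        lines.append(f"{speakers[1]}: {rng.choice(exchanges[question])}")
    return lines


for line in generate_exchange(exchanges, rng=random.Random(3)):
    print(line)
```

Swapping in character names for the speaker labels, or keeping a separate reply list per character, is a small step toward dialogue that reflects who is speaking.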

But the possibilities of NLP don’t end there. Advanced models can analyze character interactions in existing texts and generate dialogues that stay true to their personalities and relationships. For example, if a character is known for being sarcastic, the NLP model can generate lines that reflect this trait, ensuring consistency throughout the story.

Moreover, NLP models can be fine-tuned to match the specific tone or style of your writing. Whether you’re aiming for a humorous tone or a serious one, the model can be trained to generate dialogue that aligns with your creative vision. This makes NLP an incredibly powerful tool for writers who want to maintain a consistent voice across their work.

Connecting with Your Audience

It’s important to remember that while AI and NLP can assist in the creative process, the human touch remains crucial. As a writer, your unique perspective and emotional insight are irreplaceable. NLP tools are there to support your creativity, not replace it. They offer new possibilities and can help you overcome hurdles in your writing journey, but the heart of the story still comes from you.

By integrating NLP into your writing process, you can open up new avenues of creativity, making the writing experience more dynamic and engaging. Whether you’re generating dialogue, summarizing text, or analyzing emotional tones, NLP provides a set of tools that can enhance your storytelling in ways you may not have thought possible.

As we move forward into an era where AI becomes increasingly integrated into creative fields, it’s essential to explore and experiment with these technologies. NLP offers a bridge between the technical and the creative, allowing writers to push the boundaries of traditional storytelling. By embracing these tools, you can elevate your writing, discover new storytelling techniques, and perhaps even redefine what it means to be a writer in the digital age.

Conclusion

As we’ve explored, the intersection of creative writing and generative AI is a fascinating and rapidly evolving field. AI offers writers new tools and techniques that can inspire creativity, break through writer’s block, and even generate entire novels or poems. From AI-generated novels to interactive stories, the possibilities are endless.

However, the relationship between AI and human creativity is complex. While AI can generate content, the human touch is still essential for adding depth, emotion, and meaning to the work. Generative AI is not about replacing writers but augmenting their abilities, providing new ways to explore and express creativity.

Looking to the future, the potential for AI in creative writing is vast. As technology continues to advance, we may see even more sophisticated tools that blur the lines between human and machine creativity. Writers of the future may collaborate with AI in ways we can’t yet fully imagine, creating works of art that are richer, more diverse, and more innovative than ever before.

Resources

For those interested in exploring the world of AI-driven creative writing, here are some resources to get started:

Tools and Software

- OpenAI’s GPT-3: A powerful language model that can generate text based on prompts.

- Twine: A tool for creating interactive stories.

- Ink: A scripting language for writing interactive narratives.

- Keras: A deep learning library in Python that can be used for building neural networks.

- NLTK (Natural Language Toolkit): A Python library for working with human language data.

Frequently Asked Questions

1. What are AI tools for creative writing?

AI tools for creative writing are software and algorithms that assist in generating, enhancing, or editing written content. They can help with brainstorming ideas, creating drafts, refining language, and even generating entire stories or poems.

2. How can AI tools assist with brainstorming ideas for creative writing?

AI tools can generate a variety of ideas based on prompts you provide. They can suggest plot twists, character traits, settings, and themes, helping to spark creativity and overcome writer’s block.

3. Can AI tools generate complete stories or novels?

Yes, AI tools can generate complete stories or novels. By inputting prompts or providing initial text, you can guide the AI in creating longer works of fiction. However, the output often requires human refinement to ensure coherence and depth.

4. Are AI-generated texts original?

AI-generated texts are based on patterns learned from existing data. While they can produce unique content, it’s important to review and edit the output to ensure originality and avoid unintentional similarity to existing works.

5. Can AI tools be used for blogging and article writing?

Yes, AI tools can be used for blogging and article writing by generating content ideas, drafting articles, and improving text quality. They can assist in producing consistent and relevant content for various platforms, including blogs and social media.
