How to Develop a Real-Time Translation of Natural Language

Introduction to Real-Time Translation

Overview of Real-Time Translation

What is Real-Time Translation?

Real-time translation refers to the process of translating spoken or written language instantly, so people can understand each other even if they speak different languages. Imagine you’re in a meeting with people from all over the world. With real-time translation, everyone can hear and understand the conversation in their own language, almost as if they were all speaking the same language.

Why is it Important?

Real-time translation is crucial for global communication and business. It helps break down language barriers, allowing people from different countries to work together smoothly. This technology is widely used in international meetings, customer support, travel, and many other areas where clear communication is key. By using real-time translation, businesses can expand their reach and connect with a larger audience without being limited by language differences.

Purpose and Scope

What Will This Guide Do?

This guide aims to provide a clear understanding of real-time translation. We’ll explore how it works, its benefits, and how you can develop or use these systems. Whether you’re interested in the technology behind it or looking to implement it in your own projects, this guide will give you the insights you need.

Who Should Read This Guide?

This guide is perfect for anyone interested in learning more about real-time translation, including those involved in business, technology, or communication. If you’re a developer working on language translation systems or someone looking to understand how these systems can be used effectively, this guide is for you.

- Real-time translation: The core topic of this guide, focusing on instant language translation.

- Natural language translation: Refers to the automatic translation of spoken or written language.

- Language translation: A broad term covering any translation between languages.

- Real-time translation guide: The type of resource this guide represents.

- Developing language translation systems: The process of creating systems that can translate languages in real-time.

Understanding Natural Language Processing (NLP)

Basics of NLP

What is NLP and Why is it Important?

Natural Language Processing (NLP) is a field of artificial intelligence focused on making computers understand and work with human language. It’s like teaching a computer to read and interpret text or speech in a way that makes sense. NLP is crucial in translation because it helps computers convert text from one language to another while keeping the meaning intact. This technology is behind many tools we use daily, like translation apps and voice assistants.

Key Components of NLP

To make NLP work effectively, several key processes are involved:

- Tokenization: This is the first step where text is broken down into smaller pieces called tokens, such as words or phrases. It’s like splitting a sentence into individual words.

- Parsing: This process involves analyzing the structure of the text to understand its grammatical elements. Parsing helps the computer understand how words relate to each other in a sentence.

- Named Entity Recognition (NER): NER helps the computer identify and categorize important elements in the text, such as names of people, places, or organizations.
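As a minimal sketch of the tokenization step described above, the snippet below splits a sentence into word and punctuation tokens using only Python's standard re module. The tokenize function name and the regular expression are illustrative assumptions, not part of any particular NLP library. Parsing and NER are not shown because they normally rely on trained models.

```python
import re

def tokenize(text):
    """Split text into word tokens and single punctuation tokens."""
    # \w+ matches a run of letters/digits; [^\w\s] matches one punctuation mark
    return re.findall(r"\w+|[^\w\s]", text)

tokens = tokenize("She is eating an apple.")
print(tokens)  # ['She', 'is', 'eating', 'an', 'apple', '.']
```

A pattern this simple splits contractions such as "don't" into three tokens, which is one reason real systems tend to use trained tokenizers instead.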

Challenges in NLP for Translation

Ambiguity and Context

One of the biggest challenges in NLP is dealing with ambiguity. Words and phrases can have multiple meanings depending on the context. For example, the word “bank” could mean a financial institution or the side of a river. NLP needs to understand the context to make the right choice.
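The idea of using context to pick a meaning can be sketched with a toy example. The code below chooses the sense of "bank" whose cue words overlap most with the sentence. The SENSES table, the cue words, and the disambiguate function are all hypothetical and hand-made for illustration; real systems learn this from data rather than from fixed word lists.

```python
# Hypothetical sense inventory: each sense lists words that tend to appear near it
SENSES = {
    "bank": {
        "financial institution": {"money", "loan", "account", "deposit"},
        "river side": {"river", "water", "fishing", "shore"},
    }
}

def disambiguate(word, sentence):
    """Pick the sense whose cue words overlap most with the sentence."""
    context = set(sentence.lower().replace(".", "").split())
    senses = SENSES[word]
    # max() keeps the first sense listed when two senses tie
    return max(senses, key=lambda sense: len(senses[sense] & context))

print(disambiguate("bank", "She opened an account at the bank."))  # financial institution
print(disambiguate("bank", "They sat on the bank of the river."))  # river side
```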

Idiomatic Expressions

Idioms are phrases where the meaning isn’t obvious from the individual words, like “kick the bucket” meaning “to die.” Translating these correctly can be tricky because their meanings don’t always translate directly into other languages.

Handling Diverse Languages and Dialects

Languages vary greatly across regions and cultures. NLP must be flexible enough to handle different languages and their unique features, including various dialects and regional expressions. This is essential for accurate translation in a global context.

Technologies Used in NLP

Machine Learning (ML) and Deep Learning (DL)

Machine Learning and Deep Learning are techniques used to improve NLP. ML involves training algorithms on large datasets to recognize patterns in language, while DL, a subset of ML, uses neural networks to learn from data in a more complex way. These techniques help improve translation accuracy by learning from vast amounts of text data.

Pre-Trained Language Models

Models like BERT and GPT are examples of pre-trained language models that have already learned from large datasets. They are used in NLP to understand and generate text more accurately. For example, GPT can generate human-like text and BERT can understand the context of words in a sentence, making them powerful tools for translation.

- Natural Language Processing (NLP): The technology used to make sense of human language.

- NLP basics: Fundamental concepts and processes involved in NLP.

- Components: Key parts of NLP like tokenization, parsing, and named entity recognition.

- NLP challenges: Difficulties faced in translating languages, such as ambiguity and idiomatic expressions.

- Language ambiguity in translation: Issues with words or phrases having multiple meanings.

- Deep learning in NLP translation: Using advanced machine learning techniques to improve translation.

- Pre-trained language models for translation: Models like BERT and GPT that are used to enhance translation capabilities.

Components of Real-Time Translation Systems

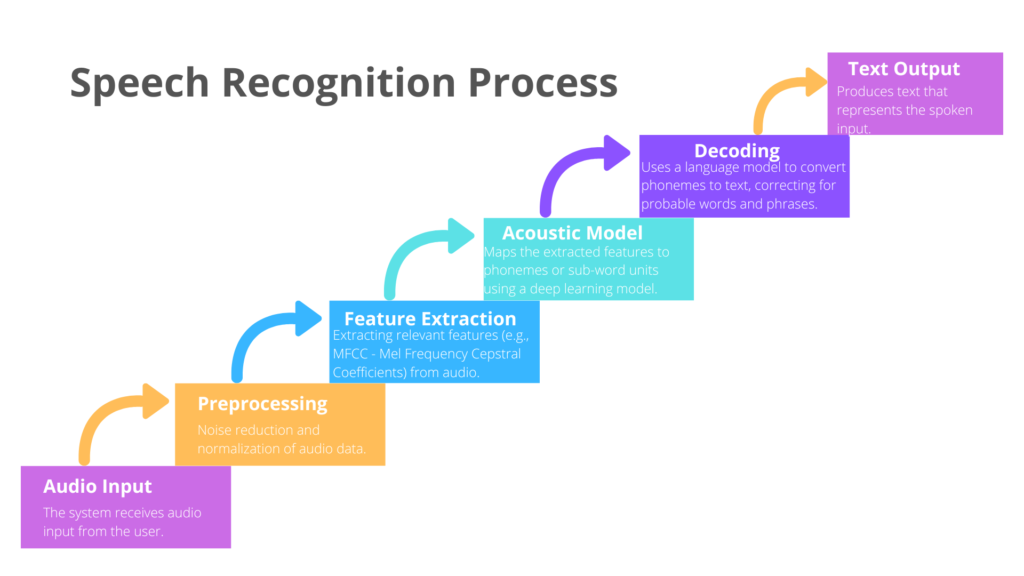

Speech Recognition

How Speech Recognition Works

Speech recognition technology allows computers to understand and process spoken language. It converts spoken words into text, which is the first step in translating speech from one language to another.

Here’s a simple explanation of how it works:

- Capture Audio: The system listens to the audio input through a microphone or other recording device.

- Convert Audio to Text: It uses algorithms to convert the spoken words into written text.

- Process Text: The text is then processed by translation software to translate it into another language.

Popular Speech Recognition APIs

There are several APIs (Application Programming Interfaces) that can be used for speech recognition. These are tools provided by companies to make it easier to add speech recognition to your applications.

Google Speech-to-Text API

Google’s Speech-to-Text API is a popular choice for converting audio into text. It supports multiple languages and is known for its accuracy.

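Here’s a basic example in Python. It uses the SpeechRecognition library (imported as speech_recognition), whose recognize_google method sends audio to Google’s Web Speech API rather than the Cloud Speech-to-Text API. The library’s recognize_google_cloud method targets the Cloud service instead. Microphone input also requires the PyAudio package.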

import speech_recognition as sr

# Initialize recognizer
recognizer = sr.Recognizer()

# Capture audio from the microphone
with sr.Microphone() as source:
    print("Say something:")
    audio = recognizer.listen(source)

# Use Google speech recognition to transcribe the audio
try:
    text = recognizer.recognize_google(audio)
    print("You said: " + text)
except sr.UnknownValueError:
    print("Google Speech Recognition could not understand audio")
except sr.RequestError:
    print("Could not request results from Google Speech Recognition service")

Output

Say something:

[You speak something]

You said: Hello, how are you today?

IBM Watson Speech-to-Text API

IBM Watson also offers a robust Speech-to-Text service, which can be used to convert audio into text.

Here’s how you might use IBM Watson’s Speech-to-Text API in Python:

from ibm_watson import SpeechToTextV1
from ibm_cloud_sdk_core.authenticators import IAMAuthenticator

# Set up the authenticator and service
authenticator = IAMAuthenticator('your-api-key')
speech_to_text = SpeechToTextV1(authenticator=authenticator)
speech_to_text.set_service_url('your-service-url')

# Read audio from a file
with open('audio-file.wav', 'rb') as audio_file:
    result = speech_to_text.recognize(audio=audio_file, content_type='audio/wav').get_result()

# Print the transcribed text
print("You said: " + result['results'][0]['alternatives'][0]['transcript'])

Output

You said: Hello, how are you today?

- Speech recognition for translation: Using speech recognition technology as a component of translation systems.

- Google Speech-to-Text API: Google’s service for converting spoken language into text.

- IBM Watson Speech-to-Text: IBM’s service for speech recognition, which can transcribe audio into text.

Understanding how speech recognition works and the tools available can help you effectively integrate speech-to-text capabilities into real-time translation systems. This is the first step towards creating systems that can understand and translate spoken language instantly.

Language Translation Engines

Language translation engines are systems designed to translate text from one language to another. There are different approaches to creating these engines, each with its own strengths and weaknesses. Here’s a look at the main types: rule-based, statistical, and neural machine translation (NMT).

1. Rule-Based Translation Engines

Rule-based translation engines use a set of predefined rules to translate text. These rules are based on grammar, vocabulary, and syntax of both the source and target languages. This approach requires extensive manual work to create and refine the rules.

Example:

If you’re translating the English sentence “She is eating an apple” into French, a rule-based system would use grammar rules to convert it into “Elle mange une pomme.”

Pros:

- Can be very accurate if the rules are well-defined.

- Good for languages with well-understood grammar structures.

Cons:

- Requires a lot of manual effort to create and maintain the rules.

- May not handle idiomatic expressions or complex sentences well.
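To make the rule-based idea concrete, here is a toy sketch that translates the example sentence with a tiny lexicon and a single grammar rule. The LEXICON and PROGRESSIVE tables and the translate_rule_based function are hypothetical and cover only this sentence; for instance, "an" is hard-coded to "une" because "pomme" is feminine, which a real system would need an agreement rule for.

```python
# Hypothetical word-for-word lexicon (English -> French)
LEXICON = {"she": "elle", "an": "une", "apple": "pomme"}

# Rule data: English "is <verb>ing" maps to a single French present-tense verb
PROGRESSIVE = {"eating": "mange"}

def translate_rule_based(sentence):
    words = sentence.lower().rstrip(".").split()
    output = []
    i = 0
    while i < len(words):
        # Grammar rule: "is" + known -ing verb collapses into one conjugated verb
        if words[i] == "is" and i + 1 < len(words) and words[i + 1] in PROGRESSIVE:
            output.append(PROGRESSIVE[words[i + 1]])
            i += 2
        else:
            # Lexicon lookup; unknown words are passed through unchanged
            output.append(LEXICON.get(words[i], words[i]))
            i += 1
    if not output:
        return ""
    result = " ".join(output)
    return result[0].upper() + result[1:] + "."

print(translate_rule_based("She is eating an apple."))  # Elle mange une pomme.
```

Every new word or construction needs another table entry or rule, which illustrates the maintenance cost listed under Cons.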

2. Statistical Machine Translation (SMT)

Statistical machine translation relies on statistical models to translate text. It uses large amounts of text data to learn how words and phrases are typically translated. This approach does not rely on predefined rules but on patterns found in the data.

Example:

SMT systems would analyze a large corpus of English and French texts to learn that “She is eating an apple” is often translated as “Elle mange une pomme.”

Pros:

- Can handle a wide range of language pairs and text types.

- Adapts to new languages and phrases over time as more data is used.

Cons:

- May produce less accurate translations if the data is not diverse.

- Can struggle with complex or context-dependent sentences.
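The core statistical idea, picking the translation seen most often in the data, can be sketched with a toy phrase table. The ALIGNED_PAIRS list below is a hand-made stand-in for a parallel corpus, and build_phrase_table and best_translation are hypothetical helper names. Real SMT systems also learn the alignments themselves and combine several models, which this sketch leaves out.

```python
from collections import Counter, defaultdict

# Hypothetical aligned phrase pairs standing in for a large parallel corpus
ALIGNED_PAIRS = [
    ("an apple", "une pomme"),
    ("an apple", "une pomme"),
    ("an apple", "la pomme"),
    ("she is eating", "elle mange"),
    ("she is eating", "elle mange"),
    ("she is eating", "elle est en train de manger"),
]

def build_phrase_table(pairs):
    """Count how often each source phrase is paired with each target phrase."""
    table = defaultdict(Counter)
    for source, target in pairs:
        table[source][target] += 1
    return table

def best_translation(table, phrase):
    """Return the most frequent target phrase and its relative frequency."""
    counts = table.get(phrase)
    if not counts:
        return None  # phrase never seen in the data
    target, count = counts.most_common(1)[0]
    return target, count / sum(counts.values())

table = build_phrase_table(ALIGNED_PAIRS)
target, probability = best_translation(table, "an apple")
print(f"{target} (p={probability:.2f})")  # une pomme (p=0.67)
```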

3. Neural Machine Translation (NMT)

Neural Machine Translation uses deep learning techniques to translate text. NMT models, typically built on architectures such as the Transformer, are trained on vast amounts of data to capture the context and meaning of words and sentences. NMT is known for its high accuracy and ability to generate more natural-sounding translations.

Overview of NMT and Its Advantages

NMT translates text by understanding the context of entire sentences rather than just word-for-word translation. It uses sophisticated models like Transformer networks to achieve this.

Example:

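Here’s a simple example using a pre-trained MarianMT model (Helsinki-NLP/opus-mt-en-fr) for English-to-French translation. We’ll use the Hugging Face Transformers library to perform this translation in Python. The MarianTokenizer also needs the sentencepiece package installed.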

from transformers import MarianMTModel, MarianTokenizer

# Load pre-trained MarianMT model and tokenizer
model_name = 'Helsinki-NLP/opus-mt-en-fr'
tokenizer = MarianTokenizer.from_pretrained(model_name)
model = MarianMTModel.from_pretrained(model_name)

# Define the text to translate
text = "She is eating an apple."

# Tokenize and translate
tokens = tokenizer(text, return_tensors='pt', padding=True)
translated = model.generate(**tokens)

# Decode the translated text
translated_text = tokenizer.decode(translated[0], skip_special_tokens=True)
print("Translated text:", translated_text)

Output

Translated text: Elle est en train de manger une pomme.

Advantages of NMT:

- Context-Aware: Understands the meaning of entire sentences, which helps produce more accurate translations.

- Natural Sounding: Produces translations that sound more natural and fluent.

- Adaptable: Can handle various languages and complex sentences more effectively.

Key Takeaways

- Rule-based translation engines: Systems that use predefined rules for translation.

- Statistical machine translation: Translation methods based on statistical models from large datasets.

- Neural machine translation: Advanced translation using neural networks and deep learning.

Understanding these different types of translation engines helps you choose the best approach based on your needs and the complexity of the languages involved. Whether you’re using rule-based, statistical, or neural methods, each has its role in making language translation more effective and accurate.

Text-to-Speech (TTS) Systems

How TTS Works in Translation

Text-to-Speech (TTS) systems convert written text into spoken words. In translation, TTS systems are used to read out translated text in a natural-sounding voice. This is particularly useful in applications where users need to hear translations, such as in language learning apps or assistive technologies.

Here’s how TTS works in translation:

- Input Text: The translated text, which is usually the result of a translation engine, is provided as input to the TTS system.

- Text Processing: The TTS system processes the text, breaking it down into phonetic components and applying rules to pronounce it correctly.

- Speech Synthesis: The system uses a voice model to generate spoken words based on the processed text.

- Output Speech: The final step is to play the synthesized speech through speakers or headphones.

Examples of TTS Systems

1. Amazon Polly

Amazon Polly is a cloud-based TTS service that turns text into lifelike speech. It supports multiple languages and voices, allowing for a natural-sounding output.

Here’s an example of how to use Amazon Polly with Python:

import boto3

# Initialize the Polly client
polly = boto3.client('polly', region_name='us-east-1')

# Text to be converted to speech
text = "Hello, how are you today?"

# Request speech synthesis
response = polly.synthesize_speech(
    Text=text,
    OutputFormat='mp3',
    VoiceId='Joanna'
)

# Save the audio stream to a file
with open('output.mp3', 'wb') as file:
    file.write(response['AudioStream'].read())

print("Speech synthesized and saved to output.mp3")

Output:

This script converts the text “Hello, how are you today?” into an MP3 file, which can be played to hear the spoken text.

2. Google Text-to-Speech

Google Text-to-Speech is another popular TTS service that provides high-quality, natural-sounding speech. It’s part of Google Cloud and supports various languages and voices.

Here’s an example using Google’s Text-to-Speech API with Python:

from google.cloud import texttospeech_v1beta1 as tts

# Initialize the Google TTS client
client = tts.TextToSpeechClient()

# Define the text input
text_input = tts.SynthesisInput(text="Hello, how are you today?")

# Define the voice parameters
voice_params = tts.VoiceSelectionParams(
    language_code="en-US",
    ssml_gender=tts.SsmlVoiceGender.FEMALE
)

# Define the audio file type
audio_config = tts.AudioConfig(
    audio_encoding=tts.AudioEncoding.MP3
)

# Synthesize the speech
response = client.synthesize_speech(
    input=text_input,
    voice=voice_params,
    audio_config=audio_config
)

# Save the audio response to a file
with open('output_google.mp3', 'wb') as file:
    file.write(response.audio_content)

print("Speech synthesized and saved to output_google.mp3")

Output:

This script converts the text “Hello, how are you today?” into an MP3 file using Google’s TTS service, providing natural-sounding speech.

- Text-to-Speech for real-time translation: Using TTS technology to read out translated text in real-time.

- Amazon Polly: Amazon’s TTS service for generating lifelike speech.

- Google Text-to-Speech: Google’s TTS service for high-quality speech synthesis.

By incorporating TTS systems into your translation workflows, you can provide users with audible translations, enhancing accessibility and user experience. Whether using Amazon Polly or Google Text-to-Speech, these tools offer powerful solutions for converting text into natural-sounding speech.

Integration and Synchronization

Combining Speech Recognition, Translation, and TTS

Integrating speech recognition, translation, and Text-to-Speech (TTS) systems creates a complete pipeline for translating spoken language into audible speech in another language. Here’s a step-by-step explanation of how these components work together:

- Speech Recognition: This first step captures spoken language and converts it into text. For instance, if someone speaks in English, the speech recognition system transcribes their words into written English text.

- Translation: The text generated by the speech recognition system is then translated into the target language. This involves using a translation engine to convert the English text into another language, such as French.

- Text-to-Speech (TTS): Finally, the translated text is converted back into spoken words using a TTS system. This provides an audible output in the target language.
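The three stages above can be sketched as one function that chains them and stops early when a stage fails. The run_pipeline function and the fake_* stages are hypothetical stand-ins chosen so the sketch runs without a microphone or network access; in a real system each stage would wrap a speech recognition, translation, or TTS call.

```python
def run_pipeline(audio, recognize, translate, synthesize):
    """Chain the three stages, stopping early if any stage returns None."""
    text = recognize(audio)
    if text is None:
        return None
    translated = translate(text)
    if translated is None:
        return None
    return synthesize(translated)


# Stand-in stages (assumptions for illustration only)
def fake_recognize(audio):
    return audio.decode("utf-8")  # pretend the bytes are already a transcript

def fake_translate(text):
    return {"hello": "bonjour"}.get(text)  # None for anything outside the toy table

def fake_synthesize(text):
    return f"<speech:{text}>"  # placeholder for synthesized audio

print(run_pipeline(b"hello", fake_recognize, fake_translate, fake_synthesize))    # <speech:bonjour>
print(run_pipeline(b"goodbye", fake_recognize, fake_translate, fake_synthesize))  # None
```

Passing the stages in as functions keeps the pipeline independent of any one provider, so a recognizer or TTS engine can be swapped without touching the chaining logic.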

Here’s a simple example of how you might integrate these components in Python:

1. Set up Speech Recognition

import speech_recognition as sr

recognizer = sr.Recognizer()

def recognize_speech():
    with sr.Microphone() as source:
        print("Say something:")
        audio = recognizer.listen(source)
    try:
        text = recognizer.recognize_google(audio)
        print("Recognized text: " + text)
        return text
    except sr.UnknownValueError:
        print("Could not understand audio")
    except sr.RequestError:
        print("Could not request results")

# Get recognized text
recognized_text = recognize_speech()

2. Translate Text

For translation, we’ll use the Translator class from googletrans, an unofficial Python client for Google Translate. Install it with pip install googletrans==4.0.0-rc1.

from googletrans import Translator

def translate_text(text, dest_lang='fr'):
    translator = Translator()
    translation = translator.translate(text, dest=dest_lang)
    print("Translated text: " + translation.text)
    return translation.text

# Translate the recognized text
translated_text = translate_text(recognized_text)

3. Convert Translated Text to Speech

Using gTTS, a simple library for TTS. Install it with pip install gtts.

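from gtts import gTTS
import os

def text_to_speech(text):
    # lang must match the translation target language ('fr' here)
    tts = gTTS(text=text, lang='fr')
    tts.save("output.mp3")
    # "start" is a Windows shell command; use "open" on macOS or "xdg-open" on Linux
    os.system("start output.mp3")

# Convert the translated text to speech
text_to_speech(translated_text)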

Output:

When the script is run, the following occurs:

- Listens to spoken words and converts them into text.

- Translates this text into French.

- Converts the translated text into an audio file and plays it back.

Real-Time Processing and Latency Issues

In a real-time system, the entire process must happen quickly to be useful. This means minimizing the delay between speech input and the spoken translation output. Here are some common latency issues and how to address them:

- Latency in Speech Recognition: The time taken to convert speech into text. This can be affected by the quality of the microphone and background noise.

- Translation Delay: Translating text can take time, especially if the translation engine is processing a lot of data or if the languages involved are complex.

- Text-to-Speech Delay: The time taken to synthesize spoken words from text. Some TTS systems may have a slight delay, depending on the complexity of the text and the processing power available.

To address these issues:

- Use efficient and fast APIs.

- Optimize the audio input quality.

- Ensure the translation engine is well-suited for the languages involved.
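Before optimizing, it helps to know which stage contributes most to the delay. The sketch below times each stage with time.perf_counter. The time_stages helper and the slow_* stand-in stages are hypothetical, and the sleep calls only imitate work, so the printed numbers are not real measurements of any service.

```python
import time

def time_stages(value, stages):
    """Run each (name, function) stage in order and record its duration in seconds."""
    timings = {}
    for name, func in stages:
        start = time.perf_counter()
        value = func(value)
        timings[name] = time.perf_counter() - start
    return value, timings


# Stand-in stages (assumptions); replace them with real recognition, translation, and TTS calls
def slow_recognize(audio):
    time.sleep(0.02)
    return "hello"

def slow_translate(text):
    time.sleep(0.01)
    return "bonjour"

def slow_synthesize(text):
    time.sleep(0.03)
    return f"<speech:{text}>"

stages = [
    ("recognition", slow_recognize),
    ("translation", slow_translate),
    ("tts", slow_synthesize),
]
result, timings = time_stages(b"raw audio", stages)
for name, seconds in timings.items():
    print(f"{name}: {seconds * 1000:.1f} ms")
print(f"total: {sum(timings.values()) * 1000:.1f} ms")
```

Logging these per-stage timings over many utterances shows whether effort is better spent on the recognizer, the translation engine, or the TTS step.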

Key Takeaways

- Integration of translation components: Combining speech recognition, translation, and TTS systems.

- Real-time processing in translation: Ensuring quick and efficient conversion of spoken language into translated speech.

By integrating these components effectively, you can create a smooth system that translates spoken language into audible output in real-time, providing a valuable tool for communication and accessibility.

Developing a Real-Time Translation System

Complete Speech-to-Speech Translation System

This Python script demonstrates how to create a simple real-time speech-to-speech translation system. The system listens to spoken input, translates it into another language, and then converts the translated text into speech. Here’s a detailed explanation of each part of the script:

Code

1. Import Required Libraries

import speech_recognition as sr
from transformers import MarianMTModel, MarianTokenizer
from gtts import gTTS
from playsound import playsound
import os

- speech_recognition: For capturing and recognizing speech.

- transformers: For handling the translation model.

- gTTS: For converting text to speech.

- playsound: For playing audio files.

- os: For interacting with the operating system (e.g., file management).

2. Initialize the Recognizer

recognizer = sr.Recognizer()

This creates a speech recognizer instance used to capture and process audio.

3. Capture Audio

If you want to capture audio using Python, you can use the speech_recognition library.

- This function listens to audio through the microphone.

- If no speech starts within 10 seconds, it times out and returns None.

Here’s a detailed look at the code:

import speech_recognition as sr

# Create a recognizer instance
recognizer = sr.Recognizer()

def get_audio():
    # Open the microphone for capturing audio
    with sr.Microphone() as source:
        print("Listening...")  # Notify that the program is listening for audio
        try:
            # Listen to the audio with a 10-second timeout
            audio = recognizer.listen(source, timeout=10)
            return audio  # Return the captured audio
        except sr.WaitTimeoutError:
            # Handle the situation where no audio is detected within the timeout
            print("Listening timed out.")
            return None  # Return None if no audio was detected

Detailed Explanation

Importing the Library:

import speech_recognition as sr: This line imports the speech_recognition library, which allows you to work with audio and perform speech recognition.

Creating a Recognizer Instance:

recognizer = sr.Recognizer(): You need an instance of Recognizer to work with audio data.

Defining the get_audio Function:

def get_audio(): This function will handle the process of capturing audio from the microphone.

Opening the Microphone:

with sr.Microphone() as source: This line opens the microphone and sets it as the source for audio input. The with statement ensures that the microphone is properly managed and closed after the operation.

Listening for Audio:

print("Listening..."): This message is printed to let you know that the program is ready to capture audio.

audio = recognizer.listen(source, timeout=10): This line listens for audio input from the microphone. The timeout parameter is set to 10 seconds, meaning the program will wait for up to 10 seconds for audio input. If no audio is detected within this time, a WaitTimeoutError will be raised.

Handling Timeout:

except sr.WaitTimeoutError: This block of code handles the situation where no audio is detected within the 10-second window.

print("Listening timed out."): This message is printed to let you know that the program did not receive any audio within the specified time.

return None: If the timeout occurs, the function returns None to indicate that no audio was captured.

4. Recognize Speech

- This function converts audio into text using Google’s speech recognition.

- Handles errors if the speech is not understood or if there’s a problem with the service.

def recognize_speech(audio):
    if audio:
        try:
            print("Recognizing speech...")
            text = recognizer.recognize_google(audio)
            print("You said: " + text)
            return text
        except sr.UnknownValueError:
            print("Sorry, I could not understand the audio.")
            return None
        except sr.RequestError:
            print("Could not request results from the service.")
            return None
    else:
        print("No audio to process.")
        return None

Explanation

1. Defining the recognize_speech Function:

def recognize_speech(audio): This function takes an audio input and attempts to convert it into text.

2. Checking if Audio is Provided:

if audio:: This checks whether the audio variable contains audio data. If it does, the function proceeds with speech recognition. If not, it prints a message and returns None.

3. Recognizing Speech:

print("Recognizing speech..."): This message is printed to indicate that the program is starting the speech recognition process.

text = recognizer.recognize_google(audio): This line uses Google’s speech recognition API to convert the audio into text. The recognize_google method sends the audio data to Google’s servers and returns the recognized text.

4. Handling Errors:

except sr.UnknownValueError: This block handles errors where the audio is not understood by the speech recognition service.

print("Sorry, I could not understand the audio."): This message is printed if the speech recognition service cannot understand the audio.

return None: The function returns None to indicate that the recognition failed.

except sr.RequestError: This block handles errors related to making requests to the speech recognition service.

print("Could not request results from the service."): This message is printed if there is an issue with the request to the service.

return None: The function returns None to indicate that there was an issue with the request.

5. Handling No Audio:

print("No audio to process."): This message is printed if the audio variable is empty or None.

return None: The function returns None since there is no audio to process.

- Successful Recognition: If the speech recognition is successful, you will see “Recognizing speech…” followed by “You said: [recognized text]” in the console. The function will return the recognized text.

- Error Handling: If there’s an error understanding the audio or making a request to the service, you will see an appropriate error message and the function will return None.

- No Audio Provided: If no audio is provided, you will see “No audio to process.” and the function will return None.

5. Loading a Translation Model in Python

- Loads the MarianMT model and tokenizer for translation.

- Handles errors if the model fails to load.

from transformers import MarianTokenizer, MarianMTModel

def load_model(model_name):
    try:
        print(f"Loading model: {model_name}")  # Notify that the model loading process is starting
        # Load the tokenizer and model using the given model name
        tokenizer = MarianTokenizer.from_pretrained(model_name)
        model = MarianMTModel.from_pretrained(model_name)
        print("Model loaded successfully.")  # Notify that the model was loaded successfully
        return tokenizer, model  # Return the tokenizer and model
    except Exception as e:
        # Handle any errors that occur during the model loading process
        print(f"Error loading model: {e}")
        return None, None  # Return None for both tokenizer and model if an error occurs

Explanation

1. Defining the load_model Function:

def load_model(model_name): This function is designed to load a pre-trained translation model. It takes model_name as an argument, which specifies which model to load.

2. Starting the Model Loading Process:

print(f"Loading model: {model_name}"): This message is printed to indicate that the model loading process has begun. It helps you know which model is currently being loaded.

3. Loading the Tokenizer and Model:

tokenizer = MarianTokenizer.from_pretrained(model_name): This line loads the tokenizer for the specified model. The from_pretrained method downloads (on first use) and loads the tokenizer associated with model_name.

model = MarianMTModel.from_pretrained(model_name): Similarly, this line loads the translation model using the from_pretrained method. This method downloads (on first use) and loads the model associated with model_name.

4. Successful Model Loading:

print("Model loaded successfully."): This message is printed if the model and tokenizer are loaded without any errors.

return tokenizer, model: The function returns both the tokenizer and the model so they can be used for translating text.

5. Handling Errors:

except Exception as e: This block of code handles any errors that occur during the model loading process.

print(f"Error loading model: {e}"): This message is printed if there is an error while loading the model or tokenizer. It includes the error message to help you understand what went wrong.

return None, None: If an error occurs, the function returns None for both the tokenizer and the model to indicate that they were not successfully loaded.

- Successful Loading: If the model and tokenizer are loaded correctly, you will see “Loading model: [model_name]” followed by “Model loaded successfully.” The function will return the tokenizer and model.

- Error Loading: If there is an error during loading, you will see an error message like “Error loading model: [error_message]” and the function will return None for both the tokenizer and model.

6. Translate Text

Here’s how you can translate text using a pre-trained model with the transformers library:

def translate_text(text, tokenizer, model):
    if tokenizer and model:
        try:
            print("Translating text...")
            inputs = tokenizer(text, return_tensors="pt", padding=True)
            translated = model.generate(**inputs)
            translated_text = [tokenizer.decode(t, skip_special_tokens=True) for t in translated]
            print("Translation complete.")
            return translated_text[0]
        except Exception as e:
            print(f"Error during translation: {e}")
            return None
    else:
        print("Model or tokenizer not loaded.")
        return None

Explanation

- Defining the

translate_textFunction:

def translate_text(text, tokenizer, model): This function translates a given piece of text using the provided tokenizer and model.

2. Checking if Tokenizer and Model are Available:

if tokenizer and model:: This checks if both the tokenizer and the model are provided. If either is missing, the function will not proceed with translation.

3. Translating the Text:

print("Translating text..."): This message indicates that the translation process is starting.inputs = tokenizer(text, return_tensors="pt", padding=True): This line converts the input text into tokens suitable for the model. Thereturn_tensors="pt"argument tells the tokenizer to return PyTorch tensors, andpadding=Trueensures that the text is padded to the same length.translated = model.generate(**inputs): This line uses the model to generate the translated text based on the tokenized input.translated_text = [tokenizer.decode(t, skip_special_tokens=True) for t in translated]: This line converts the generated tokens back into human-readable text. Theskip_special_tokens=Trueargument ensures that any special tokens used by the model are removed.print("Translation complete."): This message indicates that the translation process is finished.return translated_text[0]: This returns the first item in the list of translated texts (in case there are multiple outputs).

4. Handling Errors:

except Exception as e: This block catches any errors that occur during the translation process.print(f"Error during translation: {e}"): This message prints the error details to help understand what went wrong.return None: If an error occurs, the function returnsNoneto indicate that the translation was not successful.

5. Handling Missing Model or Tokenizer:

- print("Model or tokenizer not loaded."): This message is printed if the tokenizer or model is missing.

- return None: The function returns None in this case, as it cannot perform the translation without these components.

In short, this function translates the text using the MarianMT model and handles errors that might occur during translation.
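Translation models such as MarianMT accept only a limited input length, so a long transcript is usually split before it is passed to translate_text. The following is a minimal sketch of that idea, not part of the original program. It assumes a character budget as a rough stand-in for the model's token limit. The names split_into_chunks, translate_long_text, and max_chars are hypothetical, and translate_fn stands for any callable that takes a string and returns a translation or None.

```python
import re
from typing import Callable, List, Optional


def split_into_chunks(text: str, max_chars: int = 200) -> List[str]:
    """Group whole sentences into chunks of at most max_chars characters.

    A single sentence longer than max_chars is kept as its own chunk.
    max_chars is an assumed proxy for the model's real token limit.
    """
    # Split after '.', '!' or '?' followed by whitespace (a rough heuristic).
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    chunks: List[str] = []
    current = ""
    for sentence in sentences:
        candidate = f"{current} {sentence}".strip()
        if current and len(candidate) > max_chars:
            # Adding this sentence would exceed the budget: close the chunk.
            chunks.append(current)
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


def translate_long_text(
    text: str,
    translate_fn: Callable[[str], Optional[str]],
    max_chars: int = 200,
) -> Optional[str]:
    """Translate chunk by chunk; return None if any chunk fails."""
    translated = []
    for chunk in split_into_chunks(text, max_chars):
        result = translate_fn(chunk)
        if result is None:
            return None
        translated.append(result)
    return " ".join(translated)
```

With the function from this section, a call could look like translate_long_text(text, lambda chunk: translate_text(chunk, tokenizer, model)). Splitting on sentence boundaries keeps each chunk grammatical, which tends to matter more for translation quality than filling every chunk to the limit.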

7. Convert Text to Speech

def text_to_speech(translated_text):
    if translated_text:
        try:
            print("Converting text to speech...")
            tts = gTTS(text=translated_text, lang='de')
            tts.save("translated_speech.mp3")
            print("Speech saved as 'translated_speech.mp3'.")
            playsound("translated_speech.mp3")
            print("Playing the translated speech.")
        except Exception as e:
            print(f"Error during speech synthesis: {e}")
    else:
        print("No text to convert to speech.")

Explanation

1. Defining the text_to_speech Function:

def text_to_speech(translated_text): This function takes translated_text as an argument and converts it into speech.

2. Checking if Text is Provided:

if translated_text:: This checks if there is any text provided. If there is no text, the function will not proceed.

3. Converting Text to Speech:

- print("Converting text to speech..."): This message indicates that the text-to-speech process is starting.

- tts = gTTS(text=translated_text, lang='de'): This line creates a gTTS object with the provided text. The lang='de' parameter specifies that the speech should be in German. You can change 'de' to another language code if needed, as long as it matches the language of the translated text.

- tts.save("translated_speech.mp3"): This saves the generated speech as an MP3 file named translated_speech.mp3.

- print("Speech saved as 'translated_speech.mp3'."): This message confirms that the speech file has been saved successfully.

4. Playing the Audio File:

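- playsound("translated_speech.mp3"): This line plays the saved MP3 file so you can hear the converted speech.

- print("Playing the translated speech."): This message reports that the audio file was played. Because playsound blocks by default until playback ends, the message appears after the audio has finished.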

In short, this function converts the translated text into speech using gTTS, saves it as an MP3 file, and plays the generated audio file.
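The function above hard-codes lang='de', so the speech language can drift out of step with the translation model if you switch models. One way to keep them aligned is to read the target language from the model name. This is only a sketch under stated assumptions: target_lang_from_model_name and synthesize_speech are hypothetical helpers, the parsing assumes names of the form 'Helsinki-NLP/opus-mt-<source>-<target>', and not every code extracted this way is guaranteed to be a language that gTTS supports.

```python
def target_lang_from_model_name(model_name: str) -> str:
    """Return the target language code from a name like 'Helsinki-NLP/opus-mt-en-de'.

    Assumes the 'opus-mt-<source>-<target>' naming pattern; raises ValueError otherwise.
    """
    short_name = model_name.rsplit("/", 1)[-1]  # e.g. 'opus-mt-en-de'
    prefix = "opus-mt-"
    if not short_name.startswith(prefix):
        raise ValueError(f"Unexpected model name: {model_name}")
    parts = short_name[len(prefix):].split("-")
    if len(parts) != 2 or not all(parts):
        raise ValueError(f"Cannot read a language pair from: {model_name}")
    return parts[1]


def synthesize_speech(text: str, lang: str, out_path: str = "translated_speech.mp3") -> str:
    """Save text as spoken audio in the given language and return the file path."""
    # Imported here so the parsing helper above works without gTTS installed.
    from gtts import gTTS

    gTTS(text=text, lang=lang).save(out_path)
    return out_path
```

For the model used in this guide, target_lang_from_model_name('Helsinki-NLP/opus-mt-en-de') gives 'de', which can then be passed as the lang argument instead of a literal.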

8. Main Function

def main():
    audio = get_audio()
    text = recognize_speech(audio)
    if text:
        model_name = 'Helsinki-NLP/opus-mt-en-de'  # Model name from Hugging Face model hub
        tokenizer, model = load_model(model_name)
        if tokenizer and model:
            translated_text = translate_text(text, tokenizer, model)
            text_to_speech(translated_text)
        else:
            print("Model or tokenizer not loaded. Exiting.")
    else:
        print("No text to translate.")

if __name__ == "__main__":
    main()

Explanation

- Defining the main Function:

def main(): This line defines the main function. This function coordinates the workflow of capturing audio, recognizing speech, translating text, and converting the translated text into speech.

- Capturing Audio:

audio = get_audio(): This line calls the get_audio() function to capture audio from the microphone. The captured audio is stored in the audio variable.

- Recognizing Speech:

text = recognize_speech(audio): This line uses the recognize_speech() function to convert the captured audio into text. The recognized text is stored in the text variable.

- Checking if Text is Recognized:

if text:: This checks if the text variable contains recognized text. If the text variable is empty or None, the function will print a message and exit.

- Loading the Translation Model:

model_name = 'Helsinki-NLP/opus-mt-en-de': This specifies the name of the translation model to be used. Here, it is an English-to-German model from the Hugging Face model hub.

tokenizer, model = load_model(model_name): This line calls the load_model() function to load the specified translation model and tokenizer. The loaded tokenizer and model are stored in tokenizer and model, respectively.

- Checking if Model and Tokenizer are Loaded:

if tokenizer and model:: This checks if both the tokenizer and model were successfully loaded. If either is missing, the function prints a message and exits.

- Translating the Text:

translated_text = translate_text(text, tokenizer, model): This line calls the translate_text() function to translate the recognized text using the loaded model and tokenizer. The translated text is stored in translated_text.

- Converting Translated Text to Speech:

text_to_speech(translated_text): This line calls the text_to_speech() function to convert the translated text into speech. The speech is then saved as an MP3 file and played back.

- Handling Errors:

else: print("Model or tokenizer not loaded. Exiting."): If the model or tokenizer was not loaded successfully, this message is printed.

else: print("No text to translate."): If no text was recognized from the audio, this message is printed.

- Running the Main Function:

if __name__ == "__main__": main(): This line ensures that the main function is called when the script is run directly. It will not run if the script is imported as a module in another script.

Output

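- Successful Execution: If everything works correctly, the translation and speech steps print the following messages in order:

- “Translating text…”

- “Translation complete.”

- “Converting text to speech…”

- “Speech saved as ‘translated_speech.mp3’.”

- “Playing the translated speech.”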

- Error Handling:

- “Model or tokenizer not loaded. Exiting.” if the model or tokenizer could not be loaded.

- “No text to translate.” if no text was recognized from the audio.

This main function brings together various parts of the program to provide a complete solution for translating spoken words and converting the result into speech. It ensures that each step is executed in the right order and handles any issues that may arise.
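The same control flow can be written so that each stage is passed in as a function, which makes the sequence easier to test without a microphone or a downloaded model. The sketch below is an illustration rather than the program's actual structure: run_pipeline and its status strings are hypothetical, and the four parameters stand in for get_audio, recognize_speech, a wrapper around translate_text, and text_to_speech.

```python
from typing import Callable, Optional


def run_pipeline(
    capture: Callable[[], object],
    recognize: Callable[[object], Optional[str]],
    translate: Callable[[str], Optional[str]],
    speak: Callable[[str], None],
) -> str:
    """Run capture -> recognize -> translate -> speak, stopping at the first failure.

    Returns a short status string naming the outcome.
    """
    audio = capture()
    text = recognize(audio)
    if not text:
        # Nothing was recognized, so there is nothing to translate.
        return "no_text"
    translated = translate(text)
    if not translated:
        # Translation returned None or an empty string.
        return "translation_failed"
    speak(translated)
    return "ok"
```

Wired to the functions in this guide, a call might look like run_pipeline(get_audio, recognize_speech, lambda t: translate_text(t, tokenizer, model), text_to_speech), with the model loaded once beforehand. Returning a status instead of only printing lets a caller decide whether to retry or move on to the next utterance.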

Use Cases and Applications

1. Business and Customer Support

Enhancing Customer Service with Real-Time Translation

In today’s global marketplace, businesses often interact with customers who speak different languages. Real-time translation helps bridge this communication gap, improving customer service and satisfaction. For example, a company can use real-time translation tools during live chat support to assist customers in their preferred language. This ensures that customers receive accurate information and support without language barriers.

Case Studies and Examples

Global Tech Company: A major tech company implemented real-time translation for their customer service chatbots. This allowed them to handle support queries from customers around the world efficiently. As a result, they saw increased customer satisfaction and faster resolution times.

Retail Chain: A large retail chain used real-time translation in their call centers. This enabled their agents to assist international customers in their native languages, leading to improved customer relationships and higher sales.

2. Healthcare and Emergency Services

Importance in Healthcare Settings

In healthcare, real-time translation is crucial for effective communication between patients and medical professionals, especially in multilingual settings. It helps ensure that medical instructions, symptoms, and diagnoses are understood correctly, which is essential for providing appropriate care.

Real-World Applications and Success Stories

- Hospital Emergency Rooms: Some hospitals use real-time translation services in their emergency rooms to communicate with non-English-speaking patients quickly. This has led to better patient outcomes and reduced misunderstandings during critical situations.

- Medical Consultations: A healthcare provider implemented real-time translation during virtual consultations. This allowed patients from different linguistic backgrounds to receive consultations in their own language, improving accessibility and patient satisfaction.

3. Travel and Tourism

Improving Travel Experiences with Real-Time Translation

For travelers, language barriers can be a major challenge. Real-time translation tools help tourists navigate new countries by translating signs, menus, and conversations on the fly. This makes travel more enjoyable and less stressful, as tourists can communicate effectively and access important information.

Examples of Translation Applications for Tourists

- Translation Apps: Many travel apps now offer real-time translation features. For example, a tourist can use an app to translate menu items at a restaurant or ask for directions in a foreign city. This instant translation helps tourists feel more comfortable and engaged during their travels.

- Tour Guides: Some tour companies use real-time translation devices to provide multilingual tours. This allows tourists from different countries to enjoy the same tour experience without language barriers.

Real-time translation technology is transforming various fields by breaking down language barriers. In business, it enhances customer service and support. In healthcare, it improves patient care and communication. In travel, it enriches the experience by making it easier to navigate and interact in foreign environments.

These examples show how real-time translation can make interactions smoother and more effective in diverse situations, leading to better outcomes and experiences.

Future Trends and Innovations

1. Advancements in Translation Technology

Emerging Technologies

Translation technology is evolving rapidly, with new advancements enhancing its capabilities:

- Context-Aware Translation: New technologies are focusing on understanding the context in which words or phrases are used. This means translations will be more accurate because the technology will understand not just the words but the situation or intent behind them. For example, a phrase used in a formal business setting will be translated differently than the same phrase used casually among friends.

- Multi-Language Support: Modern translation tools are increasingly able to handle multiple languages at once. This is useful in multilingual settings where users might need translations in several languages simultaneously. For example, a real-time translation device might support translations between English, Spanish, Chinese, and French all at the same time.
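Serving several target languages from one source text can be sketched as a simple fan-out over one translator per language. This is a minimal illustration under stated assumptions: translate_to_many is a hypothetical helper, each translator is any callable that returns a translation or None (for example, a wrapper around a separate MarianMT model), and the languages are processed one after another here rather than truly in parallel.

```python
from typing import Callable, Dict, Optional

# A translator takes source text and returns a translation, or None on failure.
Translator = Callable[[str], Optional[str]]


def translate_to_many(text: str, translators: Dict[str, Translator]) -> Dict[str, Optional[str]]:
    """Translate one text with every translator; failures are recorded as None."""
    results: Dict[str, Optional[str]] = {}
    for lang, translate in translators.items():
        try:
            results[lang] = translate(text)
        except Exception:
            # One failing language should not block the others.
            results[lang] = None
    return results
```

Keeping a failed language as None instead of raising means listeners in the other languages still receive their output.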

Impact of AI and Machine Learning Advancements

- AI and Machine Learning: Artificial Intelligence (AI) and machine learning are making translation technology smarter. AI algorithms can learn from large amounts of data to improve translation quality. Machine learning helps the technology understand nuances and idioms in different languages, making translations more natural and accurate.

- Personalization: AI is also enabling more personalized translations. For instance, translation tools can learn a user’s preferred style or terminology over time, offering more tailored and relevant translations.

2. Challenges and Opportunities

Addressing Limitations and Potential Improvements

- Accuracy and Nuance: Despite advances, translation technology still struggles with nuances and context. Translating idiomatic expressions or cultural references can be challenging. Future improvements will focus on making translations more precise and culturally aware.

- Real-Time Performance: Ensuring that translations are accurate and delivered instantly is another challenge. Advances in computing power and algorithms are expected to improve real-time performance, making translations faster and more reliable.
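Before optimizing for real-time performance, it helps to know which stage of the pipeline is slow. The sketch below times each stage with Python's standard time.perf_counter. The helper name time_stages and the stage labels are hypothetical, and each stage is assumed to be a function that takes the previous stage's output.

```python
import time
from typing import Callable, Dict, List, Tuple


def time_stages(
    stages: List[Tuple[str, Callable]],
    first_input=None,
) -> Tuple[object, Dict[str, float]]:
    """Run stages in order, feeding each output to the next, and time each one.

    Returns the final output and a dict of elapsed seconds per stage name.
    """
    timings: Dict[str, float] = {}
    value = first_input
    for name, stage in stages:
        start = time.perf_counter()
        value = stage(value)
        timings[name] = time.perf_counter() - start
    return value, timings
```

For example, passing [("recognize", recognize_speech), ("translate", lambda t: translate_text(t, tokenizer, model))] with captured audio as first_input would show how the total delay divides between speech recognition and translation.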

Future Research Directions

- Cross-Language Understanding: Researchers are exploring ways to improve understanding between languages that are very different from each other. This involves developing methods that can bridge gaps between languages with distinct grammatical structures or cultural contexts.

- Integration with Other Technologies: Future research may focus on integrating translation technology with other emerging technologies like augmented reality (AR) or virtual reality (VR). For example, AR glasses could provide real-time translations of text seen through the lenses.

Conclusion

Translation technology is on an exciting path of innovation. Emerging technologies like context-aware translation and multi-language support are enhancing how translations are performed. AI and machine learning are making these tools smarter and more accurate. However, there are still challenges to overcome, such as improving accuracy and real-time performance. Future research will continue to address these challenges and explore new opportunities, including integrating translation technology with other advanced technologies.

External Resources

Here are some external resources to help you develop a real-time translation system for natural language:

- Google Cloud Speech-to-Text Documentation: Provides comprehensive guides and API references for integrating Google’s Speech-to-Text service, which converts audio into text for further processing.

- Microsoft Azure Speech Service Documentation: Offers detailed information on Microsoft’s Speech-to-Text and Text-to-Speech services, including how to implement real-time speech recognition and synthesis.

- Hugging Face Transformers Documentation: Features documentation and tutorials for using pre-trained models like MarianMT for language translation. It includes code examples and model details for integration.

- Google Text-to-Speech API Documentation: Offers resources for using Google’s TTS API to convert text into natural-sounding speech, with details on supported languages and voices.

- Amazon Polly Documentation: Provides guidelines on using Amazon Polly for text-to-speech synthesis, including how to generate lifelike speech in various languages.

- OpenAI GPT Models Documentation: Includes information on using OpenAI’s language models for various natural language processing tasks, including translation and understanding.

FAQs

What is a real-time translation system?

A real-time translation system listens to spoken language, translates it into another language, and then converts it into speech almost instantly.

What are the key components of a real-time translation system?

The key components are speech recognition, language translation, and text-to-speech (TTS) synthesis.

Which tools are commonly used for speech recognition?

Common tools include Google Speech-to-Text, Microsoft Azure Speech Service, and IBM Watson Speech-to-Text.

What models are used for language translation?

Open models such as MarianMT and hosted services such as Google Translate and Microsoft Translator are commonly used for language translation.

How is text converted to speech?

Text is converted to speech using TTS services like Google Text-to-Speech, Amazon Polly, and Microsoft Azure TTS.

What programming languages are typically used to develop a real-time translation system?

Python is commonly used due to its extensive libraries and frameworks for speech recognition, translation, and TTS.
