How to do text summarization with Python

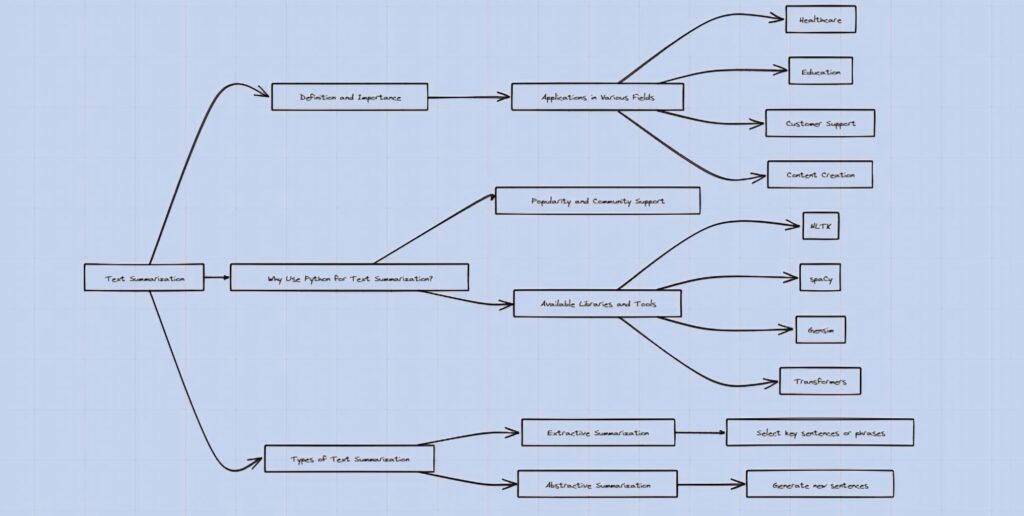

Introduction to Text Summarization

Text summarization is a Natural Language Processing (NLP) task that shortens long texts while keeping their key ideas and concepts. It matters because we deal with huge amounts of text every day in many different areas, and summarization helps us handle that information efficiently.

Overview of Text Summarization

Text summarization can be divided into two main types: extractive summarization and abstractive summarization. Extractive summarization selects key sentences or phrases from the original text and puts them together to create a summary. Abstractive summarization, on the other hand, generates new sentences that convey the main ideas of the text in a shorter form.

Definition and Importance

Text summarization condenses complex information into a shorter form, which helps people understand it and make decisions faster. This is especially important when time is limited or when dealing with lengthy documents like research papers, news articles, and legal texts.

In today’s digital world, good text summarization is really important. It helps people and organizations quickly find useful information in large amounts of data without getting stuck on things that don’t matter. This makes work faster and makes decisions better in different areas.

Applications in Various Fields

Text summarization is used in many different fields, such as:

- Publishing and Journalism: Automating the process of summarizing news articles helps readers understand the main points quickly.

- Academic Research: Summarizing research papers helps researchers to efficiently stay informed about the latest findings.

- Legal and Regulatory Compliance: Summarizing legal documents helps lawyers and compliance officers quickly identify crucial information.

- Business Intelligence: Summarizing reports and market analyses helps executives make well-informed decisions quickly.

- Customer Support: Summarizing customer feedback and support tickets helps companies prioritize and resolve issues efficiently.

In each of these applications, Python has become the leading choice due to its strong libraries and frameworks for NLP tasks.

Why Use Python for Text Summarization?

Python is a popular choice for text summarization because it has many libraries and frameworks built for Natural Language Processing (NLP). Here are the main reasons why Python is preferred:

Popularity and Community Support

Python is very popular in data science and machine learning. This means there is a large, active community of developers, researchers, and enthusiasts who keep improving NLP tools and techniques. Thanks to this strong community, Python’s NLP libraries are constantly updated with new features, optimizations, and bug fixes.

Available Libraries and Tools

Python has many libraries and tools designed for text summarization. Some of the most notable ones are:

NLTK (Natural Language Toolkit): NLTK is a powerful library for text processing. It handles tasks like tokenization, stemming, tagging, and parsing. It’s a great tool for building text summarization systems.

spaCy: Known for its speed and efficiency, spaCy is a powerful library for advanced NLP tasks such as entity recognition, part-of-speech tagging, and dependency parsing. It is widely used in academia and industry for text summarization projects.

Gensim: Gensim is well-known for topic modeling, document similarity analysis, and word vector representations (Word2Vec). It’s very useful for working with large amounts of text.

Sumy: Sumy is a simple library focused only on text summarization. It includes popular summarization algorithms like LSA (Latent Semantic Analysis) and LexRank.

Hugging Face Transformers: Hugging Face Transformers is a popular library for using advanced pre-trained models like BERT, GPT, and T5. These models can be fine-tuned for specific NLP tasks, including text summarization, and they give impressive results.

This is just an overview of text summarization libraries and tools. The sections below show how to implement extractive and abstractive summarization with practical examples. First, let’s look at what extractive and abstractive summarization are in more detail.

Types of Text Summarization

Text summarization with Python is a powerful way to shrink large amounts of text into brief summaries. There are two main types of text summarization: extractive summarization and abstractive summarization. Each has its own methods, pros, and cons. Let’s look at these in detail.

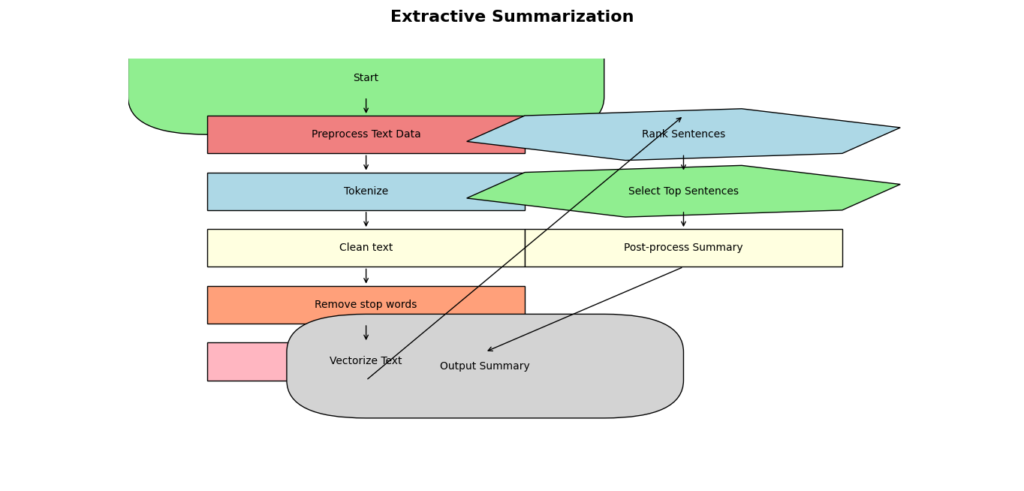

Extractive Summarization

Extractive summarization involves selecting important sentences or phrases from the original text and putting them together to form a summary. This method is simpler and more direct than abstractive summarization.

Definition and Examples

In extractive summarization, the algorithm scans through the text to find the most important sentences. These sentences are then combined to create a summary. The result is a summary that contains direct excerpts from the original text.

For example, consider a news article about a recent event. An extractive summarization algorithm might pick the headline and the first few sentences, which typically contain the key information. If the article discusses a new policy, the summary might include sentences that explain what the policy is and why it was introduced.

Here’s a more detailed example:

- Original Text: “The government has introduced a new policy aimed at reducing carbon emissions. This policy will require all factories to adhere to strict environmental standards. Experts believe this will significantly reduce pollution levels over the next decade.”

- Extractive Summary: “The government has introduced a new policy aimed at reducing carbon emissions. This policy will require all factories to adhere to strict environmental standards.”
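To make the idea concrete, here is a minimal sketch of a frequency-based extractive summarizer that uses only the standard library. The stop-word list, the naive sentence splitter, and the helper names (`split_sentences`, `content_words`, `extractive_summary`) are assumptions made for illustration; the scoring rule is one simple option among many, not a standard algorithm from any particular library.

```python
import re
from collections import Counter

# A tiny hand-picked stop-word list (an assumption; real lists are much longer).
STOP_WORDS = {"the", "a", "an", "has", "at", "this", "will", "all", "to", "over", "next"}

def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def content_words(text):
    """Lowercased alphabetic words with stop words removed."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS]

def extractive_summary(text, num_sentences=2):
    """Keep the highest-scoring sentences, in their original order."""
    sentences = split_sentences(text)
    freq = Counter(content_words(text))
    # Score = total document frequency of the sentence's content words.
    scores = [sum(freq[w] for w in content_words(s)) for s in sentences]
    ranked = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)
    keep = sorted(ranked[:num_sentences])
    return " ".join(sentences[i] for i in keep)

text = ("The government has introduced a new policy aimed at reducing carbon emissions. "
        "This policy will require all factories to adhere to strict environmental standards. "
        "Experts believe this will significantly reduce pollution levels over the next decade.")
print(extractive_summary(text))
```

On this input the sketch keeps the first two sentences, because "policy" is the only content word that appears twice. Note that summing frequencies favors longer sentences; dividing by sentence length is a common adjustment.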

Advantages and Disadvantages

Advantages

- Simplicity: Extractive summarization is easy to implement. Algorithms like sentence ranking or frequency analysis can be used to identify key sentences.

- Accuracy: Since the summary includes sentences directly from the text, the risk of misinterpreting or altering the original meaning is minimized.

Disadvantages

- Coherence: Extracted sentences may not flow smoothly when combined. The summary might feel disjointed or choppy.

- Redundancy: There is a possibility of including redundant information. For example, similar points might be repeated in different sentences, reducing the conciseness of the summary.
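The redundancy problem can be reduced with a simple overlap check. The sketch below is one possible approach, assuming a Jaccard word-overlap measure and an arbitrary threshold of 0.6; the helper names `word_set`, `jaccard`, and `drop_redundant` are hypothetical.

```python
import re

def word_set(sentence):
    """Set of lowercase alphabetic words in a sentence."""
    return set(re.findall(r"[a-z]+", sentence.lower()))

def jaccard(a, b):
    """Jaccard similarity: size of the intersection divided by size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def drop_redundant(sentences, threshold=0.6):
    """Keep a sentence only if it is not too similar to any sentence already kept."""
    kept = []
    for sentence in sentences:
        if all(jaccard(word_set(sentence), word_set(k)) < threshold for k in kept):
            kept.append(sentence)
    return kept

candidates = [
    "The policy cuts carbon emissions.",
    "The policy cuts emissions of carbon.",
    "Factories must meet strict standards.",
]
print(drop_redundant(candidates))
```

Here the second sentence shares five of its six distinct words with the first, so it is dropped. The threshold is a tuning choice: lower values remove more sentences.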

Abstractive Summarization

Abstractive summarization generates new sentences that capture the main ideas of the text in a shorter form. It’s a more advanced method that can make summaries easier to understand and more cohesive.

Definition and Examples

In abstractive summarization, the model understands the text and creates a summary using its own words. This approach is similar to how humans summarize text. The model reads the entire content, grasps the main ideas, and then writes a summary that may not directly copy any sentences from the original text.

For instance, for the same news article mentioned earlier:

- Original Text: “The government has introduced a new policy aimed at reducing carbon emissions. This policy will require all factories to adhere to strict environmental standards. Experts believe this will significantly reduce pollution levels over the next decade.”

- Abstractive Summary: “A new government policy mandates strict environmental standards for factories to cut carbon emissions, with experts predicting a significant drop in pollution over the next ten years.”
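One way to see the difference between the two summary types is to measure how much of a summary is new wording. The following sketch computes the share of word pairs (bigrams) in a summary that never occur in the source. The function names are hypothetical and the metric is only a rough indicator of abstraction, not an established quality score.

```python
import re

def bigrams(text):
    """Set of adjacent lowercase word pairs in the text."""
    words = re.findall(r"[a-z]+", text.lower())
    return set(zip(words, words[1:]))

def novel_bigram_ratio(source, summary):
    """Fraction of the summary's bigrams that never occur in the source."""
    summary_bigrams = bigrams(summary)
    if not summary_bigrams:
        return 0.0
    return len(summary_bigrams - bigrams(source)) / len(summary_bigrams)

source = ("The government has introduced a new policy aimed at reducing carbon emissions. "
          "This policy will require all factories to adhere to strict environmental standards. "
          "Experts believe this will significantly reduce pollution levels over the next decade.")
extractive = ("The government has introduced a new policy aimed at reducing carbon emissions. "
              "This policy will require all factories to adhere to strict environmental standards.")
abstractive = ("A new government policy mandates strict environmental standards for factories "
               "to cut carbon emissions, with experts predicting a significant drop in pollution "
               "over the next ten years.")

print("Extractive:", novel_bigram_ratio(source, extractive))
print("Abstractive:", round(novel_bigram_ratio(source, abstractive), 2))
```

The extractive summary scores 0.0 because every word pair is copied from the source, while the abstractive summary scores well above zero because most of its phrasing is new.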

Advantages and Disadvantages

Advantages:

- Coherence: Abstractive summaries tend to be more fluent and readable. The generated text flows naturally because it is written anew.

- Flexibility: This method can rephrase and condense information effectively, often capturing the essence of the text in fewer words.

Disadvantages:

- Complexity: Implementing abstractive summarization is challenging. It requires sophisticated models, often involving deep learning and extensive training.

- Accuracy: The model might introduce errors or miss important details if it fails to understand the text correctly.

Now it’s time to put these tools and techniques into practice for our text summarization task. Let’s start by setting up the Python environment.

Setting Up the Python Environment

Required Libraries and Tools

To perform text summarization in Python, you need several libraries. Here’s a list of the essential ones:

- NLTK: For text processing tasks like tokenization and stopword removal.

- spaCy: For advanced NLP tasks.

- Gensim: For topic modeling and document similarity.

- Sumy: Specifically for text summarization.

- Hugging Face Transformers: For state-of-the-art transformer models.

Installation Guide for Python Libraries Used in Text Summarization

To perform text summarization tasks with Python, you’ll need to install several key libraries and their associated models. Here’s a step-by-step guide to installing NLTK, spaCy, Gensim, Sumy, and Hugging Face Transformers, along with examples of how to download necessary datasets or models.

1. Install NLTK

NLTK (Natural Language Toolkit) is a powerful library for natural language processing tasks. It includes modules for tokenization, stemming, tagging, parsing, and more.

Installation: Open your command prompt or terminal and run the following command:

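pip install nltk

Example code to download necessary datasets: After installing NLTK, you need to download specific datasets such as ‘punkt’ for tokenization and ‘stopwords’ for removing common words. Recent NLTK releases may also ask for the ‘punkt_tab’ resource; if tokenization raises a LookupError, download the resource named in the error message.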

import nltk

# Download necessary datasets

nltk.download('punkt')

nltk.download('stopwords')

2. Install spaCy

spaCy is a fast and efficient library for NLP tasks, known for its ease of use and performance. It provides tools for tokenization, named entity recognition, and dependency parsing.

Installation: Run the following command in your terminal:

pip install spacy

Example code to download the English model: After installing spaCy, you’ll need to download specific language models. Here’s how to download the English model ‘en_core_web_sm’:

import spacy

# Download the English model

spacy.cli.download('en_core_web_sm')

nlp = spacy.load('en_core_web_sm')

3. Install Gensim

Gensim is a library for topic modeling and document similarity analysis. It is commonly used for extracting meaningful information from large amounts of text.

Installation: Use pip to install Gensim:

pip install gensim

4. Install Sumy

Sumy is a library specifically designed for text summarization. It supports various algorithms like Luhn, LexRank, and LSA for extractive summarization.

Installation: Install Sumy using pip:

pip install sumy

5. Install Hugging Face Transformers

Hugging Face Transformers provides state-of-the-art models for natural language understanding and generation, including text summarization with transformer architectures such as BART and T5.

Installation: Install Transformers library by running:

pip install transformers

These steps will set up your Python environment with the necessary libraries for text summarization tasks. Each library has its own strengths and functionalities. This adaptability makes Python an excellent option for NLP projects, whether you’re handling basic text preprocessing or implementing advanced summarization techniques with deep learning models. With the environment ready, let’s look at basic text preprocessing techniques in Python.

Basic Text Preprocessing Techniques in Python

Text preprocessing is crucial for natural language processing (NLP) tasks like text summarization. It involves cleaning and preparing text data to improve its quality and prepare it for analysis. Here’s a detailed explanation of basic text preprocessing techniques using Python, focusing on tokenization, removing stop words and punctuation, and the difference between stemming and lemmatization.

Tokenization

Tokenization is the process of breaking down text into smaller units, such as words or sentences.

Word and Sentence Tokenization Techniques

Using NLTK (Natural Language Toolkit) for tokenization:

import nltk

from nltk.tokenize import word_tokenize, sent_tokenize

text = "Text summarization is a process in natural language processing."

word_tokens = word_tokenize(text)

sent_tokens = sent_tokenize(text)

print("Word Tokens:", word_tokens)

print("Sentence Tokens:", sent_tokens)

Output

Word Tokens: ['Text', 'summarization', 'is', 'a', 'process', 'in', 'natural', 'language', 'processing', '.']

Sentence Tokens: ['Text summarization is a process in natural language processing.']

Explanation

- word_tokenize: Splits the text into individual words.

- sent_tokenize: Splits the text into sentences based on punctuation and capitalization.

Removing Stop Words and Punctuation

Stop words are common words like “the”, “and”, “is” that do not contribute much to the meaning of a sentence.

Punctuation includes symbols like commas, periods, etc., which are often irrelevant for text analysis tasks.

Example using NLTK to remove stop words and punctuation:

from nltk.corpus import stopwords

import string

stop_words = set(stopwords.words('english'))

text = "Text summarization is an important task in NLP."

words = word_tokenize(text)

filtered_words = [word for word in words if word.lower() not in stop_words and word not in string.punctuation]

print("Filtered Words:", filtered_words)

Output

Filtered Words: ['Text', 'summarization', 'important', 'task', 'NLP']

Explanation

- stopwords.words('english'): Retrieves the list of English stop words from NLTK (the code wraps it in a set for fast lookups).

- string.punctuation: Provides a string of all punctuation marks.

- filtered_words: Removes stop words and punctuation from the list of tokenized words (words).

Stemming and Lemmatization

Both techniques reduce words to their base or root forms, but they operate differently.

Stemming

Stemming reduces words to their base form by removing suffixes.

from nltk.stem import PorterStemmer

stemmer = PorterStemmer()

words = ["running", "jumps", "easily", "fairly"]

stems = [stemmer.stem(word) for word in words]

print("Stems:", stems)

Output

Stems: ['run', 'jump', 'easili', 'fairli']

Explanation:

- PorterStemmer(): Initializes a stemmer from NLTK.

- stemmer.stem(word): Applies stemming to each word in the list words. Note that stems such as 'easili' are not real words.

Lemmatization

Lemmatization reduces words to their dictionary form (lemma), which is linguistically correct.

from nltk.stem import WordNetLemmatizer

lemmatizer = WordNetLemmatizer()

words = ["running", "jumps", "easily", "fairly"]

lemmas = [lemmatizer.lemmatize(word, pos='v') for word in words]

print("Lemmas:", lemmas)

Output

Lemmas: ['run', 'jump', 'easily', 'fairly']

Explanation

- WordNetLemmatizer(): Creates a lemmatizer instance from NLTK. It relies on the WordNet corpus, which you can fetch with nltk.download('wordnet').

- lemmatizer.lemmatize(word, pos='v'): Lemmatizes each word in the list words, treating them as verbs (pos='v'). Words that are not verbs, such as 'easily', are returned unchanged.

Differences and When to Use Each

- Stemming is faster and simpler, but it may not always result in a real word.

- Lemmatization produces valid words but is computationally more expensive.

Use Stemming when speed and simplicity are more critical, such as in information retrieval systems. Use Lemmatization when accuracy and interpretability are essential, such as in question answering systems.
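The contrast between the two approaches can be shown with a toy example that needs no external libraries. Both the suffix list and the lookup table below are hypothetical and deliberately tiny; real stemmers such as Porter and real lemmatizers such as WordNet use far richer rules and vocabularies.

```python
# Toy illustration only: the rules and the table are made up for this example.
SUFFIXES = ("ing", "ies", "ed", "es", "s")
LEMMA_TABLE = {"studies": "study", "studying": "study", "better": "good", "ran": "run"}

def toy_stem(word):
    """Strip the first matching suffix, without checking that the result is a word."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) > len(suffix) + 2:
            return word[: -len(suffix)]
    return word

def toy_lemmatize(word):
    """Look the word up in a dictionary; fall back to the word itself."""
    return LEMMA_TABLE.get(word, word)

for word in ["studies", "studying", "better", "ran"]:
    print(f"{word}: stem={toy_stem(word)}, lemma={toy_lemmatize(word)}")
```

The rule-based stemmer turns "studies" into "stud" and cannot relate "better" to "good", while the dictionary lookup returns valid words but only for entries it already knows. That trade-off between cheap rules and a costlier vocabulary is the same one described above.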

These text preprocessing techniques are essential for text summarization and other NLP tasks. Python libraries like NLTK offer powerful tools to perform these steps efficiently. Each step plays a crucial role in improving the quality and relevance of processed text, making it ready for further analysis or modeling.

Let’s look at the implementation of extractive and abstractive summarization in detail, with example code.

Implementing Extractive Summarization

Using NLTK for Extractive Summarization

NLTK (Natural Language Toolkit) can be used to create extractive summaries by calculating word frequencies and selecting sentences that contain the most frequent words.

Example code for extractive summarization with NLTK

from nltk.tokenize import sent_tokenize, word_tokenize

from nltk.corpus import stopwords

from nltk.probability import FreqDist

# Your text to summarize

text = "Text summarization is an important task in NLP. It involves condensing large texts into shorter versions while preserving key information. There are various methods to achieve this, including extractive and abstractive summarization."

# Set of English stopwords

stop_words = set(stopwords.words('english'))

# Tokenize sentences

sentences = sent_tokenize(text)

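# Tokenize words; drop stopwords and punctuation, and lowercase for counting

words = word_tokenize(text)

filtered_words = [word.lower() for word in words if word.isalnum() and word.lower() not in stop_words]

# Calculate word frequencies

freq_dist = FreqDist(filtered_words)

# Score each sentence by the total frequency of the words it contains

scores = {sentence: sum(freq_dist[word.lower()] for word in word_tokenize(sentence)) for sentence in sentences}

# Keep the two highest-scoring sentences, in their original order

top_sentences = sorted(sentences, key=scores.get, reverse=True)[:2]

summary_sentences = [sentence for sentence in sentences if sentence in top_sentences]

summary = " ".join(summary_sentences)

print("Summary:", summary)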

Explanation:

- Tokenize Sentences: sent_tokenize(text) splits the text into sentences.

- Tokenize Words: word_tokenize(text) splits the text into words.

- Remove Stop Words: filtered_words contains only the meaningful words after removing stopwords.

- Calculate Word Frequencies: FreqDist(filtered_words) calculates the frequency of each word.

- Select Sentences: summary_sentences includes the sentences selected for containing frequent words.

- Combine Sentences: summary joins the selected sentences into a single summary.

Using Gensim for Extractive Summarization

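Gensim is a strong library for topic modeling and analyzing document similarity. Releases before 4.0 also include a simple method for extractive summarization based on sentence importance. The gensim.summarization module was removed in Gensim 4.0, so the example below needs an older release (for example, pip install "gensim<4.0").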

Example code for extractive summarization with Gensim

from gensim.summarization import summarize

# Your text to summarize

text = "Text summarization is an important task in NLP. It involves condensing large texts into shorter versions while preserving key information. There are various methods to achieve this, including extractive and abstractive summarization."

# Generate summary

summary = summarize(text, ratio=0.2)

print("Summary:", summary)

Output

The summary printed depends on the input. Because this sample has only three sentences, Gensim warns that it expects at least 10 sentences, and with ratio=0.2 the result may be empty. Use a longer text or a larger ratio to get a meaningful summary.

Explanation:

- summarize(text, ratio=0.2): This function generates a summary of the text. The ratio parameter determines the length of the summary: a ratio of 0.2 keeps roughly 20% of the original sentences.
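To see why short inputs are awkward for ratio-based summarizers, here is a minimal sketch of how a ratio might translate into a sentence count. The helper `sentences_to_keep` is hypothetical, and the round-down rule is an assumption made for illustration rather than a copy of Gensim's code.

```python
def sentences_to_keep(num_sentences, ratio):
    """Number of sentences a ratio-based summarizer would keep, rounding down."""
    return int(num_sentences * ratio)

for n in (3, 10, 50):
    print(n, "sentences ->", sentences_to_keep(n, 0.2), "kept")
```

Under this assumption a three-sentence text with a ratio of 0.2 keeps zero sentences, which is why ratio-based summarization is better suited to longer documents.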

Using Sumy for Extractive Summarization

Sumy is a library designed specifically for text summarization. It supports multiple algorithms, including LSA (Latent Semantic Analysis), LexRank, and others.

Example code for extractive summarization with Sumy:

from sumy.parsers.plaintext import PlaintextParser

from sumy.nlp.tokenizers import Tokenizer

from sumy.summarizers.lsa import LsaSummarizer

# Your text to summarize

text = "Text summarization is an important task in NLP. It involves condensing large texts into shorter versions while preserving key information. There are various methods to achieve this, including extractive and abstractive summarization."

# Parse the text

parser = PlaintextParser.from_string(text, Tokenizer("english"))

# Create an LSA summarizer

summarizer = LsaSummarizer()

# Generate summary (2 sentences)

summary = summarizer(parser.document, 2)

# Print summary sentences

for sentence in summary:

    print(sentence)

Explanation:

- PlaintextParser.from_string(text, Tokenizer(“english”)): This parses the input text.

- LsaSummarizer(): This initializes the LSA summarizer.

- summarizer(parser.document, 2): This generates a summary of the text, specifying the number of sentences (2 in this case).

- Print summary sentences: The summary sentences are printed one by one.

Extractive Summarization Using spaCy and Scikit-learn

Extractive summarization can also be implemented using spaCy for advanced text processing and Scikit-learn for computing term frequency-inverse document frequency (TF-IDF) scores. This method involves tokenizing the text into sentences, calculating TF-IDF vectors for each sentence, and selecting the most important sentences based on their similarity scores. Let’s explore the process step-by-step.

Extractive Summarization

1. Install Necessary Libraries

Before you begin, ensure you have installed the necessary libraries:

pip install spacy scikit-learn

You also need to download the English language model for spaCy:

python -m spacy download en_core_web_sm

2. Import Libraries and Load the Language Model

First, import the required libraries and load spaCy’s English language model.

import spacy

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.metrics.pairwise import cosine_similarity

# Load spaCy's English model

nlp = spacy.load('en_core_web_sm')

3. Input Text

Provide the text you want to summarize.

text = """Text summarization is a process in natural language processing that involves condensing a piece of text to its essential information while retaining its core meaning. There are two main types of summarization: extractive and abstractive. Extractive summarization selects key sentences or phrases directly from the source text. Abstractive summarization generates new sentences that convey the main points of the text."""

4. Tokenize Text into Sentences

Use spaCy to tokenize the text into sentences.

# Process the text with spaCy

doc = nlp(text)

# Extract sentences from the document

sentences = [sent.text for sent in doc.sents]

5. Compute TF-IDF Scores

Use Scikit-learn’s TfidfVectorizer to convert sentences into TF-IDF vectors.

# Create a TF-IDF vectorizer

tfidf_vectorizer = TfidfVectorizer()

# Transform sentences into TF-IDF matrix

tfidf_matrix = tfidf_vectorizer.fit_transform(sentences)

6. Calculate Sentence Similarity

Compute the cosine similarity between the TF-IDF vectors of the sentences.

# Compute the cosine similarity matrix

similarity_matrix = cosine_similarity(tfidf_matrix)

7. Score Sentences Based on Similarity

Sum the similarity scores for each sentence to determine their importance.

# Sum the similarity scores for each sentence

scores = similarity_matrix.sum(axis=1)

8. Select Top Sentences for the Summary

Select the sentences with the highest scores to form the summary.

# Get the indices of the top sentences

top_sentence_indices = scores.argsort()[-1:][::-1]

# Combine the top sentences to form the summary

summary = ' '.join([sentences[i] for i in top_sentence_indices])

print("Extractive Summary:", summary)

Output

Extractive Summary: Text summarization is a process in natural language processing that involves condensing a piece of text to its essential information while retaining its core meaning.

Detailed Explanation

Text Processing with spaCy:

- Tokenization: spaCy processes the text and splits it into sentences (doc.sents).

- Sentence Extraction: Extract each sentence and store it in a list.

TF-IDF Computation with Scikit-learn:

- TF-IDF Vectorizer: The TfidfVectorizer converts sentences into numerical vectors that represent the importance of words in the context of the text.

- TF-IDF Matrix: The matrix contains TF-IDF values for each word in each sentence.

Similarity Calculation:

- Cosine Similarity: Measures the cosine of the angle between two vectors. A higher cosine similarity score indicates more similarity.

Sentence Scoring and Selection:

- Summing Scores: Each sentence is scored by summing its cosine similarity values with all other sentences.

- Top Sentences: The sentences with the highest scores are considered the most important and are selected for the summary.
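For readers who want to see what happens inside these steps, the sketch below reimplements the scoring with the standard library only. It assumes a smoothed IDF of ln((1 + n) / (1 + df)) + 1 with L2 normalization, which is my understanding of scikit-learn's default settings; the tokenizer is a simplification, and the function names are hypothetical, so scores may differ slightly from the library's.

```python
import math
import re
from collections import Counter

def tokenize(sentence):
    """Lowercase tokens of two or more word characters."""
    return re.findall(r"\b\w\w+\b", sentence.lower())

def tfidf_vectors(sentences):
    """One L2-normalised TF-IDF dict per sentence, using smoothed IDF."""
    docs = [Counter(tokenize(s)) for s in sentences]
    n = len(docs)
    # Document frequency: in how many sentences each term occurs.
    df = Counter(term for doc in docs for term in doc)
    vectors = []
    for doc in docs:
        vec = {t: tf * (math.log((1 + n) / (1 + df[t])) + 1) for t, tf in doc.items()}
        norm = math.sqrt(sum(v * v for v in vec.values())) or 1.0
        vectors.append({t: v / norm for t, v in vec.items()})
    return vectors

def cosine(u, v):
    """Dot product of two already-normalised sparse vectors."""
    return sum(weight * v.get(term, 0.0) for term, weight in u.items())

def rank_sentences(sentences):
    """Sentence indices ordered by summed similarity to all sentences."""
    vectors = tfidf_vectors(sentences)
    scores = [sum(cosine(u, v) for v in vectors) for u in vectors]
    return sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)

sentences = [
    "Text summarization condenses a piece of text to its essential information.",
    "There are two main types of summarization: extractive and abstractive.",
    "Extractive summarization selects key sentences directly from the source text.",
    "Abstractive summarization generates new sentences that convey the main points.",
]
order = rank_sentences(sentences)
print("Top sentence:", sentences[order[0]])
```

A sentence that shares vocabulary with many others gets a high total, so this scoring favors sentences that are central to the text rather than ones that introduce unique details.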

By using these tools, you can efficiently summarize large texts, making it easier to extract key information quickly. Now let’s explore how to implement abstractive summarization.

Implementing Abstractive Summarization

Introduction to Transformer Models

Transformer models are a type of deep learning model that has significantly advanced the field of natural language processing (NLP). These models can understand and generate human language with remarkable accuracy. Let’s briefly review three key transformer models: BERT, GPT, and T5.

Overview of BERT, GPT, and T5

1. BERT (Bidirectional Encoder Representations from Transformers)

What is BERT? BERT is designed to understand the context of words in a sentence. It reads text bidirectionally, meaning it looks at words to the left and right of the target word simultaneously to understand its full context.

How does BERT work?

- Bidirectional Understanding: Unlike previous models that read text sequentially (left-to-right or right-to-left), BERT reads the entire sequence of words at once. This helps it understand the meaning of a word based on its surrounding words.

- Training: BERT is trained on large amounts of text from books, articles, and websites. It learns to predict missing words in sentences and identify if one sentence logically follows another.
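The "predict missing words" objective can be illustrated by building a masked training example. This is a simplified sketch: the function name and the seeded random choice are assumptions for illustration, and real BERT pre-training applies additional rules when choosing and replacing tokens.

```python
import random

MASK = "[MASK]"  # placeholder token that hides a word from the model

def make_masked_example(tokens, mask_prob=0.15, seed=0):
    """Hide a random subset of tokens and remember what was hidden."""
    rng = random.Random(seed)
    masked, labels = [], {}
    for position, token in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(MASK)
            labels[position] = token  # the word the model must predict here
        else:
            masked.append(token)
    return masked, labels

tokens = "text summarization keeps the key ideas of a long document".split()
masked, labels = make_masked_example(tokens, mask_prob=0.3)
print(masked)
print(labels)
```

During training the model sees the masked sequence and is scored on how well it recovers the hidden words, using context on both sides of each gap.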

Applications of BERT:

- Text Classification: Categorizing emails as spam or not spam.

- Question Answering: Finding answers to questions within a document.

- Named Entity Recognition (NER): Identifying names of people, organizations, and locations in text.

2. GPT (Generative Pre-trained Transformer)

What is GPT? GPT focuses on generating coherent and contextually relevant text. It is a unidirectional model, meaning it generates text by predicting the next word in a sequence from left to right.

How does GPT work?

- Text Generation: GPT generates text by continuing from a given prompt. It predicts the next word based on the preceding words, creating fluent and human-like text.

- Training: GPT is pre-trained on vast amounts of text data. During training, it learns the patterns and structures of language, enabling it to generate text that is contextually appropriate and coherent.
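Left-to-right generation can be demonstrated with a toy bigram model that always picks the most frequent next word. This is only an analogy under strong simplifying assumptions: GPT uses a neural network conditioned on the whole preceding context, not word-pair counts, and the function names here are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram_model(corpus):
    """Count which word follows which across all sentences."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def generate(model, prompt, max_new_words=5):
    """Extend the prompt one word at a time, always taking the likeliest follower."""
    words = prompt.lower().split()
    for _ in range(max_new_words):
        followers = model.get(words[-1])
        if not followers:
            break  # no known continuation for the last word
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)

corpus = [
    "the model reads the text",
    "the model writes a summary",
    "a summary is short",
]
model = train_bigram_model(corpus)
print(generate(model, "writes"))  # prints "writes a summary is short"
```

Each new word depends only on what has already been written, which is the essence of the unidirectional approach described above.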

Applications of GPT:

- Text Completion: Writing assistants that help complete sentences or paragraphs.

- Chatbots: Creating conversational agents that can engage in human-like dialogue.

- Creative Writing: Generating stories, poems, or articles.

3. T5 (Text-To-Text Transfer Transformer)

What is T5? T5 converts every NLP problem into a text-to-text format. This means that both the input and output are always in text form, making it versatile for a wide range of tasks.

How does T5 work?

- Text-to-Text Framework: T5 treats all NLP tasks as text transformation tasks. For example, translating a sentence from English to French, summarizing a document, or answering questions are all treated as converting one piece of text into another.

- Training: T5 is trained on a diverse set of text-based tasks. It learns to perform various functions by transforming the input text into the desired output text.
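In practice, the text-to-text framing means the task is named inside the input string itself. The sketch below formats inputs with task prefixes of the kind used by the original T5 checkpoints; the helper `to_text_to_text` and the dictionary keys are hypothetical names chosen for this example.

```python
# Task prefixes in the style of the original T5 checkpoints.
TASK_PREFIXES = {
    "summarize": "summarize: ",
    "translate_en_fr": "translate English to French: ",
}

def to_text_to_text(task, text):
    """Prepend the task prefix and collapse extra whitespace in the input."""
    return TASK_PREFIXES[task] + " ".join(text.split())

print(to_text_to_text("summarize", "Text summarization condenses   long documents."))
print(to_text_to_text("translate_en_fr", "The summary is short."))
```

Because every task shares the same input and output format, one model can be trained on all of them without task-specific output layers.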

Applications of T5:

- Text Summarization: Condensing long documents into shorter summaries.

- Translation: Converting text from one language to another.

- Text Classification: Categorizing text into predefined labels.

Let’s see how these models are used for text summarization. They are useful not only for summarization but also for many other applications, such as chatbots, translation, and text classification. By using these models, developers and researchers can build more intelligent and responsive NLP systems.

Using Hugging Face Transformers

The Hugging Face Transformers library provides access to state-of-the-art models for various NLP tasks, including abstractive summarization. This library makes it easy to use powerful models like BART and T5 to summarize text effectively.

Installing and Importing the Library

First, you need to install the transformers library. You can do this using pip:

pip install transformers

Once installed, you can import the necessary components from the library.

Summarizing Text with a Pre-trained Model

Hugging Face provides a pipeline API that simplifies the process of using pre-trained models for tasks like summarization. Let’s see how you can use a pre-trained BART model for summarization.

Example code for summarizing text using a pre-trained BART model

from transformers import pipeline

# Initialize the summarization pipeline

summarizer = pipeline("summarization")

# The text you want to summarize

text = """

Text summarization is a process in natural language processing that involves condensing a piece of text to its essential information

while retaining its core meaning. This technique is incredibly valuable in an era of information overload, where users need quick access

to relevant information without sifting through lengthy documents.

"""

# Generate the summary

summary = summarizer(text, max_length=50, min_length=25, do_sample=False)

print("Abstractive Summary:", summary[0]['summary_text'])

Output

Abstractive Summary: Text summarization involves condensing a piece of text to its essential information while retaining its core meaning. This technique is valuable in an era of information overload.

Detailed Explanation

1. Installing the Transformers Library

To use the Hugging Face Transformers library, you first need to install it. Run the following command in your terminal or command prompt:

pip install transformers

This will download and install the library along with its dependencies.

2. Importing the Pipeline

The pipeline API in the Transformers library provides a simple interface for using pre-trained models. You import it like this:

from transformers import pipeline

3. Initializing the Summarization Pipeline

Create an instance of the summarization pipeline by specifying the task you want to perform:

summarizer = pipeline("summarization")

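This automatically loads a pre-trained model suitable for summarization tasks. When no model is specified, the library picks a default checkpoint, which at the time of writing is a distilled version of BART (Bidirectional and Auto-Regressive Transformers) fine-tuned on news articles. For reproducible results, pass an explicit model name to pipeline().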

4. Providing Text for Summarization

Define the text you want to summarize. In this example, the text is about the importance of text summarization in the context of information overload:

text = """

Text summarization is a process in natural language processing that involves condensing a piece of text to its essential information

while retaining its core meaning. This technique is incredibly valuable in an era of information overload, where users need quick access

to relevant information without sifting through lengthy documents.

"""

5. Generating the Summary

Use the summarizer to generate a summary of the text. You can specify parameters like max_length and min_length to control the length of the summary:

summary = summarizer(text, max_length=50, min_length=25, do_sample=False)

- max_length: The maximum length of the generated summary, measured in tokens rather than words.

- min_length: The minimum length of the generated summary, also measured in tokens.

- do_sample: If set to False, generation is deterministic (greedy or beam search). If set to True, the model samples from its predicted token probabilities, which can generate more diverse summaries.

6. Printing the Summary

Finally, print the generated summary. The summary is stored in a list of dictionaries, where each dictionary contains a key summary_text holding the summary text:

print("Abstractive Summary:", summary[0]['summary_text'])
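As a small sketch of working with that return value: the pipeline gives back a list with one dictionary per input text. The extract_summaries helper and the sample results list below are hypothetical, written by hand to mirror that structure; they are not real model output.

```python
from typing import Dict, List


def extract_summaries(results: List[Dict[str, str]]) -> List[str]:
    """Collect the 'summary_text' value from each pipeline result."""
    return [item["summary_text"].strip() for item in results]


# Hand-written stand-in for what the summarizer returns (not real model output)
results = [
    {"summary_text": " First summary. "},
    {"summary_text": "Second summary."},
]

for text in extract_summaries(results):
    print(text)
```

The same helper should work unchanged when you pass a list of texts to the summarizer, since each input then gets its own dictionary in the returned list.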

Why Use Hugging Face Transformers?

Ease of Use: The Hugging Face Transformers library simplifies the process of using complex transformer models. With just a few lines of code, you can use state-of-the-art models for summarization.

State-of-the-Art Models: The library provides access to the latest models like BART and T5, which are pre-trained on large datasets and fine-tuned for specific tasks like summarization.

Flexibility: The pipeline API is flexible and allows you to easily switch between different models and tasks, such as translation, text generation, and sentiment analysis.
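To illustrate switching models, here is a minimal sketch. The build_summarizer helper and the CANDIDATE_MODELS list are assumptions made for this example; the checkpoint names are publicly hosted models, but any summarization checkpoint could be substituted. The import sits inside the function so the snippet loads even before transformers is installed.

```python
from typing import Optional


def build_summarizer(model_name: Optional[str] = None):
    """Return a summarization pipeline, optionally for an explicit checkpoint."""
    # Imported lazily so this module can be loaded without transformers installed
    from transformers import pipeline

    if model_name is None:
        return pipeline("summarization")
    return pipeline("summarization", model=model_name)


# Example checkpoints to compare (an illustrative choice, not a recommendation)
CANDIDATE_MODELS = ["facebook/bart-large-cnn", "t5-small"]
```

Calling build_summarizer(CANDIDATE_MODELS[0]) downloads that checkpoint on first use, so expect the first call to take a while.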

Using Hugging Face Transformers for abstractive summarization is an easy and effective way to condense text to its essential points. With pre-trained models like BART, you can create high-quality summaries with little effort. This is especially useful today when quick access to relevant information is crucial. Whether you’re working on a Python data science project, text analysis, or any other NLP application, Hugging Face Transformers provides the tools you need for efficient text processing.

Evaluating Summarization Quality Using the ROUGE Metric

ROUGE (Recall-Oriented Understudy for Gisting Evaluation) is a set of metrics designed to evaluate the quality of summaries by comparing them to reference summaries. It measures how well a generated summary captures the important information present in the reference summaries.

Installing ROUGE

To use ROUGE for evaluation in Python, you need to install the rouge-score library:

pip install rouge-score

Using ROUGE for Evaluation

ROUGE provides several metrics such as ROUGE-1 (unigram overlap), ROUGE-2 (bigram overlap), ROUGE-L (longest common subsequence), etc. Here’s how you can use ROUGE to evaluate a generated summary against a reference summary:

Example code using ROUGE for evaluation

from rouge_score import rouge_scorer

# Initialize the ROUGE scorer

scorer = rouge_scorer.RougeScorer(['rouge1', 'rougeL'], use_stemmer=True)

# Example of a generated summary and a reference summary

generated_summary = "Text summarization condenses text."

reference_summary = "Summarization condenses text."

# Calculate ROUGE scores (the reference comes first, then the generated summary)

scores = scorer.score(reference_summary, generated_summary)

print(scores)

Output

{'rouge1': Score(precision=0.75, recall=1.0, fmeasure=0.8571428571428571), 'rougeL': Score(precision=0.75, recall=1.0, fmeasure=0.8571428571428571)}

Detailed Explanation

1. What is ROUGE?

ROUGE evaluates the quality of a summary by comparing it to one or more reference summaries. It measures overlap in n-grams (sequences of n words), word sequences, and word pairs between the generated summary and the reference summaries.
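To make n-gram overlap concrete, here is a minimal sketch in plain Python. The ngrams and ngram_overlap helpers and the two sample sentences are made up for this illustration, and tokenization is simplified to lowercase whitespace splitting, which is not exactly what the rouge-score library does.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return a Counter of the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def ngram_overlap(reference, candidate, n):
    """Count n-grams shared by both texts, clipped to the smaller count."""
    ref = ngrams(reference.lower().split(), n)
    cand = ngrams(candidate.lower().split(), n)
    return sum((ref & cand).values())


reference = "the cat sat on the mat"
candidate = "the cat lay on the mat"

print(ngram_overlap(reference, candidate, 1))  # shared unigrams: 5
print(ngram_overlap(reference, candidate, 2))  # shared bigrams: 3
```

Dividing the overlap by the number of n-grams in the candidate gives precision, and dividing by the number in the reference gives recall.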

2. Initializing the ROUGE Scorer

You create a RougeScorer object with specific metrics you want to use, such as ROUGE-1 (unigrams) and ROUGE-L (longest common subsequence). use_stemmer=True indicates that ROUGE should use stemming to handle variations of words (like plurals).

scorer = rouge_scorer.RougeScorer(['rouge1', 'rougeL'], use_stemmer=True)

3. Example of Generated and Reference Summaries

You define a generated summary and a reference summary that you want to evaluate:

generated_summary = "Text summarization condenses text."

reference_summary = "Summarization condenses text."

4. Calculating ROUGE Scores

You use the scorer.score() method to compute ROUGE scores. Its first argument is the reference (target) summary and its second is the generated (predicted) summary:

scores = scorer.score(reference_summary, generated_summary)

5. Interpreting the Scores

ROUGE provides scores for precision, recall, and F-measure (which balances precision and recall). For the example summaries, with the reference passed first:

- rouge1: Precision is 0.75 because 3 of the 4 unigrams in the generated summary also appear in the reference. Recall is 1.0 because all 3 unigrams of the reference appear in the generated summary. The F-measure, about 0.86, balances the two.

- rougeL: This metric considers the longest common subsequence of words. Here the longest common subsequence is the entire reference ("summarization condenses text"), so precision, recall, and F-measure take the same values as for rouge1.
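The longest-common-subsequence idea behind ROUGE-L can be sketched in plain Python. The lcs_length and rouge_l functions below are a simplified illustration, not the rouge-score implementation: they lowercase the text, drop periods, and skip stemming.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    table = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i, tok_a in enumerate(a, start=1):
        for j, tok_b in enumerate(b, start=1):
            if tok_a == tok_b:
                table[i][j] = table[i - 1][j - 1] + 1
            else:
                table[i][j] = max(table[i - 1][j], table[i][j - 1])
    return table[len(a)][len(b)]


def rouge_l(reference, generated):
    """Return (precision, recall, f1) from the LCS of lowercase word tokens."""
    ref = reference.lower().replace(".", "").split()
    gen = generated.lower().replace(".", "").split()
    lcs = lcs_length(ref, gen)
    precision = lcs / len(gen) if gen else 0.0
    recall = lcs / len(ref) if ref else 0.0
    f1 = 2 * precision * recall / (precision + recall) if lcs else 0.0
    return precision, recall, f1


precision, recall, f1 = rouge_l(
    "Summarization condenses text.",
    "Text summarization condenses text.",
)
print(precision, recall, round(f1, 3))
```

Precision divides the subsequence length by the length of the generated summary, while recall divides it by the length of the reference, which is why the two values can differ.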

Why Use ROUGE?

Objective Evaluation: ROUGE provides objective metrics to assess the quality of summarization models. It helps compare different models and fine-tune them for better performance.

Coverage and Quality: By measuring overlap in n-grams and word sequences, ROUGE captures both the coverage (how much relevant information is included) and the quality (how accurate the summary is compared to the references).

Application in Research and Development: Researchers and developers use ROUGE to evaluate new summarization techniques, benchmark their performance against existing models, and report results in academic papers or applications.

ROUGE metrics are essential for objectively evaluating the quality of text summarization. By understanding and using ROUGE in your NLP projects, you can quantify how well your summarization models perform against reference standards. This process allows you to continuously enhance your models and ensure they generate precise and informative summaries for various applications.

Other Evaluation Metrics

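Precision, recall, and F1-score are also useful on their own: precision measures how much of the generated summary is supported by the reference, recall measures how much of the reference the summary covers, and F1 combines the two into a single number. ROUGE reports each of its variants in terms of these three values.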

Advanced Techniques and Optimization

Fine-Tuning NLP Models for Summarization

Fine-tuning means taking a pre-trained language model and training it more on a specific dataset to make it better at a particular task, like text summarization. Hugging Face offers tools like the Trainer API to simplify this process, making it easier and more effective for NLP experts.

Steps Involved in Fine-Tuning

1. Preparing the Dataset

Before fine-tuning, you need a dataset that includes text and corresponding summaries. Popular datasets for summarization tasks include CNN/Daily Mail, which contains news articles paired with human-written summaries.

from datasets import load_dataset

# Load the CNN/Daily Mail dataset

dataset = load_dataset('cnn_dailymail', '3.0.0')

2. Initializing the Tokenizer and Model

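Next, you initialize the tokenizer and the pre-trained model. In this example, we use BART (Bidirectional and Auto-Regressive Transformers), a model designed for sequence-to-sequence tasks like summarization. Note that the facebook/bart-large-cnn checkpoint loaded below has already been fine-tuned on CNN/Daily Mail, so this walkthrough mainly illustrates the workflow; for your own project you would typically substitute your own dataset.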

from transformers import BartTokenizer, BartForConditionalGeneration

# Initialize tokenizer and model

tokenizer = BartTokenizer.from_pretrained('facebook/bart-large-cnn')

model = BartForConditionalGeneration.from_pretrained('facebook/bart-large-cnn')

3. Tokenizing the Dataset

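Tokenization converts the text data into numerical tokens that the model can understand. For training, the reference summaries (the highlights column) are tokenized as well and stored as labels, so the model has a target to learn from.

def tokenize_function(examples):

    # Tokenize the articles (model inputs)

    model_inputs = tokenizer(examples['article'], max_length=1024, truncation=True)

    # Tokenize the reference summaries and attach them as labels

    labels = tokenizer(text_target=examples['highlights'], max_length=128, truncation=True)

    model_inputs['labels'] = labels['input_ids']

    return model_inputs

tokenized_datasets = dataset.map(tokenize_function, batched=True)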
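To show what "numerical tokens" and truncation mean, here is a toy sketch. It uses a hypothetical whitespace tokenizer with a tiny hand-built vocabulary; BART's real tokenizer works on subword units and is considerably more involved.

```python
def build_vocab(texts):
    """Assign an integer id to every distinct lowercase word; id 0 is for unknown words."""
    vocab = {"<unk>": 0}
    for text in texts:
        for word in text.lower().split():
            vocab.setdefault(word, len(vocab))
    return vocab


def encode(text, vocab, max_length):
    """Map words to ids and cut the result to max_length, mimicking truncation=True."""
    ids = [vocab.get(word, vocab["<unk>"]) for word in text.lower().split()]
    return ids[:max_length]


vocab = build_vocab(["summaries save time", "models save effort"])
print(encode("models save time today", vocab, max_length=3))  # [4, 2, 3]
```

The fourth word is dropped by truncation, and a word missing from the vocabulary would map to id 0.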

4. Fine-Tuning the Model

Fine-tuning involves setting up training parameters, such as learning rate, batch size, and number of epochs, and then using these parameters to train the model on the tokenized dataset.

from transformers import DataCollatorForSeq2Seq, Trainer, TrainingArguments

# Define training arguments

training_args = TrainingArguments(

    output_dir='./results',

    evaluation_strategy="epoch",  # called eval_strategy in recent transformers releases

    learning_rate=2e-5,

    per_device_train_batch_size=2,

    per_device_eval_batch_size=2,

    num_train_epochs=3,

    weight_decay=0.01,

)

# Pad inputs and labels to a common length within each batch

data_collator = DataCollatorForSeq2Seq(tokenizer, model=model)

# Initialize Trainer with the model, training arguments, and datasets

trainer = Trainer(

    model=model,

    args=training_args,

    train_dataset=tokenized_datasets['train'],

    eval_dataset=tokenized_datasets['validation'],

    data_collator=data_collator,

)

# Start training

trainer.train()

Explanation of the Process

Dataset Preparation: Loading the CNN/Daily Mail dataset ensures you have a suitable source of text and summaries for training.

Tokenizer and Model Initialization: Using BART and its tokenizer sets up the model architecture and tokenization method necessary for summarization.

Tokenization: Tokenizing the dataset converts raw text into tokenized sequences that the model can process efficiently.

Fine-Tuning Setup: Configuring TrainingArguments defines where to save model checkpoints, how to evaluate performance, and parameters like learning rate and batch size.

Training with Trainer: The Trainer object manages the entire training process, iterating through the dataset for multiple epochs to optimize the model weights for the summarization task.
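To get a feel for how much work these settings imply, the number of optimizer steps can be estimated with a short calculation. The total_training_steps helper is hypothetical, and it assumes a single device with no gradient accumulation; the figure of 287,113 training examples is the commonly reported size of the CNN/Daily Mail training split and is used here as an assumption.

```python
import math


def total_training_steps(num_examples, batch_size, epochs):
    """Estimate optimizer steps for a single-device run without gradient accumulation."""
    steps_per_epoch = math.ceil(num_examples / batch_size)
    return steps_per_epoch * epochs


# Batch size 2 and 3 epochs, as in the training arguments above
print(total_training_steps(287_113, batch_size=2, epochs=3))  # 430671
```

With numbers like these, a full run is expensive, so it is often worth experimenting on a small subset of the data first.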

Practical Application Example

Summarizing News Articles

Here’s an example of how you can use the fine-tuned model to summarize a news article:

from transformers import pipeline

# Initialize the summarization pipeline with the fine-tuned model

summarizer = pipeline("summarization", model=model, tokenizer=tokenizer)

article = """

The latest advancements in artificial intelligence have led to significant breakthroughs in various fields.

Researchers have developed new algorithms that can predict disease outbreaks, optimize supply chains, and enhance autonomous driving systems.

These innovations are expected to have a profound impact on industries and improve the quality of life for people around the world.

"""

# Generate a summary using the fine-tuned model

summary = summarizer(article, max_length=50, min_length=25, do_sample=False)

print("Summary:", summary[0]['summary_text'])

Summarizing Legal Documents

For summarizing legal documents, you can use a similar approach but may need to fine-tune a model on a dataset of legal texts for better accuracy.

from transformers import pipeline

summarizer = pipeline("summarization")

legal_text = """

The contract stipulates that the seller will deliver the goods to the buyer on or before the 30th day of June 2024.

Failure to deliver the goods by this date will result in a penalty of $10,000. The buyer agrees to pay the total amount of $50,000

upon receipt of the goods, provided they meet the quality standards specified in Annex A.

"""

summary = summarizer(legal_text, max_length=50, min_length=25, do_sample=False)

print("Summary:", summary[0]['summary_text'])

Output (illustrative; the exact wording depends on the model and library version)

Summary: The contract stipulates that the seller will deliver the goods to the buyer by June 30, 2024, with a penalty of $10,000 for late delivery. The buyer agrees to pay $50,000 upon receipt of the goods.

Why Fine-Tuning?

Enhanced Performance: Fine-tuning adapts a pre-trained model to specific data, improving its ability to generate accurate and relevant summaries.

Customization: By fine-tuning on datasets like CNN/Daily Mail, models can learn domain-specific nuances and improve summarization quality.

Application Versatility: Fine-tuned models can be used across various domains for tasks such as generating concise reports or summarizing research papers.

Fine-tuning NLP models such as BART for summarization using Hugging Face’s Transformers library is a powerful method. It allows practitioners to use pre-existing knowledge in large-scale models and adapt them to specific needs, enhancing the quality and relevance of generated summaries. Whether for academic research, content curation, or information retrieval, fine-tuned models play a crucial role in advancing the capabilities of natural language processing applications.

Challenges and Best Practices in Text Summarization

Text summarization is powerful but comes with challenges that must be managed effectively for successful implementation. Here are some key challenges and practical tips to overcome them:

Handling Large Documents

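Challenge: Summarizing large documents can be difficult due to memory and computational limits. Transformer models also accept only a limited number of input tokens (1,024 for BART), so longer text is truncated unless you split it first. Processing extensive text all at once can strain systems and cause inefficiencies.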

Best Practices:

- Chunking: Break the document into smaller parts or sections. Process each part separately to reduce the load on memory and processing power.

- Incremental Summarization: Summarize each chunk independently and then combine the summaries. This method helps manage large volumes of text more efficiently.
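The two practices above can be sketched as follows. The chunk_words and summarize_long helpers are hypothetical, chunks are measured in words rather than model tokens for simplicity, and first_sentence is only a placeholder standing in for a real summarizer.

```python
from typing import Callable, List


def chunk_words(text: str, max_words: int) -> List[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def summarize_long(text: str, summarize: Callable[[str], str], max_words: int = 400) -> str:
    """Summarize each chunk separately, then join the partial summaries."""
    partial = [summarize(chunk) for chunk in chunk_words(text, max_words)]
    return " ".join(partial)


def first_sentence(chunk: str) -> str:
    """Placeholder summarizer: keep only the first sentence of the chunk."""
    return chunk.split(". ")[0].rstrip(".") + "."


document = "Alpha is first. Beta follows. Gamma is third. Delta ends."
print(summarize_long(document, first_sentence, max_words=5))
```

To use a real model, pass a function that calls your summarization pipeline on the chunk and returns the summary_text field of the first result. Splitting on sentence or section boundaries instead of a fixed word count would likely give more coherent chunks.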

Dealing with Domain-Specific Texts

Challenge: Texts specific to certain fields (like medical or legal documents) often contain specialized terms and structures that general models may struggle with.

Best Practices:

- Domain-Specific Fine-Tuning: Take a pre-trained model such as BART or T5 (or BERT for extractive approaches) and continue training it on data from the specific domain. This helps the model better understand and summarize text unique to that field.

- Custom Training: Train models from scratch or modify existing ones using datasets from the domain of interest. This approach improves the model’s ability to capture domain-specific nuances and enhance summarization accuracy.

Ensuring Coherence and Readability

Challenge: Summaries need to be coherent (logically structured) and readable (clear and understandable). Poorly generated summaries may lack structure or be hard to comprehend.

Best Practices:

- Use of Advanced Models: Employ transformer models that generate text (e.g., BART, T5, or GPT-style models), which are known for their contextual understanding and ability to produce coherent output.

- Fine-Tuning for Quality: Specifically fine-tune models for summarization tasks to produce high-quality summaries. This ensures accuracy and readability in the generated summaries.

- Evaluation Metrics: Use evaluation metrics such as ROUGE to objectively measure the quality of summaries. These metrics assess how well the generated summaries match with reference summaries in terms of relevance and overlap.

Practical Application of Best Practices

Example Scenario: Imagine summarizing a complex medical research paper. Here’s how you can apply these best practices:

- Data Preparation: Gather a dataset of medical research papers along with their summaries.

- Model Selection: Choose a sequence-to-sequence transformer such as BART or T5, ideally a checkpoint that has already been fine-tuned on medical literature.

- Summarization Process:

    - Chunking: Divide the research paper into sections like introduction, methodology, results, and discussion.

    - Domain-Specific Fine-Tuning: Fine-tune the selected model using a dataset of medical papers to adapt it to medical terminology and writing styles.

    - Coherence and Readability: Generate summaries for each section, ensuring they flow logically and are easy to understand.

    - Evaluation: Use ROUGE metrics to evaluate how well the generated summaries compare to the reference summaries.

These practices help optimize text summarization for various applications, ensuring that the generated summaries are accurate, coherent, and valuable for decision-making and information retrieval.

Future Trends in Text Summarization

Text summarization is evolving rapidly with technology advancements and increasing demand for efficient information processing. Here are some future trends that are shaping the field:

- Transformer Models and Beyond

    - Advancements: Models like BERT, GPT, and BART have greatly improved text summarization by understanding context better and generating more accurate summaries.

    - Future Developments:

        - Advanced Models: Researchers are working on even more sophisticated transformer models that can understand context deeply and summarize more precisely.

        - Enhanced Techniques: Techniques like fine-tuning and transfer learning will be refined further to make models perform better for specific summarization tasks.

- Real-time Summarization

    - Emerging Trend: Real-time summarization is becoming popular, especially in applications needing immediate synthesis of information, like news aggregation and live event updates.

    - Key Aspects:

        - Instant Generation: Systems will be designed to produce summaries instantly as new data or updates arrive.

        - Continuous Updates: Summaries will change dynamically based on real-time input to ensure they stay relevant and timely.

- Multimodal Summarization

    - Integration of Modalities: Future trends involve combining text summarization with other types of data such as images, videos, and audio to create comprehensive summaries.

    - Benefits and Applications:

        - Comprehensive Insights: Multimodal summaries give a complete picture by including diverse data types, enhancing understanding and decision-making.

        - Applications: This approach is useful in fields like multimedia content analysis, where summarizing across different modalities provides deeper insights than text alone.

Conclusion

Text summarization with Python is a practical way to apply natural language processing. With Python’s many libraries and tools, you can implement both extractive and abstractive summarization. Whether you’re summarizing news, legal documents, or scientific papers, Python offers the flexibility and library support to get good results.

Key Takeaways

- Summarizing text is crucial for condensing large amounts of information into short and useful summaries.

- Python is great for text summarization because it’s easy to use and has powerful libraries for NLP.

- Extractive summarization picks important sentences from the original text, while abstractive summarization creates new sentences.

- Hugging Face Transformers is a strong library for using advanced summarization models.

- Using metrics like ROUGE helps ensure the quality and relevance of summaries.

- Fine-tuning models on specific datasets can greatly improve how accurate the summaries are.

By following the advice and examples in this guide, you can effectively use Python for text summarization in many areas and situations.

External Resources

Here are some helpful resources for learning about text processing and NLP libraries in Python:

- NLTK Documentation: This is the official guide for NLTK, a popular Python library used for text processing.

- spaCy Documentation: Explore comprehensive documentation for spaCy, a fast and efficient library designed for NLP tasks.

- Gensim Documentation: Learn about Gensim, a library focused on topic modeling and analyzing document similarities.

- Hugging Face Transformers Documentation: Official documentation for Hugging Face Transformers, offering advanced models for NLP tasks, including summarization.

These resources provide valuable information and tutorials to help you get started and dive deeper into NLP with Python.
