How to Work with Different File Formats in Python

Introduction

Working with files is a key programming part, especially when handling data. Whether you’re new or experienced knowing how to handle different file formats in Python is important. From simple text files to more complex formats like CSV, JSON, and Excel, Python makes it easy to read, write, and manipulate files. But if you’re not familiar with the process, it can feel overwhelming.

In this post, I’ll guide you through working with various file formats in Python. We’ll cover the basics with practical examples, so by the end, you’ll feel confident managing almost any file type. Let’s get you comfortable with files to help smoothen your projects.

Whether you’re dealing with CSVs, JSON files, or images, this guide will show you the best practices. Stick around, and you’ll see how flexible and powerful Python can be with file handling.

Why File Formats Matter in Python

Python provides user-friendly tools for file handling, allowing developers to read, write, and manipulate various file types seamlessly. This capability is invaluable since modern applications often handle data from diverse sources such as APIs, databases, and user inputs.

By mastering Python File I/O, you gain the ability to process file formats like text files, CSVs, and JSON efficiently. This skill ensures that you can manage data operations without hiccups, keeping your workflow organized and adaptable for various data-driven tasks.

Common File Formats in Python

Let’s explore some of the most commonly used file formats in Python and how you can work with them effectively. Each format serves a different purpose, and by learning how to handle them, you’ll become more proficient in Python File I/O.

CSV (Comma-Separated Values)

CSV files are widely used for storing tabular data. Python’s csv module makes reading and writing CSV files simple. Many businesses use CSV for exporting or importing data from spreadsheets. It’s lightweight and easy to understand. For example:

import csv

# Reading a CSV file

with open('data.csv', 'r') as file:

reader = csv.reader(file)

for row in reader:

print(row)

# Writing to a CSV file

with open('output.csv', 'w', newline='') as file:

writer = csv.writer(file)

writer.writerow(['Name', 'Age', 'Profession'])

writer.writerow(['Alice', 30, 'Engineer'])

JSON (JavaScript Object Notation)

JSON is perfect for handling structured data and is often used in APIs. Python’s json library simplifies reading and writing JSON files. JSON files are both human-readable and machine-readable, making them extremely popular for data exchange.

import json

# Reading a JSON file

with open('data.json') as file:

data = json.load(file)

print(data)

# Writing to a JSON file

with open('output.json', 'w') as file:

json.dump(data, file)

XML (eXtensible Markup Language)

XML is another format used for storing structured data, often in web services. Although less popular than JSON today, it’s still important to know. Python provides the xml.etree.ElementTree library for handling XML files.

import xml.etree.ElementTree as ET

tree = ET.parse('data.xml')

root = tree.getroot()

for child in root:

print(child.tag, child.attrib)

Excel Files

Excel files are common in business environments. Python’s pandas library allows you to work with Excel files easily. You can read, modify, and write Excel data using simple functions, which makes it a popular choice when handling larger datasets.

import pandas as pd

# Reading an Excel file

df = pd.read_excel('data.xlsx')

print(df)

# Writing to an Excel file

df.to_excel('output.xlsx', index=False)

Text Files

Text files are the most basic file format. Python’s open() function lets you read and write text files without much complexity. This format is great for simple tasks like logs or configuration files.

# Reading a text file

with open('file.txt', 'r') as file:

content = file.read()

print(content)

# Writing to a text file

with open('output.txt', 'w') as file:

file.write("This is an example text.")

Binary Files

Binary files store data in a more efficient format than text files. These are perfect for non-text data like images or videos. To handle binary files, Python allows opening the file in binary mode ('rb' for reading, 'wb' for writing).

# Reading a binary file

with open('image.png', 'rb') as file:

content = file.read()

print(content)

# Writing to a binary file

with open('output.bin', 'wb') as file:

file.write(content)

Why You Should Know These File Formats

By mastering these file formats, you’ll be better equipped to handle a variety of data types. For instance, CSVs and Excel files are very common in data science, while web developers often work with JSON and XML. Understanding how to work with each format can save you time and help avoid unnecessary errors.

Comparison of File Formats

Here’s a quick comparison of key features in each file format:

| File Format | Strengths | Weaknesses |

|---|---|---|

| CSV | Easy to use, lightweight, human-readable | Limited to tabular data |

| JSON | Great for structured data, used widely in APIs | Not ideal for very large datasets |

| XML | Flexible, supports complex structures | More verbose than JSON |

| Excel | Familiar format, used in business | Requires external libraries for handling |

| Text | Simple, universal format | Not ideal for structured data |

| Binary | Efficient for large files (media, images) | Not human-readable, harder to manipulate |

Must Read

- How to Check if Dictionary Values Are Sorted in Python

- Check If a Tuple Is Sorted in Python — 5 Methods Explained

- How to Check If a List Is Sorted in Python (Without Using sort()) – 5 Efficient Methods

- How Python Searches Data: Linear Search, Binary Search, and Hash Lookup Explained

- I Implemented Every Sorting Algorithm in Python — The Results Nobody Talks About (Benchmarked on CPython)

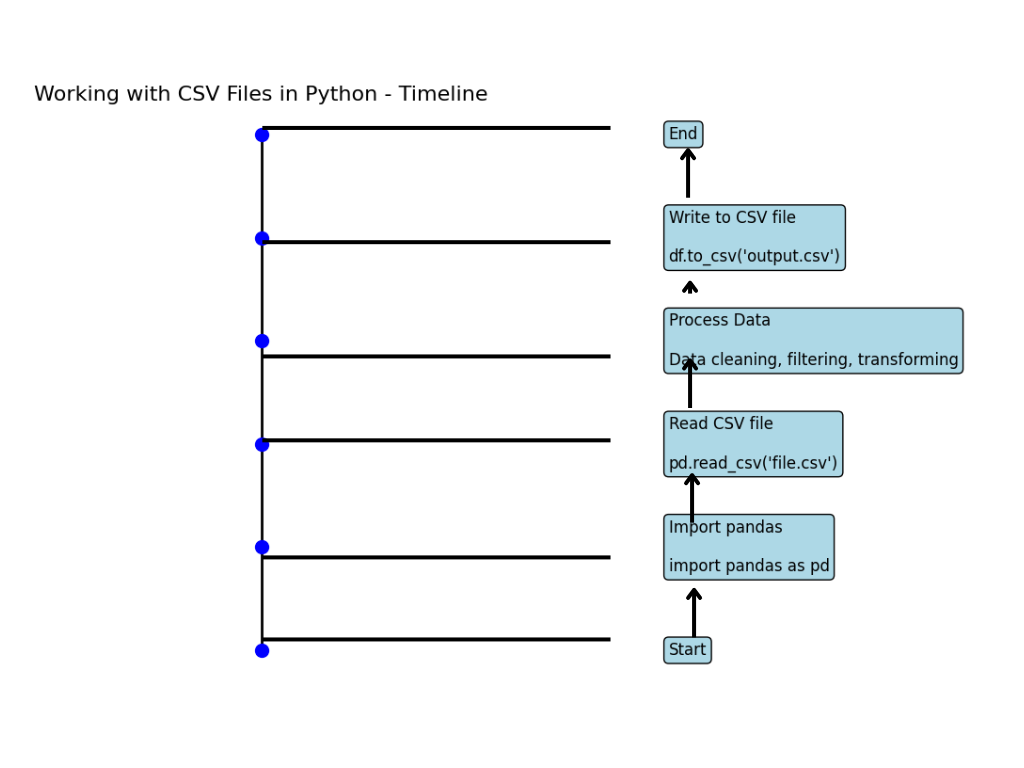

Working with CSV Files in Python

What Are CSV Files?

CSV (Comma Separated Values) is a simple and widely-used format for storing structured data. Each line in a CSV file represents a data record, with fields separated by commas. Its simplicity makes it highly accessible for both humans and machines.

You’ll encounter CSV files in many scenarios, such as exporting data from spreadsheets, exchanging information between applications, and managing datasets in databases. Its universal compatibility and ease of use make it an essential format in data handling and analysis.

Where is CSV Used?

- Data analysis: CSV is commonly used in data science to import and export large datasets.

- Web applications: APIs often send or receive data in CSV format because it’s lightweight and easy to handle.

- Spreadsheets: Programs like Microsoft Excel and Google Sheets allow data export in CSV, making it simple to move data between tools.

One of the main reasons CSV files are popular is their simplicity. You don’t need complex tools to manage them, just basic Python skills will do the job. Plus, CSV is human-readable and platform-independent.

How to Read CSV Files in Python

Reading CSV files in Python is incredibly easy. There are two main ways to do this: using the built-in csv module or using pandas, a powerful data analysis library.

Reading CSV with Pandas

The pandas library simplifies file handling. With a single line of code, you can load an entire CSV file into a DataFrame, which makes it easier to manipulate and analyze the data.

import pandas as pd

# Reading CSV file using pandas

df = pd.read_csv('file.csv')

# Display the first 5 rows of the DataFrame

print(df.head())

Why Use Pandas?

- Ease of use: It allows you to load and explore data with just a few commands.

- Powerful data manipulation: You can filter, group, and perform complex operations on the data.

If you’re dealing with large datasets or need advanced features like missing value handling, pandas is the best tool.

Reading CSV with Python’s Built-in CSV Module

If you don’t need the advanced functionality that pandas offers, Python’s built-in csv module can handle CSV files efficiently.

import csv

# Open and read the CSV file

with open('file.csv', 'r') as file:

reader = csv.reader(file)

# Print each row

for row in reader:

print(row)

The csv module gives you more control over how the data is read. It’s lightweight and part of Python’s standard library, making it a good choice for smaller or less complex tasks.

Benefits of Using CSV in Python:

- Human-readable: Anyone can open a CSV file in a text editor or spreadsheet.

- Cross-platform: CSV works across different systems without compatibility issues.

- Easy to manipulate: Python’s built-in tools and third-party libraries make it easy to process CSV files.

How to Write CSV Files in Python

Just as reading CSV files is simple, writing them is also simple. You can use either the pandas library or Python’s csv module.

Writing CSV with Pandas

Writing data to a CSV file using pandas is as easy as reading it.

import pandas as pd

# Writing DataFrame to CSV

df.to_csv('output.csv', index=False)

In this example, the to_csv() function exports the DataFrame to a CSV file. The index=False argument prevents pandas from writing row numbers to the file, keeping it clean and structured.

Writing CSV with Python’s CSV Module

For smaller datasets or when you need more control over formatting, Python’s csv module can also be used to write CSV files.

import csv

# Writing data to CSV using csv module

with open('output.csv', 'w', newline='') as file:

writer = csv.writer(file)

writer.writerow(['Name', 'Age', 'Profession'])

writer.writerow(['Alice', 30, 'Engineer'])

writer.writerow(['Bob', 25, 'Doctor'])

This approach is useful when you need to manually control the format of the output or when working in environments with limited resources.

Handling Large CSV Files in Python

When working with large CSV files, loading everything into memory might cause your program to slow down or even crash. Python provides chunking to handle such scenarios. By reading the file in smaller parts, you can process it more efficiently without consuming too much memory.

Using Pandas for Chunking

Pandas allows you to read large CSV files in chunks, which reduces memory usage.

import pandas as pd

# Reading CSV in chunks

chunk_size = 1000 # Number of rows per chunk

for chunk in pd.read_csv('large_file.csv', chunksize=chunk_size):

print(chunk.head())

With this method, you load and process small portions of the file at a time. This makes it easier to work with large datasets without overwhelming your system.

Using CSV Module for Chunking

While pandas is more efficient for chunking, you can also do this with the csv module by reading the file line by line.

import csv

# Reading large CSV file in chunks using csv module

with open('large_file.csv', 'r') as file:

reader = csv.reader(file)

for index, row in enumerate(reader):

if index % 1000 == 0: # Process every 1000 rows

print(row)

This approach gives you control over exactly how much data you’re processing at a time, making it useful for optimizing performance.

Optimizing Memory Usage for Large CSV Files

When handling large files, it’s important to keep memory usage in check. Here are some techniques:

- Use chunks: As shown above, reading data in smaller chunks reduces memory load.

- Select specific columns: When you only need certain columns, you can avoid loading the entire file. In

pandas, this can be done with theusecolsargument.

df = pd.read_csv('large_file.csv', usecols=['Column1', 'Column2'])

Use generators: Instead of loading all data into memory at once, generators allow you to process rows one at a time.

def csv_generator(file_path):

with open(file_path, 'r') as file:

reader = csv.reader(file)

for row in reader:

yield row

With these techniques, you can handle large CSV files in Python without running into memory issues.

Summary of Techniques for Handling CSV Files

Here’s a quick summary of the different approaches you can take when working with CSV files in Python:

| Task | Tool | Code Example |

|---|---|---|

| Reading small CSV files | pandas | df = pd.read_csv('file.csv') |

| Reading large CSV files | pandas (chunking) | for chunk in pd.read_csv('file.csv', chunksize=1000): |

| Writing to CSV | pandas | df.to_csv('output.csv', index=False) |

| Reading small CSV files | csv module | with open('file.csv', 'r') as file: reader = csv.reader(file) |

| Writing to CSV | csv module | with open('output.csv', 'w') as file: writer = csv.writer(file) |

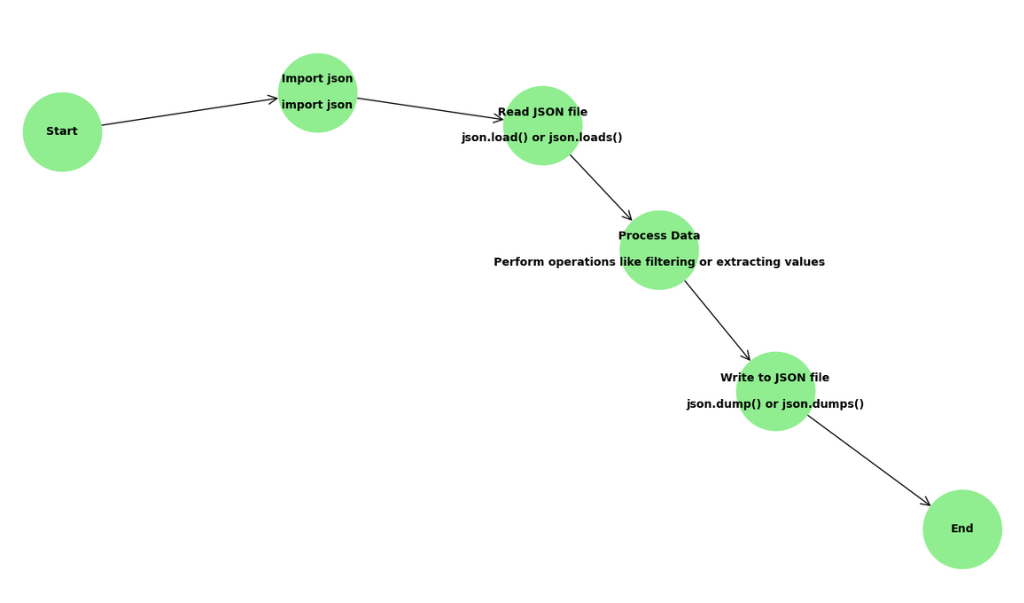

Working with JSON Files in Python

What is JSON and Why It’s Popular?

JSON, which stands for JavaScript Object Notation, is a lightweight data format commonly used for web development and data transfer. Despite its name, it isn’t limited to JavaScript—it’s supported in almost every programming language, including Python. JSON’s popularity stems from its simplicity and readability, making it an excellent format for exchanging data between servers and web applications.

Why is JSON so widely used in Python applications? It’s because JSON is flexible. Unlike other file formats, it supports complex data structures, such as lists and dictionaries, without adding much overhead. This makes it easy to use when working with APIs or when storing data in configurations.

Key Benefits of JSON:

- Human-readable: The structure of JSON makes it easy for both developers and non-developers to read.

- Lightweight: It transfers data efficiently, especially when compared to XML.

- Flexibility: JSON can handle various types of data, from numbers and strings to arrays and nested objects.

In the modern world of web development and data exchange, JSON has become the go-to choice because it’s fast and adaptable. Whether you’re building an API or simply transferring data between your programs, JSON fits the bill perfectly.

How to Read JSON Files in Python

Python provides a built-in json module that makes reading and working with JSON files simple and intuitive. With just a few lines of code, you can load JSON data into Python objects like dictionaries, which you can manipulate easily.

Here’s how you can read a JSON file in Python:

import json

# Opening and reading a JSON file

with open('file.json', 'r') as f:

data = json.load(f)

# Printing the loaded JSON data

print(data)

In this example, the json.load() function reads the JSON file and converts it into a Python dictionary. This process is known as deserialization—transforming a JSON string into a Python object.

Why Use JSON in Python Applications?

- It is widely used in web APIs: Many web applications and services return data in JSON format, making it easy to fetch and process in Python.

- It’s great for configuration files: JSON is often used to store configuration settings for applications, as its structure allows for easy modification and understanding.

How to Write JSON Files in Python

Writing data to a JSON file is just as simple as reading it. Python’s json module allows you to serialize Python objects into JSON format, which can then be saved as a file.

Here’s an example of how to write (serialize) data to a JSON file:

import json

# Sample data to be written as JSON

data = {

'name': 'John Doe',

'age': 28,

'profession': 'Software Developer'

}

# Writing data to a JSON file

with open('output.json', 'w') as f:

json.dump(data, f, indent=4)

The json.dump() method converts the Python dictionary data into a JSON string and writes it to the file output.json. The indent=4 argument formats the JSON file to be more readable by adding indentation.

Advantages of Using JSON in Python

Here’s why JSON serialization and deserialization are essential for File Formats in Python:

- Simplicity: Python’s built-in

jsonmodule makes handling JSON files a breeze. - Flexibility: JSON can handle different types of data, from simple key-value pairs to more complex nested structures.

- Readability: Both human and machine-readable, JSON is easy to debug and parse.

Use Cases of JSON in Python:

- Web APIs: When you interact with web services or third-party APIs, data is often returned in JSON format.

- Data storage: JSON is commonly used to store configurations, logs, or settings for applications.

- Data exchange: In Python applications where data needs to be shared or communicated between components or systems, JSON simplifies the process.

Handling Complex JSON Files in Python

One of the strengths of JSON is its ability to store nested structures, like lists within dictionaries. Here’s an example of how you can read and manipulate more complex JSON files:

{

"person": {

"name": "Alice",

"age": 32,

"skills": ["Python", "JavaScript", "SQL"]

}

}

To handle this in Python:

import json

# Reading complex JSON data

with open('complex_file.json', 'r') as f:

data = json.load(f)

# Accessing nested data

print(data['person']['skills'])

Here, the json.load() function still works, and you can access nested structures using simple indexing.

Optimizing JSON Handling in Python

While JSON is lightweight and easy to use, there are a few optimizations you can apply when dealing with larger or more complex files:

- Compact encoding: If file size is a concern, you can write JSON without unnecessary whitespace using

indent=Noneorseparators=(',', ':').

json.dump(data, f, separators=(',', ':'))

Partial reading: For very large files, it might be better to read the file in chunks or process it incrementally.

Working with XML Files in Python

Understanding XML File Format

XML (eXtensible Markup Language) is a structured format designed to store and transport data. Unlike JSON, which is more focused on simplicity and speed, XML is used when you need to represent hierarchical or nested data with a more formal structure. XML is commonly seen in web services, data exchange between systems, and even in configuration files. It offers tag-based structure similar to HTML, making it easy to read and organize.

An example of an XML structure:

<library>

<book id="1">

<title>Python Programming</title>

<author>John Doe</author>

</book>

<book id="2">

<title>Data Science</title>

<author>Jane Doe</author>

</book>

</library>

Each piece of information is wrapped in tags, making XML a great choice for representing hierarchical data. The structure is more formal than JSON and allows you to define custom tags, which is why it’s still used in many industries like publishing, finance, and healthcare.

While JSON is more popular today due to its simplicity, XML’s structured nature makes it powerful when data needs strict organization.

How to Read and Parse XML Files in Python

Python makes it simple to work with XML format using libraries like ElementTree, which is part of Python’s standard library. You can easily parse an XML file, extract the data, and work with it just like a Python object.

Here’s an example using ElementTree to read and parse an XML file:

import xml.etree.ElementTree as ET

# Parse the XML file

tree = ET.parse('file.xml')

root = tree.getroot()

# Loop through the XML tree structure

for child in root:

print(child.tag, child.attrib)

In this example:

- The

ET.parse()function reads the XML file. getroot()grabs the root element of the tree, allowing you to traverse it.- The loop iterates through the child nodes of the root, printing their tags and attributes.

This makes parsing XML in Python efficient and straightforward. You can use similar methods to extract specific data or process nested structures.

Alternative: Using lxml for Advanced Parsing

For more complex XML structures, or when performance is a concern, you can use the lxml library. It is faster and provides more features compared to ElementTree.

Here’s an example of using lxml:

from lxml import etree

# Parse XML file

tree = etree.parse('file.xml')

# Get the root of the tree

root = tree.getroot()

# Find and print specific elements

for book in root.findall('book'):

print(book.find('title').text, book.find('author').text)

lxml offers more powerful querying capabilities (like XPath support), making it the go-to library for complex XML parsing in Python applications. If you’re working with massive or deeply nested XML files, lxml provides better performance than ElementTree.

How to Write XML Files in Python

Writing to XML is just as important as reading from it. In Python, you can create new XML files or modify existing ones using ElementTree. Here’s how you can create an XML structure and write it to a file:

import xml.etree.ElementTree as ET

# Create the root element

root = ET.Element("library")

# Create a child element

book = ET.SubElement(root, "book")

book.set("id", "1")

# Add sub-elements

title = ET.SubElement(book, "title")

title.text = "Python Programming"

author = ET.SubElement(book, "author")

author.text = "John Doe"

# Write the tree to an XML file

tree = ET.ElementTree(root)

tree.write("output.xml", xml_declaration=True, encoding='utf-8', method="xml")

In this example:

- The

ET.Element()function creates the root element. ET.SubElement()is used to add children to the root.- Finally,

ET.ElementTree()writes the entire structure to a file namedoutput.xml.

When working with File Formats in Python, you’ll often need to create or edit structured data, and writing XML files is an essential skill. It allows you to store information in a consistent and readable way, which is important for data exchange between different systems.

XML’s Use in Modern Python Applications

Though JSON has gained more popularity for data transfer and storage, XML still holds a strong position in industries like:

- Publishing: For managing documents and metadata.

- Finance: When dealing with complex data that needs strict validation.

- Healthcare: For exchanging data in formats like HL7 or CCD.

If you’re working with web services that use SOAP or large enterprise systems, you’ll likely encounter XML. Knowing how to handle this format in Python will give you an edge in dealing with legacy systems or specific industry standards.

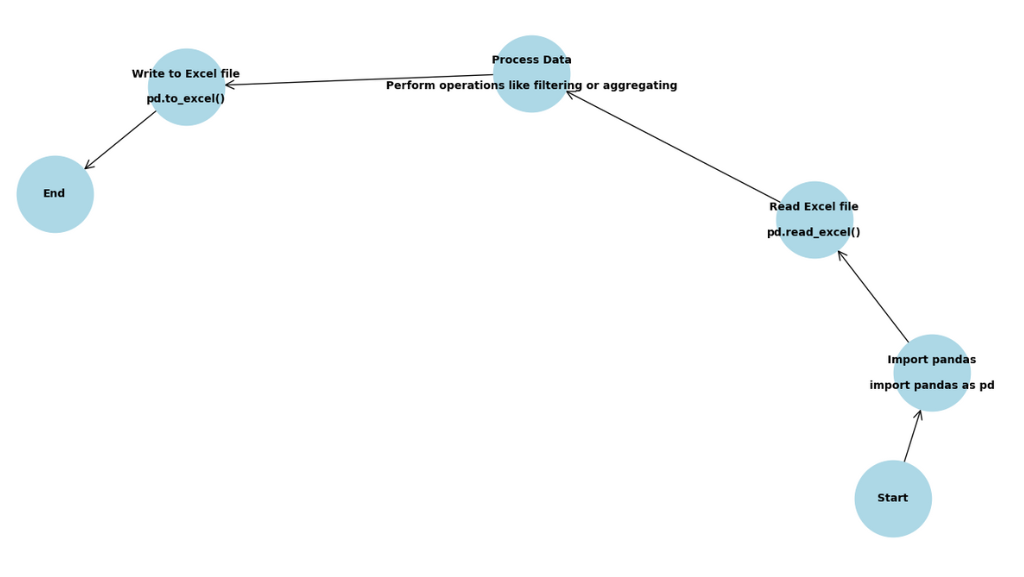

Working with Excel Files in Python

Excel files are widely used in data management, making them a critical format to handle in Python applications. Luckily, Python provides powerful tools, like the pandas library, that make it easy to read from and write to Excel files. Whether you’re working with large datasets, creating reports, or processing user input, understanding how to work with Excel file formats in Python is essential.

Reading Excel Files in Python with Pandas

Reading Excel files is a common task in data analysis, and pandas is the go-to library for this in Python. The pandas.read_excel() function allows you to load an Excel file into a DataFrame, a two-dimensional, tabular data structure in Python. This method works well with different Excel formats, including .xls and .xlsx.

Here’s how you can read an Excel file:

import pandas as pd

# Reading the Excel file

df = pd.read_excel('data.xlsx')

# Display the first 5 rows

print(df.head())

In this example:

- The

pd.read_excel()function loads the file'data.xlsx'into a pandas DataFrame. - You can display the first few rows with

df.head()to get a glimpse of the data.

Why Use Pandas for Excel?

Pandas is an excellent choice for reading Excel files because:

- Simple syntax: Loading data with just one line.

- Data structure: Pandas automatically converts the data into a DataFrame, which is perfect for analysis and manipulation.

- Handling large datasets: It efficiently manages large files, allowing you to work with millions of rows.

If you’re dealing with multiple sheets in an Excel file, pandas can easily handle that too:

# Reading a specific sheet

df_sheet = pd.read_excel('data.xlsx', sheet_name='Sheet2')

# Show data from a different sheet

print(df_sheet.head())

This code allows you to load data from specific sheets, making reading Excel in Python flexible and powerful.

Writing Data to Excel in Python

Once you’ve processed your data, you might need to write it back to an Excel file. Again, pandas makes this simple with its to_excel() function. Whether you’re exporting the results of an analysis or saving modified data, this method will handle it with ease.

Here’s how you can write data to an Excel file:

# Writing the DataFrame to Excel

df.to_excel('output.xlsx', index=False)

In this example:

df.to_excel()exports your DataFrame into an Excel file calledoutput.xlsx.- The

index=Falseargument ensures that row indices are not written to the Excel file (which you typically don’t need).

Advantages of Using Pandas for Excel:

- Simple writing: One line to export data.

- Multiple sheets: You can write to multiple sheets in a single Excel file if needed.

- Customization: Pandas allows you to adjust formatting, add conditional logic, and manage large datasets with ease.

For example, to write data to multiple sheets:

with pd.ExcelWriter('output_multisheet.xlsx') as writer:

df.to_excel(writer, sheet_name='Sheet1')

df_sheet.to_excel(writer, sheet_name='Sheet2')

In this case:

- You create an

ExcelWriterobject to handle writing to multiple sheets. - The same data is written to two different sheets in the

output_multisheet.xlsxfile.

Handling Large Excel Files in Python

When dealing with very large Excel files, performance and memory usage can become concerns. Pandas can handle this through chunking, which breaks down large datasets into manageable chunks for processing.

Here’s how to read a large Excel file in chunks:

chunk_size = 1000 # Number of rows per chunk

for chunk in pd.read_excel('large_data.xlsx', chunksize=chunk_size):

print(chunk.head()) # Process each chunk

In this example:

- The file is read in chunks of 1,000 rows at a time, reducing memory usage.

Chunking is especially useful when working with massive files that would otherwise be too large to load into memory all at once. This technique ensures that processing large Excel files in Python remains efficient, even with limited resources.

Why Excel Files Matter in Python

Excel is widely used for managing and organizing data in many industries. Understanding how to read and write Excel files is essential for:

- Data Analysis: Working with reports or customer data.

- Automation: Automating Excel-based tasks and workflows.

- Data Sharing: Exchanging data between teams or applications.

The pandas library makes this process simple, but you can also use other libraries like openpyxl or xlrd for specific use cases. While pandas is a popular choice, these other libraries can offer more control over formatting, formulas, and other Excel-specific features.

For example, with openpyxl, you can directly manipulate Excel files without needing to convert them into a DataFrame. This is useful when working with complex formatting or Excel formulas.

Summary of Key Functions for Excel Handling in Python

| Task | Code Example |

|---|---|

| Reading Excel Files | df = pd.read_excel('data.xlsx') |

| Writing to Excel Files | df.to_excel('output.xlsx') |

| Reading Large Files | for chunk in pd.read_excel('data.xlsx', chunksize=1000): |

| Writing Multiple Sheets | with pd.ExcelWriter('output_multisheet.xlsx') as writer: |

Handling Excel file formats in Python is a critical skill for anyone working with data. With tools like pandas, you can effortlessly read, write, and manipulate Excel data in Python, enhancing your workflows and improving efficiency.

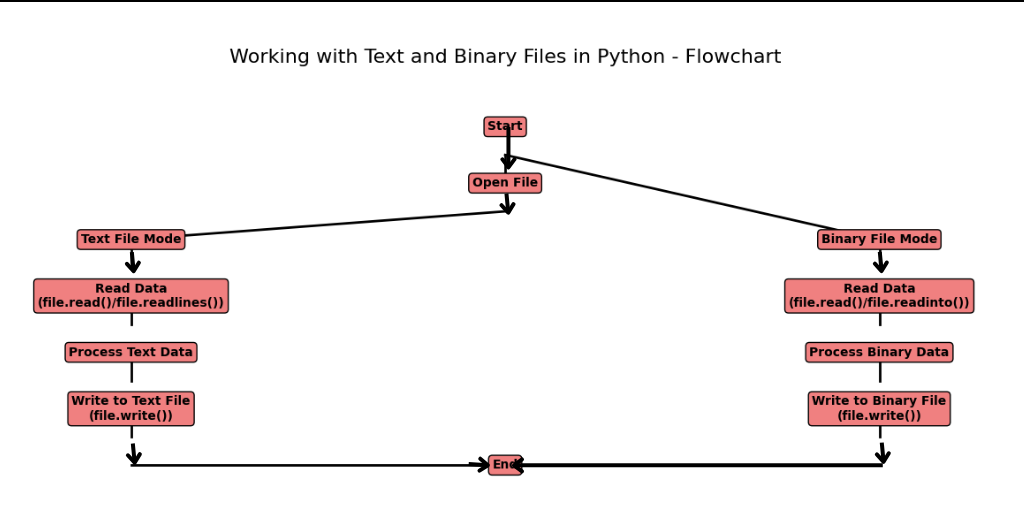

Working with Text and Binary Files in Python

Handling files is an essential skill when working with data in Python. Whether you’re working with simple text files or complex binary files, Python provides simple methods to read from and write to these files. In this section, we’ll break down the concepts of working with both text and binary file formats in Python, using simple explanations and examples to help you understand how to manage data in these different file types.

How to Read and Write Text Files in Python

Text files store data in human-readable format, and working with them is quite common in programming tasks. Python’s built-in open() function allows you to easily read text files in Python or write to them. Let’s explore how to do both.

Reading a Text File

The most basic way to read a text file is by using open() in reading mode ('r'):

# Reading a text file

with open('example.txt', 'r') as file:

content = file.read()

print(content)

Here’s what happens:

- The

open()function opens the file'example.txt'in read mode. - The

withstatement ensures that the file is automatically closed after the block of code is executed. - The

file.read()method reads the entire content of the file and stores it in thecontentvariable.

If the file is large, you might want to read it line by line:

# Reading a file line by line

with open('example.txt', 'r') as file:

for line in file:

print(line.strip())

Why use line-by-line reading?

When working with large files, it’s more efficient to read them line by line to avoid loading the entire file into memory at once. This can prevent memory overloads, especially with big text files.

Writing to a Text File

Writing to text files is just as simple. You open the file in write mode ('w'), and if the file doesn’t exist, Python will create it for you:

# Writing to a text file

with open('example.txt', 'w') as file:

file.write("This is a new line of text.")

- In this case,

open()creates or opens the file'example.txt'in write mode. - The

file.write()method writes the specified string to the file.

If you want to append text instead of overwriting the file, use append mode ('a'):

# Appending to a text file

with open('example.txt', 'a') as file:

file.write("\nThis is an additional line of text.")

Key Points:

'r'mode is for reading text files.'w'mode is for writing (and overwriting) files.'a'mode allows appending new data without erasing existing content.

How to Read and Write Binary Files in Python

While text files store data in a human-readable format, binary files store data in binary (0s and 1s) format. Handling binary files in Python is useful when working with non-text data like images, videos, or executables.

Reading Binary Files

To read binary files, such as images, we use the open() function with the 'rb' mode (read binary):

# Reading a binary file

with open('image.jpg', 'rb') as file:

binary_data = file.read()

print(binary_data[:10]) # Display the first 10 bytes

Here, 'rb' tells Python to open the file in binary read mode. The file.read() method reads the entire binary data of the file, which can then be processed or stored.

Why read binary files?

Reading binary files is essential when you’re dealing with file formats that are not human-readable, such as images or audio files, where data is stored in raw binary form.

Writing to Binary Files

Writing to binary files works similarly, using 'wb' mode (write binary). For example, if you wanted to create a new binary file or modify an existing one:

# Writing to a binary file

with open('output_image.jpg', 'wb') as file:

file.write(binary_data)

In this example:

- The file is opened in binary write mode (

'wb'). - The

binary_datais written directly to the file.

Binary file handling allows us to process non-text data effectively, making it an essential tool when working with multimedia formats or binary file formats in Python.

Understanding the Differences: Text vs. Binary Files

Here’s a quick breakdown of the key differences between text and binary file formats in Python:

| Feature | Text Files | Binary Files |

|---|---|---|

| Data Format | Human-readable (ASCII/Unicode) | Machine-readable (binary data) |

| Access Mode | 'r', 'w', 'a' | 'rb', 'wb', 'ab' |

| Common Use Cases | Logs, configuration files | Images, videos, executables |

| Example | .txt, .csv | .jpg, .png, .exe |

Important Notes:

- Text files are easier to read and manipulate manually, while binary files are optimized for efficient storage and transfer of data.

- Always use the appropriate mode (

'r','w','rb','wb') based on the type of file you are working with.

Practical Uses of Text and Binary Files

Working with different file formats in Python, such as text and binary files, is a common need across various applications. Here are a few practical scenarios where you might encounter these file types:

- Text files: Used for logging system events, writing configuration files, or saving processed data in readable formats like CSV.

- Binary files: Used in multimedia applications, saving and reading image, audio, or video files, or even working with hardware drivers and firmware updates.

Real-World Example: Combining Text and Binary Files

Sometimes, you might need to work with both text and binary files within a single project. For instance, when building a data pipeline that processes file formats in Python, you might log events in a text file and process images in a binary format.

# Logging an event in a text file and processing an image (binary)

with open('log.txt', 'a') as log_file, open('image.jpg', 'rb') as img_file:

log_file.write("Processing started...\n")

binary_data = img_file.read()

# Perform operations on binary_data (e.g., image processing)

log_file.write("Processing completed.\n")

This example illustrates how you can handle different file formats in Python within the same context, which is a common requirement in complex applications.

Handling Other File Formats in Python

In the modern world of data processing, it’s essential to handle a variety of file formats efficiently. Python, being a highly flexible language, provides libraries that make it easier to work with a wide range of file types, including images and audio. In this section, we’ll cover how to work with image files using the Pillow library and how to process audio files using librosa. These libraries make managing multimedia files effortless and can be integrated seamlessly into your projects, whether you’re building data pipelines or developing applications that require file formats in Python.

Working with Image Files in Python

Images are a common type of data you might handle, whether you’re working on web development, machine learning, or even simple data visualization projects. Python’s Pillow library is a popular tool for processing image files in Python, offering a range of features to open, manipulate, and save images in formats like JPEG, PNG, and more.

Opening an Image File

To start working with images, you’ll first need to install the Pillow library. This can be done via pip:

pip install Pillow

Once installed, opening an image file in Python is incredibly simple:

from PIL import Image

# Open an image file

image = Image.open('sample_image.jpg')

image.show()

In this example:

- The

Image.open()function opens the image file, andimage.show()displays it. - This approach works for common image formats in Python, such as JPEG, PNG, and GIF.

Resizing and Saving an Image

One of the most common tasks is resizing an image, which can be done easily using the resize() method. You can also save the image in a different format if needed:

# Resizing an image

resized_image = image.resize((200, 200))

# Save the resized image

resized_image.save('resized_image.png')

- The

resize()method allows you to define new dimensions, such as(200, 200). - The image is saved as a PNG file using the

save()method.

Converting Image Formats

Sometimes, you may need to convert an image from one format to another. The Pillow library makes this process easy:

# Convert JPEG to PNG

image.save('new_image.png')

With just one line of code, you can convert between various formats, making handling image files in Python.

Working with Audio Files in Python

Audio files come in different formats, such as WAV and MP3, and are commonly used in various applications, from game development to podcast editing. Python’s librosa library is a powerful tool for handling audio files in Python, especially when it comes to sound file processing and analysis.

Installing Librosa

Before you can start working with audio files, you’ll need to install the librosa library, which can be done using pip:

pip install librosa

Loading an Audio File

The first step in processing an audio file is to load it into Python. Here’s how you can do this using librosa:

import librosa

# Load an audio file

audio_data, sample_rate = librosa.load('sample_audio.wav', sr=None)

print(f"Sample Rate: {sample_rate}")

In this example:

- The

librosa.load()function loads the audio file, and thesr=Noneargument ensures the sample rate is preserved. - The

audio_datavariable stores the actual waveform of the audio, whilesample_rateprovides the number of samples per second.

Analyzing Audio Files

Once the audio file is loaded, you can perform various types of analysis. For example, you might want to calculate the tempo of the audio:

# Calculate tempo (beats per minute)

tempo, _ = librosa.beat.beat_track(y=audio_data, sr=sample_rate)

print(f"Tempo: {tempo} BPM")

- The

librosa.beat.beat_track()function estimates the tempo of the audio in beats per minute (BPM). - This is useful for music analysis, where identifying the tempo of an audio file can provide insights into its rhythm and structure.

Saving Processed Audio

If you modify or process the audio and need to save it back to a file, you can use the soundfile library, which works well with librosa:

pip install soundfile

import soundfile as sf

# Save the processed audio data

sf.write('output_audio.wav', audio_data, sample_rate)

This process ensures that the modified audio is stored in the desired format, allowing you to work efficiently with audio files in Python.

Summary of Image and Audio File Processing

Here’s a quick comparison of working with image and audio files in Python:

| Task | Image Files (Pillow) | Audio Files (librosa) |

|---|---|---|

| Open File | Image.open() | librosa.load() |

| Resize/Process File | image.resize() | librosa.effects.time_stretch() |

| Save File | image.save() | sf.write() |

| Supported Formats | JPEG, PNG, BMP, GIF, etc. | WAV, MP3 |

Practical Use Cases

Both image files and audio files are crucial in many real-world applications:

- Image Processing: Used in web development for managing website images, building machine learning models for image recognition, or creating data visualizations.

- Audio Processing: Useful for developing media players, processing podcasts, or analyzing music tracks for rhythm and melody detection.

By understanding how to process image and audio files in Python, you’ll have a solid foundation for building applications that require multimedia handling, enhancing your ability to work with various file formats in Python.

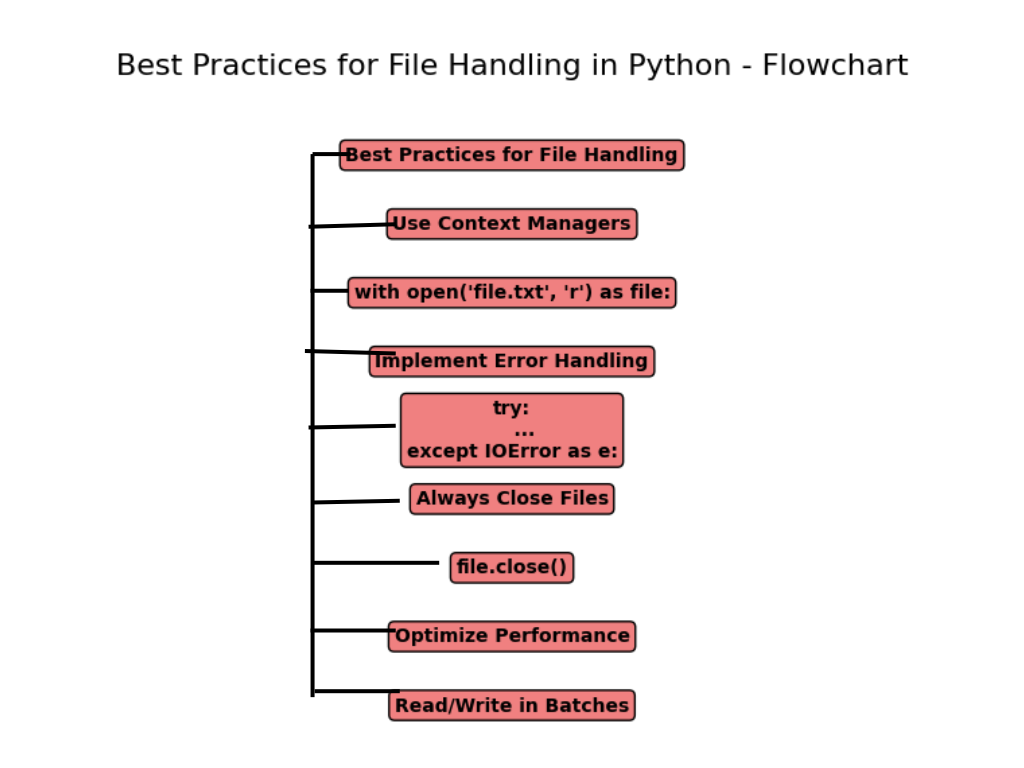

Best Practices for File Handling in Python

Working with files is a fundamental part of programming, and Python offers efficient tools to manage file operations. To optimize file handling and prevent common mistakes, adopting best practices is key. Below, we’ll explore how to optimize file handling in Python, offer tips for efficient file processing, and touch on crucial error-handling techniques.

Efficient File Processing Techniques

When working with file formats in Python, performance matters. Mismanagement of file resources can lead to memory leaks, performance lags, and unhandled errors. One effective way to optimize file handling in Python is through the use of the with statement.

Using with for File Operations

The with statement ensures that files are properly closed after their suite finishes executing, even if an error occurs. This technique eliminates the need to manually close files, making code cleaner and reducing memory-related issues.

Here’s a simple example:

# Efficiently reading a file using 'with' statement

with open('example.txt', 'r') as file:

content = file.read()

print(content)

- In this example, the

withstatement automatically handles the closing of the file after the block of code completes, ensuring proper resource management. - This practice reduces errors like trying to read from or write to an already-closed file, making file handling in Python safer and more efficient.

Avoiding Large File Load

When working with large files, loading everything into memory at once can be inefficient. Instead, processing files line by line reduces memory consumption and enhances performance:

# Reading a large file line by line

with open('large_file.txt', 'r') as file:

for line in file:

process_line(line)

This method works well when you’re dealing with large datasets, especially in file formats in Python that require frequent I/O operations. It allows your program to manage memory more effectively while processing each line.

Error Handling in File Operations

When handling files, errors are inevitable. Files may not exist, permissions may be denied, or read/write operations could fail. Having robust error handling mechanisms is vital in preventing program crashes.

Handling File Not Found Errors

A common error is trying to access a file that doesn’t exist. To tackle this, we use the try-except block to gracefully handle such situations:

try:

with open('non_existent_file.txt', 'r') as file:

content = file.read()

except FileNotFoundError:

print("File not found. Please check the file path.")

- The

FileNotFoundErrorexception catches the specific error if the file does not exist. - By using a

try-exceptblock, we prevent the program from crashing and offer the user an error message, which enhances the user experience.

Handling Permission Errors

Another common issue is lacking proper permissions to access a file. In such cases, handling PermissionError can prevent your code from breaking:

try:

with open('restricted_file.txt', 'r') as file:

content = file.read()

except PermissionError:

print("Permission denied. You do not have access to this file.")

Handling these errors allows for smoother file processing, making your code more resilient in various situations.

Latest Advancements in Python File Handling

Python continues to evolve, offering better tools and libraries for file management, particularly when handling complex or large datasets. In this section, we’ll explore latest Python libraries and discuss advancements in big data file processing.

Latest Libraries for File Formats

In recent years, new libraries and updates to existing ones have made it easier to handle complex file formats like Parquet or Avro, commonly used in big data applications. Some notable advancements include:

- Pyarrow: An Apache Arrow library that allows efficient reading and writing of large datasets in formats like Parquet.

- Fastavro: A fast and efficient library for working with Avro data, offering better performance over the traditional Avro library.

Here’s how you can use Pyarrow to handle Parquet files, which are often used in big data applications:

import pyarrow.parquet as pq

# Reading a Parquet file

table = pq.read_table('data.parquet')

print(table)

Pyarrow provides the tools necessary for working with modern file formats in file handling in Python, offering speed and memory efficiency.

Using Python for Big Data File Processing

As datasets grow larger, processing them efficiently becomes a challenge. Handling big data files in Python has become easier with libraries like Dask, which allows parallel computing and the handling of extremely large datasets that would not fit into memory at once.

Introducing Dask for Big Data

Dask is a powerful library for handling large data files, such as CSVs or Excel files, without loading the entire file into memory. It splits the data into smaller chunks and processes them in parallel, making it perfect for big data file formats in Python.

Here’s how you can use Dask to process a large CSV file:

import dask.dataframe as dd

# Reading a large CSV file

df = dd.read_csv('large_dataset.csv')

# Perform operations on the dataset

df_filtered = df[df['column_name'] > 1000]

By using Dask, your program can handle gigabytes or even terabytes of data efficiently, without the risk of crashing due to memory limitations.

Comparison: Dask vs. Pandas

| Library | Best Use Case | Memory Efficiency | Performance |

|---|---|---|---|

| Pandas | Small to medium-sized datasets | Loads entire file | Moderate |

| Dask | Large datasets that exceed memory capacity | Processes in chunks | High |

Conclusion

Choosing the right file format for your Python project can significantly impact both performance and ease of use. Depending on the nature of your project, some formats may be better suited than others. Below is a quick guide to help you choose the file format in Python that best fits your needs:

CSV – Best for Data Analysis

- Ideal for: Handling large datasets and performing data analysis.

- Libraries to Use: Pandas, Dask.

- Advantages: Easy to read, manipulate, and store tabular data. Well-suited for tasks like machine learning and data wrangling.

JSON – Best for Web Applications

- Ideal for: Storing and transmitting data in web applications or APIs.

- Libraries to Use:

jsonmodule, Pandas (for dataframes). - Advantages: Lightweight and human-readable. Works well with JavaScript and web frameworks.

Parquet – Best for Big Data

- Ideal for: Large datasets and big data processing.

- Libraries to Use: Pyarrow, Dask.

- Advantages: Highly efficient for reading and writing columnar data. Used in big data ecosystems like Hadoop and Spark.

XML – Best for Document Storage

- Ideal for: Document storage and data interchange between different systems.

- Libraries to Use:

xml.etree.ElementTree, lxml. - Advantages: Extensively used in web services and for document formatting (like RSS feeds).

Pickle – Best for Python Objects

- Ideal for: Serializing Python objects to disk.

- Libraries to Use:

pickle. - Advantages: Simple and native to Python, best for storing Python-specific data structures.

WAV or MP3 – Best for Audio Data

- Ideal for: Audio processing and storage.

- Libraries to Use: librosa, soundfile.

- Advantages: Well-established formats for handling sound data.

Conclusion Summary

- CSV: Best for handling structured data for analysis.

- JSON: Go-to for web apps and data exchange.

- Parquet: Perfect for managing large datasets in big data environments.

- XML: Best for structured document storage.

- Pickle: Ideal for saving Python objects directly.

- WAV/MP3: Top choices for audio file processing.

Ultimately, the best file format for your Python project depends on your specific use case. Whether you’re analyzing data, building a web app, or managing big data, Python’s flexible libraries allow you to work efficiently with various formats.

FAQ on Working with File Formats in Python

The Pandas library is highly recommended for working with CSV files in Python due to its powerful functions for reading, writing, and manipulating large datasets efficiently.

For handling large JSON files, consider using Python’s built-in json module with file chunking or use libraries like ijson to process files lazily, which helps reduce memory usage.

Yes, Python can handle binary files using the open() function with modes 'rb' (read binary) and 'wb' (write binary). These modes allow you to read and write raw binary data effectively.

External Resources

- File Input/Output: Python I/O

- This section covers file handling in Python, including reading and writing files, which serves as a fundamental resource for understanding file operations.

JSON Documentation

- Python JSON Module: Python json

- The official documentation for the JSON module in Python, which explains how to parse and serialize JSON data.

Python XML Processing Documentation

- ElementTree: xml.etree.ElementTree

- This documentation provides insights into how to read and write XML files using the ElementTree library.

OpenPyXL Documentation

- OpenPyXL: OpenPyXL Documentation

- A library for reading and writing Excel files (XLSX) in Python. This documentation is essential for working with Excel file formats.

Python Imaging Library (PIL)

- Pillow Documentation: Pillow (PIL Fork)

- The official documentation for Pillow, a library used for opening and processing image files in various formats.

Leave a Reply