Top Chunking Strategies to Boost Your RAG System Performance

Introduction

RAG stands for Retrieval-Augmented Generation. It’s a system that can search through large amounts of information, pick out relevant bits, and then generate meaningful responses based on what it finds. This makes it great for tasks like answering questions or summarizing complex information. One key technique to make these systems more effective is chunking strategies.

These strategies involve breaking large blocks of information into smaller, bite-sized pieces. Think of it like cutting a big loaf of bread into slices. It makes it easier to handle and consume. When information is in smaller chunks, RAG systems can find and understand it more easily, leading to better and faster results.

This blog is all about showing you the best chunking strategies and explaining when to use them. Whether you’re a beginner or experienced in working with AI systems, you’ll find tips and examples to help you get better results.

What is Chunking in RAG Systems?

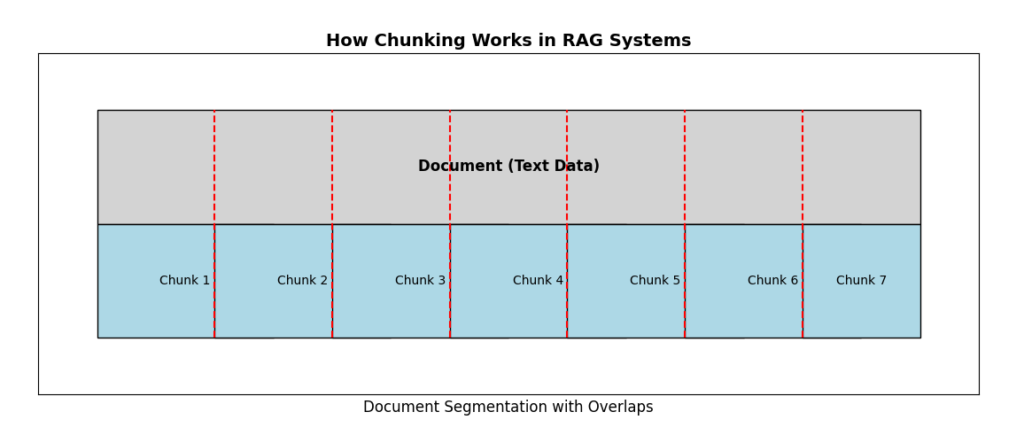

Chunking in RAG (Retrieval-Augmented Generation) systems refers to breaking down large pieces of text into smaller, meaningful sections or chunks. These systems work by retrieving relevant information from data sources and generating accurate responses. When information is neatly divided into chunks, the system can quickly find and process the right content.

How Chunking Works

Instead of feeding a whole book or a long document into the system, we chop it into smaller parts, like paragraphs or topic-specific sections. Each chunk becomes easier for the model to search through and use during retrieval.

Why Maintaining Contextual Integrity Matters

When we break down information, keeping the meaning and connections between chunks is crucial. Imagine reading a book chapter split into random sentences — it would be confusing! Poor chunking can cause the model to miss key details or misunderstand the context, leading to inaccurate answers.

Good chunking ensures that:

- Relevant information stays connected.

- The system doesn’t lose track of important relationships between ideas.

Challenges with Poorly Implemented Chunking

- Loss of context: If a chunk is too small, it may miss essential information, leading to incomplete answers.

- Irrelevant retrieval: Chunks that lack meaningful boundaries might confuse the system, causing it to pull out unnecessary data.

- Performance issues: Inefficient chunking can make searches slower and increase processing time.

Effective chunking ensures the RAG system stays accurate, fast, and context-aware, providing better responses every time.

Why Chunking Matters for RAG Performance

In RAG systems, chunking isn’t just about dividing text randomly. It directly affects how well the system finds information, responds to queries, and handles large amounts of data efficiently.

Improved Retrieval Accuracy

When information is chunked properly, the system has smaller, focused sections to search through. This makes it easier to find the most relevant details. For example, if you ask, “What are the benefits of a healthy diet?” the system can quickly pull the right chunk containing the answer instead of scanning through irrelevant sections.

Faster Response Times

Small, meaningful chunks mean the system doesn’t have to scan massive blocks of text for every query. When the system searches through compact chunks, it finds the answer more quickly. This makes real-time applications like virtual assistants more responsive and efficient.

Enhanced Memory Efficiency

RAG systems often have memory limits when processing data. Chunking ensures that each section of information stays within these limits. This reduces the risk of memory overload, allowing the system to work smoothly without slowing down or crashing.

Reduction of Information Loss

Poor chunking can cause important details to be separated or lost. Proper chunking maintains connections between related information, making sure that no key points are missed. This helps the system provide complete and accurate responses.

Must Read

- How to Check If a List Is Sorted in Python (Without Using sort()) – 5 Efficient Methods

- How Python Searches Data: Linear Search, Binary Search, and Hash Lookup Explained

- I Implemented Every Sorting Algorithm in Python — The Results Nobody Talks About (Benchmarked on CPython)

- How to Reverse a String in Python: Performance, Memory, and the Tokenizer Trap

- How to Check Palindrome in Python: 5 Efficient Methods (2026 Guide)

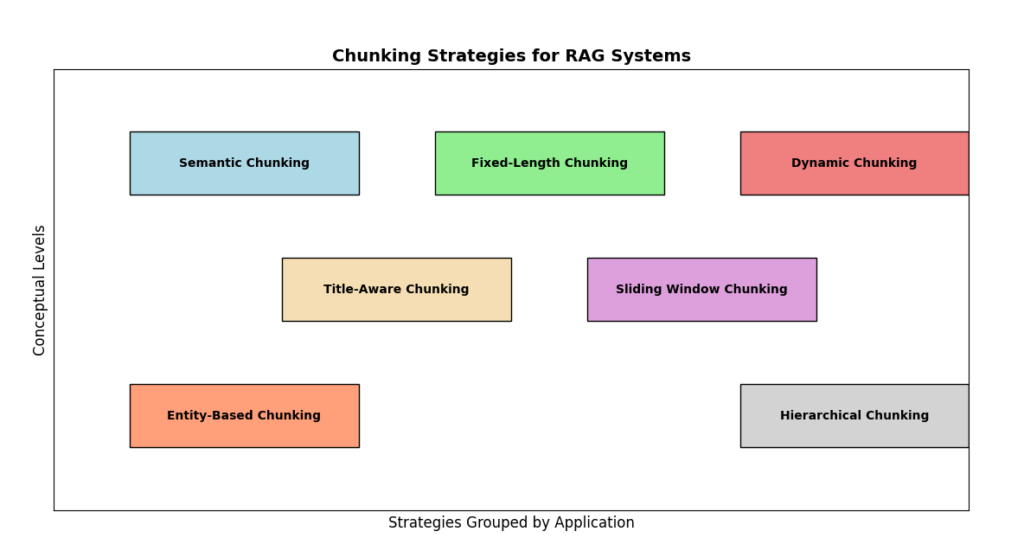

Best Chunking Strategies for RAG Systems

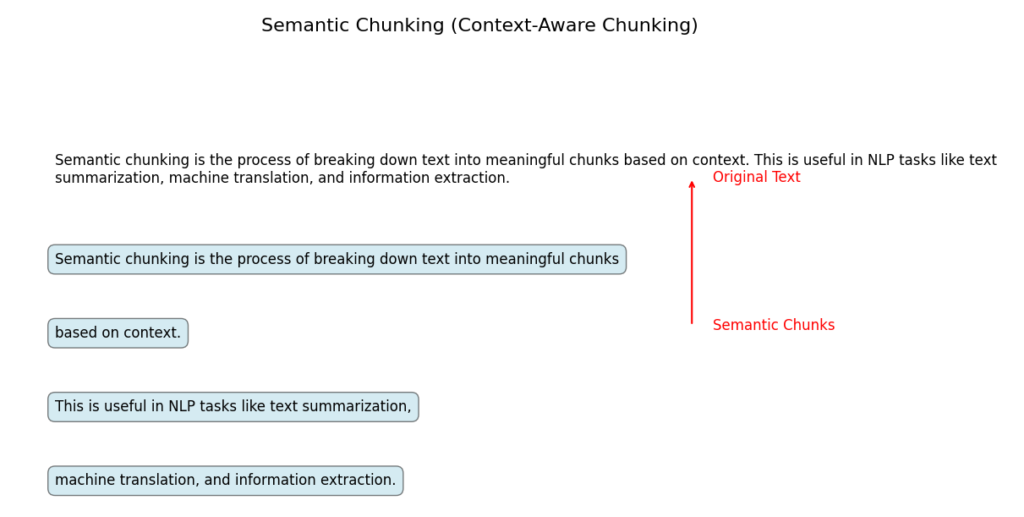

Semantic Chunking (Context-Aware Chunking)

Semantic chunking means breaking down text based on meaning instead of just dividing it by size or sentence count. It’s all about keeping related information together so that it makes sense when retrieved by a system.

How It Works

- The system looks at what the text is saying, not just how long it is.

- It finds points where a new idea or topic begins and creates chunks based on those boundaries.

- Think of it like grouping sentences that belong to the same story, so they don’t get mixed up.

Example to Understand

Let’s say you have a recipe:

- First, there are ingredients: “2 cups flour, 1 cup sugar…”

- Then, instructions: “Mix the flour and sugar, bake at 350°F…”

If you were dividing this text by size, “1 cup sugar” might end up in one chunk, and “Mix the flour and sugar” could be in another. This would make no sense.

Semantic chunking keeps related information together—ingredients in one chunk, steps in another. Now it makes sense when someone asks for either ingredients or instructions.

Why Use Semantic Chunking?

When AI systems look for answers, they need meaningful groups of information.

If the chunks are random or meaningless, the answers can be confusing or incomplete.

By keeping ideas intact, AI finds better and clearer answers.

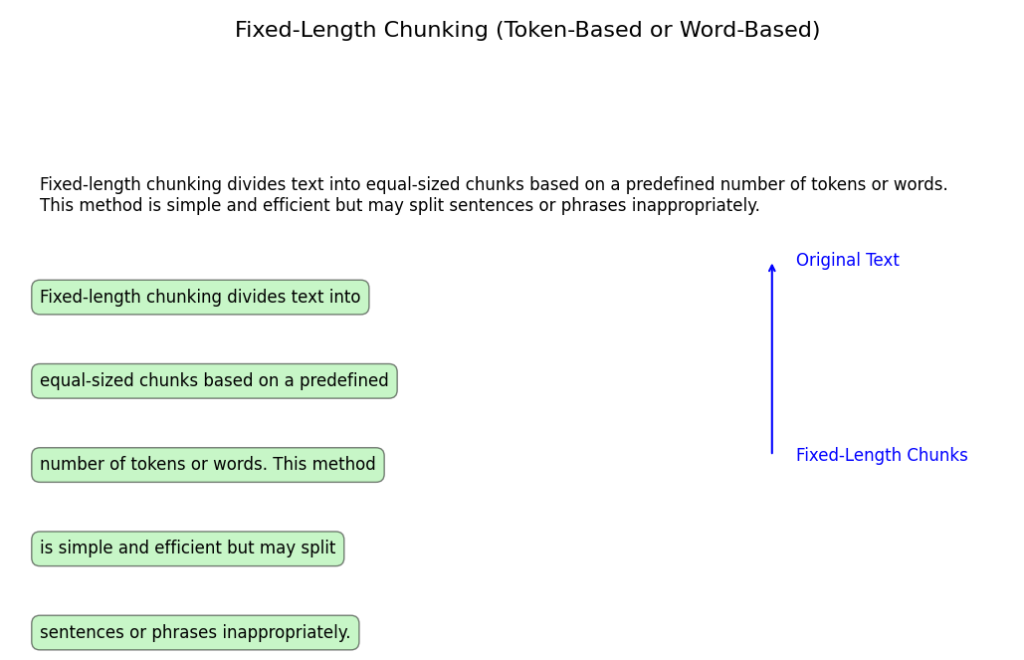

Fixed-Length Chunking (Token-Based or Word-Based)

Fixed-length chunking is a simple way to split text by counting either tokens or words. Each chunk contains the same number of words or tokens, regardless of where sentences or ideas naturally begin or end.

How It Works and Why It’s Simple

- Decide on a chunk size, for example, 100 words or 150 tokens (tokens are smaller units of text like parts of words).

- The system reads the text and splits it into equally sized chunks.

- If the text ends before reaching the chunk size, that chunk will just be shorter.

This method is simple because it doesn’t require analyzing the meaning or structure of the text. You just count words or tokens and split.

Use Case: Structured Datasets with Predictable Content Sizes

Fixed-length chunking works well for datasets that follow a fixed pattern or structure, where sentences are short and consistent.

For example:

- Data tables: customer records, transaction logs, or user reviews.

- Code files: where instructions are usually self-contained in small blocks.

Benefits

- Easy to Implement: No need for complex processing—just set the chunk size and split.

- Memory Efficient: Fixed-sized chunks help manage memory usage better since the system knows exactly how much text is in each chunk.

- Faster Processing: AI systems work faster when handling predictable-sized chunks because they don’t have to process varying sizes.

This technique is a simple and efficient solution when the data doesn’t need complex meaning-based segmentation.

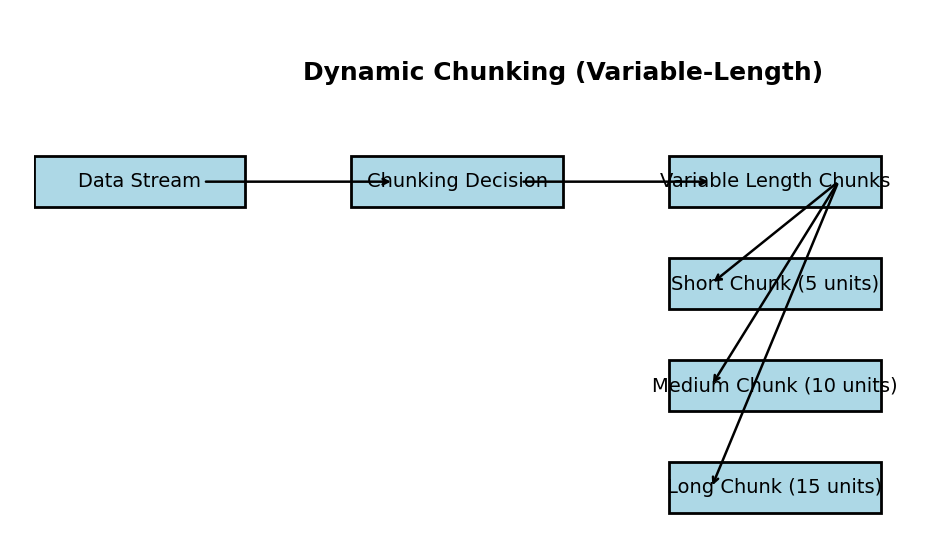

Dynamic Chunking (Variable-Length)

Dynamic chunking means splitting text into chunks where the size varies based on the content. Instead of using fixed rules, it adapts to how the text naturally flows. This approach ensures that related information stays together in the same chunk.

How It Works and Dynamic Adaptation

- The system reads and analyzes the content to find logical breaks, like topic shifts or new sections.

- Chunks are created where the content naturally separates, ensuring the text is grouped meaningfully.

- The size of each chunk can be different, depending on how much related information it contains.

For example:

- A detailed paragraph about a single topic may be one chunk.

- Several smaller, related points might form another chunk.

Use Case: Mixed-Content Documents with Topic Variations

Dynamic chunking is ideal for documents that contain different types of content or varying topics, such as:

- Research papers: where one section might have a long explanation and another just a short summary.

- News articles: where the introduction, body, and conclusion naturally vary in length.

- Business reports: with a mix of graphs, summaries, and detailed analysis.

Benefits of Dynamic Chunking

- Better Context Preservation: Since chunks are created based on meaning, related information stays together.

- Improved AI Performance: Systems can provide more accurate answers because they get meaningful, coherent chunks.

- More Flexible Retrieval: Different types of content are handled naturally without being forced into the same size chunks.

Dynamic chunking helps when text content varies, ensuring clearer and better-organized information retrieval.

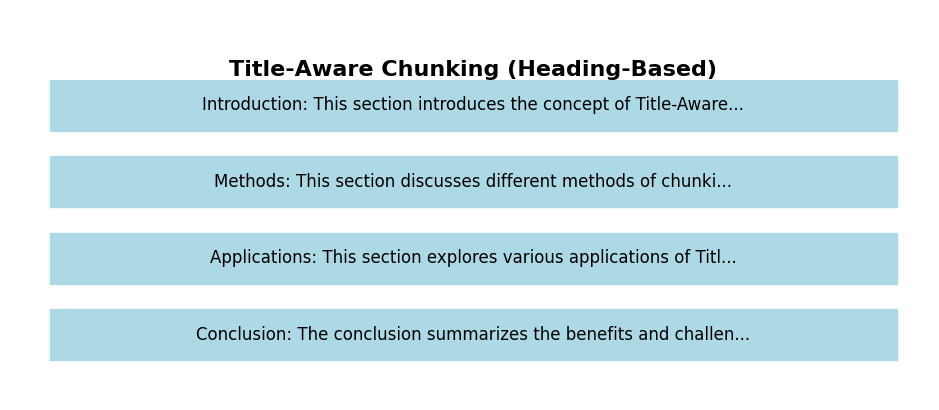

Title-Aware Chunking (Heading-Based)

Title-aware chunking involves splitting text based on section titles or headings. It uses the natural structure of documents to create meaningful content segments, making information retrieval more accurate and organized.

Step-by-Step Process

- Detect Headings:

The system scans for section titles like headings in articles or chapters in books (e.g., “Chapter 1: Introduction” or “Step 3: Configuration”). - Segment Content:

Text beneath each heading is grouped into a single chunk. This keeps related information in one place. - Include Heading as Context:

The heading is stored with the chunk, acting as a label or identifier for that content. - Store Chunks for Retrieval:

The system saves these structured chunks in its database, ready for efficient searching when users have queries.

Example

Consider a user manual:

Heading: “How to Connect Your Device”

- Instructions on connecting the device.

Next Heading: “Troubleshooting Connection Issues”

- Details on fixing common problems.

Each section becomes a separate, labeled chunk based on its title.

Use Case: Manuals, Guides, and Structured Documents

This method is ideal for content with well-defined sections, such as:

- User manuals: Different sections for setup, troubleshooting, and maintenance.

- Instruction guides: Clearly outlined steps for completing a task.

- Training materials: Lessons or modules with separate topics.

Benefits of Title-Aware Chunking

- Precise Response Generation: AI systems can retrieve the correct section directly when users ask questions.

- Efficient Information Organization: Content is neatly divided by topic, reducing clutter and confusion.

- Better Context Clarity: The title provides instant context, making the system’s responses more relevant and user-friendly.

This approach makes structured content easier to search and more efficient to navigate.

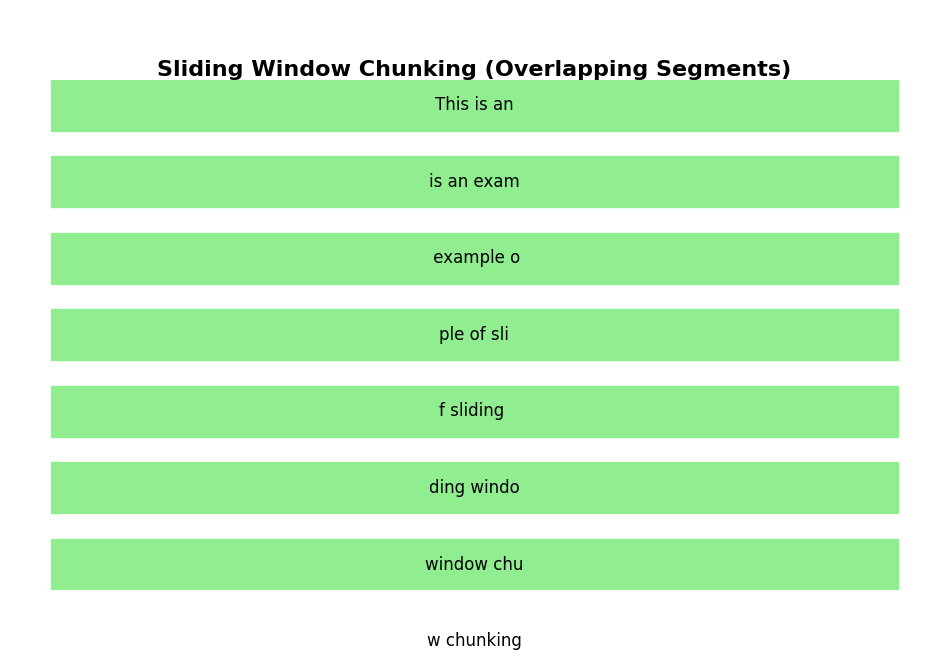

What Is Sliding Window Chunking (Overlapping Segments)?

This is a method of breaking large text into smaller overlapping parts.

Why overlapping? So that when the system processes the text, it doesn’t miss important information between segments.

How It Works (Super Simple Example)

Let’s say you have this sentence:

“The cat jumped over the fence and chased the dog across the park.”

If we split this without overlap:

- Chunk 1: “The cat jumped over the fence”

- Chunk 2: “and chased the dog across the park”

Now, notice that Chunk 1 and Chunk 2 don’t connect well. If the AI only sees one chunk, it might not understand the full meaning.

Sliding window solves this by sharing some words between chunks:

- Chunk 1: “The cat jumped over the fence”

- Chunk 2: “over the fence and chased the dog”

See how the second chunk repeats “over the fence” from the first one? This overlap helps keep the meaning clear.

Why It’s Useful

It works great for long documents where context flows between sections:

- Stories: Ensures smooth reading without losing key details.

- Research papers: Keeps related information together.

Benefits

- Keeps Context: No missing pieces when moving between chunks.

- More Accurate Responses: AI can make better sense of overlapping text.

- Prevents Information Loss: Helps the system “remember” important parts.

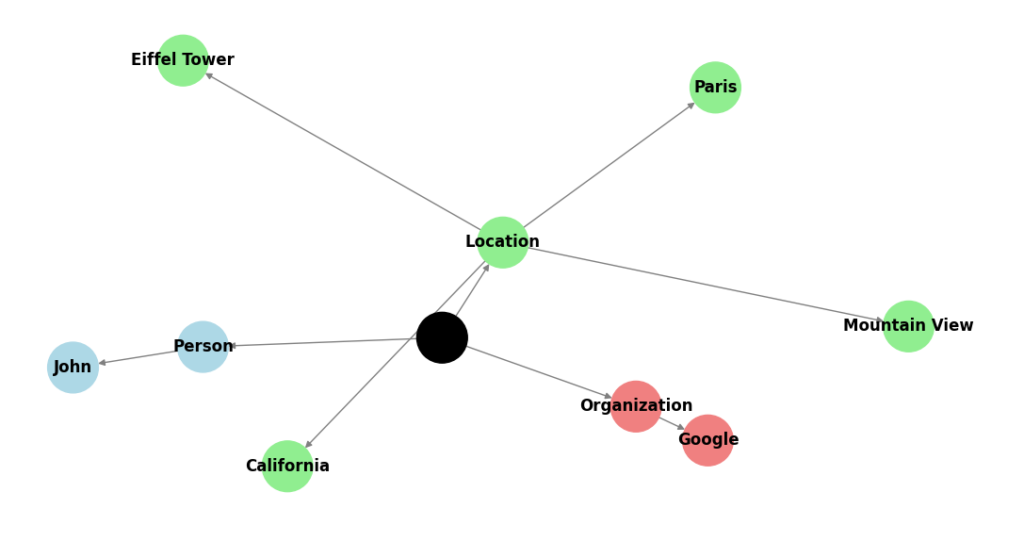

Entity-Based Chunking

Entity-based chunking focuses on breaking down text around specific important entities, such as names, dates, locations, or specialized terms. This technique ensures that information connected to these key entities stays together.

How It Works

- Identify Entities: The system scans the text to find important entities.

For example:- In medical documents: entities could be disease names or medications.

- Legal documents: they might be case numbers, contract clauses, or parties involved.

- In customer support logs: they could be product names or service categories.

- Group Text Around Entities:

The system chunks the text by grouping related sentences that mention or explain these entities.

Simple Example

Text:

“Patient John Doe was prescribed Aspirin 100mg. He reported severe headaches starting on January 3rd, 2023.”

Chunks:

- Entity 1 (John Doe): “Patient John Doe was prescribed Aspirin 100mg.”

- Entity 2 (January 3rd, 2023): “He reported severe headaches starting on January 3rd, 2023.”

By organizing content around entities, the system maintains more meaningful connections between facts.

Use Case: Medical, Legal, or Customer Support Documents

- Medical Records: Ensure that patient history and medications stay in context.

- Legal Documents: Keep contract clauses or case details together for easier retrieval.

- Customer Support Logs: Maintain details about specific customer issues and related resolutions.

Benefits

- Domain-Specific Precision: Keeps the most relevant information intact for specialized fields.

- Efficient Information Retrieval: AI can find and retrieve chunks based on key entities faster.

- Context Preservation: Reduces the risk of separating related information, making responses more accurate.

This approach ensures that critical information isn’t fragmented and stays connected to key topics.

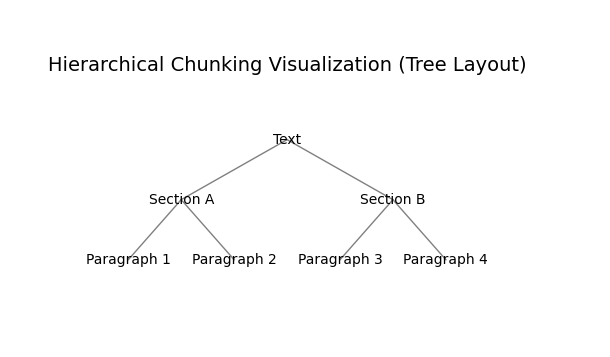

Hierarchical Chunking (Multi-Level Segmentation)

Hierarchical chunking is a method where text is divided into chunks at multiple levels, like creating layers in an outline. This approach is useful for large datasets, such as books or research archives, where information needs to be organized efficiently.

How It Works

- Top-Level Segmentation:

The text is first divided into broad sections, such as chapters in a book or major topics in a research archive. - Mid-Level Segmentation:

Each top-level segment is broken down into smaller parts, such as sections or subtopics. - Fine-Level Segmentation:

The system further divides the text into detailed chunks, like individual paragraphs or sentences.

This layered structure helps maintain the connection between related information while allowing more targeted retrieval.

Simple Example

Let’s take the example of a book:

- Level 1 (Top-Level): Chapter 1 – Introduction to AI

- 2nd Level (Mid-Level): Section 1.1 – History of AI

- Level 3 (Fine-Level): Paragraphs explaining early AI developments

Each level provides a logical breakdown, making it easier for AI models to search and retrieve information accurately.

Use Case: Large Datasets Like Books or Research Archives

- Books: Helps AI understand the structure and retrieve specific content, such as summaries or key insights.

- Research Archives: Allows researchers to quickly access relevant sections without sifting through the entire dataset.

Benefits

- Balances Granularity with Efficiency: Organizes information at multiple levels to avoid overwhelming the system while maintaining detailed context.

- Faster Information Retrieval: Multi-level segmentation makes it easier to pinpoint specific content.

- Improved Context Preservation: Maintains relationships between related chunks across different levels.

Hierarchical chunking offers a well-structured and scalable solution for managing and retrieving large volumes of information effectively.

How to Choose the Right Chunking Strategy for Your RAG System

Selecting the best chunking strategy depends on three key factors:

- Content Structure:

Is your content simple or complex? Structured datasets (like spreadsheets) may only need fixed-length chunking. Complex documents (like research papers) benefit from hierarchical or title-aware chunking. - Query Complexity:

Are users looking for precise answers or broader context? If they need exact details, entity-based or title-aware chunking works best. For broader insights, sliding window chunking can be a better fit. - Performance Goals:

Are speed and memory efficiency a priority? Simple strategies like fixed-length chunking excel at this, while hierarchical or dynamic chunking may trade some speed for better context retention.

Comparative Table: Chunking Strategies

| Chunking Strategy | Best For | Benefits | Best-Fit Scenarios |

|---|---|---|---|

| Fixed-Length (Token-Based) | Structured datasets | Easy to implement, memory-efficient | Spreadsheets, predictable content sizes |

| Dynamic (Variable-Length) | Mixed-content documents | Better context preservation | Blogs, varied-length documents |

| Title-Aware (Heading-Based) | Structured documents | Precise response generation | Manuals, guides, technical documents |

| Sliding Window (Overlap) | Long continuous content | Minimizes information loss | E-books, long-form research papers |

| Entity-Based Chunking | Domain-specific text | Domain-specific precision | Medical, legal, customer support logs |

| Hierarchical Chunking | Large datasets | Balances granularity with efficiency | Books, research archives |

Choosing the Right Strategy

There’s no one-size-fits-all solution. You may even combine multiple strategies to meet your specific needs. Start by analyzing your content structure and user requirements—that will guide you to the right strategy for your RAG system.

Best Tools and Frameworks for Chunking

When it comes to optimizing chunking in Retrieval-Augmented Generation (RAG) systems, using the right tools and frameworks can make a significant difference. Let’s explore some top options that can help you efficiently segment text and maintain contextual accuracy.

1. LangChain for Intelligent Chunking

What is it?

LangChain is a powerful framework designed for building applications around language models. It excels at handling text segmentation and retrieval tasks.

Why Use LangChain?

- Automates chunking and retrieval processes.

- Supports dynamic chunking based on content characteristics.

- Offers customizable text-processing pipelines.

How to Get Started:

pip install langchain

Sample Use:

from langchain.text_splitter import RecursiveCharacterTextSplitter

text = "Your long document text here..."

splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=100)

chunks = splitter.split_text(text)

print(chunks)

2. Hugging Face Transformers

What is it?

Hugging Face is a widely-used library for working with state-of-the-art language models like BERT and GPT.

Why Use Hugging Face for Chunking?

- Excellent for token-based chunking using pre-trained models.

- Provides tools for sequence length management to prevent token overflow.

- Can be combined with other chunking strategies for better performance.

How to Install:

pip install transformers

Sample Use for Token Chunking:

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased")

text = "Your long document text here..."

tokens = tokenizer(text, return_tensors="pt", truncation=True, max_length=512)

print(tokens)

3. OpenAI APIs for Context-Aware Chunking

What is it?

OpenAI APIs, including models like GPT, are excellent for dynamically chunking text while preserving context.

Why Use OpenAI APIs?

- Automatically handle large text inputs and break them down intelligently.

- Supports fine-tuning for specific chunking strategies.

- Can be integrated with custom preprocessing pipelines.

How to Use:

import openai

openai.api_key = "your-api-key"

text = "Your long document text here..."

response = openai.ChatCompletion.create(

model="gpt-4",

messages=[{"role": "user", "content": text}]

)

print(response.choices[0].message.content)

Choosing the Right Tool

Each tool has its own strengths. If you’re building a comprehensive RAG system, LangChain is great for intelligent chunking, while Hugging Face works well for token-based strategies. For context-aware dynamic chunking, OpenAI APIs offer unmatched flexibility.

Practical Tips for Implementing Chunking in RAG Systems

Effective chunking can significantly boost the performance of Retrieval-Augmented Generation systems. Below are actionable tips to help you define chunk sizes, manage overlapping chunks, and refine your strategy through testing and iteration.

1. Guidelines for Defining Chunk Sizes

Choosing the right chunk size is essential for preserving context while maintaining efficient memory usage.

- Consider Token Limits: Language models like GPT have token limits (e.g., GPT-4 maxes out at 8k or 32k tokens). Aim for chunk sizes that fit well within these limits, accounting for prompt and response lengths.

- Content Complexity: For simple, structured content (like FAQs), smaller chunks (500–800 tokens) are effective. For complex documents (like research papers), opt for larger chunks (1,000–2,000 tokens) to retain context.

- Manual Sampling: Test chunks of different sizes manually to see if they capture the full meaning without unnecessary truncation.

2. Handling Overlapping Chunks Effectively

Overlapping chunks help maintain contextual continuity between segments. However, excessive overlap can waste memory and computation.

Tips for Effective Overlap:

- Balance Overlap Size: Aim for overlaps of 10%–20% of the chunk length to avoid information gaps without significant redundancy.

- Sliding Window Technique: Implement a sliding window approach where each chunk overlaps slightly with the previous one.

Sample Code for Overlapping Chunking:

def chunk_with_overlap(text, chunk_size, overlap_size):

chunks = []

for i in range(0, len(text), chunk_size - overlap_size):

chunks.append(text[i:i + chunk_size])

return chunks

text = "Your long document text here..."

chunks = chunk_with_overlap(text, chunk_size=500, overlap_size=100)

print(chunks)

3. Testing and Iterating on Chunking Strategies

The best chunking strategy often requires testing and refinement.

- Initial Testing: Start with fixed-length or simple dynamic chunking. Evaluate retrieval accuracy and model response quality.

- User Feedback: If your system is user-facing, gather feedback on response relevance and completeness.

- Performance Metrics: Track metrics like retrieval accuracy, response latency, and memory usage.

- A/B Testing: Test multiple chunking strategies simultaneously to determine which one yields the best results.

- Fine-Tuning: Continuously refine chunk sizes and overlaps based on your findings.

By following these practical tips, you’ll be able to build a more efficient and context-aware RAG system that delivers better results for users.

Conclusion

Chunking is a powerful way to break down large amounts of text for better performance in RAG (Retrieval-Augmented Generation) systems. Choosing the right method for your content, whether it’s fixed-length, dynamic, title-aware, or hierarchical chunking, can make your system smarter and more efficient.

When you implement the best chunking strategy, your system will:

- Retrieve information faster

- Provide accurate and context-aware answers

- Reduce memory usage and improve overall performance

By using tools like LangChain, Hugging Face, and OpenAI APIs and applying practical tips for testing and refining your chunking strategy, you’ll create a system that delivers better results.

Want to learn more about making your RAG systems even smarter? Visit EmitechLogic for more expert tips and guides!

FAQs

1. What is chunking in RAG systems?

2. Why is chunking important for RAG performance?

3. What are some popular chunking strategies?

4. Which tools can help with chunking?

External Resources

LangChain Documentation – LangChain

Explore how LangChain can be used for intelligent chunking and efficient retrieval-based AI applications.

Hugging Face Transformers – Hugging Face

Learn about powerful NLP models and how to manage chunked inputs for better performance.

Efficient Information Retrieval in NLP – Medium Article

A detailed article discussing techniques for chunking and optimizing information retrieval in AI models.

Understanding RAG Systems by Cohere – Cohere Blog

A beginner-friendly guide to understanding Retrieval-Augmented Generation and its practical use cases.

Leave a Reply