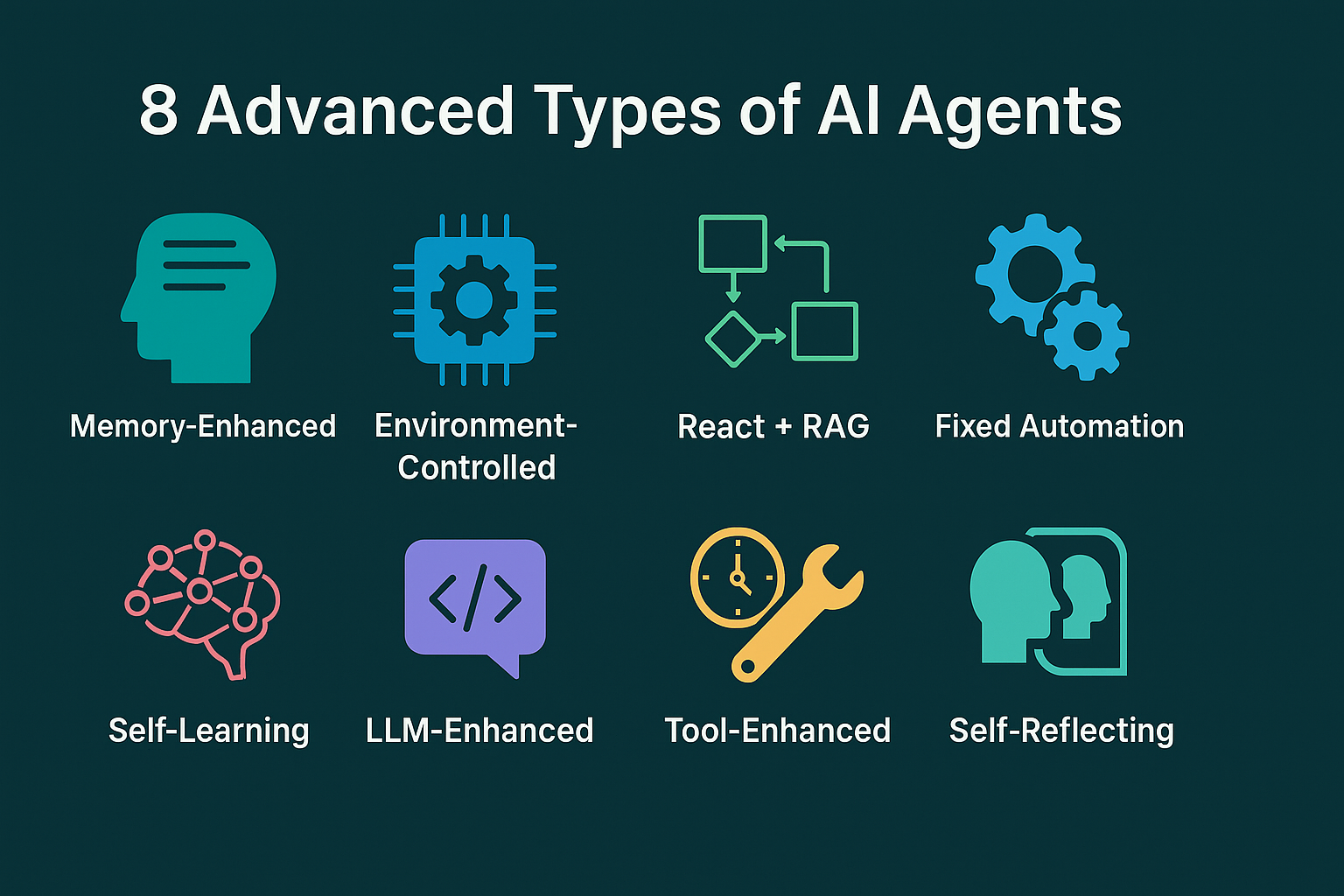

8 Advanced Types of AI Agents You Should Know About

What is an AI Agent?

An AI Agent is a software system that uses artificial intelligence to get input from the user or environment and then act autonomously to achieve a specific goal on behalf of the user or system.

It is like a smart assistant that watches what’s happening around it and then does something useful based on what it sees.

What Does It Need to Work?

Every AI agent has:

- Perception: It senses its environment. (E.g., reading a user message or detecting a face.)

- Decision-making: It chooses an action. (E.g., give a reply or move forward.)

- Action: It does something. (E.g., answers a question or turns a wheel.)

Some agents also learn from experience, which makes them smarter over time.
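The three parts listed above can be sketched as three small functions chained together. This is only an illustration: the function names and the canned responses are made up for this example and are not part of any library.

```python
def perceive(message: str) -> str:
    """Perception: clean up the raw input so it is easier to reason about."""
    return message.strip().lower()


def decide(observation: str) -> str:
    """Decision-making: pick an action name based on what was observed."""
    if "hello" in observation:
        return "greet"
    if observation.endswith("?"):
        return "answer"
    return "ignore"


def act(action: str) -> str:
    """Action: turn the chosen action into an output."""
    responses = {
        "greet": "Hi there!",
        "answer": "Let me look into that.",
        "ignore": "",
    }
    return responses[action]


def run_agent(message: str) -> str:
    # One pass through the perceive -> decide -> act loop
    return act(decide(perceive(message)))


print(run_agent("  Hello agent "))
print(run_agent("What is an AI agent?"))
```

Every agent type in this article fills in these three steps differently; the more advanced ones also add memory, tools, or learning on top.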

Now let’s explore 8 advanced types of AI agents.

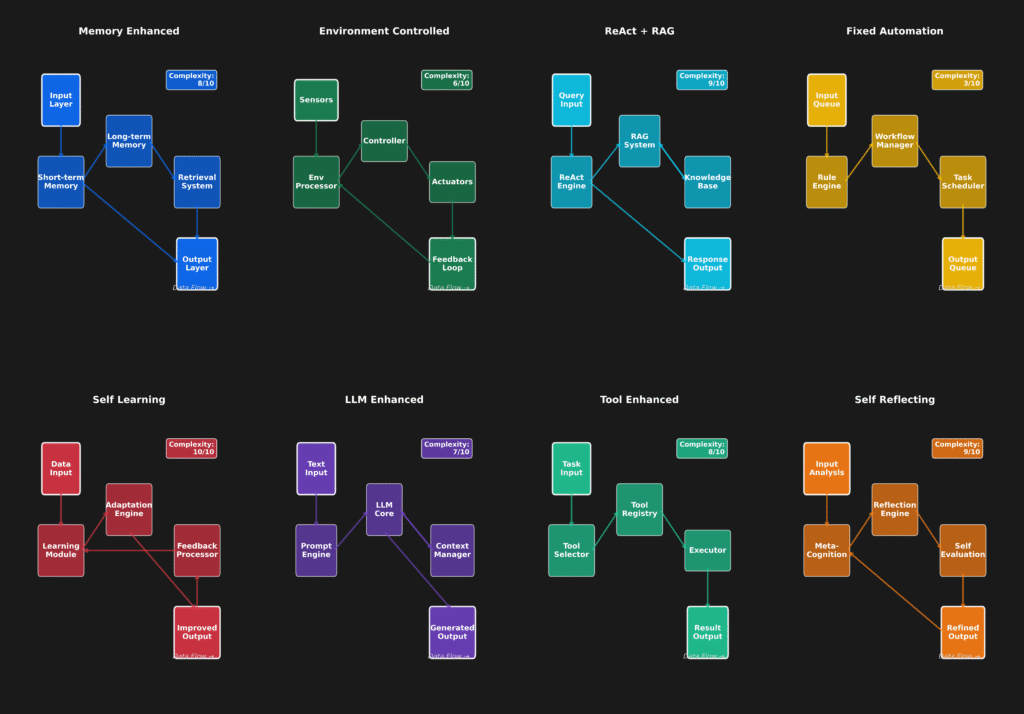

8 Advanced Types of AI Agents

1. Fixed Automation Agent

Let’s say you’re setting up an AI agent in a factory that checks the size of metal rods. Now, instead of training a model or adding some intelligent behavior, you just give it a fixed set of steps:

- Measure the length.

- If it’s not exactly 100 cm, reject it.

- If it is, pass it on to the next machine.

That’s it. No learning. No adapting. Just strict rules.

It’ll do that even if the lights go out and come back on, even if 1,000 perfect rods have already passed—it won’t change behavior. It won’t say “Hey, maybe I should double-check rod #1001” — because it’s not built to think. It’s built to follow a predefined workflow.

Honestly, it’s like writing a really neat Python script that always runs the same way every time you execute it. You could call it smart because it’s faster than a human at repetitive tasks. But it’s also very dumb in the sense that it can’t adjust if something unexpected happens.

So when we say these agents don’t adapt — we really mean it. Even if the environment changes, they’ll behave as if everything is the same.
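The rod-checking rule described above can be sketched in a few lines. The names `TARGET_LENGTH_CM` and `inspect_rod` are assumptions for this example, and the sample lengths are invented.

```python
TARGET_LENGTH_CM = 100.0  # the fixed rule from the example: exactly 100 cm


def inspect_rod(length_cm: float) -> str:
    """Apply the fixed rule: exactly 100 cm passes, anything else is rejected."""
    if length_cm == TARGET_LENGTH_CM:
        return "pass"
    return "reject"


# The agent gives the same verdict for the same input, every single time
for length in [100.0, 99.9, 100.0, 101.2]:
    print(length, "->", inspect_rod(length))
```

A real inspection station would probably allow a small tolerance instead of exact equality, but the point stays the same: the rule never changes unless a person edits it.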

Now, why are they still useful? Because in places like manufacturing or assembly lines, you don’t want the system to “think.” You want perfect repeatability. Any kind of smart guesswork or change in logic could introduce errors, and that’s expensive.

So while it’s tempting to always aim for learning agents or something more flexible, fixed automation agents have their place — where reliability is more important than intelligence.

Technical Implementation

Implementation typically involves:

- State machines with predefined transitions

- Rule-based systems with hardcoded decision trees

- Sequential workflows with fixed, predefined branches

- Direct mapping between inputs and outputs

- No memory or learning mechanisms

- Error handling through predetermined fallback procedures

Key Advantages

- Completely predictable behavior

- High reliability and consistency

- Low computational requirements

- Easy to debug and maintain

- Perfect for repetitive tasks

- No unexpected behavior

Limitations

- Cannot adapt to new situations

- Brittle when facing unexpected inputs

- Requires manual updates for changes

- Limited problem-solving capabilities

- No learning from experience

- Cannot handle edge cases not programmed

Real-World Applications

- Assembly line robotic arms

- Automatic door systems

- Basic chatbot responses

- Automated email responders

- Traffic light controllers

- Vending machine operations

Here’s a simple Python script that simulates a fixed automation agent. This agent watches a folder for incoming files, checks if the filename ends with .pdf, and then moves it to a processed/ folder. If the file doesn’t match the rule, it moves it to a rejected/ folder.

Python Code: Fixed Automation Agent

Folder Setup:

Make sure you have these folders in the same directory as the script:

incoming/

processed/

rejected/

You can simulate the agent by placing test files into the incoming/ folder.

```python
import os
import shutil
import time

# Define the folders
INCOMING_FOLDER = 'incoming'
PROCESSED_FOLDER = 'processed'
REJECTED_FOLDER = 'rejected'

# Fixed rule: Only accept PDF files
def is_valid_file(filename):
    return filename.lower().endswith('.pdf')

# Fixed Automation Agent Logic
def fixed_automation_agent():
    print("Fixed Automation Agent Started. Monitoring incoming files...")
    while True:
        # List files in the incoming folder
        files = os.listdir(INCOMING_FOLDER)
        for file in files:
            src_path = os.path.join(INCOMING_FOLDER, file)
            if os.path.isfile(src_path):
                if is_valid_file(file):
                    dest_path = os.path.join(PROCESSED_FOLDER, file)
                    shutil.move(src_path, dest_path)
                    print(f"Moved valid file: {file} → {PROCESSED_FOLDER}")
                else:
                    dest_path = os.path.join(REJECTED_FOLDER, file)
                    shutil.move(src_path, dest_path)
                    print(f"Rejected file: {file} → {REJECTED_FOLDER}")
        # Wait for 5 seconds before checking again
        time.sleep(5)

# Run the agent
if __name__ == "__main__":
    fixed_automation_agent()
```

How It Works:

- Places .pdf files into processed/

- Moves all other files to rejected/

- Repeats this check every 5 seconds, forever

This Is a Fixed Automation Agent Because:

- It uses a hardcoded rule (endswith('.pdf'))

- It doesn’t learn or adapt

- It behaves exactly the same way each time

2. Environment-Controlled Agent

When we say environment-controlled agent, we’re talking about an agent that can take smart actions, but only inside a known setup.

Let’s use a practical situation.

Say you’re designing a smart air conditioner. It doesn’t just turn on and off like a basic device. Instead, it:

- Reads room temperature,

- Checks humidity,

- Looks at how many people are inside (maybe via a motion sensor),

- And based on all that, adjusts the fan speed or cooling level.

Now here’s the key — this AI agent understands the environment, but that environment is controlled. It doesn’t have to deal with outside noise like weather forecasts or stock prices. It doesn’t learn new behaviors beyond what you’ve allowed. But within the room, it makes decisions on its own.

So what makes it different from fixed automation?

If this were fixed automation, it would do this:

```python
if temperature > 25:
    turn_on_cooling()
```

That’s it. Every time. No variation.

But an environment-controlled agent might say:

```python
if temperature > 25 and humidity < 60 and people_in_room > 1:
    turn_on_cooling(fan_speed="high")
```

So it’s not learning like a human, but it’s responding based on rules inside a defined world. That world is its environment — and it knows how to operate smartly within that space.

Let’s make this real with a question:

Suppose we build a robot for a greenhouse. It waters plants based on soil moisture, sunlight, and time of day. But it doesn’t know about traffic, holidays, or what’s happening outside the greenhouse. Would you say that’s an environment-controlled agent?

If your answer is yes, then you’re getting it.

Let’s build a simple environment-controlled agent in Python — one that simulates a greenhouse assistant.

Goal:

We’ll create an agent that decides whether to water the plants based on:

- Soil moisture

- Sunlight level

- Time of day

And remember: it only operates within the greenhouse, so it won’t react to anything beyond this environment.

Step-by-Step Behavior

Let’s define the environment and then how the agent reacts inside it.

Environment Variables:

- Soil moisture (moisture)

- Sunlight level (sunlight)

- Time of day (hour)

Rules for Decision:

- If moisture is below 30%, and

- Sunlight is not intense (to avoid burning wet leaves), and

- Time is morning or evening

Then water the plants.

Python Code: Environment-Controlled Agent

```python
import random

# Environment simulation
def get_environment_state():
    # Simulate sensor readings
    moisture = random.randint(10, 100)  # Soil moisture in percentage
    sunlight = random.choice(["low", "medium", "high"])
    hour = random.randint(0, 23)  # 24-hour format
    return {
        "moisture": moisture,
        "sunlight": sunlight,
        "hour": hour
    }

# Agent decision logic
def should_water_plants(env):
    moisture = env["moisture"]
    sunlight = env["sunlight"]
    hour = env["hour"]

    # Decision boundaries
    if moisture < 30 and sunlight != "high" and (6 <= hour <= 9 or 17 <= hour <= 19):
        return True
    return False

# Agent simulation
def greenhouse_agent():
    env = get_environment_state()
    print(f"Environment: {env}")
    if should_water_plants(env):
        print("✅ Action: Water the plants.")
    else:
        print("❌ Action: Do not water the plants.")

# Run the simulation
for _ in range(5):
    greenhouse_agent()
    print("-" * 40)
```

What’s happening?

- The environment is controlled: moisture, sunlight, and time.

- The agent doesn’t learn, but it makes smart decisions within this space.

- This is not just fixed automation—it checks multiple variables before deciding.

Technical Implementation

Key components include:

- Environmental state monitoring systems

- Constraint-based decision making

- Feedback loops for optimization

- Safety protocols and boundary enforcement

- Rule-based adaptation within limits

- Sensor integration for environmental awareness

- Optimization algorithms for efficiency
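Two of the components above, feedback loops and boundary enforcement, can be shown in a small sketch. The fan-speed limits, the `gain` value, and the function names are assumptions chosen for illustration; they do not come from any real thermostat API.

```python
MIN_FAN, MAX_FAN = 0, 100  # hypothetical fan-speed limits, in percent


def clamp(value: float, low: float, high: float) -> float:
    """Boundary enforcement: never return a value outside [low, high]."""
    return max(low, min(high, value))


def next_fan_speed(current_temp: float, target_temp: float, gain: float = 20.0) -> float:
    """Feedback step: fan speed grows with how far the room is above target."""
    error = current_temp - target_temp
    return clamp(gain * error, MIN_FAN, MAX_FAN)


for temp in [24.0, 26.0, 28.0, 35.0]:
    print(temp, "->", next_fan_speed(temp, target_temp=25.0))
```

The agent reacts to the measured temperature on every call, yet it can never push the fan past the limits set for its environment.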

Key Advantages

- Optimal performance within constraints

- Safe and predictable operation

- Can adapt to environmental changes

- Efficient resource utilization

- Reduced complexity through boundaries

- Robust error handling within scope

Limitations

- Cannot operate outside defined environment

- Limited creativity in problem-solving

- Requires environment to remain stable

- May struggle with unexpected constraints

- Performance depends on environment design

- Cannot transfer learning to new environments

Real-World Applications

- Smart thermostats adjusting temperature

- Elevator control systems

- Automated parking systems

- Smart irrigation controllers

- HVAC optimization systems

- Warehouse management robots

3. Memory-Enhanced AI Agent

Now, I’ll walk you through how a memory-enhanced AI agent actually works, step by step.

So here’s the scene:

You’re chatting with an AI agent, right?

At first, it’s just listening. You tell it:

“My name is Emmimal Alexander, and I’m building a blog on AI agents for my website EmiTechLogic.”

Now, here’s the magic part:

A memory-enhanced agent doesn’t just respond and forget. It says:

“Okay, Emmimal — noted. You’re writing a blog about AI agents for your website EmiTechLogic.”

Then the next time you speak to it — maybe a day later — you ask:

“Hey, can you help me write the next section?”

A basic agent would say:

“Sure. What are you working on?”

But a memory-enhanced agent responds:

“Of course, Emmimal! Last time you said you were writing about AI agents — want to continue from there?”

See the difference?

So how does it actually work?

Let’s break it down like this:

- The agent receives your input. It stores not just what you said, but also who you are, what you’re working on, and your preferences.

- It saves the info in a memory store. This could be:
  - A file
  - A database
  - A vector store, like the ones used in RAG systems

- Next time you talk, it checks that memory before replying. Based on that memory, it gives smarter, more context-aware answers.

It’s like a smart assistant.

- You said you like blogs about AI? It will suggest better titles next time.

- You asked for code examples before? It will start including them automatically.

- You prefer human-style explanations? The agent remembers that tone.

So instead of being a one-time answer machine, this agent becomes a long-term assistant — like a helpful writing partner who actually remembers how you work.

Let me walk you through a simple Python example of a memory-enhanced AI agent that:

- Remembers your name

- Remembers your favorite topic

- Uses a file-based memory (you can replace it with a database later)

Step-by-step Breakdown

The agent will:

- Check if memory exists (a JSON file)

- If not, ask your name and topic — and save them

- If memory exists, greet you with your name and talk about your topic

Python Code: Memory-Enhanced AI Agent

```python
import json
import os

MEMORY_FILE = "agent_memory.json"

def load_memory():
    if os.path.exists(MEMORY_FILE):
        with open(MEMORY_FILE, "r") as f:
            return json.load(f)
    return {}

def save_memory(memory):
    with open(MEMORY_FILE, "w") as f:
        json.dump(memory, f)

def agent():
    memory = load_memory()

    if not memory.get("name"):
        name = input("Hello! What's your name? ")
        memory["name"] = name
        save_memory(memory)
    else:
        print(f"Welcome back, {memory['name']}!")

    if not memory.get("favorite_topic"):
        topic = input(f"{memory['name']}, what's your favorite topic in AI? ")
        memory["favorite_topic"] = topic
        save_memory(memory)
    else:
        print(f"Still exploring {memory['favorite_topic']}? I’ve got more content for you!")

    # The agent responds using memory
    print(f"Okay {memory['name']}, let's continue learning about {memory['favorite_topic']}.")

# Run the agent
agent()
```

Output (First Run)

Hello! What's your name? Emmimal Alexander

Emmimal Alexander, what's your favorite topic in AI? AI Agents

Okay Emmimal Alexander, let's continue learning about AI Agents.

Second Run (after memory is saved)

Welcome back, Emmimal Alexander!

Still exploring AI Agents? I’ve got more content for you!

Okay Emmimal Alexander, let's continue learning about AI Agents.

Technical Implementation

Memory architecture includes:

- Long-term storage systems for user data

- Conversation history databases

- Preference learning algorithms

- Context window management

- Memory retrieval and ranking systems

- Privacy-preserving data handling

- Memory consolidation and summarization
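Of the pieces listed above, context window management is the easiest to sketch: keep only the most recent turns so the memory never grows without bound. The `ConversationMemory` class and its `max_turns` parameter are hypothetical names for this example.

```python
from collections import deque


class ConversationMemory:
    """Keeps only the most recent turns of a conversation."""

    def __init__(self, max_turns: int = 3):
        # A deque with maxlen silently drops the oldest item when full
        self.turns = deque(maxlen=max_turns)

    def add(self, speaker: str, text: str) -> None:
        self.turns.append((speaker, text))

    def context(self) -> str:
        """Return the remembered turns as text an agent could read back."""
        return "\n".join(f"{speaker}: {text}" for speaker, text in self.turns)


memory = ConversationMemory(max_turns=3)
memory.add("user", "My name is Emmimal.")
memory.add("agent", "Nice to meet you, Emmimal.")
memory.add("user", "I'm writing about AI agents.")
memory.add("agent", "Noted. Want help with the next section?")
print(memory.context())
```

Because the window holds three turns, the first message has already been dropped by the time the fourth is added. Long-term facts such as the user's name would need a separate store, like the JSON file used earlier.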

Key Advantages

- Personalized user experiences

- Improved responses over time

- Context-aware conversations

- Relationship building capability

- Efficient handling of repeat queries

- Adaptive behavior patterns

Limitations

- Privacy and security concerns

- Storage and computational overhead

- Potential for biased learning

- Memory management complexity

- Risk of outdated information

- Difficult to scale across users

Real-World Applications

- Personal assistant apps (Siri, Alexa)

- Recommendation systems (Netflix, Spotify)

- Customer service chatbots

- Learning management systems

- Personal finance advisors

- Health monitoring applications

4. React + RAG Agent

What exactly is a React + RAG Agent?

Alright, let’s split it into two parts:

Part 1: React (Reactive Response)

This is like the fast reflexes of the agent.

When you ask it something, it doesn’t just sit there trying to be super smart — it immediately responds with something quick and relevant.

Example:

You: “What’s GPT-4?”

Agent: “Let me check that for you…”

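That’s the “React” part. It knows how to hold the conversation naturally, just like a good assistant would say, “Hang on, I’ll check.” (Here “React” means reactive. It is a different idea from the ReAct “reason + act” prompting pattern you may see in LLM papers and frameworks.)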

Part 2: RAG (Retrieval-Augmented Generation)

This is where the agent works smart.

Once it gives that quick reaction, it doesn’t stop there. It goes out and retrieves the best answer from a knowledge base, website, PDF, or even a private database you gave it.

Then it generates a final response using what it just pulled in.

Example continued:

Agent: “GPT-4 is a large language model developed by OpenAI. It’s known for its ability to understand and generate human-like text.”

This final answer was not memorized — it was fetched and then composed.

So how do these two work together?

Let’s say you’re chatting with an AI on a website.

- You ask something.

- React kicks in: it responds fast — “Let me look that up for you.”

- RAG goes hunting in the background: maybe it checks a PDF, a database, or even Google.

- It finds the right info, brings it back, and generates a high-quality response.

- You get a reply that’s not only quick, but also deep and accurate.

Let’s build a simple React + RAG agent in Python using OpenAI + a small knowledge base. We’ll use:

- React = Fast initial response

- RAG = Retrieve relevant info from documents (we’ll use FAISS + LangChain)

What This Agent Will Do:

- React immediately when asked a question

- Retrieve relevant answers from a small text-based knowledge base

- Generate a helpful response using OpenAI

Required Installations:

```bash
pip install langchain openai faiss-cpu tiktoken
```

Step 1: Sample Knowledge Base

```python
documents = [
    "GPT-4 is a multimodal large language model developed by OpenAI.",
    "LangChain is a framework for developing applications powered by language models.",
    "Retrieval-Augmented Generation enhances language models with external data access.",
    "FAISS is a library for efficient similarity search on dense vectors."
]
```

Step 2: Index the Knowledge Base (using FAISS)

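```python
# Note: these import paths match older LangChain releases. Newer versions
# expose the same classes from packages such as langchain_community and
# langchain_openai, so adjust the imports to your installed version.
from langchain.vectorstores import FAISS
from langchain.embeddings.openai import OpenAIEmbeddings
from langchain.text_splitter import CharacterTextSplitter
from langchain.docstore.document import Document

# Split long text into chunks
text_splitter = CharacterTextSplitter(chunk_size=100, chunk_overlap=0)
docs = [Document(page_content=chunk) for doc in documents for chunk in text_splitter.split_text(doc)]

# Create vector store (requires the OPENAI_API_KEY environment variable)
embeddings = OpenAIEmbeddings()
vectorstore = FAISS.from_documents(docs, embeddings)
```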

Step 3: Set Up the Retrieval + Generation Agent

```python
from langchain.chains import RetrievalQA
from langchain.chat_models import ChatOpenAI

# Load OpenAI model
llm = ChatOpenAI(temperature=0, model_name="gpt-3.5-turbo")

# Combine retriever and generator
rag_chain = RetrievalQA.from_chain_type(
    llm=llm,
    retriever=vectorstore.as_retriever(),
    return_source_documents=True
)
```

Step 4: Run the Agent (React + RAG)

```python
while True:
    query = input("You: ")

    # Stop before doing any work if the user wants to leave
    if query.lower() in ['exit', 'quit']:
        break

    # React Part: Immediate feedback
    print("Agent: Let me find that for you...")  # Reactive behavior

    # RAG Part: Retrieve + Answer
    result = rag_chain(query)
    print("Answer:", result['result'])
```

Output

You: What is LangChain?

Agent: Let me find that for you...

Answer: LangChain is a framework for developing applications powered by language models.

Want to Connect This to Your Own Data?

Replace documents with:

- Text from PDFs

- Data from websites

- Internal wikis or Notion pages

Technical Implementation

System components:

- Reactive response generation for immediate feedback

- Vector databases for semantic search

- Embedding models for query understanding

- Retrieval ranking and filtering systems

- Information synthesis and summarization

- Real-time knowledge base integration

- Context-aware response generation
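To see what the retrieval part of this list does without an API key, here is a dependency-free sketch that swaps the embedding model and FAISS for plain word counts and cosine similarity. This is a deliberate simplification, not how FAISS or OpenAI embeddings work internally, and the function names are made up for this example.

```python
import math
import re
from collections import Counter

DOCUMENTS = [
    "GPT-4 is a multimodal large language model developed by OpenAI.",
    "LangChain is a framework for developing applications powered by language models.",
    "Retrieval-Augmented Generation enhances language models with external data access.",
    "FAISS is a library for efficient similarity search on dense vectors.",
]


def vectorize(text: str) -> Counter:
    """Turn text into a bag-of-words vector (word -> count)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    query_vec = vectorize(query)
    ranked = sorted(DOCUMENTS, key=lambda d: cosine(query_vec, vectorize(d)), reverse=True)
    return ranked[:k]


print(retrieve("What is LangChain?"))
```

In a full RAG agent, the retrieved text would then be passed to the language model as context for the final answer. Real embeddings capture meaning rather than exact word overlap, which is why the LangChain version handles rephrased questions better.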

Key Advantages

- Access to current information

- Responsive user interaction

- High accuracy through retrieval

- Scalable knowledge integration

- Reduced hallucination risks

- Dynamic information updates

Limitations

- Dependent on knowledge base quality

- Higher computational requirements

- Potential retrieval latency

- Complex system architecture

- Information source reliability issues

- Difficulty handling conflicting sources

Real-World Applications

- Modern search engines with AI

- Customer support systems

- Research assistance tools

- Documentation querying systems

- News and information aggregators

- Academic research assistants

5. Self-Learning Agents

A self-learning agent is like that one curious person who doesn’t just follow rules—they try something, watch what happens, learn from it, and then try again… better.

It is like a child who learns through trial and error. No one gives it the correct answer—it figures it out by doing things and watching what happens.

- It doesn’t follow a fixed set of instructions.

- It learns from experience.

- It changes its behavior to get better over time.

So instead of being told what to do, it explores, experiments, remembers outcomes, and improves.

Let’s say you’re teaching a robot to choose the right button out of two:

- Button A gives 1 point.

- Button B gives 10 points.

But the robot doesn’t know that. So what should it do?

It tries both buttons randomly, like this:

- Presses Button A → gets 1 point

- Presses Button B → gets 10 points

- Presses Button A again → gets 1 point

- Presses Button B again → gets 10 points

Eventually, it realizes:

“Hmm… Button B gives more reward.”

Now it starts choosing B more often. That’s self-learning.

Workflow of a Self-Learning Agent

Here’s how the agent works step by step:

- Observe the environment (What options are available? What happened last time?)

- Act (Make a choice — like pressing Button A or B)

- Receive Feedback (Did it get 1 point or 10 points?)

- Learn from the feedback (Store this result)

- Adjust its future actions (Choose better next time)

It loops this process again and again, improving each time.

These agents use reinforcement learning, neural networks, or pattern recognition to make this happen. So they aren’t stuck with fixed instructions—they evolve. Just like we do when we learn from our mistakes.

Let’s Code a Simple Self-Learning Agent in Python

We’ll build an agent that has to choose between two options (A and B), and learns which one gives more reward.

```python
import random

# Rewards are hidden from the agent
REWARDS = {
    "A": 1,
    "B": 10
}

# Agent's knowledge: estimated average reward per option (starts equal)
agent_knowledge = {
    "A": 0.0,
    "B": 0.0
}

# How many times each option has been tried
tries = {"A": 0, "B": 0}

# Total number of rounds
rounds = 20

for step in range(rounds):
    # Choose randomly in the beginning or based on learned value
    if random.random() < 0.2:  # 20% exploration
        choice = random.choice(["A", "B"])
    else:  # 80% exploitation of learned best
        choice = max(agent_knowledge, key=agent_knowledge.get)

    # Get reward from environment
    reward = REWARDS[choice]

    # Update knowledge with a running average, so an option tried often
    # does not look better just because its rewards were added up more times
    tries[choice] += 1
    agent_knowledge[choice] += (reward - agent_knowledge[choice]) / tries[choice]

    print(f"Step {step+1}: Chose {choice}, Got Reward = {reward}")
    print(f"Current Knowledge: {agent_knowledge}")
    print("-" * 40)
```

What’s Happening in This Code?

- The agent starts knowing nothing.

- It tries both buttons randomly.

- After every choice, it updates its score for that option.

- Over time, it sees that Button B gives more reward, so it starts choosing B more.

- The agent is learning from experience — no one told it that B was better.

Why Is This Important?

A similar feedback loop is used to train or tune systems such as game-playing bots, recommendation engines, and chat assistants like ChatGPT:

- They try things (respond to users, play moves, suggest content).

- They see what works (Do users like it? Did the move win the game?)

- They learn from that feedback and improve.

This is the power of self-learning — they keep getting smarter on their own.

Technical Implementation

Learning mechanisms include:

- Reinforcement learning algorithms

- Performance feedback analysis

- Strategy optimization techniques

- Pattern recognition systems

- Adaptive parameter tuning

- Experience replay mechanisms

- Multi-armed bandit approaches

Key Advantages

- Continuous performance improvement

- Adaptation to changing conditions

- Discovery of optimal strategies

- Reduced need for manual tuning

- Emergent problem-solving abilities

- Long-term value accumulation

Limitations

- Potential for learning incorrect patterns

- Risk of catastrophic forgetting

- Computationally intensive training

- Unpredictable behavior during learning

- Requires significant training data

- Difficult to interpret learning process

Real-World Applications

- Recommendation algorithms (Amazon, YouTube)

- Trading and investment bots

- Game-playing AI (AlphaGo, OpenAI Five)

- Autonomous vehicle systems

- Personalized learning platforms

- Dynamic pricing systems

6. LLM-Enhanced Agent

We already know what an agent is: anything that perceives its environment, makes decisions, and takes actions to achieve a goal. For example:

- A recommendation engine is an agent that suggests products based on your preferences.

- A chatbot is an agent that responds to your questions.

What is a Large Language Model (LLM)?

A Large Language Model, like GPT-4 or Llama 3, is trained on massive amounts of text (books, websites, etc.). It learns how words relate to each other in context. It doesn’t memorize—it learns patterns.

For example:

- If you say, “I want to book a hotel,” it knows you’re looking for lodging.

- If you say, “I need a quiet place to stay near the beach,” it still understands you’re asking the same thing—just in a different way.

So, LLMs understand meaning, generate responses, and follow instructions.

Now Combine Them: LLM + Agent

When you plug an LLM into an agent, you get something smarter.

An LLM-enhanced agent doesn’t just follow pre-written rules. It reasons with language.

Let’s say it’s a customer support agent.

Without LLM:

“I have a problem with my order.”

– It checks: “Is ‘problem’ a keyword?”

– If yes, it replies: “Please explain your problem.”

But it can’t understand the real issue.

With LLM:

“I ordered a phone last week. It hasn’t arrived and the tracking link says pending.”

– It understands:

- What item was ordered

- When it was ordered

- The current status

– It can reply like a human:

“Thanks for reaching out. I see your phone hasn’t shipped yet. That can happen during high-demand periods. Would you like me to check with the warehouse or issue a refund?”

Here’s what’s happening internally:

| Component | What it does |

|---|---|

| Perception | Reads your message and understands it |

| Reasoning | Figures out what might’ve gone wrong |

| Planning | Decides how to handle the issue (check, refund, etc.) |

| Action | Sends a response that makes sense |

Internal Structure of an LLM-Enhanced Agent

It’s not just one big block. These agents are modular. They often include:

- Input Interpreter – Parses the user’s request (e.g., “Book a ticket”).

- LLM Core – This is the “brain” that understands and reasons using language.

- Memory System – Keeps track of the ongoing conversation or prior tasks.

- Tools/Plugins – Can access external systems like:
  - Flight booking APIs
  - Calendar apps
  - Browsers

- Planner – Breaks tasks into steps, like:
  - Find flights
  - Compare times and prices
  - Ask user for confirmation

- Executor – Carries out actions (e.g., “Book the 6 PM flight”).

Example:

User: “Reschedule my dentist appointment next week to Friday morning.”

The agent:

- Understands “reschedule,” “dentist,” “next week,” and “Friday morning”

- Checks your calendar for the appointment

- Sees dentist appointment on Tuesday at 3 PM

- Contacts the dentist’s system (via API)

- Books a Friday morning slot

- Confirms with you
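The dentist example can be sketched as a tiny interpret, plan, execute pipeline. Everything here is a stand-in: `fake_llm_interpret` returns a hardcoded structure where a real agent would call a language model, the `calendar` dictionary replaces a real calendar API, and the "Friday 9 AM" slot is an assumed value.

```python
# Hypothetical in-memory calendar standing in for a real calendar API
calendar = {"dentist": "Tuesday 3 PM"}


def fake_llm_interpret(request: str) -> dict:
    """Stand-in for the LLM core. A real agent would ask a language model
    to extract this structure from the free-text request."""
    return {"intent": "reschedule", "event": "dentist", "new_slot": "Friday 9 AM"}


def plan(parsed: dict) -> list[str]:
    """Planner: break the task into ordered steps."""
    if parsed["intent"] == "reschedule":
        return ["find_event", "book_new_slot", "confirm"]
    return []


def execute(steps: list[str], parsed: dict) -> str:
    """Executor: carry out each step against the calendar."""
    old_slot = None
    for step in steps:
        if step == "find_event":
            old_slot = calendar[parsed["event"]]
        elif step == "book_new_slot":
            calendar[parsed["event"]] = parsed["new_slot"]
        elif step == "confirm":
            return (f"Moved your {parsed['event']} appointment "
                    f"from {old_slot} to {parsed['new_slot']}.")
    return "Nothing to do."


request = "Reschedule my dentist appointment next week to Friday morning."
parsed = fake_llm_interpret(request)
reply = execute(plan(parsed), parsed)
print(reply)
```

The modular split is the useful part: the interpreter could be swapped for a real model call and the calendar for a real API without touching the planner.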

Technical Implementation

Architecture includes:

- Pre-trained transformer models

- Fine-tuning for specific domains

- Prompt engineering and optimization

- Context window management

- Response generation and filtering

- Multi-modal integration capabilities

- Safety and alignment mechanisms

Key Advantages

- Natural language interaction

- Complex reasoning abilities

- Versatile task handling

- Human-like communication

- Creative content generation

- Contextual understanding

Limitations

- High computational requirements

- Potential for hallucinations

- Bias in training data

- Limited real-time knowledge

- Expensive to operate at scale

- Difficulty with factual accuracy

Real-World Applications

- ChatGPT and similar conversational AI

- Code generation tools (GitHub Copilot)

- Writing assistants (Grammarly, Jasper)

- Document analysis systems

- Language translation services

- Content creation platforms

7. Tool-Enhanced Agent

What Is a Tool-Enhanced Agent?

A Tool-Enhanced Agent is an AI agent that doesn’t just rely on its internal knowledge.

Instead, it gets help from external tools (a calculator, a code interpreter, a web browser, a database, or an API) to complete tasks it can’t do alone.

In short:

It knows what it knows, and when it doesn’t know something, it picks a suitable tool to fill the gap.

What Makes It Special

The point is, it’s still powered by a language model that understands your request. But here’s where it gets clever: when it sees you asking something like “What’s 234.5 multiplied by 98.7?” it doesn’t guess. It runs the calculation through a calculator tool, then gives you a precise result, like “23,145.15”, with no round-offs and no fuzzy math.

Similarly, if you ask “What’s the weather in New York?” it doesn’t rely on memory from training data. Instead, it taps into a weather API or live web search and replies with something current: “It’s 21°C and cloudy.”

So it’s not just speaking; it’s acting—knowing when it needs help and reaching out for it.

Strength of Tool-Enhanced Agents

The real strength of tool-enhanced agents lies in their ability to act — not just generate. They decide which tool is needed for a task, execute it, and then combine the results with natural language. So if you ask something complex like, “Calculate the square root of 2048 and tell me the capital of Burkina Faso,” it will handle the math using a calculator and confirm the capital using a search tool, finally replying with both results in a smooth, human-like answer.

In modern applications, these agents are becoming the backbone of AI-powered platforms. They help customer support teams by checking order status using backend tools, assist students by solving math problems with precise logic, and power research assistants that can fetch academic papers, summarize them, and even generate citations. Behind the scenes, they switch between tools like calculators, code runners, file readers, web search engines, and more, depending on what you need.

The point is simple: a tool-enhanced agent doesn’t try to know everything — it knows how to find and do things using the right external tools at the right time. This makes it much more capable, especially when accuracy, real-time data, or action-taking is involved.

Architecture of a Tool-Enhanced Agent

Let’s now break it into components, step by step.

Main Components:

| Component | Description |

|---|---|

| LLM Core | Understands language, makes decisions |

| Toolbox / Toolset | Collection of tools it can use |

| Tool Selector | Chooses which tool is needed |

| Controller | Manages flow: “What next?” |

| Memory (optional) | Stores past actions and results |

Agent Workflow: Step by Step

Let’s take an example query:

“What is 234.5 * 98.7, and what’s the weather in Coimbatore?”

Here’s what happens internally:

- Input: User gives the question.

- LLM Core: Reads and understands that this query has two tasks:
  - A math calculation.
  - A weather search.

- Tool Selector:
  - Picks the calculator tool for math.
  - Picks the web API for weather.

- Tool Calls:
  - Sends “234.5 × 98.7” to the calculator tool.
  - Sends “weather in Coimbatore” to a weather API.

- Results Received:
  - Gets 23145.15 from calculator.
  - Gets 31°C, cloudy from weather API.

- LLM Response Generator:
  - Combines both results into a single natural-language reply.

What Are These “Tools”?

Tools are external functions or services the agent can call when needed.

Here are different types of tools:

| Tool Type | What It Does |

|---|---|

| Calculator | Performs accurate math |

| Code Interpreter | Runs Python or other code |

| Web Search Tool | Looks up information online |

| Weather API | Gets real-time weather info |

| PDF Reader | Extracts text from files |

| Database Tool | Reads or writes user-specific data |

| Email/Calendar API | Reads, sends, schedules emails or meetings |

Each tool can be a function, a script, or a third-party service.

Let’s see how we can access tools through Python.

Code Example: Tool-Enhanced Agent

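```python
import re

# TOOL: Calculator
def calculator(expression):
    # eval() is tolerable here only because the agent passes in a
    # regex-validated "number operator number" string, never raw user input
    return eval(expression, {"__builtins__": {}}, {})

# TOOL: Weather API (mocked)
def weather_lookup(city):
    if city.lower() == "coimbatore":
        return "31°C, Cloudy"
    return "Unknown"

# AGENT: checks for each task separately, so one query can use both tools
def tool_enhanced_agent(user_input):
    replies = []

    # Pull the arithmetic expression out of the question instead of hardcoding it
    math_match = re.search(r"\d+(?:\.\d+)?\s*[-+*/]\s*\d+(?:\.\d+)?", user_input)
    if math_match:
        replies.append(f"Answer: {calculator(math_match.group())}")

    if "weather" in user_input.lower() and "coimbatore" in user_input.lower():
        replies.append(f"Weather: {weather_lookup('Coimbatore')}")

    return " | ".join(replies) if replies else "I don’t know."

print(tool_enhanced_agent("What is 234.5 * 98.7 and the weather in Coimbatore?"))
```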

In a real agent, these decisions are made dynamically, and tools are accessed through APIs or function calls.
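To make "decided dynamically" more concrete, here is a hedged sketch in which the model's decision arrives as structured data and a small controller dispatches it. `fake_llm_plan` is a hypothetical stand-in for a real LLM call, and the JSON shape (`tool`, `args`) is an assumption for illustration, not any vendor's format.

```python
import json
import re

def multiply(a, b):
    return round(a * b, 2)

def weather_lookup(city):
    # Mocked; a real agent would call an external weather API here
    return "31°C, Cloudy" if city.lower() == "coimbatore" else "Unknown"

TOOLS = {"multiply": multiply, "weather": weather_lookup}

def fake_llm_plan(user_input):
    """Hypothetical stand-in for an LLM that returns tool calls as JSON."""
    plan = []
    math = re.search(r"(\d+(?:\.\d+)?)\s*\*\s*(\d+(?:\.\d+)?)", user_input)
    if math:
        args = {"a": float(math.group(1)), "b": float(math.group(2))}
        plan.append({"tool": "multiply", "args": args})
    city = re.search(r"weather in (\w+)", user_input, re.IGNORECASE)
    if city:
        plan.append({"tool": "weather", "args": {"city": city.group(1)}})
    return json.dumps(plan)

def run_agent(user_input):
    """Controller: parse the plan, look up each tool, and call it."""
    results = []
    for call in json.loads(fake_llm_plan(user_input)):
        tool = TOOLS.get(call["tool"])
        if tool is None:
            results.append(f"Unknown tool: {call['tool']}")
            continue
        results.append(tool(**call["args"]))
    return results

print(run_agent("What is 234.5 * 98.7 and the weather in Coimbatore?"))
```

The controller never inspects the user's text itself; it only executes whatever plan it receives, which is the separation between tool selection and tool execution described in the workflow.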

Technical Implementation

Integration components:

- API management and authentication

- Tool selection and routing logic

- Request formatting and validation

- Response parsing and interpretation

- Error handling and fallback mechanisms

- Security and sandboxing systems

- Tool capability discovery
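A few of these components (request validation, error handling, and fallback) can be sketched in a single wrapper. All names here, including `call_tool_safely`, `flaky_weather_api`, and `cached_weather`, are hypothetical, and the outage is simulated rather than produced by a real service.

```python
def flaky_weather_api(city):
    """Hypothetical external tool that always fails, to simulate an outage."""
    raise ConnectionError("weather service unreachable")

def cached_weather(city):
    """Hypothetical fallback: last known value from a local cache."""
    return {"coimbatore": "31°C, Cloudy (cached)"}.get(city.lower(), "Unknown")

def call_tool_safely(primary, fallback, city, retries=2):
    # Request validation: reject bad input before any tool is called
    if not isinstance(city, str) or not city.strip():
        return "Error: city must be a non-empty string"
    for attempt in range(1, retries + 1):
        try:
            return primary(city)
        except ConnectionError as err:
            print(f"Attempt {attempt} failed: {err}")
    # Fallback mechanism: degrade gracefully instead of crashing
    return fallback(city)

print(call_tool_safely(flaky_weather_api, cached_weather, "Coimbatore"))
```

Bounding the retries and falling back to cached data is one way to keep a single failing service from cascading into a failed reply.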

Key Advantages

- Extended capability range

- Access to real-time data

- Specialized task execution

- Modular functionality expansion

- Reduced hallucination in factual tasks

- Cost-effective capability scaling

Limitations

- Dependency on external services

- API reliability and availability issues

- Security and privacy concerns

- Integration complexity

- Potential for cascading failures

- Tool maintenance overhead

Real-World Applications

- Wolfram Alpha integration in assistants

- Code execution environments

- Research tools with database access

- Weather and news integration services

- Financial data analysis tools

- Scientific computation platforms

8. Self-Reflecting Agent

A Self-Reflecting Agent is an AI system that can analyze its own thoughts, decisions, and actions—not just solve problems, but also think about how it solved them and improve itself based on that reflection.

It doesn’t just answer questions like a chatbot. It looks back at its own reasoning, asks “Was this the best way?”, and even changes its behavior if needed.

Simple Example: The Difference

Let’s say an agent is solving a math problem:

“What’s 17 × 24?”

- A regular agent answers: “408.”

- A self-reflecting agent answers: “17 × 24 = 408.”

[Afterward]: “Let me double-check. I did 17 × 24 = 408. I used multiplication directly. That looks correct, no error in logic.”

If it had made a mistake, it might catch it and say:

“Wait—my answer was wrong. I forgot to carry over the digit in the ones place. Let me fix that.”

That self-correction process is what makes it different.

What It Actually Does

A self-reflecting agent goes through two phases:

- Initial Reasoning – It tries to solve the task as usual.

- Reflection Phase – It stops and reviews its own reasoning path:

  - Was the solution correct?

  - Was there a better way?

  - Did I miss a step?

  - Did I contradict myself?

This is like a student solving a problem, then checking their steps before submitting it.

Where Is Reflection Used?

Here are real examples where reflection helps:

- Math reasoning: Fixing missteps in multi-step problems.

- Code generation: Reviewing generated code and catching syntax errors.

- Long-form answers: Reviewing text for logical consistency.

- Planning tasks: Rechecking if steps are in the right order or if some step was skipped.

Reflection helps reduce hallucination, improves accuracy, and lets the agent learn from its own mistakes.

How It Works Internally

Technically, self-reflection can be implemented using:

- Chain-of-thought prompting: Agent writes down each step.

- Self-evaluation prompts: The agent is prompted: “Check your answer.”

- Critic models: Another AI model acts as a reviewer or critic.

- Multi-pass loops: The agent solves, reflects, and refines until it’s confident.

It might look like this:

Thought 1: Step-by-step solution

Thought 2: Wait, this doesn't seem right

Reflection: I assumed something that’s not true

Revised Thought: Let's correct it by adjusting step 2

Final Answer: [Corrected result]
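The solve, reflect, refine cycle can be sketched as a bounded loop. `draft_answer` and `critique` are hypothetical stand-ins for LLM calls (the solver is deliberately wrong on its first try), and the `max_passes` cap is an assumption added here so the loop always terminates.

```python
def draft_answer(problem, feedback=None):
    """Hypothetical solver: wrong on the first try, fixed once given feedback."""
    if problem == "17 * 24":
        return 398 if feedback is None else 408
    return None

def critique(problem, answer):
    """Hypothetical critic: recomputes the product, returns feedback or None."""
    a, b = (int(part) for part in problem.split("*"))
    if answer != a * b:
        return f"{answer} does not equal {a} x {b}; recompute."
    return None  # None means the critic found no problem

def reflect_loop(problem, max_passes=3):
    answer, feedback, trace = None, None, []
    for _ in range(max_passes):
        answer = draft_answer(problem, feedback)
        feedback = critique(problem, answer)
        trace.append((answer, feedback))
        if feedback is None:  # confident enough: stop reflecting
            break
    return answer, trace

answer, trace = reflect_loop("17 * 24")
print("Final Answer:", answer)
for step in trace:
    print(step)
```

Here the first pass produces 398, the critic rejects it, and the second pass returns 408, after which the loop stops.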

In Short

A Self-Reflecting Agent is like an AI with a second brain—one that watches the first and says: “Let’s make sure this is right.”

It’s no longer just about solving.

It’s about solving better, checking, and improving itself.

Let’s explore building a simple self-reflecting agent in Python — from scratch — using only basic logic and language model simulation. We won’t use any big frameworks like LangChain.

This simple agent will:

- Generate a solution to a math problem.

- Reflect on its own solution.

- Correct itself if it detects a mistake.

We’ll simulate this behavior using Python functions. In practice, you’d use a real LLM (like GPT or Claude), but here we’ll focus on the logic behind it.

Python Code: Self-Reflecting Agent

    import re

    # Step 1: Agent solves the problem
    def solve_problem(prompt):
        if "15% of 240" in prompt:
            return "15% of 240 is 24."  # Intentional mistake
        elif "12 * 11" in prompt:
            return "12 * 11 = 132."
        else:
            return "I don't know."

    # Helper: read the final number out of an answer string
    def extract_result(answer):
        numbers = re.findall(r"\d+(?:\.\d+)?", answer)
        return float(numbers[-1]) if numbers else None

    # Step 2: Agent reflects on its answer
    def reflect_on_answer(problem, answer):
        claimed = extract_result(answer)
        if "15% of 240" in problem:
            # Verify using actual math, then compare with the claimed result
            correct = 0.15 * 240
            if claimed == correct:
                return f"I rechecked the percentage. 0.15 × 240 = {correct}. The original answer is correct."
            reflection = f"Wait. 15% means 0.15 × 240 = {correct}. So the original answer was incorrect."
            revised = f"The correct answer is {correct}."
            return reflection + "\n" + revised
        elif "12 * 11" in problem:
            correct = 12 * 11
            if claimed == correct:
                return f"I rechecked the multiplication. 12 × 11 = {correct}. The original answer is correct."
            return f"Wait. 12 × 11 = {correct}. So the original answer was incorrect.\nThe correct answer is {correct}."
        else:
            return "I can't verify this one."

    # Step 3: Self-Reflecting Agent
    def self_reflecting_agent(prompt):
        print(f"Problem: {prompt}")
        # Initial answer
        answer = solve_problem(prompt)
        print(f"Initial Answer: {answer}")
        # Reflection
        reflection = reflect_on_answer(prompt, answer)
        print(f"Reflection:\n{reflection}")

    # Try the agent on two problems:
    self_reflecting_agent("What is 15% of 240?")
    print("\n" + "="*50 + "\n")
    self_reflecting_agent("What is 12 * 11?")

Output:

    Problem: What is 15% of 240?
    Initial Answer: 15% of 240 is 24.
    Reflection:
    Wait. 15% means 0.15 × 240 = 36.0. So the original answer was incorrect.
    The correct answer is 36.0.

    ==================================================

    Problem: What is 12 * 11?
    Initial Answer: 12 * 11 = 132.
    Reflection:
    I rechecked the multiplication. 12 × 11 = 132. The original answer is correct.

Here:

- The agent tries a first answer (which might be wrong).

- Then it reflects using a second function that redoes the logic.

- If the first answer was wrong, it corrects itself.

- If the answer was right, it explains why.
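The demo only knows how to check the two problems written into it. As a hedged sketch of a more general check, the reflection step can recompute any claim of the form `a op b = c` on its own. `safe_eval` and `verify_claim` are hypothetical helper names; only Python's standard `ast` and `operator` modules are used.

```python
import ast
import operator

# Only these arithmetic operators are allowed
OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
       ast.Mult: operator.mul, ast.Div: operator.truediv}

def safe_eval(expression):
    """Evaluate +, -, *, / on numbers without calling eval()."""
    def walk(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in OPS:
            return OPS[type(node.op)](walk(node.left), walk(node.right))
        raise ValueError("unsupported expression")
    return walk(ast.parse(expression, mode="eval").body)

def verify_claim(claim):
    """Check a claim such as '12 * 11 = 132' by recomputing the left side."""
    left, right = claim.split("=")
    expected = safe_eval(left.strip())
    claimed = float(right.strip())
    if abs(expected - claimed) < 1e-9:
        return f"Verified: {claim}"
    return f"Incorrect: {left.strip()} is {expected}, not {right.strip()}"

print(verify_claim("12 * 11 = 132"))
print(verify_claim("0.15 * 240 = 24"))
```

Because the verifier recomputes the result instead of trusting the first answer, it catches the same 15% mistake without any problem-specific branches.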

Technical Implementation

Reflection mechanisms:

- Output quality assessment algorithms

- Logical consistency checkers

- Alternative solution generation

- Error detection and correction systems

- Confidence scoring mechanisms

- Multi-step reasoning validation

- Self-criticism and improvement loops
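One simple way to approximate confidence scoring together with alternative solution generation is to sample several candidate answers and measure how often they agree. `sample_answers` is a hypothetical stand-in for repeated LLM calls with canned results, and the agreement ratio is an illustrative score, not a calibrated probability.

```python
from collections import Counter

def sample_answers(problem):
    """Hypothetical stand-in for sampling an LLM several times."""
    canned = {"17 * 24": [408, 408, 398, 408, 408]}
    return canned.get(problem, [])

def answer_with_confidence(problem, threshold=0.6):
    candidates = sample_answers(problem)
    if not candidates:
        return None, 0.0, "no candidates"
    best, votes = Counter(candidates).most_common(1)[0]
    confidence = votes / len(candidates)  # share of samples that agree
    verdict = "accept" if confidence >= threshold else "reflect again"
    return best, confidence, verdict

print(answer_with_confidence("17 * 24"))
```

With four of five samples agreeing on 408, the score is 0.8 and the answer is accepted; a stricter threshold would send the agent back for another reflection pass.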

Key Advantages

- Higher output quality and accuracy

- Error detection and correction

- Improved logical consistency

- Enhanced reliability for critical tasks

- Transparent reasoning processes

- Continuous self-improvement

Limitations

- Increased computational overhead

- Potential for over-analysis paralysis

- Risk of infinite reflection loops

- Complex implementation requirements

- Difficulty in defining quality metrics

- May slow down response times

Real-World Applications

- Code review and analysis tools

- Mathematical proof checkers

- Scientific hypothesis validators

- Legal document analyzers

- Medical diagnosis verification systems

- Financial risk assessment tools

Summary Table:

| Type | Key Feature | Example Use Case |
|---|---|---|
| Fixed Automation | Hardcoded tasks | Manufacturing robots |
| Environment-Controlled | Bounded, stable environment | Elevators, smart thermostats |
| Memory-Enhanced | Remembers past states | Personalized chatbots |
| ReAct + RAG | Combines reasoning and acting with retrieval | Dynamic question-answering |
| Self-Learning | Learns from experience | Adaptive recommendation systems |
| LLM-Enhanced | Language understanding & reasoning | Document summarizers, AI assistants |
| Tool-Enhanced | Uses external APIs/tools | Math solver, AI researcher |
| Self-Reflecting | Evaluates own output | Code analyzers, logic-critical bots |

Conclusion

The world of AI agents is growing rapidly. It’s not just about smart assistants anymore—these agents are:

- Learning from experience

- Calling tools in real time

- Retrieving knowledge from external sources

- Reflecting on their own performance

Whether you’re a developer, researcher, or product builder, knowing these 8 advanced AI agent types will help you design more capable, adaptable systems.

8 Advanced Types of AI Agents

Explore the cutting-edge AI agent architectures shaping the future

Memory Enhanced AI Agents

Memory Enhanced AI Agents are sophisticated systems that maintain persistent memory across interactions, enabling them to learn from past experiences and build context over time.

Key Features:

- Long-term memory storage and retrieval

- Context-aware decision making

- Continuous learning from interactions

- Personalized responses based on history

These agents excel in applications requiring continuity, such as personal assistants, customer service bots, and educational tutors that adapt to individual learning patterns.

Environment Controlled AI Agents

Environment Controlled AI Agents operate within defined virtual or physical environments where they can perceive, interact, and modify their surroundings in controlled ways.

Core Capabilities:

- Real-time environment perception

- Dynamic adaptation to environmental changes

- Safe exploration within boundaries

- Multi-modal interaction with surroundings

Common applications include robotics, simulation environments, smart home systems, and autonomous vehicles where controlled interaction with the physical world is crucial.

ReAct+RAG AI Agents

ReAct+RAG agents combine Reasoning and Acting (ReAct) with Retrieval-Augmented Generation (RAG) to create powerful systems that can reason through problems while accessing external knowledge bases.

Integration Benefits:

- Step-by-step reasoning with external knowledge lookup

- Dynamic information retrieval during thinking process

- Fact-checking capabilities with authoritative sources

- Reduced hallucination through grounded responses

These agents are particularly effective for research assistance, complex problem-solving, and knowledge-intensive tasks where both reasoning and accurate information retrieval are essential.

Fixed Automation AI Agents

Fixed Automation AI Agents are designed to execute predefined workflows and processes with high reliability and consistency, operating within well-defined parameters.

Characteristics:

- Deterministic behavior and predictable outcomes

- Rule-based decision making

- High reliability and consistency

- Optimized for specific, repetitive tasks

These agents excel in scenarios requiring precision and reliability, such as data processing pipelines, quality control systems, regulatory compliance checks, and routine administrative tasks.

Self Learning AI Agents

Self Learning AI Agents continuously improve their performance through experience, automatically adapting their strategies and knowledge base without explicit programming.

Learning Mechanisms:

- Reinforcement learning from environmental feedback

- Pattern recognition and strategy optimization

- Automatic feature extraction and model updates

- Self-evaluation and performance monitoring

Applications include recommendation systems, adaptive user interfaces, game AI, and any domain where continuous improvement based on user behavior or environmental changes is valuable.

LLM Enhanced AI Agents

LLM Enhanced AI Agents integrate Large Language Models as their core reasoning engine, enabling sophisticated natural language understanding and generation capabilities.

Enhanced Capabilities:

- Natural language reasoning and planning

- Complex instruction following and interpretation

- Multi-turn conversation and context management

- Code generation and technical documentation

These agents are transforming applications like content creation, code assistance, educational platforms, and customer support by providing human-like interaction and understanding.

Tool Enhanced AI Agents

Tool Enhanced AI Agents can dynamically access and utilize external tools, APIs, and services to extend their capabilities beyond their core training.

Tool Integration Features:

- Dynamic tool selection based on task requirements

- API integration and external service calls

- Multi-step workflow orchestration

- Real-time data access and processing

Examples include agents that can search the web, perform calculations, access databases, send emails, schedule meetings, or interact with specialized software systems to complete complex tasks.

Self Reflecting AI Agents

Self Reflecting AI Agents possess meta-cognitive abilities, enabling them to analyze their own reasoning processes, identify mistakes, and improve their decision-making strategies.

Reflection Capabilities:

- Self-evaluation of reasoning quality and outcomes

- Error detection and correction mechanisms

- Strategy refinement based on performance analysis

- Confidence assessment and uncertainty quantification

These agents are crucial for high-stakes applications where reliability and self-awareness are paramount, such as medical diagnosis systems, financial analysis, and critical decision-making platforms.

External Resources

Dive deeper into AI agent architectures and implementations

Explore these authoritative resources to gain deeper insights into the advanced AI agent types discussed in this post. Each resource provides technical depth and practical implementations for building sophisticated AI systems.

IBM: What is an Intelligent Agent? (Technical Overview)

Comprehensive introduction to intelligent agents, covering fundamental concepts, architectures, and real-world applications. Perfect for understanding the theoretical foundations behind modern AI agent systems.

LangChain: Building AI Agents (Implementation Guide)

Practical documentation for implementing AI agents using the LangChain framework. Includes code examples, best practices, and patterns for building ReAct, tool-enhanced, and LLM-powered agents.

ReAct: Synergizing Reasoning and Acting in Language Models (Research Paper)

Foundational research paper introducing the ReAct paradigm that combines reasoning and acting in language models. Essential reading for understanding how modern AI agents integrate thinking and action capabilities.
