The Rise of Qwen-2.5 Max: How Alibaba’s New AI Model Outperforms Competitors

Introduction

Artificial intelligence (AI) is growing fast, and it’s hard to keep up with all the changes. Companies and researchers are always creating new models to solve problems better and faster. Alibaba Cloud recently launched a new AI model called Qwen-2.5 Max.

It improves on Alibaba’s previous Qwen models and, according to Alibaba’s published benchmarks, outperforms competing models such as DeepSeek-V3 on several tests.

In this post, we’ll explain what makes Qwen-2.5 Max special, how well it performs, and how it compares to other models.

What Is Qwen-2.5 Max?

Qwen-2.5 Max is the newest and most capable model in Alibaba Cloud’s Qwen series. It can understand and process natural language, handle multiple types of data (like text and images), and solve complex problems in more than 100 languages.

What Sets Qwen-2.5 Max Apart?

1. Smart Use of Different Types of Data

Qwen-2.5 Max is built to handle text, images, audio, and video. Many AI models work only with text, but Qwen-2.5 Max goes further by combining different types of information in a single model.

Here’s how it stands out:

- For image captions, it not only identifies objects but understands their relationships and the context.

- For video analysis, it spots complex patterns, making it useful for security systems, content filtering, and recommending videos.

2. Handles Large Tasks Without Slowing Down

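Big AI models often need a lot of computing power, which makes them slow and expensive to run. Qwen-2.5 Max addresses this with a Mixture-of-Experts (MoE) architecture: for each token, a router activates only a small subset of the model’s expert sub-networks, so only a fraction of its parameters do work at any step. This lets it take on large-scale tasks without a proportional increase in compute.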

This means companies can run it on cloud services without high costs or performance problems, which is important for real-world use.
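Alibaba describes Qwen-2.5 Max as a large-scale Mixture-of-Experts (MoE) model, and MoE routing is one concrete way a model saves compute: each token is sent to only a few expert sub-networks. The sketch below is a toy illustration of top-k routing in plain Python, not Qwen’s implementation; the function names, the dot-product router, and the scaling "experts" are all hypothetical.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_layer(token, experts, gate_weights, k=2):
    """Route one token vector to its top-k experts and mix their outputs.

    token: list[float]; experts: callables mapping a vector to a vector;
    gate_weights: one router weight vector per expert (toy router).
    Returns the mixed output and the indices of the experts that ran.
    """
    # Router score per expert: dot product of the token with that expert's gate vector
    scores = [sum(t * w for t, w in zip(token, gw)) for gw in gate_weights]
    # Keep only the k highest-scoring experts; the others are never evaluated
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    probs = softmax([scores[i] for i in top])
    out = [0.0] * len(token)
    for p, i in zip(probs, top):
        y = experts[i](token)
        out = [o + p * v for o, v in zip(out, y)]
    return out, top

# Usage: four toy "experts" that scale their input by 1, 2, 3 and 4
experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
gates = [[1, 0], [0, 1], [1, 1], [-1, -1]]
out, used = moe_layer([1.0, 0.5], experts, gates, k=2)
print(used)  # [2, 0]: only experts 2 and 0 ran for this token
```

Because only k experts run per token, the compute per token stays roughly constant even as more experts (and parameters) are added.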

3. Strong Problem-Solving Skills

Qwen-2.5 Max is not just good at simple tasks like translations. It shines in situations that require complex thinking and step-by-step reasoning.

Here’s what it can do well:

- Solve difficult math problems in calculus, algebra, and statistics.

- Explain scientific concepts clearly, using data to support the explanation.

- Answer tricky open-ended questions by combining ideas.

This makes it well suited to tasks that need deep understanding and creativity.

Performance Benchmarks: How Does Qwen-2.5 Max Stack Up?

To get a clear picture of how Qwen-2.5 Max stands out, let’s compare it with other top models like DeepSeek-V3, GPT-4o, and Llama 3:

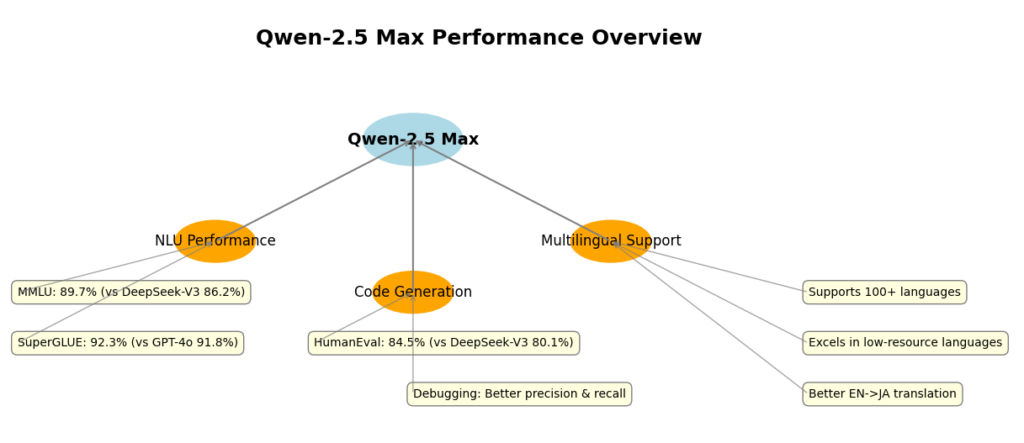

1. Natural Language Understanding (NLU) Performance

Qwen-2.5 Max excels in Natural Language Understanding (NLU) tasks, consistently ranking high in popular benchmarks like GLUE, SuperGLUE, and MMLU. Here’s how it compares:

- On the MMLU benchmark, which tests knowledge across 57 subjects (from history to physics), Qwen-2.5 Max scored 89.7%, outperforming DeepSeek-V3’s score of 86.2%.

- For SuperGLUE, which challenges models with tough language tasks, Qwen-2.5 Max scored 92.3%, slightly beating GPT-4o’s 91.8%.

2. Code Generation and Debugging Performance

As AI-assisted coding tools become more essential, Qwen-2.5 Max stands out for its code generation and debugging capabilities:

- On the HumanEval dataset, which tests how well a model generates correct Python code, Qwen-2.5 Max achieved a pass rate of 84.5%, outpacing DeepSeek-V3 (80.1%) and Llama 3 (79.8%).

- When it comes to debugging code, Qwen-2.5 Max showed higher precision and recall than the other models: the bugs it flagged were more often real, and it missed fewer of them.
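HumanEval scores are usually reported as pass@k: generate n samples per problem, count the c that pass the unit tests, and estimate the chance that at least one of k samples would pass. A single "pass rate" like 84.5% is most likely pass@1 averaged over the benchmark’s 164 problems. Precision and recall for debugging can be computed from any model’s flagged bug locations. A minimal sketch of both metrics follows; scoring debugging by bug locations is an assumption, since the article does not say how that evaluation was done.

```python
from math import comb

def pass_at_k(n, c, k):
    """Unbiased pass@k for one problem: n samples generated, c of them pass the unit tests."""
    if n - c < k:
        return 1.0  # every possible set of k samples contains a passing one
    return 1.0 - comb(n - c, k) / comb(n, k)

def precision_recall(flagged, actual):
    """Precision and recall of flagged bug locations against the true bug locations."""
    flagged, actual = set(flagged), set(actual)
    true_positives = len(flagged & actual)
    precision = true_positives / len(flagged) if flagged else 0.0
    recall = true_positives / len(actual) if actual else 0.0
    return precision, recall

print(pass_at_k(n=10, c=3, k=1))                      # roughly 0.3
print(precision_recall([3, 7, 12], [7, 12, 20, 31]))  # (0.666..., 0.5)
```

The benchmark-level score is the mean of `pass_at_k` over all problems.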

3. Multilingual Support

For global businesses, having an AI that works well in multiple languages is crucial. Qwen-2.5 Max supports over 100 languages, making it accessible and inclusive for diverse audiences. It excels in multilingual tasks, even in low-resource languages where other models often face challenges.

Here’s how it stands out:

- In translating technical documents from English to Japanese, Qwen-2.5 Max kept the meaning and formatting intact, performing better than DeepSeek-V3.

- When generating marketing content in Spanish, it created culturally relevant copy that felt natural to native speakers.

How Does Qwen-2.5 Max Work?

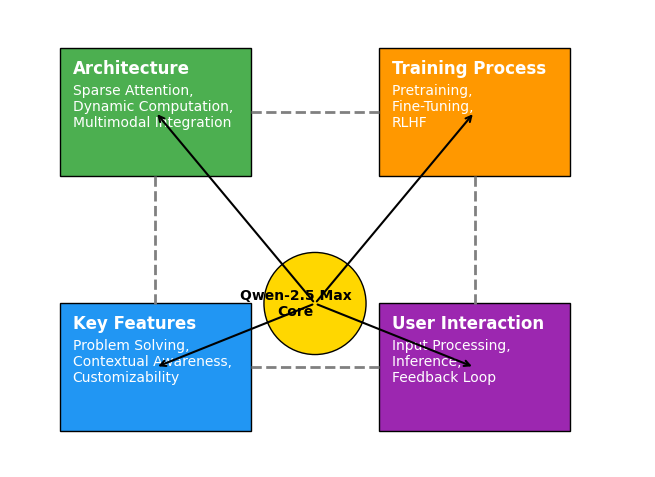

1. Architecture: The Backbone of Qwen-2.5 Max

Qwen-2.5 Max is built on the transformer architecture, the foundation of most powerful language models today. What makes it special is how it improves on this design with several features:

a. Sparse Attention Mechanisms

Traditional transformer models use dense attention, meaning every token in the input attends to every other token. This works well, but its cost grows quadratically with sequence length, so it becomes very expensive for long inputs. To address this, Qwen-2.5 Max uses sparse attention, a technique that computes attention only over the most relevant parts of the input. This reduces the computation needed with little loss of accuracy, allowing Qwen-2.5 Max to handle longer sequences of text more efficiently.
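Alibaba has not published the specific sparse pattern behind this, so the sketch below assumes sliding-window (local) attention, one common sparse scheme in which each position attends only to neighbors within a fixed window. It counts the query-key pairs scored by dense and windowed attention; everything here is illustrative plain Python, not Qwen’s code.

```python
import math

def attention(q, k, v, window=None):
    """Scaled dot-product attention over lists of vectors.

    window=None: dense, every position attends to every position.
    window=w:    sparse (sliding window), position i attends only to j with |i - j| <= w.
    Returns the outputs and the number of query-key pairs scored.
    """
    d = len(q[0])
    outputs, pairs = [], 0
    for i, qi in enumerate(q):
        # Positions this query is allowed to look at
        js = [j for j in range(len(k)) if window is None or abs(i - j) <= window]
        pairs += len(js)
        scores = [sum(a * b for a, b in zip(qi, k[j])) / math.sqrt(d) for j in js]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        outputs.append([sum(w * v[j][c] for w, j in zip(weights, js)) / total
                        for c in range(len(v[0]))])
    return outputs, pairs

# Usage: 8 positions with 2-dimensional vectors
x = [[math.sin(i), math.cos(i)] for i in range(8)]
_, dense_pairs = attention(x, x, x)
_, sparse_pairs = attention(x, x, x, window=1)
print(dense_pairs, sparse_pairs)  # 64 22
```

Dense attention scores n² pairs, while a window of width w scores at most n(2w + 1), which is what makes long inputs affordable.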

b. Dynamic Computation

Qwen-2.5 Max also uses dynamic computation: the amount of computing power it spends adapts to the difficulty of the task. Simple tasks use fewer resources and complex problems use more, so the model stays efficient on small requests while still performing well on large, complicated ones.
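The article does not say how Qwen-2.5 Max decides how much computation a request needs. One published form of adaptive computation is early exit: stop running layers once an intermediate result is confident enough. The sketch below shows that idea with hypothetical toy layers and a hypothetical confidence function; it illustrates the concept, not Qwen’s mechanism.

```python
def run_with_early_exit(x, layers, confidence, threshold=0.9):
    """Apply layers in order, stopping once the intermediate result is confident enough.

    layers: list of callables; confidence: callable returning a score in [0, 1].
    Returns the final state and the number of layers actually run.
    """
    used = 0
    for layer in layers:
        x = layer(x)
        used += 1
        if confidence(x) >= threshold:
            break  # easy input: the remaining layers are skipped
    return x, used

# Usage with toy stand-ins: each "layer" adds 0.25, confidence is the value capped at 1
layers = [lambda s: s + 0.25] * 6
confidence = lambda s: min(s, 1.0)
print(run_with_early_exit(0.7, layers, confidence)[1])  # 1 layer for an "easy" input
print(run_with_early_exit(0.0, layers, confidence)[1])  # 4 layers for a "harder" input
```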

c. Multimodal Integration

Unlike many other models that only process text, Qwen-2.5 Max can also handle images, audio, and video. It has dedicated modules for each of these data types, and their outputs are combined with text in a shared representation space. This means that Qwen-2.5 Max can understand and create content across different forms of media.

For example:

- If you give it an image, Qwen-2.5 Max can describe what’s in the image using natural language.

- If you give it text instructions, it can generate an image from them, and it can also understand and respond to audio input.
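A common way vision-language models place images and text in a shared space is to project each image-patch feature vector into the language model’s embedding dimension and then treat the projected vectors as extra tokens in the input sequence. The sketch below assumes that design with a single linear projection; the dimensions and weights are toy values, and Alibaba has not documented Qwen-2.5 Max’s multimodal internals at this level.

```python
def project(features, weight):
    """Linear projection: weight has one row per output (embedding) dimension."""
    return [sum(f * w for f, w in zip(features, row)) for row in weight]

def build_multimodal_sequence(image_patches, text_embeddings, proj_weight):
    """Map image-patch features into the text embedding space and prepend them to the text."""
    image_tokens = [project(p, proj_weight) for p in image_patches]
    # After projection, image and text vectors share one space and one sequence
    return image_tokens + text_embeddings

# Usage: two 3-dimensional image patches, three 4-dimensional text token embeddings
W = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 1]]  # 4 x 3 projection (toy values)
patches = [[1, 2, 3], [0, 1, 0]]
text = [[0.1, 0.2, 0.3, 0.4]] * 3
seq = build_multimodal_sequence(patches, text, W)
print(len(seq), seq[0])  # 5 [1, 2, 3, 6]
```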

2. Training Process: Building Knowledge and Skills

The training of Qwen-2.5 Max is a multi-stage process, with each phase designed to improve its abilities and help it work well in many different situations.

a. Pretraining on Massive Datasets

Qwen-2.5 Max begins with unsupervised (self-supervised) pretraining, where it learns from a huge amount of data gathered from sources like the internet, books, scientific papers, and other reliable places. During this stage, the model picks up the basic patterns of language, logic, and reasoning. The size of the dataset, which Alibaba reports as over 20 trillion tokens, gives the model a broad understanding of many subjects and areas.

Key points in pretraining include:

- Learning the grammar, syntax, and meaning of different languages.

- Gaining knowledge about the world, including facts in history, science, and culture.

- Developing skills in tasks like translation, summarization, and question-answering.
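In this kind of pretraining the training signal comes from the text itself: the model learns to predict each token from the tokens before it by minimizing the average negative log-likelihood, -(1/(T-1)) Σ log p(x_t | x_<t) over t = 2 … T. The sketch below computes that loss for a token sequence given any next-token predictor; the two predictors are toy stand-ins, not a real model.

```python
import math

def next_token_loss(token_ids, predict):
    """Average negative log-likelihood of each token given all tokens before it.

    predict(prefix) returns a dict mapping token id -> probability of coming next.
    """
    total = 0.0
    for t in range(1, len(token_ids)):
        probs = predict(token_ids[:t])
        total += -math.log(probs[token_ids[t]])
    return total / (len(token_ids) - 1)

# Usage with two toy predictors over a 4-token vocabulary
uniform = lambda prefix: {i: 0.25 for i in range(4)}
counter = lambda prefix: {(prefix[-1] + 1) % 4: 1.0}  # always predicts "previous + 1"
seq = [0, 1, 2, 3]
print(next_token_loss(seq, uniform))  # log(4), about 1.386
print(next_token_loss(seq, counter))  # 0.0: the sequence is perfectly predicted
```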

b. Fine-Tuning for Specialized Tasks

Once pretraining is complete, Qwen-2.5 Max goes through supervised fine-tuning. This step uses specially chosen datasets for particular tasks, helping the model improve in areas like coding, conversational generation, and problem-solving. Fine-tuning also aligns the model’s output with human preferences, ensuring it is coherent, accurate, and appropriate for different contexts.

Examples include:

- To improve coding skills, the model is trained on millions of lines of code in popular programming languages like Python, Java, and C++.

- For conversational AI, it is trained using dialogues from customer service, social media, and technical support.
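A common detail in supervised fine-tuning is that the prompt and the target response are concatenated, but only the response tokens contribute to the loss, so the model learns to produce answers rather than to repeat questions. The sketch assumes the -100 label convention used by PyTorch’s `cross_entropy` (its default `ignore_index`) and Hugging Face Transformers; the token ids and per-token losses are made up.

```python
IGNORE_INDEX = -100  # label value skipped by the loss (PyTorch's default ignore_index)

def build_sft_example(prompt_ids, response_ids):
    """Concatenate prompt and response; mask the prompt so only the response is learned."""
    input_ids = list(prompt_ids) + list(response_ids)
    labels = [IGNORE_INDEX] * len(prompt_ids) + list(response_ids)
    return input_ids, labels

def masked_mean_loss(token_losses, labels):
    """Average the per-token losses over positions whose label is not masked."""
    kept = [loss for loss, y in zip(token_losses, labels) if y != IGNORE_INDEX]
    return sum(kept) / len(kept)

# Usage: 3 prompt tokens (e.g. a customer question) and 2 response tokens (the agent's reply)
input_ids, labels = build_sft_example([11, 12, 13], [21, 22])
print(labels)                                               # [-100, -100, -100, 21, 22]
print(masked_mean_loss([9.0, 9.0, 9.0, 1.0, 3.0], labels))  # 2.0
```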

c. Reinforcement Learning from Human Feedback (RLHF)

The final step in training is reinforcement learning from human feedback (RLHF). In this phase, humans provide ratings and corrections for the model’s responses, helping guide it to produce better answers. RLHF is key in teaching Qwen-2.5 Max to:

- Prioritize ethical considerations.

- Avoid harmful biases.

- Maintain a polite tone during interactions.
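RLHF is commonly run in two stages: a reward model is first trained on pairs of responses where humans marked one as better, and the language model is then optimized against that reward model. The sketch below shows only the first stage’s pairwise (Bradley-Terry) loss; the reward scores are placeholders, and the article does not detail Qwen’s exact RLHF recipe.

```python
import math

def pairwise_reward_loss(r_chosen, r_rejected):
    """Bradley-Terry loss -log(sigmoid(r_chosen - r_rejected)) for one human preference pair."""
    margin = r_chosen - r_rejected
    # Two algebraically equal forms, chosen by sign so math.exp never overflows
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

# Usage: the loss is small when the reward model ranks the human-preferred answer higher
print(pairwise_reward_loss(2.0, -1.0))  # small (about 0.049)
print(pairwise_reward_loss(-1.0, 2.0))  # large (about 3.049)
```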

3. Key Features That Power Qwen-2.5 Max

Several standout features make Qwen-2.5 Max perform at an exceptional level. These features allow it to excel in many areas, from complex problem-solving to maintaining context in long conversations.

a. Advanced Problem Solving

Qwen-2.5 Max is strong at solving problems that require deep reasoning. It has been trained on puzzles, math problems, and scientific challenges, which means it can:

- Solve math problems step-by-step, like differential equations.

- Help with solving real-life problems, like improving supply chains or planning marketing strategies.

b. Contextual Awareness

Many models lose track of details over long conversations or documents, but Qwen-2.5 Max can keep track of information across longer discussions. This means it can:

- Remember what was said earlier in a conversation or document.

- Keep responses consistent and coherent throughout the entire conversation or text.
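In practice a model "remembers" a conversation because the earlier turns are sent back with every request, and only as much history as fits in its context window can be included. Chat APIs, DashScope’s among them, take that history as a list of role/content messages. The sketch below keeps the system message and the newest turns that fit a token budget; `trim_history` is a hypothetical helper, the word-count tokenizer is a crude stand-in, and dropping the oldest turns is just one simple strategy.

```python
def trim_history(messages, max_tokens, count_tokens):
    """Keep the system message(s) plus the most recent turns that fit in max_tokens.

    messages: list of {"role": ..., "content": ...} dicts, oldest first.
    count_tokens: function estimating the token count of a string.
    """
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(count_tokens(m["content"]) for m in system)
    kept = []
    # Walk backwards from the newest turn so recent context is kept first
    for m in reversed(turns):
        cost = count_tokens(m["content"])
        if cost > budget:
            break
        kept.append(m)
        budget -= cost
    return system + list(reversed(kept))

# Usage with a crude word count standing in for a real tokenizer
history = [
    {"role": "system", "content": "You are helpful"},
    {"role": "user", "content": "a b c d"},
    {"role": "assistant", "content": "e f"},
    {"role": "user", "content": "g h i"},
]
words = lambda s: len(s.split())
print([m["content"] for m in trim_history(history, 9, words)])
# ['You are helpful', 'e f', 'g h i']
```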

c. Customizability

Qwen-2.5 Max is flexible and can be customized for different needs. This is useful for businesses or industries that have specific requirements. For example, businesses can:

- Adjust it to follow guidelines important to their industry, like healthcare or finance.

- Fine-tune the model to understand their specific data.
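Fine-tuning on your own data usually starts with converting it into chat-formatted training records. The sketch below writes (question, answer) pairs as JSON Lines in the widely used "messages" layout; `to_jsonl` is a hypothetical helper, whether Alibaba Cloud’s fine-tuning service accepts exactly this schema is an assumption to check against its documentation, and the example data is invented.

```python
import json

def to_jsonl(examples, system_prompt):
    """Serialize (question, answer) pairs as chat-format JSON Lines, one record per line."""
    lines = []
    for question, answer in examples:
        record = {"messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]}
        lines.append(json.dumps(record, ensure_ascii=False))
    return "".join(line + "\n" for line in lines)

# Usage: hypothetical in-house Q&A pairs for a finance assistant
data = to_jsonl(
    [("What is our refund window?", "Refunds are accepted within 30 days of purchase.")],
    system_prompt="You are a support assistant for a regulated financial services firm.",
)
print(data)
```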

4. How Qwen-2.5 Max Interacts with Users

Once you start using Qwen-2.5 Max, it’s designed to work with you in real time. Here’s how it works:

a. Input Processing

When you ask a question or give a task to Qwen-2.5 Max, it first tries to understand your input. Whether you give it text, images, or both, it works out what you’re asking by identifying important parts of the input.

- For text, it splits the input into tokens and models the relationships between them (like who, what, where).

- For images or audio, it has special tools to understand and make sense of that data too.

b. Inference and Generation

Once Qwen-2.5 Max understands what you’ve asked, it processes the information through its transformer layers and generates a response one token at a time. Depending on what you need, it can:

- Write text, like a report or a story.

- Create code if you need help with programming.

- Provide explanations for educational topics.

It does all of this by using its knowledge from earlier training to figure out the best way to answer.
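Generating a response is an autoregressive loop: the model scores every vocabulary token, one token is chosen (greedily or by sampling with a temperature), appended, and fed back in until an end-of-sequence token or a length limit is reached. The sketch below shows that loop with a hypothetical toy scoring function, `toy_logits`, standing in for the transformer.

```python
import math
import random

def sample_next(logits, temperature, rng):
    """Pick the next token id: greedy when temperature is 0, otherwise sample."""
    if temperature == 0:
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    weights = [math.exp(s - m) for s in scaled]  # softmax numerators; choices() normalizes
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]

def generate(prompt_ids, next_logits, max_new_tokens, eos_id, temperature=0.0, seed=0):
    """Autoregressive loop: each chosen token is appended and fed back for the next step."""
    rng = random.Random(seed)
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        token = sample_next(next_logits(ids), temperature, rng)
        ids.append(token)
        if token == eos_id:
            break
    return ids[len(prompt_ids):]

def toy_logits(ids, vocab_size=5):
    """Hypothetical stand-in model: strongly prefers the token after the last one."""
    logits = [0.0] * vocab_size
    logits[(ids[-1] + 1) % vocab_size] = 10.0
    return logits

print(generate([1], toy_logits, max_new_tokens=10, eos_id=4))  # [2, 3, 4]
```

Higher temperatures flatten the distribution and make the output more varied; temperature 0 always picks the top-scoring token.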

c. Feedback Loop

After Qwen-2.5 Max gives you a response, you can give it feedback, like a correction or more details. Within the same conversation, it takes that feedback into account in its next replies. The model’s weights don’t change while you chat; improvements to the model itself come from later training rounds, which may draw on aggregated user feedback.

In short, Qwen-2.5 Max listens to your input, processes it, gives a response, and adjusts to your feedback as the conversation continues.

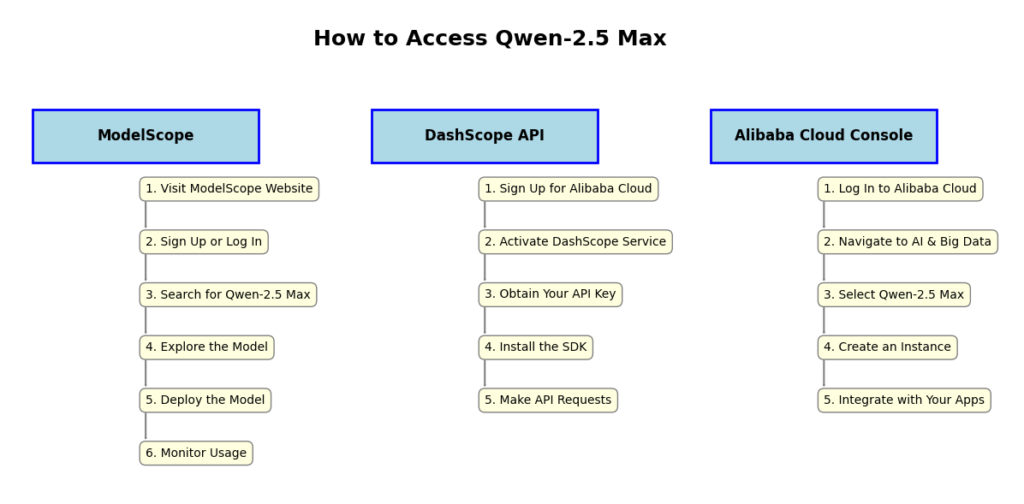

How to Access Qwen-2.5 Max

In this section, I’ll walk you through the steps to access Qwen-2.5 Max, including how to use it via Alibaba Cloud’s ModelScope, the DashScope API, and other methods.

Accessing Qwen-2.5 Max via ModelScope

1. Visit ModelScope Website

- Go to the ModelScope website: Open your browser and go to the ModelScope platform, which is hosted by Alibaba Cloud. This is where you’ll find Qwen-2.5 Max and many other AI models.

2. Sign Up or Log In

- Sign up: If you’re new to ModelScope, you’ll need to create an account. Look for a “Sign Up” button, click on it, and follow the prompts to complete your registration.

- Log in: If you already have an account, simply click “Log In” and enter your credentials to access your account.

3. Search for Qwen-2.5 Max

- Use the search bar: Once logged in, go to the search bar at the top of the page and type “Qwen-2.5 Max.”

- Alternatively, you can browse the “Large Language Models” category to find Qwen-2.5 Max among other models.

4. Explore the Model

- Click on the model: After locating Qwen-2.5 Max, click on it to open the model’s page. Here, you’ll find detailed information like:
  - Features of the model
  - Benchmarks and performance stats
  - Documentation and usage instructions

- Try the model: You can even test it out by entering sample inputs directly on the platform to see how it responds.

5. Deploy the Model

- Deploy to your environment: If you’re happy with the model’s performance, you can deploy it to your own system. ModelScope offers different deployment options, including:
  - Cloud Servers: Run the model in the cloud for easy scaling and access.
  - Local Machines: Download and run open-weight models locally for more control. Qwen-2.5 Max’s own weights have not been publicly released, so this option applies to the open Qwen2.5 models.
  - Custom Applications: Integrate the model into your software or services.

6. Monitor Usage

- Track usage and performance: ModelScope allows you to track your usage of Qwen-2.5 Max, including:
  - Performance metrics: See how the model is performing on your tasks.
  - Cost management: Monitor costs related to running the model in your environment.

By following these steps, you can access and utilize Qwen-2.5 Max directly from ModelScope, taking full advantage of its powerful features.

Accessing Qwen-2.5 Max via DashScope API

1. Sign Up for Alibaba Cloud Account

- Create an account: If you don’t have one already, go to the Alibaba Cloud website and sign up for a free account. This account will give you access to various cloud services, including DashScope.

2. Activate DashScope Service

- Navigate to the DashScope console: Once you’ve logged in to your Alibaba Cloud account, go to the DashScope console from your dashboard.

- Activate DashScope: If you haven’t activated DashScope yet, you will need to do so. This will enable access to APIs for various AI models, including Qwen-2.5 Max.

3. Obtain Your API Key

- Generate an API key: In the DashScope console, look for an option to generate an API key. This key is necessary for authenticating your requests to the API when interacting with Qwen-2.5 Max. It ensures that the service knows you are authorized to make requests.

4. Install the SDK (Optional)

- Install SDK for easier integration: To make working with the DashScope API easier, you can install the DashScope SDK for your preferred programming language. SDKs are available for several languages, including Python and Java. For Python:

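pip install dashscope

5. Make API Requests

- Use the API key to send requests to Qwen-2.5 Max. Below is an example of how to interact with the model using Python:

import os
import dashscope

# Read the API key from an environment variable rather than hard-coding it
dashscope.api_key = os.environ["DASHSCOPE_API_KEY"]

# International-region accounts may also need:
# dashscope.base_http_api_url = 'https://dashscope-intl.aliyuncs.com/api/v1'

# Send a prompt to the model. 'qwen-max-2025-01-25' is the Qwen-2.5 Max snapshot
# named at launch; check the DashScope console for the model IDs available to you.
response = dashscope.Generation.call(
    model='qwen-max-2025-01-25',
    prompt='Explain the concept of quantum mechanics in simple terms.'
)

# Print the model's response, or the error details if the request failed
if response.status_code == 200:
    print(response.output.text)
else:
    print(response.code, response.message)

6. Monitor and Optimize Usage via DashScope Console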

Once you’ve integrated Qwen-2.5 Max into your applications using the DashScope API, it’s important to monitor and optimize your usage to ensure efficient performance and cost management.
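One way to keep an eye on spend from your own code is to accumulate the token counts each API response reports. The DashScope Python SDK exposes these on the response’s `usage` field (`input_tokens` and `output_tokens`; confirm against your SDK version). The `UsageTracker` class below is a hypothetical helper, and its per-million-token prices are placeholders rather than Alibaba Cloud’s actual rates.

```python
class UsageTracker:
    """Accumulate token usage across calls and flag when estimated spend nears a budget."""

    def __init__(self, price_in_per_m, price_out_per_m, budget, alert_ratio=0.8):
        self.price_in = price_in_per_m    # price per 1M input tokens (placeholder)
        self.price_out = price_out_per_m  # price per 1M output tokens (placeholder)
        self.budget = budget
        self.alert_ratio = alert_ratio    # alert once spend reaches this share of the budget
        self.input_tokens = 0
        self.output_tokens = 0

    def record(self, input_tokens, output_tokens):
        self.input_tokens += input_tokens
        self.output_tokens += output_tokens

    @property
    def cost(self):
        return (self.input_tokens * self.price_in
                + self.output_tokens * self.price_out) / 1_000_000

    def near_budget(self):
        return self.cost >= self.alert_ratio * self.budget

tracker = UsageTracker(price_in_per_m=2.0, price_out_per_m=6.0, budget=10.0)
tracker.record(input_tokens=1_500_000, output_tokens=900_000)
print(round(tracker.cost, 2), tracker.near_budget())  # 8.4 True
```

After each real call you would pass `response.usage.input_tokens` and `response.usage.output_tokens` to `record`, then check `near_budget()` to decide whether to raise an alert.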

Accessing Qwen-2.5 Max via Alibaba Cloud Console

If you prefer managing your AI resources directly from the Alibaba Cloud ecosystem, the Alibaba Cloud Console provides a centralized platform for accessing, configuring, and deploying Qwen-2.5 Max.

Here’s how you can do it:

1. Log In to Alibaba Cloud

- Visit the Alibaba Cloud website and log in using your account credentials. If you don’t have an account, you can sign up for one.

2. Navigate to the AI & Big Data Section

- From the Alibaba Cloud Console dashboard, go to the AI & Big Data section.

- Under this section, select Language Models or Large Models to explore the available AI models.

3. Select Qwen-2.5 Max

- Browse through the list of available models and locate Qwen-2.5 Max. Once found, click on it to access detailed information about:
  - Features of Qwen-2.5 Max.
  - Pricing details.
  - Deployment options available for your use case.

4. Create an Instance

- To start using Qwen-2.5 Max, you need to create an instance.

- During the setup, specify your desired configurations, such as:
  - Compute power (CPU, GPU resources, etc.).
  - Storage capacity.

- You can choose whether to deploy the model in the cloud or on-premises, depending on your infrastructure and needs.

5. Integrate with Your Applications

- Once the Qwen-2.5 Max instance is up and running, you can integrate it into your applications:
  - Use REST APIs for easy integration with external systems.
  - Access SDKs for various programming languages to speed up the development process.
  - Or directly connect the model to your applications based on your preferred setup.
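For REST integration, DashScope also offers an OpenAI-compatible chat-completions endpoint. The sketch below only builds the HTTP request with Python’s standard library so you can inspect it; `build_chat_request` is a hypothetical helper, and the international base URL and the `qwen-max-2025-01-25` model ID are assumptions to verify in your console (mainland China accounts use a different host).

```python
import json
import os
import urllib.request

# Assumed endpoint: DashScope's OpenAI-compatible mode, international region
BASE_URL = "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"

def build_chat_request(prompt, model="qwen-max-2025-01-25", api_key=None):
    """Build (but do not send) a chat-completions HTTP request for one user prompt."""
    api_key = api_key or os.environ.get("DASHSCOPE_API_KEY", "")
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        BASE_URL + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )

req = build_chat_request("Summarize our Q3 sales report in three bullet points.")
print(req.get_method(), req.full_url)
```

To send it, pass the request to `urllib.request.urlopen` and read the reply text from `choices[0].message.content` in the JSON response.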

With these steps, you’ll be able to manage Qwen-2.5 Max seamlessly from the Alibaba Cloud Console, ensuring smooth deployment and integration into your workflows.

Accessing Qwen-2.5 Max via Third-Party Platforms

In addition to Alibaba Cloud’s native platforms, Qwen-2.5 Max can also be accessed through various third-party services and integrations, making it even more flexible for different use cases.

Here are a couple of ways to access Qwen-2.5 Max on third-party platforms:

1. Hugging Face

- Hugging Face is a popular platform for AI models, including Qwen models.

- Qwen-2.5 Max itself is not open-weight, so it isn’t available there, but open-weight models from the same family, such as Qwen2.5-72B-Instruct, are.

- You can download and fine-tune these open models (adapt them to your needs) using Hugging Face’s tools.

- This option is good if you want a quick, easy way to test or use a Qwen model for specific tasks.

2. Custom Integrations

- If you’re a developer, you can create your own way to access Qwen-2.5 Max by using Alibaba Cloud’s APIs and SDKs.

- APIs let you connect to the model from your own software or systems, while SDKs (software development kits) provide tools for integrating Qwen-2.5 Max into your code.

- This gives you the flexibility to add the model to any system you already use, making it fit perfectly into your business or project.

In short, you can either use Hugging Face for a simpler approach or build custom solutions using Alibaba Cloud’s APIs if you need more control over how you use Qwen-2.5 Max.

Pricing and Free Tier Options

When it comes to using Qwen-2.5 Max through Alibaba Cloud, you have flexible options that cater to different needs. Here’s a quick overview:

Pricing Structure

- Pay-as-you-go:

You pay only for what you use. This is well suited to smaller projects or to testing out Qwen-2.5 Max. You can control your costs by scaling resources up or down based on your usage.

- Subscription Plans:

For businesses or larger-scale deployments, subscription plans provide more predictability. You get a discount for committing to a certain usage level, which helps you save in the long run.
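Choosing between the two comes down to simple arithmetic on your expected monthly volume. The sketch below compares them under an assumed plan shape (a flat fee covering a token allowance, plus per-token overage); the function names are hypothetical, all prices are made up, and Alibaba Cloud’s actual plans may be structured differently.

```python
def payg_cost(tokens, price_per_m):
    """Pay-as-you-go: pay only for the tokens used."""
    return tokens / 1_000_000 * price_per_m

def subscription_cost(tokens, fee, included_tokens, overage_per_m):
    """Subscription: a fixed fee covers a token allowance; extra usage is billed separately."""
    extra = max(0, tokens - included_tokens)
    return fee + extra / 1_000_000 * overage_per_m

def cheaper_plan(tokens, price_per_m, fee, included_tokens, overage_per_m):
    payg = payg_cost(tokens, price_per_m)
    sub = subscription_cost(tokens, fee, included_tokens, overage_per_m)
    return "subscription" if sub < payg else "pay-as-you-go"

# Made-up prices: $4 per 1M tokens vs. $100/month covering 40M tokens, $3 per 1M beyond
for monthly_tokens in (10_000_000, 50_000_000):
    print(monthly_tokens, cheaper_plan(monthly_tokens, 4.0, 100.0, 40_000_000, 3.0))
# 10000000 pay-as-you-go
# 50000000 subscription
```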

Free Tier

- New Users:

If you’re new to Alibaba Cloud, you may qualify for a free tier. This allows you to try Qwen-2.5 Max without any charges up to a certain limit. It’s an excellent option to experiment, test, or get familiar with the model before committing financially.

For the most up-to-date pricing and details on free tier offerings, it’s best to check the Alibaba Cloud pricing page directly.

Qwen Chat

To experience Qwen-2.5 Max quickly and easily, you can use the Qwen Chat platform, a user-friendly, web-based interface that allows you to interact with the model directly through your browser. Here’s how to get started:

- Navigate to the Qwen Chat Interface:
  - Open your web browser and go to the Qwen Chat platform.

- Select Qwen-2.5 Max:
  - Once you’re on the platform, locate the model dropdown menu.
  - From the list, select Qwen-2.5 Max to start interacting with the model.

- Start Engaging:
  - You can now use the platform for various tasks such as natural language understanding, reasoning, and multimodal interactions.
  - Ask questions, explore tasks, or test different queries to experience the model’s capabilities.

This platform provides an intuitive way to engage with Qwen-2.5 Max directly, without complex setup steps, which makes it ideal for quick testing and exploration.

Best Practices for Using Qwen-2.5 Max

To maximize your experience with Qwen-2.5 Max, here are some best practices that will help you get the best results and ensure efficiency:

1. Fine-Tune for Specific Tasks

- While Qwen-2.5 Max is pre-trained on a wide variety of data, fine-tuning it on domain-specific datasets can dramatically improve its performance for specialized tasks.

- How to do it: If you’re working in a specific industry like healthcare, finance, or education, consider fine-tuning Qwen-2.5 Max with your own data to enhance accuracy and relevance for your application.

2. Optimize Input Prompts

- Why it matters: Clear, well-structured prompts help the model understand your query better, which leads to more accurate and relevant responses.

- Be specific in your instructions, provide context where necessary, and avoid overly vague queries. For example, instead of asking “Tell me about history,” ask “Give me an overview of the history of the Roman Empire.”
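The advice to be specific and give context can be made routine with a small prompt template. The helper below, `build_prompt`, is a hypothetical sketch that assembles task, context, constraints, and desired output format into one clearly structured prompt, omitting any part you leave empty.

```python
def build_prompt(task, context="", constraints=(), output_format=""):
    """Assemble a specific, structured prompt from its parts; empty parts are omitted."""
    parts = [f"Task: {task}"]
    if context:
        parts.append(f"Context: {context}")
    if constraints:
        parts.append("Constraints:\n" + "\n".join(f"- {c}" for c in constraints))
    if output_format:
        parts.append(f"Output format: {output_format}")
    return "\n\n".join(parts)

prompt = build_prompt(
    "Give me an overview of the history of the Roman Empire.",
    context="The reader is a high-school student.",
    constraints=["Keep it under 200 words", "Cover the Republic-to-Empire transition"],
    output_format="Three short paragraphs",
)
print(prompt)
```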

3. Monitor Costs

- Why it matters: If you’re working on larger projects or high-usage tasks, it’s important to keep an eye on your usage to avoid unexpected charges.

- How to do it: Use Alibaba Cloud’s tools to track your usage, set up alerts for when you’re nearing usage limits, and regularly check billing details to stay on top of your costs.

4. Stay Updated

- As Qwen-2.5 Max evolves and improves, new features, updates, and optimizations can enhance performance or open up new possibilities.

- Regularly check for model updates, attend webinars, or follow Alibaba Cloud’s announcements to keep up-to-date with the latest advancements.

Conclusion: The Rise of Qwen-2.5 Max

Qwen-2.5 Max by Alibaba is an advanced AI model that stands out due to its enhanced reasoning, contextual awareness, and customizability. It can handle complex tasks such as solving mathematical problems, generating content, and providing detailed explanations across industries.

You can access it via platforms like ModelScope, DashScope API, and the Alibaba Cloud Console. These options allow easy integration for developers and businesses, whether through a web interface, API, or cloud deployment.

To make the most of Qwen-2.5 Max, it’s recommended to fine-tune the model for specific applications, craft precise input prompts, and monitor usage to optimize costs. Staying updated with its new features also ensures you’re using its full potential.
