
Artificial Intelligence in Robotics: How Machines Learn, Adapt, and Act in the Real World

The convergence of artificial intelligence and robotics marks a turning point in human history. Machines no longer simply follow instructions. They perceive their surroundings, make decisions, and learn from experience. This transformation affects manufacturing floors, hospital operating rooms, agricultural fields, and even outer space.

Traditional automation systems execute predetermined sequences. AI-driven robots operate differently. They adapt to changing conditions, handle uncertainty in real-world environments, and improve performance over time without explicit reprogramming.

This article explains how artificial intelligence transforms robots into intelligent agents. You will see visual demonstrations, understand the technical stack, and explore real-world applications backed by current research and deployment data.

Understanding Artificial Intelligence in Robotics

What Is AI in Robotics?

Artificial intelligence provides robots with three critical capabilities:

- Perception – understanding the environment through sensors

- Reasoning – making intelligent decisions based on observations

- Learning – improving performance through experience

Robotics provides physical embodiment. The combination creates machines that interact with the physical world intelligently.

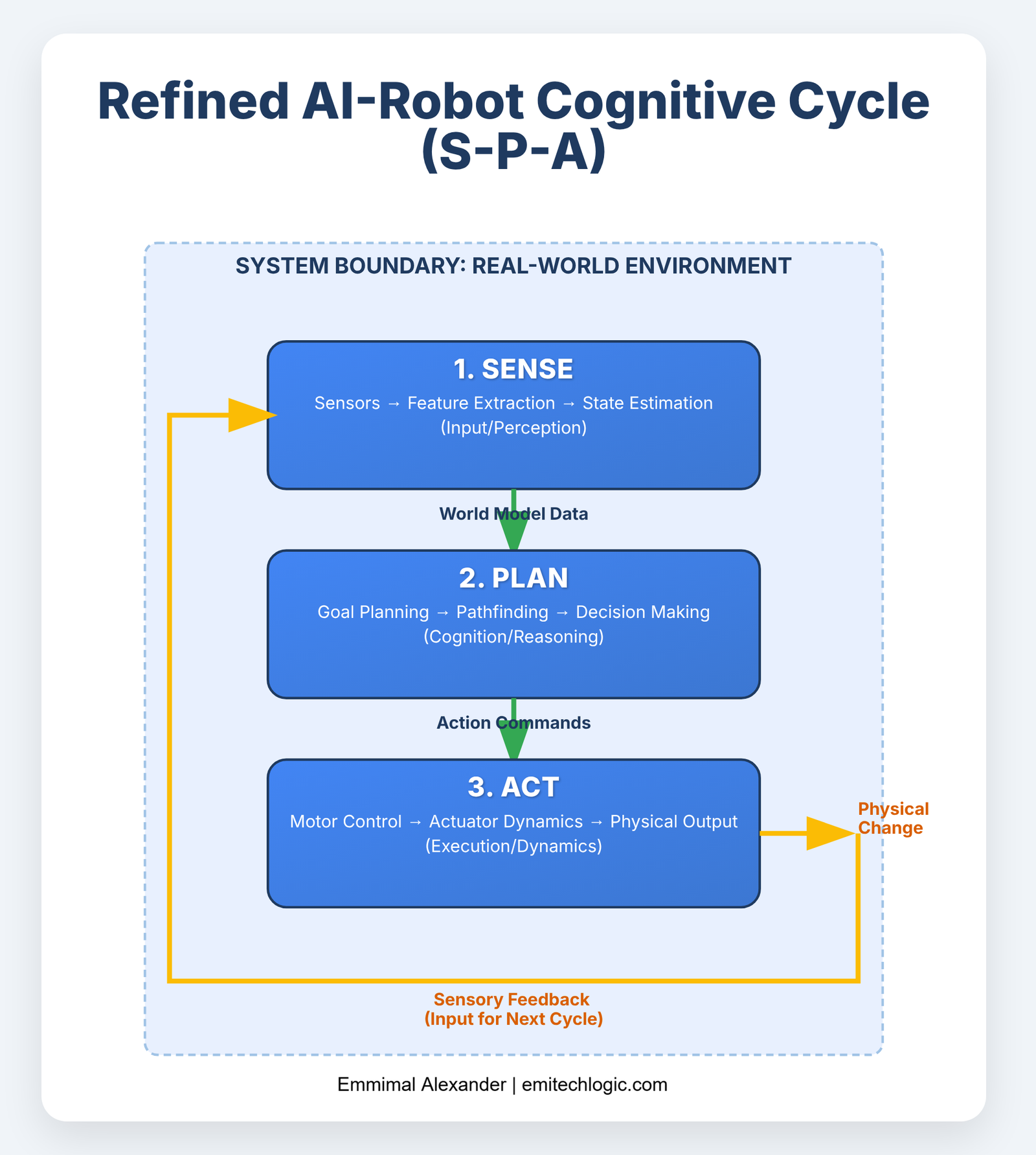

A traditional robot follows a fixed program. An AI-driven robot observes its environment through sensors, processes this information, makes decisions based on observations, and adjusts actions when conditions change.

Example: Consider a welding robot on an assembly line. Traditional systems weld at predetermined coordinates. AI-enabled systems use computer vision to identify weld points on parts that may vary slightly in position. They adjust their trajectory in real time and verify weld quality automatically.

Core Components of an AI-Driven Robot

Every AI-powered robot integrates five essential subsystems. Each subsystem handles a specific aspect of intelligent behavior.

Perception systems gather information from the environment. Cameras capture visual data. LiDAR sensors measure distances. Microphones record audio signals. Touch sensors detect physical contact. Force sensors measure applied pressure. These inputs feed into the AI system for processing.

Decision-making modules analyze sensor data and determine appropriate actions. Path planning algorithms find routes through obstacles. Task scheduling systems prioritize multiple objectives. Resource allocation routines manage energy and computation effectively.

Learning mechanisms improve performance over time through three key approaches:

- Machine learning models identify patterns in sensor data

- Reinforcement learning optimizes behaviors through trial and error

- Transfer learning applies knowledge from one task to similar tasks

Motion control systems translate decisions into physical movements. Inverse kinematics calculate joint angles for desired positions. Trajectory generators create smooth motion paths. Feedback controllers maintain accuracy despite disturbances.
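
To make the inverse kinematics step concrete, here is a minimal sketch for a planar arm with two links. The function names and the link lengths are illustrative assumptions, not part of any specific robot's software. The sketch returns one of the two possible solutions (the one with a non-negative elbow angle) and uses forward kinematics to check the result.

```python
import math

def two_link_ik(x, y, l1, l2):
    """Joint angles (shoulder, elbow) in radians that place the tip of a
    planar two-link arm at (x, y). Hypothetical helper for illustration."""
    d2 = x * x + y * y
    # Law of cosines gives the elbow angle from the target distance.
    cos_elbow = (d2 - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if abs(cos_elbow) > 1.0:
        raise ValueError("target out of reach")
    elbow = math.acos(cos_elbow)
    # Shoulder angle: direction to the target minus the offset caused by link 2.
    shoulder = math.atan2(y, x) - math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow)
    )
    return shoulder, elbow

def forward_kinematics(shoulder, elbow, l1, l2):
    """Tip position reached by the given joint angles."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y

# Example: two 1 m links reaching for the point (1, 1).
angles = two_link_ik(1.0, 1.0, 1.0, 1.0)
tip = forward_kinematics(*angles, 1.0, 1.0)
```

Real manipulators have more joints and usually rely on numerical solvers, but the idea is the same: choose joint angles so that forward kinematics lands on the desired position.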

Human-robot interaction interfaces enable communication between people and machines. Natural language processing interprets voice commands. Gesture recognition understands hand signals. Augmented reality displays visualize robot intentions and planned actions.

Key AI Techniques Powering Modern Robotics

Machine Learning for Robotics

Machine learning algorithms enable robots to recognize patterns and make predictions without explicit programming for every scenario.

Supervised learning trains robots to classify objects and predict outcomes. A robot in a sorting facility learns to identify different package types by training on labeled images. The system receives thousands of examples showing packages and their correct categories. It learns features that distinguish one category from another. After training, it classifies new packages with high accuracy.

Unsupervised learning discovers hidden patterns in data without labeled examples. Robots use this for environment mapping. As a mobile robot explores a warehouse, it clusters similar locations based on sensor readings. It identifies distinct zones like loading docks, storage areas, and corridors without human annotation.
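
The clustering idea can be sketched with a small k-means implementation. This is only an illustration: the two features per reading (for example, free space ahead and free space to the side, in meters) and the sample values are hypothetical, and the initial centroids are simply the first `k` points so the result is reproducible.

```python
def kmeans(points, k, iterations=20):
    """Group points into k clusters. Returns (labels, centroids)."""
    centroids = [list(p) for p in points[:k]]  # deterministic start
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        for i, p in enumerate(points):
            labels[i] = min(
                range(k),
                key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centroids[c])),
            )
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels, centroids

# Hypothetical readings: narrow corridor spots vs. open storage spots.
readings = [(0.5, 0.4), (5.0, 5.2), (0.6, 0.5), (5.1, 4.9), (0.4, 0.6), (4.8, 5.0)]
labels, centroids = kmeans(readings, k=2)
```

No reading carries a label, yet the narrow and open locations end up in separate groups. A human can name the zones afterward if needed.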

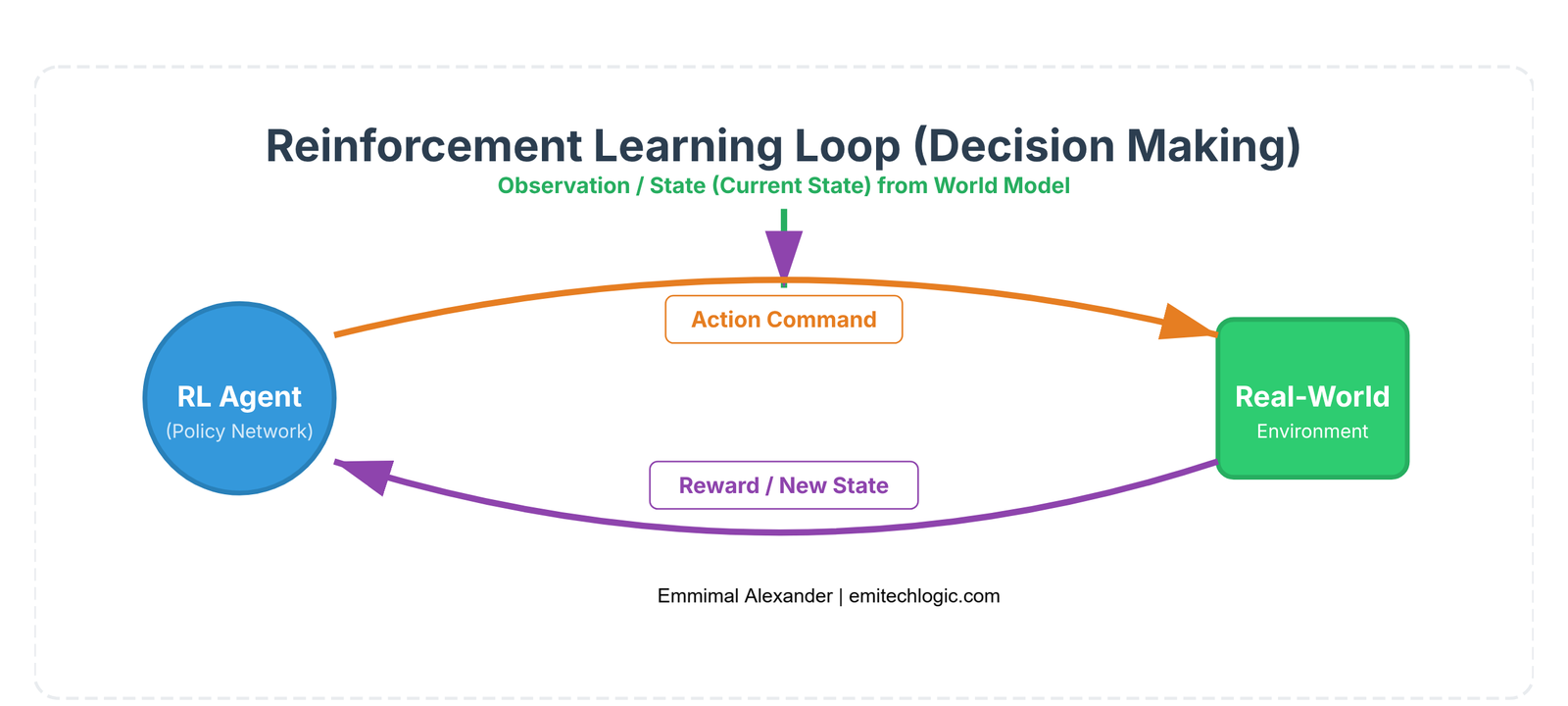

Reinforcement learning optimizes robot behavior through reward signals. Key characteristics include:

- Robots learn through trial and error over thousands of attempts

- Successful actions receive positive rewards while failures receive negative rewards

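- The system discovers strategies that maximize cumulative reward

- Agile legged robots are a common target. Boston Dynamics' Atlas parkour routines relied mainly on model-based control and trajectory optimization, and the company has more recently reported applying reinforcement learning to Atlas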
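
The trial-and-error loop described above can be sketched with tabular Q-learning on a toy problem. Everything here is an assumption chosen for illustration: a corridor of five cells, a goal in the last cell, a reward of +1 at the goal, and a small penalty per step. Real robots use far larger state spaces and neural network policies.

```python
import random

def train_q_table(n_states=5, episodes=500, alpha=0.5, gamma=0.9,
                  epsilon=0.2, seed=0):
    """Learn action values for a 1-D corridor; the goal is the last cell."""
    rng = random.Random(seed)
    moves = (-1, +1)                      # action 0 = left, action 1 = right
    q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        for _ in range(100):              # cap the episode length
            # Epsilon-greedy: mostly exploit, sometimes explore.
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = min(max(state + moves[action], 0), goal)
            reward = 1.0 if next_state == goal else -0.01
            best_next = 0.0 if next_state == goal else max(q[next_state])
            # Q-learning update: nudge the estimate toward the observed target.
            q[state][action] += alpha * (reward + gamma * best_next - q[state][action])
            state = next_state
            if state == goal:
                break
    return q

def greedy_policy(q):
    """Best action per non-goal state according to the learned values."""
    return ["right" if right > left else "left" for left, right in q[:-1]]

q_table = train_q_table()
policy = greedy_policy(q_table)
```

After training, the greedy policy heads toward the goal from every cell, even though the code never states that rule explicitly.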

Deep Learning in Robotics

Deep neural networks process complex sensory data and extract meaningful representations for robot decision-making.

Convolutional Neural Networks (CNNs) excel at visual understanding. Robots use CNNs to recognize objects, detect obstacles, and understand scenes. A CNN processes camera images through multiple layers. Early layers detect edges and textures. Deeper layers recognize object parts and relationships. Autonomous vehicles use CNNs to identify pedestrians, vehicles, traffic signs, and lane markings from camera feeds. Tesla Autopilot processes inputs from eight cameras using CNN architectures to build a complete understanding of the vehicle surroundings.

Recurrent Neural Networks (RNNs) and LSTMs handle temporal sequences. Robots use these architectures to predict future states and understand motion patterns. An RNN tracking a moving object predicts where it will be in the next moment. This prediction enables the robot to intercept or avoid the object effectively.

Transformer models process multiple sensor streams simultaneously. A robot navigating indoors combines camera images, LiDAR scans, and IMU readings. Transformer attention mechanisms determine which sensor inputs matter most for each decision. This multi-modal fusion provides more robust perception than any single sensor alone.

Computer Vision and Sensor Fusion

Robots perceive the world through multiple sensors. Computer vision algorithms extract meaning from this sensory data.

Modern robots integrate several sensor types. RGB cameras capture color images and textures. Depth cameras measure distances to surfaces. LiDAR sensors create precise 3D point clouds. Radar detects objects through fog and darkness. Infrared sensors work in low light conditions. Each sensor type provides complementary information about the environment.

Feature extraction algorithms identify important elements in sensor data:

- Edge detection finds boundaries between objects

- Corner detection identifies intersection points for tracking

- SIFT and ORB algorithms extract distinctive keypoints that remain recognizable despite viewpoint changes
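
Edge detection is the simplest of these to show in code. The sketch below applies the Sobel operator to a tiny synthetic grayscale image using only the standard library. The function name and the test image are illustrative assumptions. Production systems would use an optimized library instead.

```python
import math

def sobel_magnitude(image):
    """Sobel gradient magnitude of a grayscale image (list of rows).

    Border pixels stay at 0.0 because the 3x3 kernel does not fit there.
    """
    kernel_x = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))   # responds to vertical edges
    kernel_y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))   # responds to horizontal edges
    height, width = len(image), len(image[0])
    output = [[0.0] * width for _ in range(height)]
    for r in range(1, height - 1):
        for c in range(1, width - 1):
            gx = gy = 0
            for i in range(3):
                for j in range(3):
                    pixel = image[r + i - 1][c + j - 1]
                    gx += kernel_x[i][j] * pixel
                    gy += kernel_y[i][j] * pixel
            output[r][c] = math.hypot(gx, gy)
    return output

# Synthetic 5x6 image: dark left half, bright right half.
image = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
edges = sobel_magnitude(image)
```

The response is large only in the two columns next to the dark-to-bright boundary and zero in the uniform regions, which is exactly the boundary information later stages build on.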

Real-time 3D mapping builds spatial representations as robots move. SLAM (simultaneous localization and mapping) algorithms localize the robot and map its environment at the same time. As a robot moves through an unknown space, it matches features between successive sensor readings. It estimates its motion and updates the map. This cycle repeats continuously, often 30 to 60 times per second, enabling navigation in unknown spaces.

| Sensor Type | Range | Accuracy | Primary Use Case |
|---|---|---|---|
| RGB Camera | 1-100 m | Color, texture | Object recognition, scene understanding |
| Depth Camera | 0.5-10 m | 1-5 cm | Close-range navigation, manipulation |
| LiDAR | 1-200 m | 1-3 cm | Precise mapping, obstacle detection |
| Radar | 10-250 m | 10-50 cm | All-weather detection, velocity measurement |
| Ultrasonic | 0.1-5 m | 2-10 cm | Proximity detection, parking assistance |

Natural Language Processing for Robots

Natural language interfaces allow people to communicate with robots using everyday speech. This capability makes robots accessible to users without technical training.

Voice command systems convert speech to actionable instructions. A user tells a service robot “bring me a bottle of water from the kitchen.” The NLP system identifies the action (bring), the object (bottle of water), and the location (kitchen). It maps these elements to executable robot commands.
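
The mapping from sentence to action, object, and location can be sketched with a rule-based parser. This is a deliberately simple stand-in: the verb list, the pattern, and the output dictionary format are assumptions made for this example, and real systems typically use learned language models rather than a single regular expression.

```python
import re

# Hypothetical grammar: <verb> [me] <object> from/to [the] <location>
COMMAND_PATTERN = re.compile(
    r"^(?P<action>bring|fetch|take)\s+(?:me\s+)?(?P<object>.+?)\s+"
    r"(?:from|to)\s+(?:the\s+)?(?P<location>.+)$",
    re.IGNORECASE,
)

def parse_command(utterance):
    """Split a transcribed command into action, object, and location.

    Returns None when the sentence does not fit the simple pattern.
    """
    match = COMMAND_PATTERN.match(utterance.strip().rstrip("."))
    if match is None:
        return None
    # Drop a leading article so "a bottle of water" becomes "bottle of water".
    obj = re.sub(r"^(a|an|the)\s+", "", match.group("object"), flags=re.IGNORECASE)
    return {
        "action": match.group("action").lower(),
        "object": obj,
        "location": match.group("location").lower(),
    }

command = parse_command("Bring me a bottle of water from the kitchen.")
# command == {"action": "bring", "object": "bottle of water", "location": "kitchen"}
```

The structured result is what a task planner would consume. Sentences outside the pattern return `None`, which is where a more capable language model would take over.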

Instruction following requires understanding complex language. Instructions may contain multiple steps. They may reference objects by description rather than exact names. Advanced NLP models handle this complexity. GPT-based systems can parse elaborate instructions and break them into sequential robot actions.

Context-aware dialog systems maintain conversation history. A user might say “bring the red one instead.” The system recalls the previous exchange to understand what “the red one” refers to. This contextual understanding creates natural, flowing interactions between humans and robots.

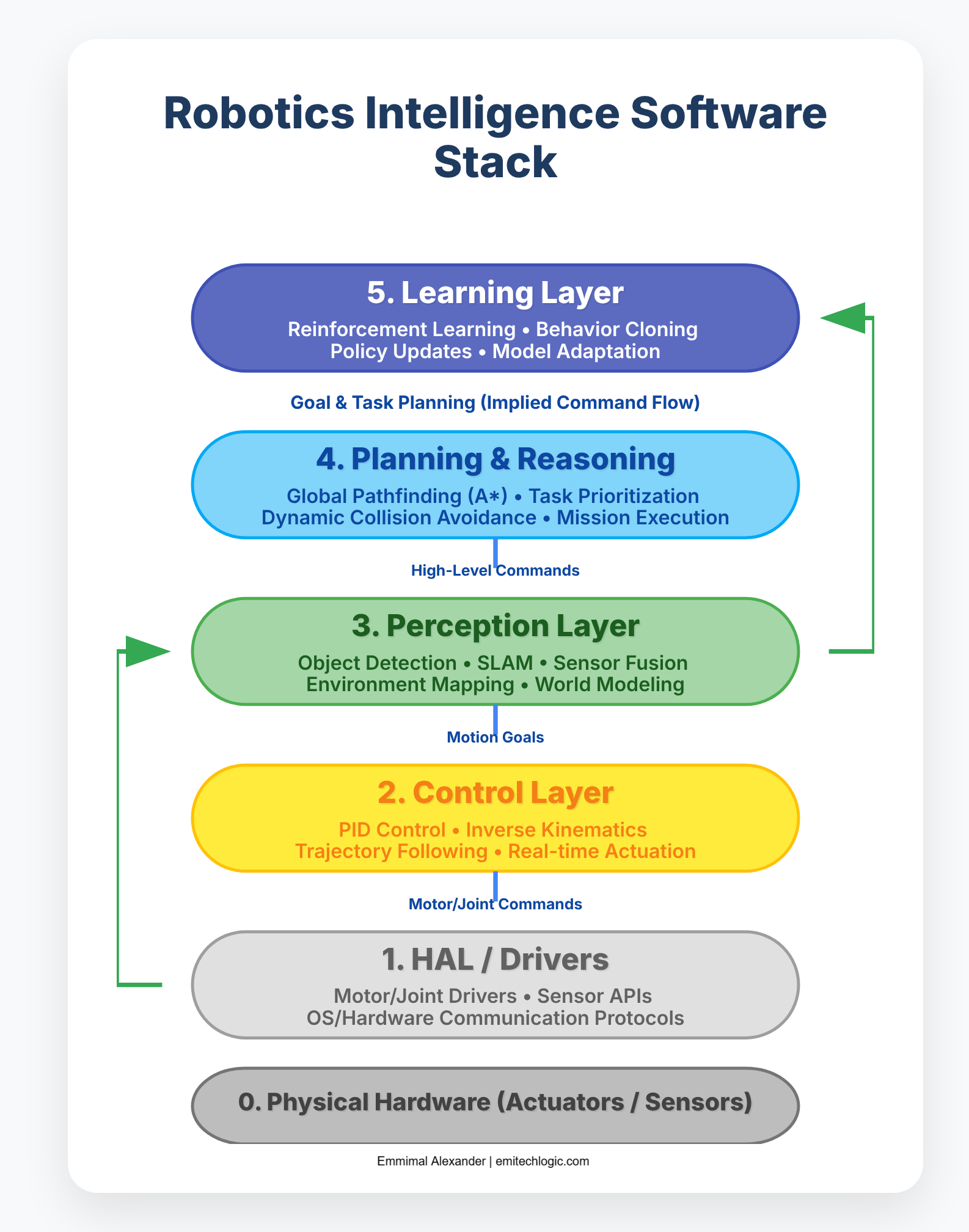

The Robotics Stack: How AI Works Inside a Robot

Perception Layer

The perception layer transforms raw sensor data into structured information about the environment. This layer runs continuously, processing inputs at rates from 10 to 60 Hz depending on the sensor and application.

Scene understanding algorithms segment the environment into meaningful regions. A robot distinguishes floors from walls, identifies doorways, and recognizes furniture. Semantic segmentation assigns a category to every pixel in a camera image. Instance segmentation further distinguishes individual objects within each category.

Object detection locates and classifies items of interest. YOLO and similar architectures detect objects in real time from video streams. These systems output bounding boxes around detected objects along with confidence scores and category labels. Modern systems process 30 or more frames per second.

Depth estimation calculates distances to surfaces:

- Stereo vision compares images from two cameras to identify corresponding points

- Disparity between matching points reveals depth information

- Monocular estimation uses deep learning to predict depth from single images
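
For a calibrated, rectified stereo pair, the link between disparity and depth is the standard pinhole relation: depth equals focal length times baseline divided by disparity. The sketch below shows it with hypothetical camera values (a 700 pixel focal length and a 10 cm baseline).

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in meters for one matched point in a rectified stereo pair.

    disparity_px: horizontal shift of the point between the two images
    focal_length_px: camera focal length expressed in pixels
    baseline_m: distance between the two camera centers in meters
    """
    if disparity_px <= 0:
        # Zero disparity means the point is effectively at infinity.
        return float("inf")
    return focal_length_px * baseline_m / disparity_px

# Hypothetical camera: f = 700 px, baseline = 0.10 m.
near = depth_from_disparity(70, 700, 0.10)   # large shift, close object
far = depth_from_disparity(7, 700, 0.10)     # small shift, distant object
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters little for nearby objects and a great deal for distant ones. This is one reason stereo accuracy degrades with range.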

Planning and Reasoning Layer

The planning layer determines what actions the robot should take to achieve its goals. This involves computing paths, avoiding obstacles, and selecting behaviors appropriate for the current situation.

Path planning algorithms find routes from the current position to the goal position. A-star (A*) search explores the space systematically, always expanding the node with the lowest sum of cost so far and estimated remaining cost to the goal. RRT algorithms grow trees of random paths until they connect start and goal. Sampling-based planners such as RRT work well in high-dimensional spaces like robot arm configurations.
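
Here is a minimal A* sketch on a small occupancy grid. The grid encoding (`#` for obstacles), the four-connected moves, unit step costs, and the Manhattan-distance heuristic are assumptions chosen to keep the example short.

```python
import heapq

def astar(grid, start, goal):
    """Shortest 4-connected path on a grid of strings; '#' marks obstacles.

    Returns a list of (row, col) cells from start to goal, or None.
    """
    def heuristic(cell):
        # Manhattan distance never overestimates on a 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]   # entries are (f, g, cell)
    came_from = {}
    g_cost = {start: 0}
    while open_heap:
        _, g, current = heapq.heappop(open_heap)
        if current == goal:
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if g > g_cost[current]:
            continue                             # stale queue entry
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < len(grid) and 0 <= nc < len(grid[0])
            if inside and grid[nr][nc] != "#":
                new_g = g + 1
                if new_g < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = new_g
                    came_from[(nr, nc)] = current
                    heapq.heappush(
                        open_heap, (new_g + heuristic((nr, nc)), new_g, (nr, nc))
                    )
    return None

warehouse = [
    "....",
    ".##.",
    "....",
]
route = astar(warehouse, (0, 0), (2, 3))
```

The priority `f = g + h` combines the cost already paid with the estimated cost remaining. With an admissible heuristic such as the one used here, the first time the goal is popped from the queue the path is optimal.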

Collision avoidance predicts and prevents impacts:

- Dynamic window approaches evaluate possible velocities and select paths that avoid collisions

- Model predictive control optimizes trajectories over short time horizons

- Both methods account for robot dynamics and environmental obstacles

Behavior trees organize complex behaviors into hierarchical structures. Nodes represent conditions to check or actions to execute. The tree structure determines behavior selection and sequencing. This architecture makes robot behaviors interpretable and modifiable by engineers.
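
A behavior tree can be sketched in a few small classes. The node types below (condition, action, sequence, selector) follow common behavior tree conventions, but the class names, the `state` dictionary, and the docking example are hypothetical. Production libraries also support a "running" status, which is omitted here for brevity.

```python
SUCCESS, FAILURE = "success", "failure"

class Condition:
    """Leaf node that checks something about the robot state."""
    def __init__(self, predicate):
        self.predicate = predicate
    def tick(self, state):
        return SUCCESS if self.predicate(state) else FAILURE

class Action:
    """Leaf node that performs (here: just logs) an action."""
    def __init__(self, name):
        self.name = name
    def tick(self, state):
        state["log"].append(self.name)
        return SUCCESS

class Sequence:
    """Runs children in order; fails as soon as one child fails."""
    def __init__(self, *children):
        self.children = children
    def tick(self, state):
        for child in self.children:
            if child.tick(state) == FAILURE:
                return FAILURE
        return SUCCESS

class Selector:
    """Tries children in order; succeeds as soon as one child succeeds."""
    def __init__(self, *children):
        self.children = children
    def tick(self, state):
        for child in self.children:
            if child.tick(state) == SUCCESS:
                return SUCCESS
        return FAILURE

# Recharge when the battery is low, otherwise keep patrolling.
tree = Selector(
    Sequence(Condition(lambda s: s["battery"] < 0.2), Action("dock")),
    Action("patrol"),
)

low = {"battery": 0.1, "log": []}
tree.tick(low)       # low["log"] is now ["dock"]
full = {"battery": 0.9, "log": []}
tree.tick(full)      # full["log"] is now ["patrol"]
```

Reading the tree top to bottom gives the priority order of behaviors, which is why engineers find this structure easy to inspect and modify.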

Control Layer

The control layer executes planned motions accurately despite uncertainties and disturbances. Controllers run at high frequencies (100-1000 Hz) to maintain stability.

Motion control regulates joint positions and velocities. PID controllers calculate corrective actions based on position error, rate of error change, and accumulated error. More advanced controllers account for robot dynamics, gravity, and inertia to achieve precise positioning during operation.
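
The three PID terms map directly onto code. The sketch below is a textbook discrete PID controller driving a simplified joint that is modeled as a pure integrator (the command is treated as a velocity). The gains, the time step, and the plant model are illustrative assumptions, not tuned values for any real actuator.

```python
class PID:
    """Discrete PID controller: proportional, integral, derivative terms."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement, dt):
        error = setpoint - measurement
        self.integral += error * dt                     # accumulated error
        if self.prev_error is None:
            derivative = 0.0                            # no history on first call
        else:
            derivative = (error - self.prev_error) / dt # rate of error change
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative

def simulate_joint(target=1.0, steps=3000, dt=0.01):
    """Drive a velocity-commanded joint toward `target` (radians)."""
    controller = PID(kp=2.0, ki=0.5, kd=0.05)
    position = 0.0
    for _ in range(steps):
        command = controller.update(target, position, dt)
        position += command * dt                        # simplified plant model
    return position

final_position = simulate_joint()
```

With these gains the simulated joint settles very close to the 1.0 rad target within the 30 simulated seconds. On real hardware, gains must be tuned against the actual dynamics, and practical controllers add safeguards such as output limits and integral anti-windup.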

Trajectory tracking follows desired paths precisely. Controllers receive position, velocity, and acceleration setpoints along trajectories. They compute torques or forces to track these setpoints despite model uncertainties and external forces.

Stable manipulation requires force control in addition to position control. Impedance control regulates the relationship between position error and contact force. This allows robots to maintain gentle contact when handling delicate objects or collaborating with humans safely.

Learning Layer

The learning layer improves robot performance through experience. Adaptation occurs at different timescales, from real-time adjustments to overnight retraining.

On-device learning updates models during operation. A grasping robot adjusts its grasp classifier after each attempt. Online learning algorithms modify model parameters based on immediate feedback. This enables rapid adaptation to new objects or changing conditions with limited computational resources.

Cloud-assisted training uses data collected by robots to retrain models on powerful servers:

- Fleet learning aggregates experiences from multiple robots

- Models train on millions of examples from diverse environments

- Performance improves beyond what individual robots could achieve alone

Continual learning adds new capabilities without forgetting previous ones. Standard neural networks suffer catastrophic forgetting when trained on new tasks. Techniques like elastic weight consolidation and memory replay preserve important parameters while learning new skills, enabling lifelong learning in robots.

Types of Robots That Use AI Today

Industrial Robots

Industrial robots perform manufacturing tasks with precision and consistency. AI enhances these systems with adaptive capabilities that traditional automation lacks.

Welding robots use computer vision to locate weld seams on parts that vary in position. They adjust welding parameters based on material properties detected visually. Quality inspection systems examine welds immediately, identifying defects that require correction. Assembly robots adapt to part variations without requiring precise fixturing.

Predictive maintenance systems monitor robot health through multiple channels:

- Sensors track motor currents, vibrations, and temperatures

- Machine learning models detect anomalies indicating impending failures

- Factories schedule maintenance during planned breaks rather than unexpected shutdowns

- Industry reports cite downtime reductions of up to 70%
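
The anomaly detection step can be illustrated with a very simple statistical baseline. Real predictive maintenance systems use richer models, so treat this as a sketch: the z-score rule, the threshold of three standard deviations, and the motor current values are all assumptions made for the example.

```python
from statistics import mean, stdev

def find_anomalies(baseline, readings, threshold=3.0):
    """Indices of readings that deviate strongly from healthy behavior.

    baseline: sensor values recorded while the robot was known to be healthy
    readings: new values to check
    threshold: allowed deviation in standard deviations
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A constant baseline: any different value counts as an anomaly.
        return [i for i, value in enumerate(readings) if value != mu]
    return [
        i for i, value in enumerate(readings)
        if abs(value - mu) > threshold * sigma
    ]

# Hypothetical motor currents in amperes.
healthy = [2.0, 2.1, 1.9, 2.0, 2.2, 1.8, 2.1, 1.9]
latest = [2.0, 2.1, 3.5, 1.9]
flagged = find_anomalies(healthy, latest)   # [2]
```

Only the 3.5 A spike is flagged. A maintenance system would log such events and schedule an inspection once they start to recur.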

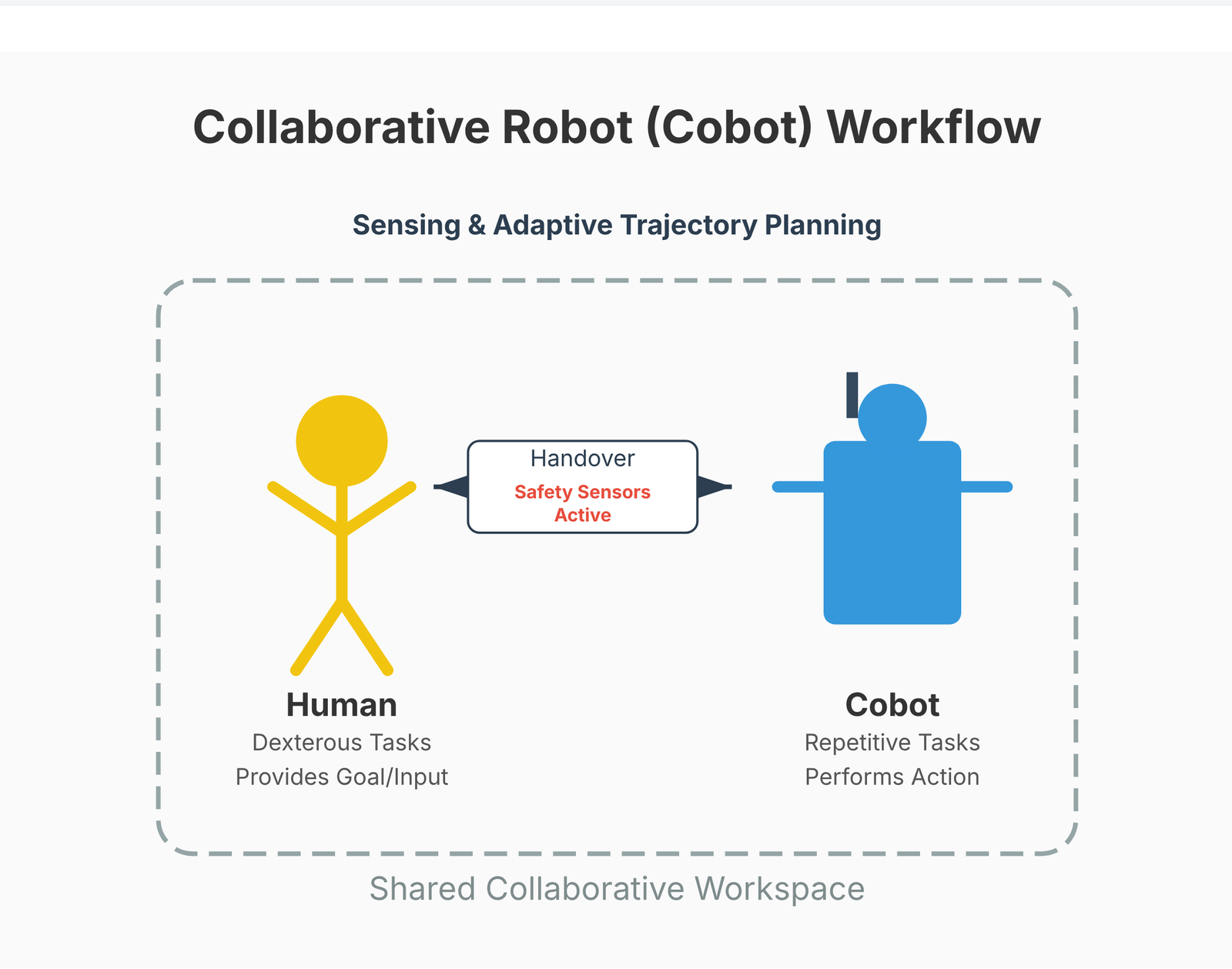

Collaborative Robots (Cobots)

Collaborative robots (cobots) work alongside humans without safety cages. This requires sophisticated environment awareness and safe motion control.

Real-time environment awareness uses sensors to detect human presence. Depth cameras track worker positions. Force sensors detect unexpected contacts. When humans enter the workspace, robots slow down or stop. This prevents injuries while maintaining productivity.
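
The slow-down-or-stop behavior can be sketched as a speed limit that depends on the distance to the nearest detected person. The function name and the distance thresholds below are hypothetical and are not taken from any safety standard. Certified systems derive these values from a formal risk assessment.

```python
def speed_limit(distance_m, stop_distance_m=0.5, slow_distance_m=2.0,
                max_speed=1.0):
    """Allowed robot speed (as a fraction of full speed) given the distance
    to the nearest person. All thresholds are illustrative assumptions."""
    if distance_m <= stop_distance_m:
        return 0.0                       # person too close: stop
    if distance_m >= slow_distance_m:
        return max_speed                 # workspace clear: full speed
    # In between, scale the speed linearly with the remaining margin.
    fraction = (distance_m - stop_distance_m) / (slow_distance_m - stop_distance_m)
    return max_speed * fraction

limits = [speed_limit(d) for d in (3.0, 1.25, 0.3)]   # [1.0, 0.5, 0.0]
```

Scaling speed gradually rather than stopping abruptly keeps the robot productive while a worker is nearby but not yet within reach.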

Human-robot teamwork divides tasks between human and machine. Humans handle dexterous tasks requiring judgment. Robots handle repetitive or physically demanding tasks. BMW uses cobots to install door seals. Workers guide the cobot along the seal path once. The cobot then repeats this motion on subsequent doors, freeing workers for higher-value activities.

Service Robots

Service robots assist humans in commercial and public environments. These robots navigate among people, interact through natural interfaces, and perform useful tasks.

Hospitality robots deliver items to hotel rooms, provide information to guests, and guide visitors. Relay robots operate in over 5,000 hotels worldwide, delivering amenities to rooms autonomously. They navigate hallways, summon elevators, and call guests upon arrival.

Retail robots perform inventory management by scanning shelves to identify out-of-stock items, misplaced products, and pricing errors. Simbe Robotics’ Tally robot operates in grocery stores during business hours, navigating among shoppers to maintain accurate inventory data.

Public space robots provide cleaning, security, and information services:

- Knightscope security robots patrol parking lots and campuses

- Detect anomalies and deter crime through visible presence

- Cleaning robots operate in airports and malls

Healthcare Robots

Healthcare robots assist surgeons, rehabilitate patients, and care for elderly individuals. These applications demand exceptional safety and reliability.

Surgical robots enhance surgeon capabilities. The da Vinci system translates surgeon hand motions into scaled micro-movements of surgical instruments. This enables minimally invasive procedures through small incisions. AI-enhanced systems provide instrument tracking, tissue identification, and surgical guidance, reducing recovery time and complications.

Rehabilitation robots assist patients recovering from strokes or injuries. They provide precisely controlled resistance during therapy exercises. They measure patient progress objectively. They adapt difficulty based on patient performance, enabling consistent therapy sessions.

Elderly care robots address caregiver shortages through multiple functions:

- Remind patients to take medications on schedule

- Detect falls and summon help immediately

- Provide social interaction to reduce isolation

- Paro robotic seal provides therapeutic benefits through animal-like responsiveness

Autonomous Mobile Robots (AMRs)

Autonomous mobile robots (AMRs) navigate dynamically changing environments without fixed infrastructure. They transport goods, inspect facilities, and gather data.

Warehousing robots move inventory efficiently:

- Amazon employs over 500,000 mobile robots in fulfillment centers

- Transport shelving units to human pickers

- Bring inventory to workers rather than requiring workers to walk to inventory

- Increases efficiency and reduces picker fatigue

Last-mile delivery robots:

- Starship Technologies completed millions of autonomous deliveries

- Navigate pedestrian areas and cross streets at intersections

- Notify customers when they arrive at the destination

- Operate on sidewalks safely among pedestrians

Urban mobility robots transport people:

- Autonomous shuttles operate in controlled environments

- Used in campuses and retirement communities

- Follow fixed routes but adapt to obstacles in real time

Humanoid Robots

Humanoid robots possess human-like physical forms. This shape offers advantages in environments designed for humans.

Capabilities of humanoid robots:

- Climb stairs and navigate narrow spaces

- Open doors and operate switches

- Use tools designed for human hands

- Work in spaces built for human proportions

Leading humanoid robot projects:

- Tesla Optimus – 5’8″ robot for general-purpose tasks in homes and factories

- Figure 01 – targets commercial warehouse and manufacturing applications

- Boston Dynamics Atlas – demonstrates advanced mobility including backflips and parkour

Current challenges:

- Bipedal locomotion requires precise balance control

- Dexterous manipulation demands sophisticated tactile sensing

- Power consumption limits operation duration

- High costs compared to specialized robots

- Currently constrained to research and limited commercial trials

Real-World Applications of AI in Robotics

Smart Manufacturing

Smart manufacturing integrates AI-powered robots into flexible production systems. These systems adapt to product variations and optimize processes.

Adaptive assembly lines:

- Reconfigure for different products through software updates

- Vision systems identify component types

- Robots adjust motions automatically

- Single line produces multiple product variants

- No dedicated tooling needed for each variant

Predictive maintenance with AI:

- Siemens reports 20% reduction in maintenance costs

- 70% decrease in breakdowns using AI-driven systems

- Sensors monitor equipment continuously

- ML models learn normal operating signatures

- Deviations trigger maintenance alerts before failures

Agriculture

Agricultural robots address labor shortages and improve efficiency. They monitor crops, perform selective interventions, and operate autonomously outdoors.

Crop monitoring robots:

- Patrol fields continuously

- Capture multispectral images revealing plant health

- Identify diseased plants, nutrient deficiencies, and pest infestations

- Enable early interventions when most effective

Autonomous harvesting robots:

- Abundant Robotics developed apple harvesting robots with computer vision

- Use vacuum systems to detach fruit gently

- Root AI soft grippers harvest tomatoes without bruising

- Operate 24/7 during harvest season

Weed detection and removal:

- Blue River Technology See and Spray system

- Reduces herbicide usage by up to 90%

- Sprays only weeds rather than entire fields

- Computer vision distinguishes crops from weeds at centimeter accuracy

- Operates at field speeds

Autonomous Vehicles and Drones

Autonomous vehicles and drones navigate complex environments using AI perception, planning, and control. They operate on roads, in airspace, and across varied terrain.

Navigation systems fuse multiple sensors:

- Combine GPS, inertial sensors, cameras, LiDAR, and radar

- Maintain precise localization despite GPS dropouts

- Predict motion of surrounding vehicles and pedestrians

- Plan safe trajectories accounting for uncertainty
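
The way fusion bridges GPS dropouts can be sketched with a one-dimensional Kalman filter. The filter predicts position from a velocity estimate (as inertial sensors or wheel odometry would provide) and corrects it whenever a GPS fix arrives. The noise values, the one-dimensional motion model, and the sample data are assumptions for illustration only.

```python
def kalman_1d(gps_fixes, velocities, dt=1.0, process_var=0.1, gps_var=4.0):
    """Fuse velocity-based prediction with noisy GPS position fixes.

    gps_fixes: position measurements in meters, or None during a dropout
    velocities: velocity estimates in m/s, one per time step
    Returns a list of (position_estimate, variance) per time step.
    """
    position, variance = 0.0, 1.0
    estimates = []
    for fix, velocity in zip(gps_fixes, velocities):
        # Predict: move with the measured velocity; uncertainty grows.
        position += velocity * dt
        variance += process_var
        if fix is not None:
            # Update: blend in the GPS fix, weighted by relative confidence.
            gain = variance / (variance + gps_var)
            position += gain * (fix - position)
            variance *= (1.0 - gain)
        estimates.append((position, variance))
    return estimates

# Vehicle moving at 1 m/s; GPS is lost for two steps in the middle.
fixes = [1.2, None, None, 3.9, 5.1]
track = kalman_1d(fixes, velocities=[1.0] * 5)
```

During the dropout the position estimate keeps advancing from the velocity alone while its variance grows. The next GPS fix pulls the estimate back and shrinks the variance again.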

3D mapping creates detailed environmental models:

- Build high-definition maps during normal operation

- Include lane markings, traffic signs, and elevation at centimeter accuracy

- Vehicles localize by matching current sensors to stored maps

- Update maps continuously with new data

Obstacle avoidance prevents collisions:

- Detect obstacles at ranges from centimeters to hundreds of meters

- Classify objects as static or dynamic

- Predict future positions of moving obstacles

- Plan evasive maneuvers when needed

Space Exploration

Space robots operate in extreme environments with minimal human oversight. Communication delays prevent real-time control. Robots must function autonomously for extended periods.

Mars rovers navigate autonomously:

- Perseverance rover uses AutoNav system for autonomous driving

- Processes stereo camera images to identify hazards

- Plans safe paths without waiting for Earth commands

- Autonomous capability increased driving speed 5x compared to previous rovers

Robotic probes explore distant bodies:

- Europa Clipper is scheduled to reach the Jupiter system in 2030 and study the moon Europa through repeated flybys while orbiting Jupiter

- AI systems identify scientifically interesting surface features

- Schedule observations autonomously

- Necessary due to a one-way communication delay of roughly 45 minutes with Earth

Autonomous landers perform precision touchdowns:

- Mars 2020 used Terrain Relative Navigation during landing

- Compared camera images to onboard maps during descent

- Identified hazards and diverted to safer landing sites

- All decisions made in seven minutes between entry and touchdown

Defense and Security

Military and security robots perform dangerous tasks while keeping personnel safe. These applications demand exceptional reliability and ethical consideration.

Surveillance robots patrol critical areas:

- Monitor borders, facilities, and critical infrastructure

- Ground-based robots and aerial drones detect intrusions

- AI systems distinguish normal activity from potential threats

- Reduce false alarms that burden security personnel

Bomb disposal robots:

- Approach and neutralize explosive devices

- Provide operators with visual and sensor feedback

- AI recognizes explosive types

- Suggests neutralization approaches

- Speeds operations and improves safety

Tactical robots support military operations:

- PackBot and similar systems scout dangerous areas

- Investigate buildings and gather intelligence

- Climb stairs and traverse rubble

- Operate in conditions hazardous to humans

Challenges and Limitations of AI in Robotics

Technical Challenges

Despite advances, significant technical obstacles constrain AI robotics capabilities.

Data scarcity in real-world situations:

- Simulation provides unlimited training data

- Simulated data differs from real-world observations

- Transfer learning from simulation to reality remains imperfect

- Collecting real-world data proves expensive and time-consuming

Real-time computation limits:

- Robots must process data within milliseconds

- Complex deep learning models require substantial computation

- Mobile robots have limited onboard computing power

- Constrained by size, weight, and power budgets

Hardware constraints in edge systems:

- Powerful GPUs consume too much energy for battery-powered robots

- Specialized AI accelerators improve efficiency but add cost

- Balancing performance, power consumption, and cost remains challenging

Safety and Reliability

Robots operate in environments with humans and valuable assets. Failures can cause injuries or damage.

Uncertainty in perception creates safety risks:

- Computer vision systems misclassify objects

- Sensors fail or provide noisy readings

- Rare situations may not appear in training data

- Robots must recognize when perceptions are unreliable

- Must act conservatively under uncertainty

Fail-safe mechanisms prevent harm:

- Emergency stops halt robot motion immediately

- Redundant systems provide backup capability

- Software watchdogs monitor for algorithmic errors

- Multiple layers of safety protection

Verification and validation challenges:

- Traditional software can be verified against explicit specifications

- Neural networks learn their behavior from data and lack such specifications

- Testing all possible inputs proves infeasible

- Statistical validation provides limited guarantees

Ethical and Social Issues

AI robotics raises societal concerns that extend beyond technical considerations.

Job displacement affects workers:

- Manufacturing employment declined as automation increased

- New jobs emerge in robot programming, maintenance, and supervision

- Require different skills than displaced roles

- Retraining programs help but do not reach all affected workers

Data privacy concerns:

- Service robots record video of people without explicit consent

- Robots in homes access intimate details of daily life

- Manufacturers must implement privacy protections

- Balance functionality with privacy rights

Human safety and trust:

- People fear robot malfunctions could cause injury

- Building trust demands transparent operation

- Requires predictable behavior

- Needs demonstrated safety records

- Clear communication of capabilities and limitations

Future Trends in AI Robotics

Foundation Models for Robotics

Foundation models trained on massive datasets enable robots to perform diverse tasks without task-specific training.

Robotics transformers process sequences:

- Learn relationships between visual inputs, language, and motor commands

- Google’s RT-2 combines vision and language understanding

- Allows robots to follow complex instructions

- Works with objects robots have never encountered

Vision-Language-Action (VLA) models:

- Unify perception, understanding, and control

- Process camera images and interpret language commands

- Output motor actions directly

- Train on millions of demonstrations from different robots

- Generalize across embodiments and tasks

Universal skill learning:

- Creates libraries of reusable behaviors

- Learn elemental skills like grasping, pushing, and placing

- Complex tasks combine these skills

- Compositional approach scales better than task-specific training

Self-Supervised Learning for Embodied Agents

Self-supervised learning reduces the need for manually labeled training data. Robots learn from raw sensory streams.

Learning from raw sensory streams:

- Trains models to understand physics and causality

- Robots observe objects falling and learn gravity

- Observe pushing objects and learn forces cause movement

- Learned physical principles transfer to manipulation tasks

Zero-shot action capabilities:

- Models predict sensory consequences of actions

- Plan action sequences for novel goals without additional training

- Robots reason about which actions will achieve desired outcomes

- Based on learned forward models

Swarm Robotics with AI

Swarm robotics coordinates many simple robots to accomplish complex tasks. AI enables decentralized cooperation without central control.

Multi-agent coordination algorithms:

- Let robots divide tasks efficiently

- Market-based approaches assign tasks based on capabilities and positions

- Graph neural networks learn coordination strategies

- Swarms adapt to robot failures by reallocating tasks
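
A market-based allocation can be sketched as a sequential auction in which each robot bids its distance to a task and the lowest bid wins. The robot names, task names, and coordinates are hypothetical, and this greedy scheme is only one simple variant of the idea.

```python
import math

def allocate_tasks(robots, tasks):
    """Assign each task to the nearest robot that is still free.

    robots, tasks: dicts mapping a name to an (x, y) position in meters.
    Returns a dict mapping task name to the winning robot name.
    """
    assignment = {}
    free = dict(robots)
    for task, location in tasks.items():
        if not free:
            break                                   # more tasks than robots
        # Each free robot "bids" its travel distance; the lowest bid wins.
        winner = min(free, key=lambda name: math.dist(free[name], location))
        assignment[task] = winner
        del free[winner]
    return assignment

robots = {"r1": (0, 0), "r2": (10, 0), "r3": (5, 5)}
tasks = {"pick_A": (9, 1), "pick_B": (1, 1)}

plan = allocate_tasks(robots, tasks)
# plan == {"pick_A": "r2", "pick_B": "r1"}

# If r2 fails, rerunning the auction reallocates its task.
survivors = {name: pos for name, pos in robots.items() if name != "r2"}
new_plan = allocate_tasks(survivors, tasks)
# new_plan == {"pick_A": "r3", "pick_B": "r1"}
```

Rerunning the auction after a failure is what lets the swarm recover without a central planner deciding every move in advance.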

Distributed intelligence emerges from local interactions:

- Each robot follows simple rules based on neighbors

- Complex collective behaviors emerge without global planning

- Ant-inspired algorithms for foraging and nest construction

- Translates to robot swarms for search and construction tasks

Human-Robot Collaboration at Scale

Future workplaces will feature extensive human-robot collaboration. AI enables robots to understand and adapt to human coworkers.

AI for personalized interactions:

- Adapts robot behavior to individual preferences

- Learns that some workers prefer direct task assignment

- Others want suggestions rather than commands

- Adjusts communication style, working pace, and assistance level

- Based on worker responses over time

Robots adapting to human intentions:

- Predict what humans will do next

- Watch worker’s gaze and posture

- Anticipate which tool the worker needs

- Position tool within reach proactively

- Improves workflow efficiency

Conclusion

Artificial intelligence transforms robots from mechanical tools into intelligent partners. Perception systems give robots senses. Learning algorithms provide adaptability. Natural language interfaces enable communication. This combination creates machines that operate effectively in the messy, unpredictable real world.

The robotics stack integrates multiple AI subsystems:

- Perception layers process sensor data

- Planning layers decide actions

- Control layers execute motions

- Learning layers improve performance

Understanding this architecture helps engineers design better systems and users set realistic expectations.

Real-world applications span multiple industries:

- Manufacturing – industrial robots adapt to product variations

- Agriculture – robots perform selective interventions

- Healthcare – surgical robots assist surgeons and caregivers

- Logistics – mobile robots navigate dynamic environments autonomously

- Space exploration – robots explore distant worlds with minimal oversight

Challenges remain substantial:

- Technical limitations constrain capabilities

- Safety concerns require careful design

- Ethical issues demand thoughtful policies

Addressing these challenges will determine how quickly and widely AI robotics deploys.

Future developments promise more capable systems:

- Foundation models will enable general-purpose robots

- Self-supervised learning will reduce data requirements

- Swarm robotics will scale to thousands of coordinated agents

- Human-robot collaboration will become seamless and natural

Understanding these systems empowers students, engineers, and decision-makers. Students learn valuable skills for emerging careers. Engineers build better systems. Business leaders make informed investment decisions. Policymakers craft appropriate regulations. Everyone benefits from thoughtful development of this transformative technology.

Frequently Asked Questions About AI in Robotics

Get answers to the most common questions about artificial intelligence in robotics, from basic concepts to practical applications and future trends.

1. What is the difference between AI robots and traditional robots?

Traditional robots execute predetermined sequences without adaptation. AI robots perceive their environment through sensors, make decisions based on observations, and learn from experience. Traditional robots require reprogramming for new tasks. AI robots adapt automatically. For example, a traditional welding robot welds at fixed coordinates. An AI welding robot uses computer vision to find weld points on parts with position variations.

2. What are the main types of AI used in robotics today?

Four main AI types power modern robots:

- Machine Learning – enables pattern recognition and classification in sensor data

- Computer Vision – processes camera and sensor inputs for object detection and navigation

- Natural Language Processing – interprets voice commands and enables human communication

- Reinforcement Learning – optimizes robot behaviors through trial-and-error learning

Most advanced robots combine multiple AI techniques for robust performance.

3. Which industries use AI robotics the most?

Manufacturing leads AI robotics adoption. Factories deploy industrial robots for welding, assembly, and quality inspection. Healthcare ranks second with surgical robots and rehabilitation systems. Agriculture uses autonomous harvesters and crop monitoring robots. Logistics relies on warehouse robots for inventory management. Space exploration depends on autonomous rovers. Amazon alone employs over 500,000 mobile robots in fulfillment centers.

4. How do robots learn new tasks?

Robots learn through three primary methods:

Supervised learning – robots train on labeled examples showing correct outputs for given inputs. A sorting robot learns to classify packages by training on thousands of labeled images.

Reinforcement learning – robots learn through trial and error with reward signals. Successful actions receive positive rewards. Failed actions receive negative rewards. The robot discovers optimal strategies over thousands of attempts.

Imitation learning – robots observe human demonstrations and replicate behaviors. A robot watches a human grasp objects and learns grasping strategies from these observations.
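The reinforcement learning loop described above can be illustrated with a minimal sketch. It assumes a toy task that is not from this article: a robot in a five-cell corridor that is rewarded only for reaching the rightmost cell. The function names, the reward of 1.0, and the learning parameters are all illustrative choices, and the sketch uses tabular Q-learning, one of several reinforcement learning algorithms.

```python
import random


def train_corridor(n_states=5, episodes=500, alpha=0.5, gamma=0.9,
                   epsilon=0.2, seed=0):
    """Tabular Q-learning on a toy 1-D corridor (hypothetical task).

    The robot starts in cell 0 and earns a reward of 1.0 only when it
    reaches the rightmost cell. Returns the learned Q-table.
    """
    rng = random.Random(seed)
    moves = (-1, +1)                      # action 0 = left, action 1 = right
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]

    for _ in range(episodes):
        state = 0
        for _ in range(100):              # cap the length of each attempt
            # Explore at random sometimes (and on ties), otherwise act greedily.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1

            next_state = min(max(state + moves[action], 0), goal)
            reached_goal = next_state == goal
            reward = 1.0 if reached_goal else 0.0

            # Q-learning update: move the estimate toward
            # reward + discounted best value of the next state.
            target = reward if reached_goal else gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])

            if reached_goal:
                break
            state = next_state
    return q


def greedy_policy(q):
    """Best action for each non-goal cell according to the Q-table."""
    return ["left" if left > right else "right" for left, right in q[:-1]]


q_table = train_corridor()
print(greedy_policy(q_table))
```

Real robots face continuous states and actions, so practical systems usually replace the table with a neural network and do most of the trial and error in simulation.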

5. Can AI robots work safely alongside humans?

Yes, collaborative robots (cobots) are designed specifically for human interaction. They use multiple safety systems, including depth cameras to track human positions, force sensors to detect unexpected contact, and speed limiting when humans approach. BMW uses cobots to install door seals alongside human workers. When a person enters the robot workspace, the robot automatically slows or stops. Modern cobots are designed to comply with ISO/TS 15066, the technical specification for collaborative robot operation.
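The slow-or-stop behavior can be sketched as a simple speed-scaling rule. This is a minimal illustration only: the function name and the distance thresholds below are assumptions chosen for readability, not values taken from ISO/TS 15066 or from any vendor's controller.

```python
def allowed_speed(distance_m, max_speed=1.0, stop_distance=0.5,
                  slow_distance=2.0):
    """Scale the robot's speed limit by distance to the nearest person.

    All thresholds are illustrative assumptions (metres and m/s).
    Inside stop_distance the robot halts; beyond slow_distance it may
    run at full speed; in between, the limit ramps up linearly.
    """
    if distance_m <= stop_distance:
        return 0.0
    if distance_m >= slow_distance:
        return max_speed
    fraction = (distance_m - stop_distance) / (slow_distance - stop_distance)
    return max_speed * fraction


for distance in (0.3, 1.25, 3.0):
    print(distance, allowed_speed(distance))
```

A certified safety system derives its limits from a formal risk assessment and runs on safety-rated hardware; the sketch only shows the shape of the logic.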

6. How much does an AI robot cost?

Costs vary significantly by robot type and capabilities:

- Industrial robots – $25,000 to $400,000 depending on payload and precision

- Collaborative robots – $15,000 to $50,000 for entry-level systems

- Service robots – $20,000 to $100,000 for commercial applications

- Autonomous mobile robots – $30,000 to $150,000 for warehouse systems

- Humanoid robots – $100,000 to over $1 million for research platforms

Prices tend to fall as the technology matures and production volumes grow.

7. What programming languages are used for AI robotics?

Python dominates AI robotics programming because of its extensive machine learning libraries, such as TensorFlow, PyTorch, and scikit-learn. C++ is used for real-time control systems that require high performance. ROS (Robot Operating System) provides client libraries for both Python and C++. MATLAB is common in research and prototyping. Additional languages include Java for enterprise applications and Julia for numerical computing. Most modern robots combine several languages, typically Python for AI and C++ for low-level control.

8. How long does it take to train an AI robot?

Training time varies dramatically by task complexity:

Simple classification tasks – hours to days with supervised learning on existing datasets.

Manipulation skills – weeks to months using reinforcement learning with thousands of practice attempts.

Complex navigation – months of training in simulation plus real-world fine-tuning.

General-purpose capabilities – continuous learning over months or years of operation.

Google’s robotic grasping project trained robots over several months with 800,000 grasp attempts to achieve 96% success rates on novel objects.

9. What sensors do AI robots use to perceive their environment?

AI robots integrate multiple sensor types for comprehensive perception:

- RGB cameras – capture color images for object recognition

- Depth cameras – measure distances to surfaces for 3D understanding

- LiDAR – creates precise 3D point clouds for mapping

- Radar – detects objects through fog and darkness

- IMU sensors – track orientation and acceleration

- Force/torque sensors – measure contact forces for manipulation

- Microphones – capture audio for voice commands

Sensor fusion combines these inputs to produce perception that is more robust than any single sensor can provide.
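One simple way to see why fusing sensors helps is inverse-variance weighting, sketched below. It assumes each sensor reports the same quantity with independent, roughly Gaussian noise of known variance. The function name and the example readings are hypothetical, and production systems typically use richer estimators such as Kalman filters.

```python
def fuse_measurements(readings):
    """Fuse independent measurements of the same quantity.

    readings: list of (value, variance) pairs, one per sensor.
    Each reading is weighted by 1 / variance, so more precise sensors
    count for more. Returns (fused_value, fused_variance).
    """
    weights = [1.0 / variance for _, variance in readings]
    total_weight = sum(weights)
    fused_value = sum(w * value
                      for w, (value, _) in zip(weights, readings)) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical distance readings to the same wall, in metres:
lidar = (2.00, 0.01)          # precise sensor, low variance
depth_camera = (2.10, 0.04)   # noisier sensor, higher variance

value, variance = fuse_measurements([lidar, depth_camera])
print(value, variance)
```

Under these assumptions the fused variance is smaller than the variance of either input, which is the sense in which the combined estimate beats each sensor alone.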

10. Will AI robots take human jobs?

AI robots will transform jobs rather than simply eliminate them. Automation affects repetitive, predictable tasks most significantly. Manufacturing employment declined as automation increased. However, new roles emerge in robot programming, maintenance, supervision, and system design. In its Future of Jobs Report 2020, the World Economic Forum estimated that automation would displace 85 million jobs by 2025 while creating 97 million new roles. Successful adaptation requires retraining programs and education in AI, robotics, and automation technologies. Human skills in creativity, complex problem-solving, and emotional intelligence remain difficult to automate.

11. What are the biggest challenges in AI robotics?

Five major challenges limit current AI robotics:

Real-world data scarcity – collecting diverse training data from physical robots proves expensive and time-consuming.

Computational constraints – mobile robots have limited onboard computing power for complex AI models.

Safety and reliability – ensuring robots operate safely despite perception uncertainties and unexpected situations.

Generalization – robots trained on specific tasks struggle with variations and novel scenarios.

Cost – advanced AI robotics systems remain expensive, limiting widespread adoption.

12. How do autonomous robots navigate without GPS?

Robots use SLAM (Simultaneous Localization and Mapping) to navigate indoors and in GPS-denied environments. SLAM algorithms build maps while tracking robot position. The robot matches features in current sensor readings to its map. It estimates movement by tracking how these features change between readings. Visual SLAM uses cameras. LiDAR SLAM uses laser scanners. The Mars Perseverance rover uses visual odometry and terrain-relative navigation to traverse the Martian surface without GPS. Warehouse robots combine SLAM with pre-built maps for efficient navigation.
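The step of estimating movement from how matched features shift between readings can be sketched in two dimensions. The example assumes the hard part, deciding which feature in one reading corresponds to which in the next, is already solved, and that there are no outliers. The function name and the landmark coordinates are hypothetical. Real SLAM systems add outlier rejection, uncertainty tracking, and map optimization on top of this.

```python
import math


def estimate_rigid_motion(prev_points, curr_points):
    """Least-squares 2-D rotation and translation between two readings.

    prev_points[i] and curr_points[i] must be the same landmark seen in
    the robot's frame at two times. Returns (theta, (tx, ty)) such that
    curr is approximately rotate(prev, theta) + (tx, ty). The robot's own
    motion is the inverse of this transform.
    """
    n = len(prev_points)
    pcx = sum(x for x, _ in prev_points) / n
    pcy = sum(y for _, y in prev_points) / n
    ccx = sum(x for x, _ in curr_points) / n
    ccy = sum(y for _, y in curr_points) / n

    dot = cross = 0.0
    for (px, py), (cx, cy) in zip(prev_points, curr_points):
        ax, ay = px - pcx, py - pcy       # centred previous point
        bx, by = cx - ccx, cy - ccy       # centred current point
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx

    theta = math.atan2(cross, dot)        # best-fit rotation angle
    c, s = math.cos(theta), math.sin(theta)
    tx = ccx - (c * pcx - s * pcy)        # translation aligning centroids
    ty = ccy - (s * pcx + c * pcy)
    return theta, (tx, ty)


# Synthetic check: apply a known motion, then recover it.
true_theta, true_t = 0.1, (0.5, -0.2)
landmarks = [(1.0, 0.0), (0.0, 2.0), (-1.5, 0.5), (2.0, 2.0)]
moved = [(math.cos(true_theta) * x - math.sin(true_theta) * y + true_t[0],
          math.sin(true_theta) * x + math.cos(true_theta) * y + true_t[1])
         for x, y in landmarks]

theta, (tx, ty) = estimate_rigid_motion(landmarks, moved)
print(theta, tx, ty)
```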

13. What is the future of AI robotics?

Foundation models will enable general-purpose robots that perform diverse tasks without task-specific training. Key future trends include:

- Vision-language-action models – robots understanding complex instructions involving objects they have never seen

- Self-supervised learning – robots learning from raw sensory streams without labeled data

- Swarm robotics – thousands of coordinated robots accomplishing complex tasks collaboratively

- Seamless human-robot collaboration – robots predicting human intentions and adapting to individual preferences

- Humanoid robots – general-purpose robots operating in human environments

Experts predict the robotics market will exceed $150 billion by 2030, with service robots representing the fastest-growing segment.

External Resources and Further Reading

Explore these resources to deepen your understanding of artificial intelligence in robotics.

Official Documentation and Frameworks

ROS (Robot Operating System)

The most widely used robotics middleware framework with comprehensive documentation for building robot applications.

Visit ROS.org

TensorFlow

Google’s open-source machine learning framework with extensive robotics applications and tutorials.

Visit TensorFlow.org

PyTorch

Deep learning framework widely adopted in robotics research for developing neural networks and AI models.

Visit PyTorch.org

OpenCV

Open-source computer vision library essential for robot perception and real-time image processing.

Visit OpenCV.org

Research Papers and Academic Publications

arXiv Robotics

Open-access repository of robotics research papers and preprints from leading researchers worldwide.

Visit arXiv.org

Google Scholar – Robotics

Search engine for scholarly literature on robotics, AI, and machine learning research papers.

Visit Google Scholar

IEEE Xplore

Digital library with technical literature in robotics, automation, and artificial intelligence.

Visit IEEE Xplore

Papers With Code – Robotics

Research papers paired with code implementations for reproducible robotics and machine learning.

Visit Papers With Code

Online Courses and Learning Platforms

Coursera Robotics Courses

University-level robotics courses covering perception, planning, control, and machine learning.

Visit Coursera

edX Robotics Programs

Free online courses from top universities on autonomous systems and robot programming.

Visit edX

YouTube – Robotics Tutorials

Video tutorials and lectures on robotics programming, ROS, and AI implementation.

Visit YouTube

GitHub Robotics Projects

Open-source robotics projects, code examples, and collaborative development repositories.

Visit GitHub

News and Industry Updates

IEEE Spectrum Robotics

Latest news, trends, and developments in robotics technology and automation.

Visit IEEE Spectrum

The Robot Report

Industry news covering robotics companies, products, research, and market analysis.

Visit The Robot Report

MIT Technology Review – Robotics

In-depth articles on emerging robotics technologies and their societal impact.

Visit MIT Technology Review

Robotics Business Review

Business intelligence and market insights for the robotics and automation industry.

Visit Robotics Business Review

Development Tools and Simulators

Gazebo Simulator

Open-source 3D robotics simulator for testing algorithms, designing robots, and training AI systems.

Visit Gazebo

Webots Robot Simulator

Professional robot simulation software with realistic physics and multiple robot models.

Visit Webots

NVIDIA Isaac Sim

GPU-accelerated robotics simulation platform for AI-powered robot development and testing.

Visit NVIDIA Isaac

MoveIt Motion Planning

State-of-the-art software for mobile manipulation, motion planning, and collision avoidance.

Visit MoveIt
