A Closer Look at Generative Adversarial Networks (GANs)

Introduction to Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) have truly changed the game in artificial intelligence and deep learning since they were introduced by Ian Goodfellow in 2014. At their core, GANs involve two neural networks: a Generator and a Discriminator. These networks work together in a unique way to create new, synthetic data.

In this article, we’ll take a close look at GANs, starting with their basic structure and how they function. We’ll explore their various uses and the challenges they face. By the end, you’ll have a solid grasp of GANs and how they might transform different industries.

Additionally, we can also explore the practical implementation of GANs using Python for image generation.

Definition of Generative Adversarial Networks GANs

Generative Adversarial Networks (GANs) are a type of machine learning system made up of two parts: the Generator and the Discriminator. These two parts work together in a way that’s like a game.

- Generator: This part creates new data, like fake images or text. Its job is to make this data look as real as possible.

- Discriminator: This part checks the data and tries to figure out if it’s real or if it was made by the Generator.

Here’s how it works: The Generator creates some data, and then the Discriminator examines it to decide if it’s real or fake. The Generator’s goal is to trick the Discriminator into thinking its data is real, while the Discriminator’s job is to get better at detecting fakes. They go back and forth like this: the Generator keeps improving its data to fool the Discriminator, and the Discriminator keeps getting better at spotting what’s fake. Over time, this ongoing competition helps both the Generator and the Discriminator improve, leading to more realistic data being created.

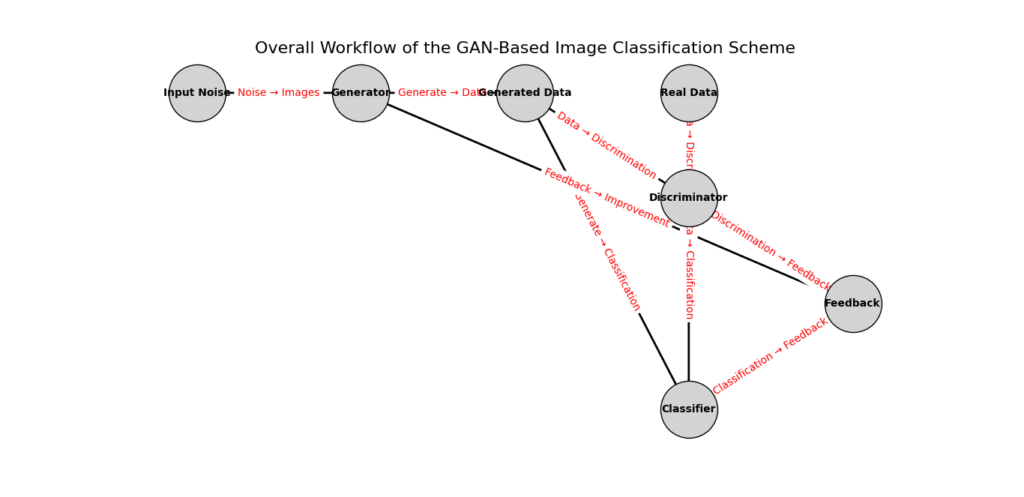

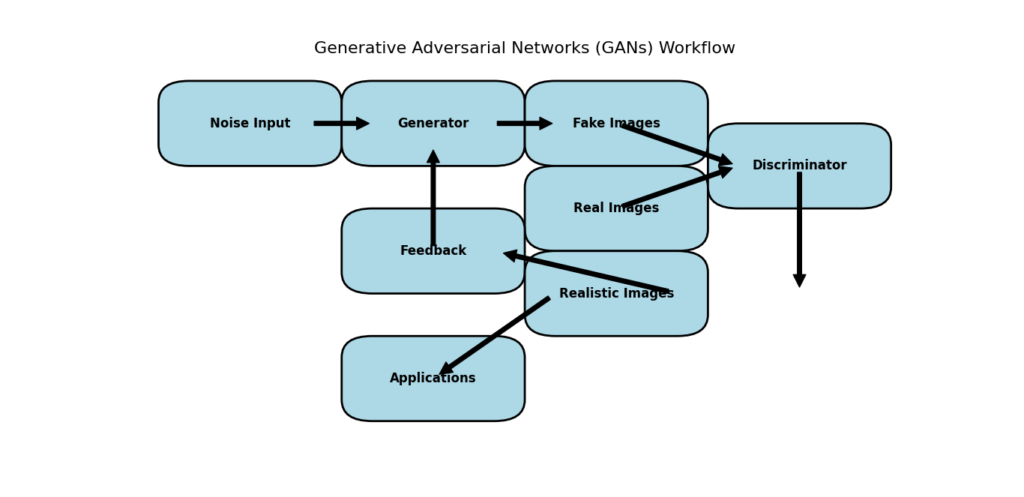

GAN Architecture

Figure 1: Basic architecture of Generative Adversarial Networks (GANs).

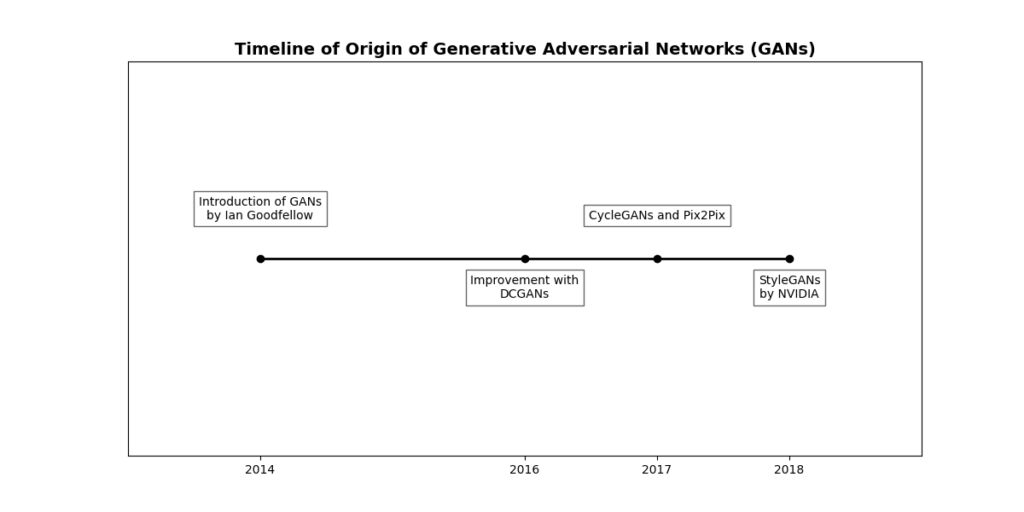

Brief History and Origin of Generative Adversarial Networks (GANs)

Introduction by Ian Goodfellow (2014): In 2014, Ian Goodfellow and his team introduced Generative Adversarial Networks (GANs) in a groundbreaking paper. The idea was fresh and innovative: they proposed using two neural networks that work in opposition to each other. Goodfellow’s introduction of GANs marked a significant shift in how artificial intelligence models can be trained, paving the way for advancements in creating synthetic data that closely resembles real-world information.

Initial Reactions and Development (2014-2015): When Ian Goodfellow and his team first introduced Generative Adversarial Networks (GANs) in 2014, the idea sparked significant interest in the AI community. Researchers were excited about the potential of GANs to create highly realistic data and push the boundaries of what artificial intelligence could achieve.

In the first year, the focus was on understanding and improving GANs. Challenges like training stability and the quality of generated data were addressed, with researchers experimenting with different methods to enhance GAN performance. Early developments showed promising results, especially in generating high-quality images, setting the stage for further advancements and wider use of GANs in the future.

First Successful Applications (2016): By 2016, Generative Adversarial Networks (GANs) started to prove their practical worth. One standout achievement was their ability to create incredibly realistic images of human faces that looked just like real photographs. This showed that GANs could make a big impact in areas like computer vision and image creation. Seeing these successes helped establish GANs as an important tool in machine learning.

Improvement of GAN Architectures (2017-2018): As GANs gained popularity, researchers developed improved versions to address the limitations of the original model. For example:

- Deep Convolutional GANs (DCGANs): Introduced in 2015, DCGANs used convolutional layers to improve the quality of generated images and enhance training stability.

- Wasserstein GANs (WGANs): Introduced in 2017, WGANs provided a new approach to measuring the distance between real and generated data, leading to more stable training and better results. These innovations helped make GANs more effective and versatile.

Wider Adoption and Innovations (2019-Present): GANs have become widely adopted across various fields. They are now used for applications such as generating art, improving medical imaging, creating realistic virtual environments, and even generating synthetic data for training other machine learning models. New variants of GANs, such as Conditional GANs (CGANs) and StyleGANs, continue to emerge, offering even more capabilities and applications. This ongoing innovation highlights GANs’ growing impact and their role in pushing the boundaries of what AI can achieve.

Importance and Impact of GANs in AI and Machine Learning

Generative Adversarial Networks (GANs) have revolutionized various fields by enabling realistic data generation and enhancing technological innovation. Here’s a closer look at their impact:

- Realistic Data Generation:

- Image Generation: Generative Adversarial Networks (GANs) can create highly realistic images of people, animals, or objects that don’t exist. For example, in entertainment, studios can generate lifelike characters or environments, saving the need for real-life counterparts.

- Data Augmentation: In machine learning, having diverse data helps improve model performance. GANs can generate additional training data, which helps models learn better and generalize to new situations, especially when real data is scarce or expensive.

- Advancements in Creative Fields:

- Art and Design: GANs have transformed creative industries by generating new pieces of artwork. Artists and designers use GANs to create unique paintings or designs based on existing styles, exploring new creative avenues.

- Music and Literature: Generative Adversarial Networks (GANs) are used to compose music and generate text, creating new musical compositions or stories, which helps creators brainstorm ideas and produce content more efficiently.

- Fashion Industry: Designers use GANs to explore new fashion trends by generating new clothing designs, predicting styles, and creating virtual fashion shows without producing physical garments.

- Enhanced Medical Imaging:

- Synthetic Medical Images: In healthcare, GANs generate synthetic medical images that mimic real patient data, which is useful for training diagnostic models when real data is scarce or confidential.

- Improving Image Quality: Generative Adversarial Networks (GANs) enhance the resolution and clarity of medical images, aiding doctors in diagnosing conditions more accurately by sharpening MRI scans or CT images.

- Innovation in Virtual Worlds:

- Virtual Reality (VR) and Augmented Reality (AR): GANs help generate detailed virtual worlds for VR applications, providing users with immersive experiences. They create realistic 3D environments that enhance the sense of presence.

- Augmented Reality: Generative Adversarial Networks (GANs) improve AR applications by generating realistic virtual objects and integrating them smoothly with the real world, useful for interactive gaming or virtual try-ons.

- Improved Data Privacy and Security:

- Synthetic Data for Privacy: GANs generate synthetic data that mimics real datasets without revealing personal or sensitive information. This is valuable for research and development, allowing safer experimentation without privacy risks.

- Research and Analysis: Generative Adversarial Networks (GANs) can be used in research and analysis without exposing confidential information, facilitating safer and more effective development processes.

- Advances in Language Processing:

- Text Generation and NLP: GANs produce coherent and contextually relevant text, useful for automated content creation, such as generating articles, stories, or reports.

- Improving Translation and Summarization: Generative Adversarial Networks (GANs) enhance machine translation and text summarization by generating more accurate and fluent language outputs, leading to better communication tools and information processing.

- Enhancement of Existing Technologies:

- Image and Video Enhancement: GANs improve the resolution of images, making low-resolution images clearer and more detailed, beneficial in applications like satellite imaging and photography.

- Style Transfer: Generative Adversarial Networks (GANs) enable the transfer of artistic styles to different images, such as applying the style of a famous painting to a photograph, creating unique and visually appealing results.

Now Let’s Explore the Components of Generative Adversarial Networks (GANs)

Must Read

- Why sorted() Is Safer Than list.sort() in Production Python Systems

- Monotonic Sequence in Python: 7 Practical Methods With Edge Cases, Interview Tips, and Performance Analysis

- How to Check if Dictionary Values Are Sorted in Python

- Check If a Tuple Is Sorted in Python — 5 Methods Explained

- How to Check If a List Is Sorted in Python (Without Using sort()) – 5 Efficient Methods

Components of Generative Adversarial Networks (GANs)

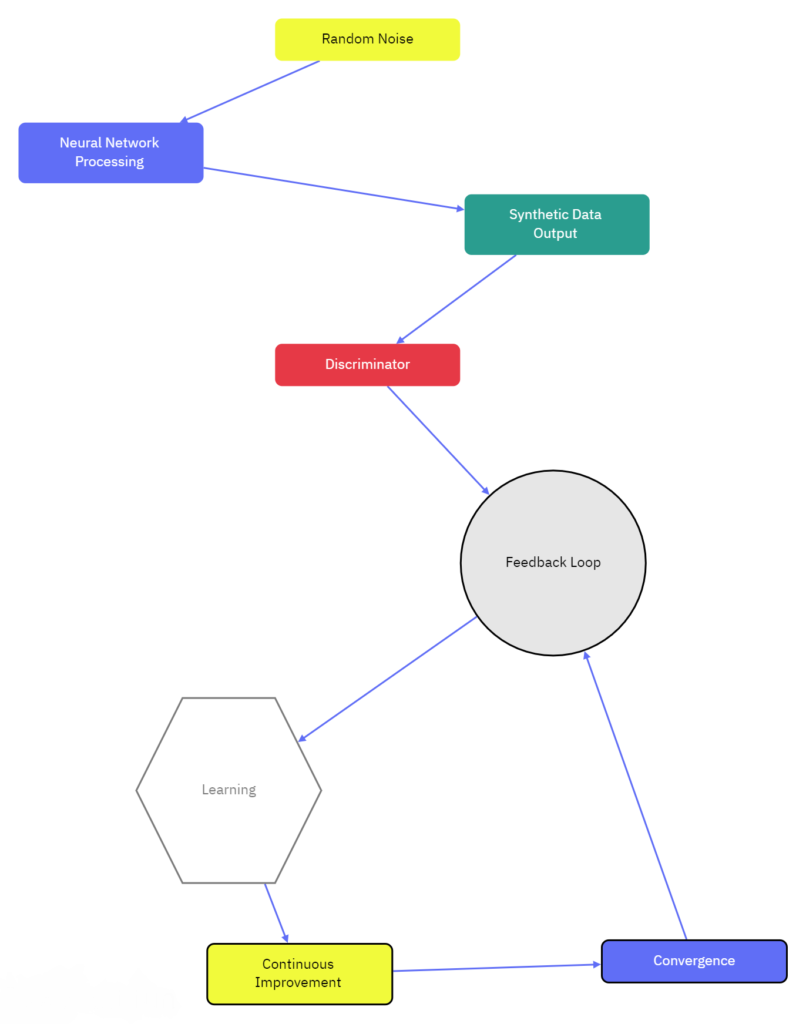

Generator

The Generator in a Generative Adversarial Network (GAN) is designed to create synthetic data that closely resembles real data. Here’s a detailed breakdown of how it functions:

- Starting Point – Random Noise:

- The Generator begins with random noise, which is a vector of random numbers. This noise acts as the initial input to the Generator. Think of it like a blank canvas or an unstructured set of data points.

- Transformation – Neural Network Processing:

- The random noise is fed into a neural network, which is a series of interconnected layers designed to process and transform this input. The neural network consists of several layers, including fully connected layers, activation functions, and sometimes convolutional layers if the data is image-based.

- Data Generation – Creating Synthetic Output:

- As the neural network processes the random noise, it transforms it into synthetic data. For example, if the task is image generation, the output will be an image that looks like it could be a real photograph. The Generator aims to produce data that mimics the features and patterns of real data.

- Output – Synthetic Data:

- The final result is the synthetic data, which is generated by the neural network based on the random noise input. This could be an image of a human face, a piece of text, or any other type of data that the GAN is trained to produce.

- Feedback Loop – Interaction with the Discriminator:

- The synthetic data generated by the Generator is then sent to the Discriminator. The Discriminator’s role is to evaluate the data and decide whether it looks real or fake. The feedback from the Discriminator is crucial for the Generator’s learning process.

- Learning – Adjusting Based on Feedback:

- The Generator uses the feedback from the Discriminator to improve its output. If the Discriminator identifies the data as fake, the Generator adjusts its neural network weights and biases to make the synthetic data more realistic. This adjustment is made through a process called backpropagation, where the error from the Discriminator’s feedback is used to refine the Generator’s parameters.

- Continuous Improvement – Iterative Process:

- This process of generating data, receiving feedback, and adjusting continues iteratively. With each cycle, the Generator learns from its mistakes and gets better at creating data that looks increasingly real. The goal is for the Generator to produce data so convincing that the Discriminator has a hard time telling it apart from real data.

- Convergence – Achieving High Quality:

- Over time, as the Generator and Discriminator train together, the Generator’s synthetic data becomes very close to real data. The training process aims for a balance where the Generator is producing highly realistic data and the Discriminator is becoming very good at detecting subtle differences.

Example Code

Here’s how you might define a simple Generator for image data in TensorFlow/Keras:

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Reshape, LeakyReLU, BatchNormalization, Conv2DTranspose

def build_generator():

model = Sequential()

model.add(Dense(128, input_dim=100, activation=LeakyReLU(0.2)))

model.add(BatchNormalization())

model.add(Dense(784, activation=LeakyReLU(0.2)))

model.add(Reshape((28, 28, 1)))

model.add(Conv2DTranspose(64, kernel_size=3, strides=2, padding='same', activation=LeakyReLU(0.2)))

model.add(Conv2DTranspose(1, kernel_size=3, strides=1, padding='same', activation='tanh'))

return model

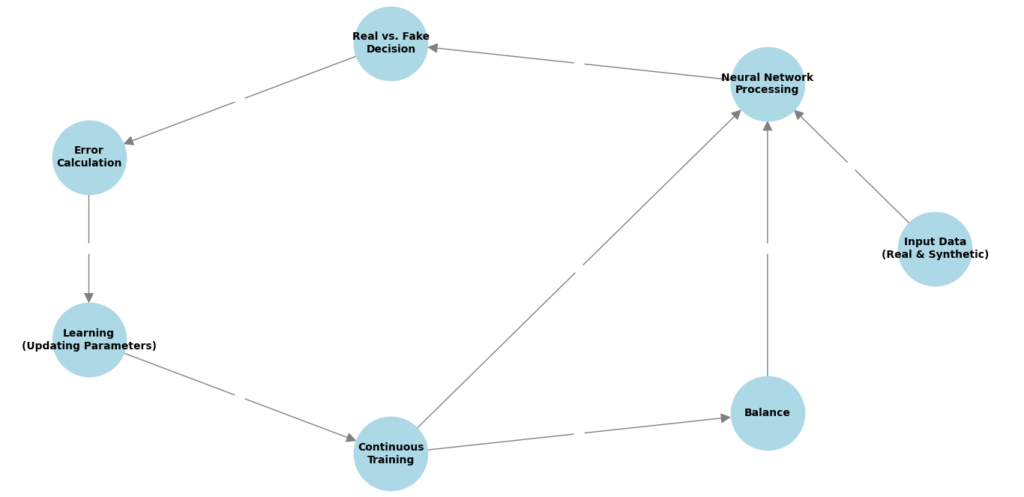

Discriminator

The Discriminator in a Generative Adversarial Network (GAN) is responsible for distinguishing between real and synthetic data. Here’s a detailed breakdown of how it operates:

- Input Data:

- The Discriminator receives data from two sources: real data (e.g., actual images) and synthetic data generated by the Generator.

- Data Evaluation – Neural Network Processing:

- The Discriminator uses a neural network to process the input data. This network typically includes several layers that analyze various features of the data, such as patterns, textures, or shapes.

- Real vs. Fake Decision – Classification:

- The goal of the Discriminator is to classify the input data as either real or fake. It does this by producing a probability score or classification result, indicating how likely it is that the data is real. For instance, a score close to 1 might indicate that the Discriminator believes the data is real, while a score close to 0 suggests it is fake.

- Feedback Generation – Error Calculation:

- After making its decision, the Discriminator calculates the error based on the correctness of its classification. If the Discriminator correctly identifies real and fake data, the error is low. If it makes mistakes, the error is higher.

- Learning – Updating Parameters:

- The Discriminator uses the calculated error to update its neural network parameters through a process called backpropagation. This involves adjusting the weights and biases in the network to improve its ability to distinguish between real and fake data. The goal is to minimize the classification error and enhance accuracy over time.

- Continuous Training – Iterative Improvement:

- The Discriminator undergoes continuous training as it interacts with the Generator. With each round of data, the Discriminator refines its ability to detect subtle differences between real and synthetic data. This ongoing training helps the Discriminator become more adept at spotting fake data and providing better feedback to the Generator.

- Balance – Maintaining Effective Training:

- For effective GAN training, the Discriminator needs to strike a balance. If it becomes too good at detecting fake data, the Generator may struggle to improve. Conversely, if the Discriminator is too lenient, the Generator might not receive accurate feedback. The dynamic between the Discriminator and Generator is crucial for both networks to develop and improve.

Example Code:

Here’s a simple Discriminator model for image data in TensorFlow/Keras:

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Flatten, Dense, LeakyReLU

def build_discriminator():

model = Sequential()

model.add(Flatten(input_shape=(28, 28, 1)))

model.add(Dense(128))

model.add(LeakyReLU(0.2))

model.add(Dense(1, activation='sigmoid'))

return model

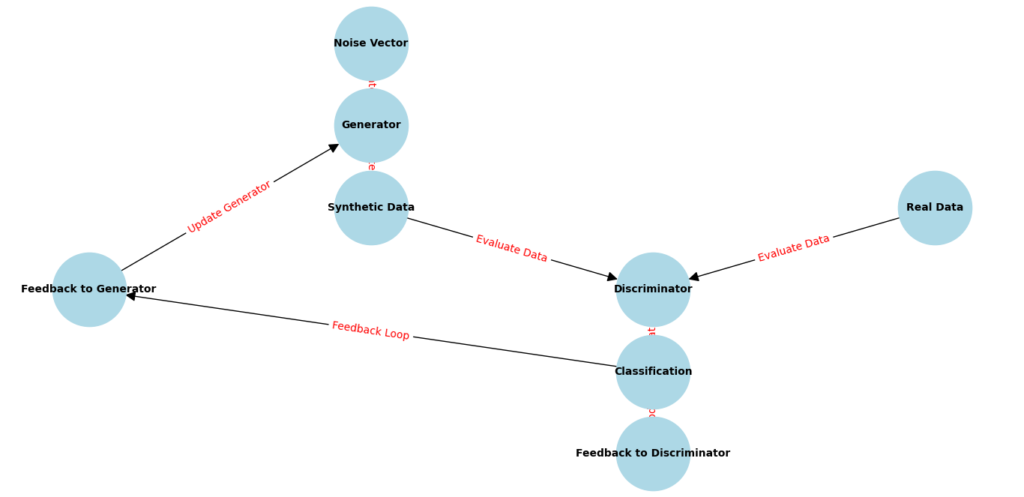

The Adversarial Process

The adversarial process in Generative Adversarial Networks (GANs) involves a continuous competition between two neural networks: the Generator and the Discriminator. Here’s a detailed breakdown of how this process works:

- Data Generation:

- The Generator creates synthetic data from random noise. This data is intended to mimic real data as closely as possible, such as images, text, or other types of information.

- Discriminator Evaluation:

- The synthetic data generated by the Generator is then passed to the Discriminator. The Discriminator also receives real data from the actual dataset. It’s tasked with determining which data is real and which is fake.

- Real vs. Fake Classification:

- The Discriminator analyzes both the real and synthetic data. It provides a classification or probability score indicating how likely it is that each piece of data is real. This helps in distinguishing genuine data from the synthetic data produced by the Generator.

- Feedback Loop:

- Based on the Discriminator’s classification, feedback is generated. If the Discriminator correctly identifies synthetic data as fake, it signals that the Generator’s output needs improvement. If the Discriminator fails to spot the fake data accurately, it suggests that the Generator is getting better at creating realistic data.

- Generator Update:

- The Generator uses the feedback from the Discriminator to refine its data generation process. It adjusts its neural network parameters to produce more realistic data in an attempt to fool the Discriminator. This is done through a learning process called backpropagation.

- Discriminator Update:

- Simultaneously, the Discriminator updates its parameters based on the feedback from its evaluations. It adjusts its neural network to improve its accuracy in detecting fake data. This ensures that the Discriminator becomes better at distinguishing between real and synthetic data.

- Iterative Improvement:

- This process of generating data, evaluating it, and updating both networks happens repeatedly in an iterative cycle. Each cycle helps both the Generator and Discriminator to improve. The Generator gets better at creating realistic data, while the Discriminator enhances its ability to spot fakes.

- Convergence:

- Over time, as the adversarial process continues, both networks reach a point where the Generator produces highly realistic data and the Discriminator becomes very skilled at detecting fake data. The goal is for the Generator to produce data so convincing that the Discriminator can no longer easily tell the difference.

Example of Training Process

Here’s how you might set up the training process in code:

def train_gan(generator, discriminator, gan, epochs, batch_size):

(x_train, _), (_, _) = tf.keras.datasets.mnist.load_data()

x_train = (x_train / 255.0) * 2 - 1 # Normalize to [-1, 1]

x_train = np.expand_dims(x_train, axis=-1)

for epoch in range(epochs):

# Train Discriminator

idx = np.random.randint(0, x_train.shape[0], batch_size)

real_imgs = x_train[idx]

fake_imgs = generator.predict(np.random.randn(batch_size, 100))

real_labels = np.ones((batch_size, 1))

fake_labels = np.zeros((batch_size, 1))

d_loss_real = discriminator.train_on_batch(real_imgs, real_labels)

d_loss_fake = discriminator.train_on_batch(fake_imgs, fake_labels)

# Train Generator

noise = np.random.randn(batch_size, 100)

g_loss = gan.train_on_batch(noise, real_labels)

if epoch % 1000 == 0:

print(f"Epoch {epoch} | Discriminator Loss: {0.5 * (d_loss_real[0] + d_loss_fake[0])} | Generator Loss: {g_loss}")

plot_generated_images(generator, epoch)

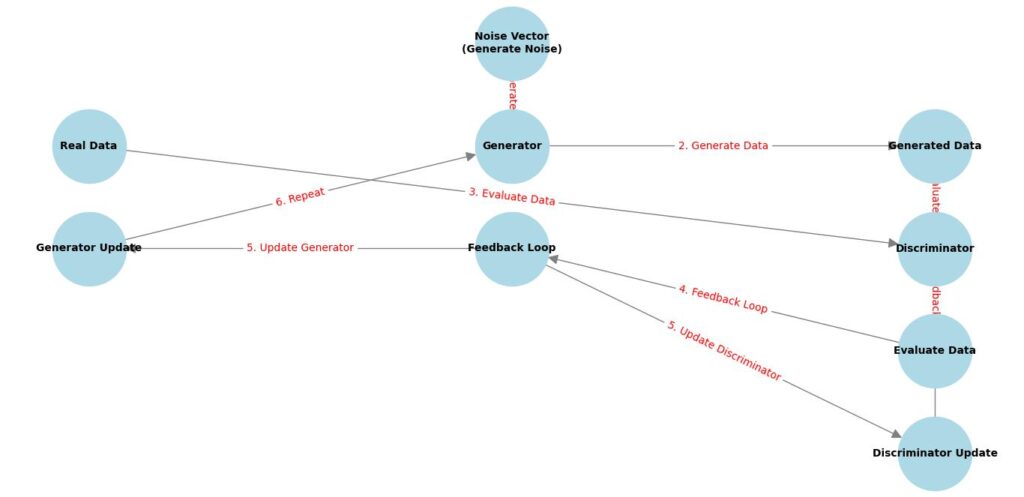

How Do Generative Adversarial Networks (GANs) Work?

Detailed Explanation of the Training Process

GANs are trained through a iterative process involving the following steps:

1. Generate Noise

Concept:

- Noise Vector: The Generative Adversarial Networks (GANs) process starts with a noise vector. This vector is generated randomly, often using a Gaussian (normal) distribution or a uniform distribution. It acts as the input to the Generator and is crucial because it provides the initial variability for data creation.

- Dimensionality: The dimensionality of the noise vector (e.g., 100 dimensions) determines the variety of features the Generator can capture. Larger dimensions allow for more complex data generation but can also increase training time.

Role:

- Seed for Data Generation: The noise vector is essentially a seed that the Generator uses to create synthetic data. By sampling different noise vectors, the Generator can produce diverse outputs.

2. Generate Data

Concept:

- Data Creation: The Generator processes the noise vector through several layers, which might include:

- Dense Layers: Fully connected layers where each neuron is connected to every neuron in the previous layer. They help in transforming the noise into a data format.

- Activation Functions: Functions like ReLU (Rectified Linear Unit) or Leaky ReLU introduce non-linearity, enabling the network to learn complex patterns.

- Reshaping Layers: In the case of image generation, the output of dense layers is reshaped into a 2D or 3D structure (e.g., an image).

- Objective: The Generator’s goal is to produce data that looks as realistic as possible. It adjusts its parameters (weights and biases) based on feedback from the Discriminator to improve the realism of the generated data.

Role:

- Realistic Data Generation: The ultimate goal of the Generator is to produce synthetic data that closely mimics the real data. Over time, with feedback from the Discriminator, the Generator learns to create increasingly convincing data.

3. Evaluate Data

Concept:

- Discriminator’s Task: The Discriminator’s job is to classify data as either real (from the actual dataset) or fake (produced by the Generator). It uses its neural network to make this classification.

- Flattening Layers: For image data, the Discriminator typically starts with a flattening layer to convert 2D data into 1D.

- Dense Layers: Followed by dense layers to process the data and make classification decisions.

- Output Layer: The final layer often uses a sigmoid activation function to output a probability score (between 0 and 1) indicating how likely it is that the data is real.

Role:

- Data Assessment: The Discriminator’s role is critical in providing the Generator with feedback. By evaluating the synthetic data, it helps in guiding the Generator to improve.

4. Feedback Loop

Concept:

- Feedback Mechanism: After evaluating the data, the Discriminator provides feedback to the Generator. This feedback is used to update the Generator’s parameters.

- Loss Functions: The feedback is quantified using loss functions:

- Generator Loss Function: Measures how well the Generator has managed to fool the Discriminator.

- Discriminator Loss Function: Measures how accurately the Discriminator has classified the data.

- Optimization: Both models are optimized using techniques like gradient descent or its variants (e.g., Adam optimizer). The gradients calculated from the loss functions are used to update the network parameters.

- Loss Functions: The feedback is quantified using loss functions:

Role:

- Continuous Improvement: The feedback loop is a dynamic process where each model improves based on the performance of the other. This iterative process continues until the Generator produces data that is indistinguishable from real data.

5. Update Models

Concept:

- Model Updates: Based on the feedback received, both the Generator and Discriminator adjust their parameters to improve their respective performance.

- Generator’s Update: The Generator’s parameters are adjusted to generate data that is more likely to be classified as real by the Discriminator.

- Discriminator’s Update: The Discriminator’s parameters are adjusted to become better at distinguishing real data from fake data.

Role:

- Parameter Adjustment: Regular updates help in fine-tuning the models. The Generator learns to produce better data, while the Discriminator improves its classification accuracy.

6. Repeat

Concept:

- Iterative Training: The training process involves repeating the steps of generating data, evaluating it, providing feedback, and updating models over multiple epochs.

- Epochs: An epoch refers to one complete pass through the entire dataset. GANs typically require many epochs to converge and produce high-quality results.

- Batch Size: Each iteration involves a batch of data. The batch size can affect the training stability and speed.

Role:

- Convergence: The repeating process helps both networks converge to a state where the Generator produces very realistic data and the Discriminator becomes proficient at distinguishing real from fake data.

Generation with Generative Adversarial Networks (GANs) as a Minimax Game

1. The Minimax Game Framework

Game Theory Overview:

- Game Theory: In game theory, a game consists of players who take actions to achieve their goals. Each player’s outcome depends not only on their own actions but also on the actions of the other players.

- Minimax Principle: This principle is used in zero-sum games where one player’s gain is another player’s loss. The objective is to minimize the maximum possible loss.

GANs as a Minimax Game:

- Players: In GANs, the two players are the Generator and the Discriminator. Each has distinct goals and strategies, leading to a competitive environment.

- Objective Function:

- Generator (G): The Generator aims to minimize the Discriminator’s ability to correctly classify fake data. Its strategy is to create data that is as realistic as possible.

- Discriminator (D): The Discriminator aims to maximize its accuracy in distinguishing between real and fake data. Its strategy is to become better at identifying the generated data.

2. Detailed Explanation of the Generator’s Goal

Function of the Generator:

- Data Creation: The Generator creates synthetic data (e.g., images) from random noise. The randomness in the noise allows the Generator to explore various possibilities in data creation.

- Learning Objective: The Generator’s goal is to produce data that is indistinguishable from real data. It wants the Discriminator to classify its output as real.

Loss Function of the Generator:

- Minimizing Loss: The Generator’s loss function is designed to decrease when the Discriminator is fooled. The loss function is often formulated as:

Training Dynamics:

- Parameter Updates: During training, the Generator adjusts its weights to improve the realism of its output. If the Discriminator successfully detects the generated data as fake, the Generator updates its parameters to make the data more convincing.

3. Detailed Explanation of the Discriminator’s Goal

Function of the Discriminator:

- Data Evaluation: The Discriminator evaluates data and classifies it as either real or fake. It is trained to distinguish between genuine data samples and those produced by the Generator.

- Learning Objective: The Discriminator’s goal is to maximize its classification accuracy. It wants to be able to correctly identify whether data is from the real dataset or generated by the Generator.

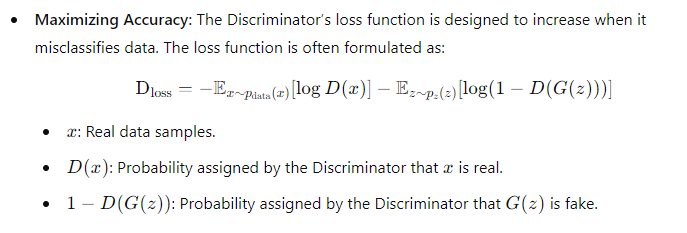

Loss Function of the Discriminator:

Training Dynamics:

- Parameter Updates: During training, the Discriminator adjusts its weights based on its classification performance. If it incorrectly classifies data, it updates its parameters to improve its detection capabilities.

4. The Feedback Loop in the Minimax Game

Iterative Improvement:

- Training Process: The training process involves a continuous feedback loop between the Generator and the Discriminator. This feedback loop is essential for the improvement of both networks.

- Generator’s Update: Receives feedback from the Discriminator on how well it fooled the network. It adjusts its parameters to produce better data.

- Discriminator’s Update: Receives feedback on its performance, including errors made in classification. It adjusts its parameters to improve its ability to detect fake data.

Training Steps:

- Generate Noise: The Generator produces synthetic data from random noise.

- Evaluate Data: The Discriminator evaluates this synthetic data and provides feedback.

- Update Models: Both the Generator and Discriminator update their parameters based on the feedback.

- Repeat: This process is repeated for many iterations, with each network continuously improving based on the other’s performance.

Convergence to Nash Equilibrium:

- Nash Equilibrium: The Nash Equilibrium in GANs represents a state where the Generator creates data so realistic that the Discriminator cannot reliably distinguish it from real data. At this equilibrium, the Generator’s goal of minimizing the Discriminator’s ability to classify data is achieved, and the Discriminator’s goal of maximizing its classification accuracy reaches its limit. This equilibrium is crucial for the effective functioning of GANs, as it ensures that the Generator produces high-quality data and the Discriminator learns to improve its detection capabilities.

The minimax game concept provides a structured way to understand the interaction between the Generator and Discriminator in GANs. It highlights the competitive nature of their relationship, where the Generator aims to produce realistic data, while the Discriminator aims to correctly classify data as real or fake. This interplay drives the learning process, leading to improved performance of both networks. The iterative feedback loop ensures that both the Generator and Discriminator continuously refine their strategies until they reach a point of equilibrium.

How are Generative Adversarial Networks (GANs) evaluated?

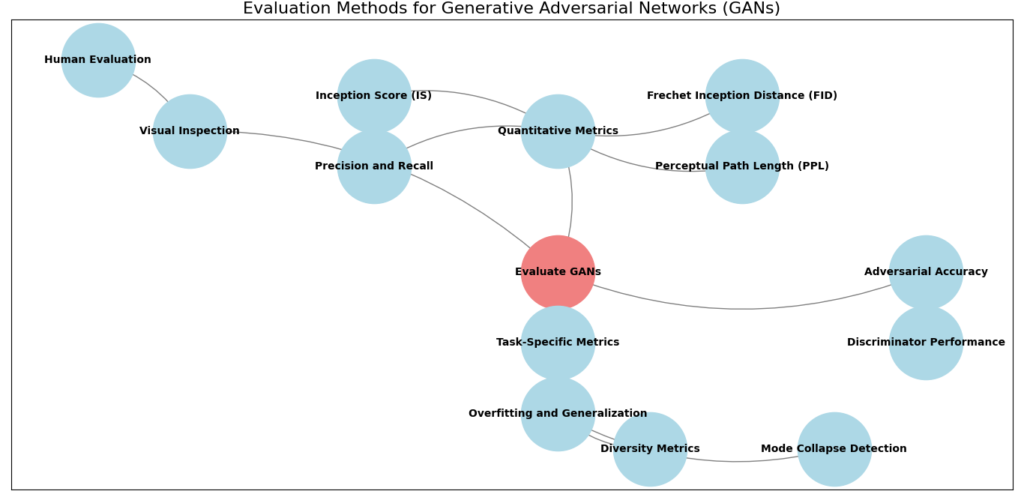

Evaluating Generative Adversarial Networks (GANs) involves assessing both the generator and the discriminator. Here are some common methods and metrics used:

1. Visual Inspection:

- Human Evaluation: Experts or users look at the generated images to see how real they look. Even though it’s a bit subjective, this approach gives clear, direct feedback on how good the generated images are.

2. Quantitative Metrics:

- Inception Score (IS): This score measures how realistic the generated images are and how diverse the generated images are. It uses a pre-trained neural network (like Inception) to classify generated images and evaluates the entropy of the predicted classes.

- Frechet Inception Distance (FID): FID compares the statistics of generated images to real images by calculating the distance between feature vectors of these images, which are obtained from a pre-trained network. Lower FID indicates closer resemblance to real data.

- Precision and Recall: These metrics assess the quality and diversity of generated images by comparing the distribution of generated images to real images.

- Perceptual Path Length (PPL): This measures the smoothness and consistency of the image transformations in the latent space. Lower PPL values indicate smoother and more consistent transformations.

3. Adversarial Accuracy:

- Discriminator Performance: Evaluating the accuracy of the discriminator in distinguishing real from fake images can give insight into the GAN’s training progress. A balanced GAN should have a discriminator that is not easily fooled and a generator that can produce realistic images.

4. Overfitting and Generalization:

- Mode Collapse Detection: Ensuring that the generator produces diverse images and does not collapse to generating only a few types of images.

- Diversity Metrics: Assessing the variety in the generated images to ensure the GAN has learned to generate a wide range of outputs, rather than a limited subset.

5. Task-Specific Metrics:

- Application-Based Evaluation: Depending on the application, different metrics may be used. For instance, in medical imaging, experts might evaluate the clinical relevance of generated images. In text generation, coherence and relevance to the given context might be assessed.

6. User Studies:

- User Feedback: Collecting feedback from users on the quality, realism, and usefulness of the generated outputs can provide valuable insights, especially for applications in creative fields like art and design.

Types of Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) come in various forms, each tailored to different tasks and improvements. Here’s an in-depth look at some of the prominent types:

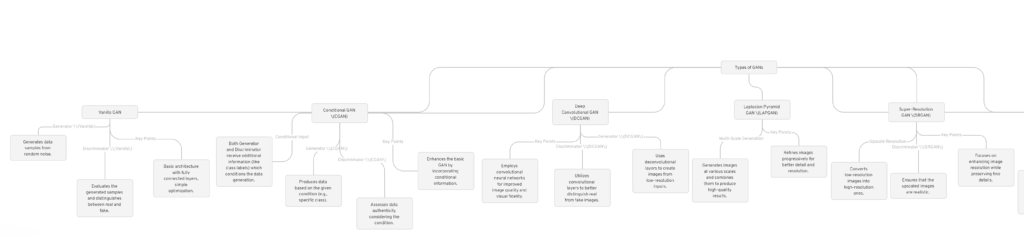

1. Vanilla GAN

The Vanilla GAN represents the foundational architecture of GANs. It consists of two basic neural networks: the Generator and the Discriminator.

- Generator: Takes random noise as input and generates data samples.

- Discriminator: Evaluates the generated samples and determines whether they are real or fake.

Key Points:

- Architecture: Uses fully connected layers (multi-layer perceptrons) in both the Generator and Discriminator.

- Training: Simple optimization using stochastic gradient descent to minimize the difference between generated and real data.

Applications: Basic image generation and introductory GAN tasks.

2. Conditional GAN (CGAN)

Conditional GANs extend Vanilla GANs by introducing additional information into the training process.

- Conditional Input: Both the Generator and Discriminator receive extra input, such as class labels or other data, which conditions the data generation.

Key Points:

- Generator: Produces data that corresponds to the given condition (e.g., generating images of a specific class).

- Discriminator: Assesses the authenticity of data based on the given condition.

Applications: Tasks requiring data generation based on specific conditions, like generating images of specific objects or applying labels to generated images.

3. Deep Convolutional GAN (DCGAN)

Deep Convolutional GANs use convolutional neural networks (ConvNets) instead of fully connected layers, enhancing the quality of generated images.

- Architecture: Utilizes convolutional layers with techniques like batch normalization and leaky ReLU activations. Max pooling is replaced by strided convolutions.

Key Points:

- Generator: Employs deconvolutional layers to generate images from low-resolution inputs.

- Discriminator: Uses convolutional layers to improve the accuracy of distinguishing real from fake images.

Applications: High-quality image generation, including realistic images and improved visual fidelity.

4. Laplacian Pyramid GAN (LAPGAN)

Laplacian Pyramid GANs use a multi-scale approach to generate images at various levels of detail.

- Pyramid Structure: Images are processed at different scales or levels, starting from coarse to fine details.

Key Points:

- Multi-Scale Generation: Generates images at different resolutions and then combines them to produce high-quality final results.

- Detailed Reconstruction: Enhances image quality by progressively refining the generated images.

Applications: High-resolution image generation, especially useful for creating detailed and realistic images from coarse inputs.

5. Super-Resolution GAN (SRGAN)

Super-Resolution GANs focus on enhancing the resolution of images, converting low-resolution images into high-resolution ones.

- Architecture: Combines a deep convolutional network with adversarial training to improve image resolution.

Key Points:

- Generator: Upscales low-resolution images while maintaining fine details.

- Discriminator: Ensures that upscaled images appear realistic and closely resemble high-resolution images.

Applications: Improving image quality in fields like medical imaging, satellite imagery, and any application where high-resolution images are crucial.

6. CycleGAN

CycleGANs enable image-to-image translation without paired examples, making them suitable for tasks where matching input-output pairs are not available.

- Cycle Consistency: Ensures that translating images to one domain and then back to the original domain results in the same image.

Key Points:

- Bidirectional Translation: Capable of converting images between different domains, such as converting horse images to zebra images and vice versa.

- Unpaired Training: Does not require aligned pairs of images for training.

Applications: Artistic style transfer, domain adaptation, and scenarios where paired data is not available.

7. Progressive Growing GAN (PGGAN)

Progressive Growing GANs improve the stability and quality of generated images by progressively increasing the complexity of the network.

- Progressive Training: Starts with low-resolution images and gradually adds layers to increase resolution during training.

Key Points:

- Stable Training: Reduces training instability and improves the quality of generated images by introducing new layers incrementally.

- High-Resolution Generation: Achieves superior results in generating high-resolution images.

Applications: Generating high-resolution and photorealistic images, including complex textures and detailed scenes.

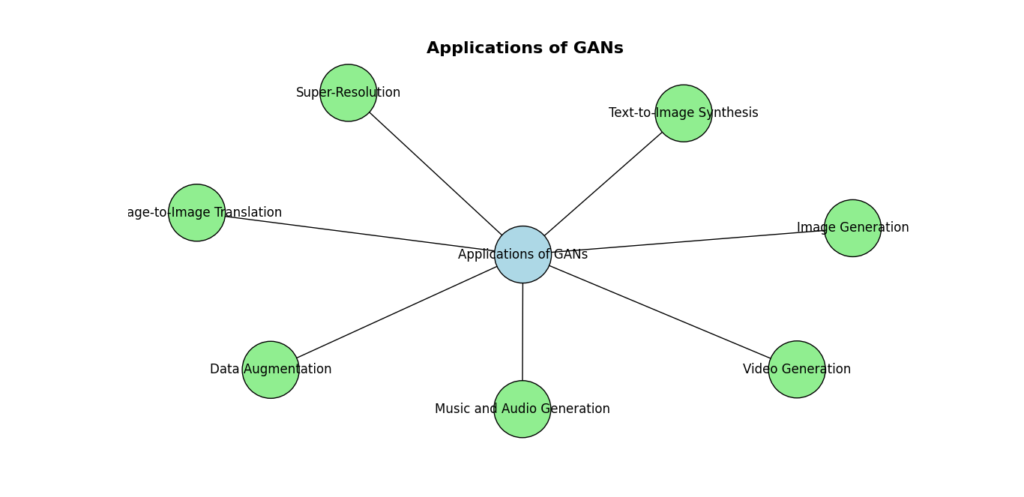

Applications of Generation with Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) have revolutionized various domains by enabling the creation of highly realistic data and enhancing existing data through innovative techniques. Here’s a detailed look at their diverse applications:

Image Generation

Generative Adversarial Networks (GANs) excel in generating entirely new images from scratch. This capability is widely used in creative industries such as art and design. For example, GANs can create photorealistic images of landscapes, people, or imaginary creatures. They can also generate artwork and designs that are not limited by the constraints of traditional artistic methods, offering new creative possibilities.

Data Augmentation

In machine learning, having a large and diverse dataset is crucial for training strong models. GANs address this need by generating additional data that mirrors the characteristics of existing datasets. This is particularly useful when working with small datasets, as the augmented data helps in improving the performance and generalization of machine learning models.

Image-to-Image Translation

Generative Adversarial Networks (GANs) are highly effective in transforming images from one domain to another. This includes applications like converting sketches into detailed photographs, colorizing black-and-white images, and applying artistic styles to photos. This ability to translate images across domains is valuable in fields such as graphic design, entertainment, and historical photo restoration.

Text-to-Image Synthesis

Text-to-image synthesis involves generating images based on textual descriptions. For instance, Generative Adversarial Networks (GANs) can create illustrations from written narratives or generate visuals that match specific textual content. This technology is beneficial for creating visual content for books, advertisements, and any other medium where visual representation is derived from textual information.

Super-Resolution

GANs enhance the resolution of images, a process known as super-resolution. This involves taking low-resolution images and converting them into high-resolution versions with improved details. Super-resolution is particularly useful in medical imaging, where clear and detailed images are essential for accurate diagnosis, as well as in satellite photography for better geographical analysis.

Video Generation

In video production, GANs can create realistic video sequences, which is great for filmmakers, animators, and video game developers. They can make new video clips from scratch or improve existing footage, providing a powerful tool for creating high-quality visual content.

Music and Audio Generation

Generative Adversarial Networks (GANs) are not limited to visual data; they can also be applied to audio generation. This includes creating new music compositions, sound effects, and background scores. In the audio industry, GANs offer new ways to generate and manipulate sound, expanding creative possibilities and providing tools for composers, sound designers, and filmmakers.

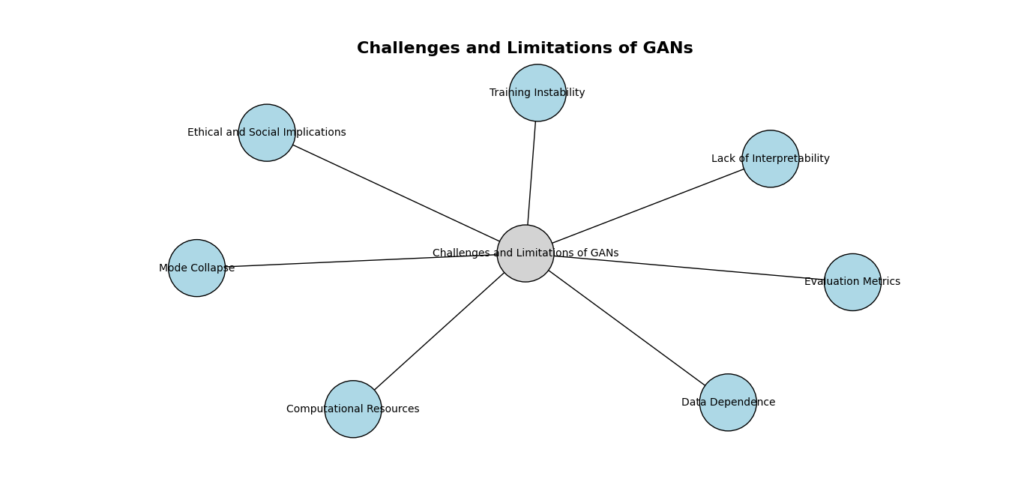

Challenges and Limitations of Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) are powerful tools in AI, but they come with several challenges that can make them difficult to work with. Here’s a closer look at the main issues you might face with GANs:

1. Training Instability

What It Means: Training Generative Adversarial Networks (GANs) can be unstable. The Generator and Discriminator are constantly competing, which can lead to problems like mode collapse or the training process not settling down.

Details:

- Mode Collapse: The Generator might produce only a few types of outputs repeatedly, rather than capturing the full range of possible results.

- Oscillations and Divergence: The training might not settle into a stable pattern, causing the model to jump between different states or never fully converge.

Possible Solutions:

- Use advanced training techniques and network designs, such as gradient penalties or specific loss functions, to stabilize the training process.

2. Evaluation Metrics

What It Means: It’s tough to measure how well a GAN is performing because traditional metrics (like accuracy) don’t apply well to this kind of model.

Details:

- Quantitative Metrics: Measures like Inception Score (IS) and Fréchet Inception Distance (FID) are used, but they might not always reflect the true quality of the generated images.

- Qualitative Evaluation: Often, human judgment is needed to assess the quality of the generated images, which can be subjective and inconsistent.

Possible Solutions:

- Develop better metrics or use a combination of methods to evaluate the quality of the GAN outputs more reliably.

3. Computational Resources

What It Means: Training GANs, especially for complex tasks or high-quality outputs, requires a lot of computational power, which can be a barrier.

Details:

- Resource Intensive: Training can be very time-consuming and costly, requiring powerful hardware and lots of memory.

- Scalability Issues: As you increase the size or complexity of the data, the resources needed also grow.

Possible Solutions:

- Use more efficient algorithms and hardware, or consider cloud-based services to manage the computational load.

4. Mode Collapse

What It Means: Mode collapse happens when the Generator produces very similar outputs, missing out on the variety in the data it’s supposed to learn.

Details:

- Limited Diversity: The model may fail to generate diverse samples and instead focus on a narrow set of results.

- Impact on Applications: This can be problematic in areas where diversity is important, such as creative work or simulations.

Possible Solutions:

- Apply techniques like minibatch discrimination or regularization to encourage the Generator to produce a wider range of outputs.

5. Lack of Interpretability

What It Means: GANs are often seen as “black boxes,” meaning it’s hard to understand how they make decisions or generate outputs.

Details:

- Complex Models: The internal workings of Generative Adversarial Networks (GANs)ANs can be complex and opaque.

- Debugging Issues: It can be challenging to pinpoint issues or understand why the model is behaving a certain way.

Possible Solutions:

- Use methods to visualize and interpret the internal workings of GANs, or develop more transparent models.

6. Ethical and Social Implications

What It Means: GANs can be used in ways that raise ethical and social concerns, such as creating misleading or harmful content.

Details:

- Deepfakes: Generative Adversarial Networks (GANs) can generate realistic but fake images or videos, which can be used to spread misinformation or harm individuals.

- Privacy Concerns: There are risks related to generating synthetic data that mimics real people or sensitive information.

Possible Solutions:

- Implement guidelines and safeguards to prevent misuse and ensure GAN technology is used responsibly.

7. Data Dependence

What It Means: Generative Adversarial Networks (GANs) depend heavily on the data they are trained on. If the data is biased or insufficient, it affects how well the GAN performs.

Details:

- Bias in Data: If the training data has biases, the GAN might reproduce those biases in its outputs.

- Data Scarcity: Limited or poor-quality data can prevent the Generator from producing high-quality results.

Possible Solutions:

- Ensure training data is diverse and representative, and use techniques to address any biases present in the data.

Real-World Project: Image Generation with Generative Adversarial Networks (GANs)

Let’s create a simple image generation app using a GAN with Python and TensorFlow/Keras. We’ll follow these steps:

- Define the Generator and Discriminator models.

- Compile the GAN model.

- Train the GAN.

- Generate images.

Here’s the complete code:

1. Define the Generator and Discriminator Models

First, we need to define the generator and discriminator models. The generator will take random noise as input and generate images, while the discriminator will take an image as input and classify it as real or fake.

Import Necessary Libraries

import tensorflow as tf

from tensorflow.keras.layers import Dense, Reshape, Flatten, LeakyReLU, Dropout

from tensorflow.keras.layers import Conv2D, Conv2DTranspose

from tensorflow.keras.models import Sequential

from tensorflow.keras.optimizers import Adam

import numpy as np

import matplotlib.pyplot as plt

Here we import necessary libraries:

- TensorFlow and Keras for building and training neural networks.

- NumPy for numerical operations.

- Matplotlib for plotting images.

Define the Generator

The generator creates images from random noise. It aims to produce images that the discriminator cannot distinguish from real ones.

def build_generator():

model = Sequential()

model.add(Dense(256 * 7 * 7, activation="relu", input_dim=100))

model.add(Reshape((7, 7, 256)))

model.add(Conv2DTranspose(128, kernel_size=3, strides=2, padding="same"))

model.add(LeakyReLU(alpha=0.01))

model.add(Conv2DTranspose(64, kernel_size=3, strides=1, padding="same"))

model.add(LeakyReLU(alpha=0.01))

model.add(Conv2DTranspose(1, kernel_size=3, strides=2, padding="same", activation="tanh"))

return model

This function defines a generator model in a GAN that transforms a random noise vector into an image. Let’s break down each part of the code:

- Sequential Model Initialization:

model = Sequential()

This line initializes a sequential model, which is a linear stack of layers.

Dense Layer:

model.add(Dense(256 * 7 * 7, activation="relu", input_dim=100))

Here, a dense layer is added with 256 * 7 * 7 neurons. The input to this layer is a 100-dimensional noise vector (input_dim=100). The ReLU activation function introduces non-linearity, allowing the model to learn complex patterns. This layer projects the 100-dimensional noise vector into a higher-dimensional space (256 * 7 * 7 = 12544 dimensions).

Reshape Layer:

model.add(Reshape((7, 7, 256)))

This layer reshapes the output of the dense layer into a 7x7x256 tensor. This transformation is necessary to convert the flat vector from the dense layer into a 3D tensor suitable for processing by convolutional layers.

First Conv2DTranspose Layer:

model.add(Conv2DTranspose(128, kernel_size=3, strides=2, padding="same"))

This layer upsamples the tensor from 7x7x256 to 14x14x128. It uses 128 filters with a kernel size of 3, strides of 2, and ‘same’ padding to ensure the output has the same spatial dimensions as the input. This upsampling helps increase the resolution of the image.

LeakyReLU Activation:

model.add(LeakyReLU(alpha=0.01))

This activation function introduces a small negative slope (alpha=0.01) when the unit is not active, preventing the “dying ReLU” problem, where neurons stop learning.

Second Conv2DTranspose Layer:

model.add(Conv2DTranspose(64, kernel_size=3, strides=1, padding="same"))

This layer maintains the spatial dimensions (14×14) but reduces the depth from 128 to 64. It uses 64 filters, a kernel size of 3, strides of 1, and ‘same’ padding.

Second LeakyReLU Activation:

model.add(LeakyReLU(alpha=0.01))

This is another instance of the LeakyReLU activation function to introduce non-linearity and prevent the dying ReLU problem.

Final Conv2DTranspose Layer:

model.add(Conv2DTranspose(1, kernel_size=3, strides=2, padding="same", activation="tanh"))

This layer upsamples the tensor from 14x14x64 to the final output size of 28x28x1. It uses 1 filter (since the output is a grayscale image), a kernel size of 3, strides of 2, ‘same’ padding, and the tanh activation function. The tanh activation outputs values in the range [-1, 1], which is suitable for image data.

Define the Discriminator

The discriminator classifies images as real or fake. It learns to distinguish between genuine images from the dataset and fake images generated by the generator.

def build_discriminator():

model = Sequential()

model.add(Conv2D(64, kernel_size=3, strides=2, padding="same", input_shape=(28, 28, 1)))

model.add(LeakyReLU(alpha=0.01))

model.add(Dropout(0.3))

model.add(Conv2D(128, kernel_size=3, strides=2, padding="same"))

model.add(LeakyReLU(alpha=0.01))

model.add(Dropout(0.3))

model.add(Flatten())

model.add(Dense(1, activation="sigmoid"))

return model

This function defines a discriminator model in a GAN that classifies images as real or fake. Let’s break down each part of the code:

Sequential Model Initialization:

model = Sequential()

This initializes a sequential model, which is a linear stack of layers.

First Conv2D Layer:

model.add(Conv2D(64, kernel_size=3, strides=2, padding="same", input_shape=(28, 28, 1)))

This layer applies 64 convolution filters with a kernel size of 3×3 to the input image. The strides parameter set to 2 means the filter moves 2 steps at a time, effectively downsampling the image by half. ‘Same’ padding ensures the output has the same spatial dimensions as the input. The input shape is specified as (28, 28, 1) for 28×28 grayscale images.

LeakyReLU Activation:

model.add(LeakyReLU(alpha=0.01))

This activation function introduces a small negative slope (alpha=0.01) when the unit is not active, preventing the “dying ReLU” problem, where neurons stop learning.

Dropout Layer:

model.add(Dropout(0.3))

This layer randomly sets 30% of the input units to 0 during each forward pass during training, which helps prevent overfitting by making the model more robust.

Second Conv2D Layer:

model.add(Conv2D(128, kernel_size=3, strides=2, padding="same"))

This layer applies 128 convolution filters with a kernel size of 3×3 and strides of 2, further downsampling the image. ‘Same’ padding is used again to maintain spatial dimensions.

Second LeakyReLU Activation:

model.add(LeakyReLU(alpha=0.01))

Another instance of the LeakyReLU activation function to introduce non-linearity and prevent the dying ReLU problem.

Second Dropout Layer:

model.add(Dropout(0.3))

This layer again applies dropout, randomly setting 30% of the input units to 0 during each forward pass during training.

Flatten Layer:

model.add(Flatten())

This layer flattens the 3D tensor output from the convolutional layers into a 1D tensor, making it suitable for the dense layer.

Dense Layer:

model.add(Dense(1, activation="sigmoid"))

This final layer consists of a single neuron with a sigmoid activation function. The sigmoid function outputs a probability between 0 and 1, representing the likelihood that the input image is real.

Compile the GAN Model

We compile the generator and discriminator and then combine them into a GAN model.

# Build and compile the discriminator

discriminator = build_discriminator()

discriminator.compile(loss="binary_crossentropy", optimizer=Adam(), metrics=["accuracy"])

Building and Compiling the Discriminator:

- The

build_discriminator()function is called to create the discriminator model. - The model is compiled using the

binary_crossentropyloss function, which is suitable for binary classification tasks. - The Adam optimizer is used for its efficiency and adaptive learning rate properties.

- The accuracy metric is included to monitor the classification accuracy during training.

# Build the generator

generator = build_generator()

- The

build_generator()function is called to create the generator model.

# Create the GAN

gan = Sequential()

gan.add(generator)

discriminator.trainable = False

gan.add(discriminator)

gan.compile(loss="binary_crossentropy", optimizer=Adam())

Creating and Compiling the GAN Model:

- A sequential model is initialized for the GAN.

- The generator is added to the GAN model.

- The discriminator’s

trainableattribute is set toFalse. This ensures that only the generator is trained when the GAN model is trained, preventing the discriminator from updating its weights during this process. - The discriminator is added to the GAN model.

- The GAN model is compiled using the

binary_crossentropyloss function and the Adam optimizer.

Train the GAN

The training process alternates between training the discriminator and the generator.

def train_gan(gan, generator, discriminator, epochs=10000, batch_size=128, sample_interval=1000):

(X_train, _), (_, _) = tf.keras.datasets.mnist.load_data()

X_train = X_train / 127.5 - 1.0

X_train = np.expand_dims(X_train, axis=3)

real = np.ones((batch_size, 1))

fake = np.zeros((batch_size, 1))

Data Preparation:

- The MNIST dataset is loaded and normalized to the range [-1, 1] to match the tanh activation output in the generator.

- The dataset is expanded to add a channel dimension, converting it to shape (28, 28, 1).

- Arrays for real and fake labels are created, filled with ones and zeros respectively.

for epoch in range(epochs):

idx = np.random.randint(0, X_train.shape[0], batch_size)

imgs = X_train[idx]

noise = np.random.normal(0, 1, (batch_size, 100))

gen_imgs = generator.predict(noise)

Training Loop Initialization:

- The training process begins with a loop running for a specified number of epochs.

- A random batch of real images is selected from the training dataset.

- Random noise is generated and fed into the generator to produce fake images.

d_loss_real = discriminator.train_on_batch(imgs, real)

d_loss_fake = discriminator.train_on_batch(gen_imgs, fake)

d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

Training the Discriminator:

- The discriminator is trained on real images with real labels and on fake images with fake labels.

- The loss for both real and fake image training steps is calculated.

- The total discriminator loss is the average of the two losses.

noise = np.random.normal(0, 1, (batch_size, 100))

g_loss = gan.train_on_batch(noise, real)

Training the Generator:

- New random noise is generated and used to train the GAN.

- The GAN is trained on this noise with the labels set as real. This step updates only the generator’s weights since the discriminator is not trainable in the GAN model.

if epoch % sample_interval == 0:

print(f"{epoch} [D loss: {d_loss[0]} | D accuracy: {100*d_loss[1]}] [G loss: {g_loss}]")

sample_images(generator)

- Monitoring and Sampling:

- At specified intervals (every

sample_intervalepochs), the current epoch number, discriminator loss and accuracy, and generator loss are printed. - A function

sample_images(generator)is called to generate and save sample images from the generator.

- At specified intervals (every

This code demonstrates the process of alternating training between the discriminator and the generator, allowing the GAN to improve over time.

Sample Images Function

This function helps visualize the generated images during training.

def sample_images(generator, image_grid_rows=4, image_grid_columns=4):

noise = np.random.normal(0, 1, (image_grid_rows * image_grid_columns, 100))

gen_imgs = generator.predict(noise)

gen_imgs = 0.5 * gen_imgs + 0.5

fig, axs = plt.subplots(image_grid_rows, image_grid_columns, figsize=(4, 4), sharey=True, sharex=True)

cnt = 0

for i in range(image_grid_rows):

for j in range(image_grid_columns):

axs[i, j].imshow(gen_imgs[cnt, :, :, 0], cmap='gray')

axs[i, j].axis('off')

cnt += 1

plt.show()

Generating and Normalizing Images

- Noise Generation:

- Create a batch of random noise vectors to feed into the generator. The number of noise vectors matches the total number of images to be displayed in a grid (

image_grid_rows * image_grid_columns).

- Create a batch of random noise vectors to feed into the generator. The number of noise vectors matches the total number of images to be displayed in a grid (

- Image Generation:

- Generate images from the noise vectors using the generator model.

- Normalize the generated images from the range [-1, 1] to [0, 1] for visualization by applying the transformation

0.5 * gen_imgs + 0.5.

Creating and Displaying the Grid

- Grid Setup:

- Create a grid of subplots (

fig, axs) to display the generated images. The grid size is defined byimage_grid_rowsandimage_grid_columns. - The

figsizeparameter sets the size of the entire figure, andshareyandsharexensure that all subplots share the same x and y axes.

- Create a grid of subplots (

- Plotting Images:

- Loop through the grid and display each image in the corresponding subplot.

- Use

imshowto render each image in grayscale (cmap='gray'), and turn off the axis labels and ticks withaxis('off').

- Display:

- Show the complete grid of generated images with

plt.show().

- Show the complete grid of generated images with

Generate Images

After training, generate new images using the trained generator.

def generate_images(generator, num_images=25):

noise = np.random.normal(0, 1, (num_images, 100))

gen_imgs = generator.predict(noise)

gen_imgs = 0.5 * gen_imgs + 0.5

fig, axs = plt.subplots(5, 5, figsize=(5, 5), sharey=True, sharex=True)

cnt = 0

for i in range(5):

for j in range(5):

axs[i, j].imshow(gen_imgs[cnt, :, :, 0], cmap='gray')

axs[i, j].axis('off')

cnt += 1

plt.show()

generate_images(generator, num_images=25)

Generating and Normalizing Images

- Noise Generation:

- Create a set of random noise vectors. The number of noise vectors (

num_images) determines how many images you want to generate.

- Create a set of random noise vectors. The number of noise vectors (

- Image Generation:

- Use the trained generator to produce images from the noise vectors.

- Normalize the generated images from the range

[-1, 1]to[0, 1]to make them suitable for display with the transformation0.5 * gen_imgs + 0.5.

Creating and Displaying the Grid

- Grid Setup:

- Set up a grid of subplots (

fig, axs) to display the images. The grid is 5×5, resulting in a total of 25 images displayed. figsizespecifies the size of the entire figure, whileshareyandsharexensure that all subplots share the same axes.

- Set up a grid of subplots (

- Plotting Images:

- Loop through the grid to place each generated image in the corresponding subplot.

- Use

imshowto display each image in grayscale (cmap='gray'). - Hide the axis labels and ticks with

axis('off').

- Display:

- Show the complete grid of generated images using

plt.show().

- Show the complete grid of generated images using

Function Execution

- Generate and Visualize:

- Call the

generate_imagesfunction with thegeneratorandnum_images=25to generate and display a 5×5 grid of images created by the GAN.

- Call the

This function visualizes the output of the trained generator, allowing you to see how well the GAN has learned to produce realistic images.

Conclusion

Generative Adversarial Networks, or GANs, have changed the game in the world of artificial intelligence. By using two smart systems that compete with each other, GANs can create amazingly realistic and high-quality data. Whether it’s improving the quality of images, generating new artwork, helping in medical imaging, or creating synthetic data for research, GANs are making a big impact in many areas.

However, GANs also come with challenges. They can be tricky to train, sometimes produce repetitive results, and require a lot of computer power. There are also concerns about the misuse of GANs, such as creating fake videos or images, which means we need to use this technology responsibly.

Despite these challenges, the future of GANs looks very bright. Researchers are constantly working on making GANs more stable and efficient. This means we’ll see even more amazing uses for GANs in the coming years, helping to drive creativity and solve problems in new ways.

In short, GANs show us just how far artificial intelligence has come. They highlight the incredible potential of using competition between smart systems to push the limits of what we can create. As we continue to explore and improve this technology, GANs will keep playing a key role in advancing AI, opening up exciting possibilities for the future.

External Resources

Research Papers and Articles

- Original GAN Paper by Ian Goodfellow et al.

- Title: “Generative Adversarial Nets”

- URL: arXiv:1406.2661

- NIPS 2014 Paper

- Title: “Generative Adversarial Networks”

- Conference: Neural Information Processing Systems (NIPS) 2014

- URL: NIPS 2014 Paper

- Survey of GANs

- Title: “A Survey on Generative Adversarial Networks: Fundamentals, Applications, and Future Research Directions”

- URL: arXiv:1906.01529

Frequently Asked Questions

1. What are Generative Adversarial Networks (GANs)?

GANs are a type of artificial intelligence model designed to generate new data that resembles a given dataset. They consist of two parts: the generator, which creates data, and the discriminator, which evaluates how real or fake the generated data is.

2. Who invented GANs?

GANs were introduced by Ian Goodfellow and his colleagues in 2014.

3. How do GANs work?

GANs work by having two neural networks compete against each other. The generator creates data, and the discriminator evaluates it. Over time, both networks improve, leading to the generation of increasingly realistic data.

4. What are the challenges in training GANs?

Training GANs can be difficult due to issues like training instability, mode collapse (where the generator produces limited varieties of data), and the high computational power required.

7. What is mode collapse in GANs?

Mode collapse occurs when the generator produces a limited variety of outputs instead of generating a diverse set of data that accurately reflects the training data distribution.

8. How are GANs evaluated?

Evaluating GANs can be challenging. Common methods include using metrics like Inception Score (IS) and Fréchet Inception Distance (FID) to measure the quality and diversity of the generated data.

Leave a Reply