How to Build a Multilingual Chatbot with LLMs

Introduction

What is a Multilingual Chatbot?

A multilingual chatbot is a smart AI system that can chat with people in multiple languages. Imagine having a virtual assistant that understands and responds to you in your own language, whether it’s English, Spanish, Chinese, or any other language. This is especially handy for businesses that serve customers all around the world.

These chatbots help break down language barriers, making it easier for companies to connect with their diverse customer base. Whether they’re answering questions, providing information, or just having a friendly chat, multilingual chatbots can make interactions smoother and more enjoyable for everyone involved. This improved communication can lead to happier customers and stronger relationships with them.

Why Use Large Language Models (LLMs)?

Large Language Models like GPT-3 and GPT-4 have been trained on huge amounts of text in many languages, which makes them well suited to chatbots that must handle more than one language. Compared with older rule-based or statistical chatbots, LLMs are much better at understanding and using the subtle differences and complexities of different languages.

When you use LLMs to build your chatbot, it means your chatbot can naturally understand and respond in several languages. This makes it a fantastic tool for businesses that want to communicate smoothly and effectively with people from different regions.

Goals of This Guide

In this guide, you will learn how to build a multilingual chatbot using LLMs. By the end of this tutorial, you should be able to:

- Understand the basics of LLMs and how they handle multiple languages.

- Plan and design a multilingual chatbot.

- Develop the chatbot using Python and integrate it with an LLM API.

- Test, deploy, and maintain your chatbot.

Prerequisites: Basic knowledge of Python programming and familiarity with natural language processing (NLP) concepts will be helpful. If you’re new to these topics, don’t worry; we’ll provide explanations and resources to get you up to speed.

Understanding Large Language Models

Overview of LLMs

Large Language Models (LLMs) are smart AI systems that can understand and create text that sounds like a real person wrote it. They’ve been trained on lots of different text, so they’re good at handling various languages and situations. Here are some popular examples:

- GPT-3 and GPT-4 by OpenAI: These models are great at writing text that makes sense and fits well with the context. They can come up with natural-sounding responses in many languages.

- BERT by Google: BERT is really good at understanding the meaning of words based on their context in sentences. It’s often used for answering questions and understanding language better.

- T5 by Google: T5, or Text-To-Text Transfer Transformer, is quite flexible. It handles a lot of language tasks by turning them into text problems, making it useful for various language-related jobs.

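Generative models like GPT and T5 don’t just create text: they can also translate languages and summarize information, and GPT-style models can hold conversations. Encoder models like BERT are used instead to understand and classify text. Together, these capabilities are why LLMs are so handy for making advanced chatbots and other language-based AI tools.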


How LLMs Handle Multilingual Capabilities

LLMs can understand and create text in many languages because they’re trained on a wide range of language data. This training helps them get the hang of different languages and their unique quirks. Here’s how they manage to handle multiple languages:

- Training on Diverse Data: LLMs learn from text in lots of different languages. This helps them pick up on how different languages are structured and how they work.

- Transfer Learning: This technique lets LLMs use what they’ve learned from one language to help with another. For instance, if the model knows English well, it can use some of that knowledge to understand and generate text in another language it’s less familiar with.

- Zero-Shot Learning: Sometimes, LLMs can handle languages they haven’t seen much of. They use their general knowledge about language to make smart guesses about how to work with these less familiar languages.

These skills make LLMs great for building multilingual chatbots. They can chat with users in different languages, making the experience smooth and natural no matter what language the user speaks.


Challenges in Multilingual NLP

Even though LLMs are powerful, building a multilingual chatbot with these models can be tricky. Here are some of the main challenges:

- Language-Specific Nuances: Each language has its own grammar rules, idioms, and expressions. Getting these details right is important for clear communication. For example, phrases that make sense in one language might not translate well into another.

- Resource Availability: Some languages don’t have a lot of digital text available. This lack of data can make it harder to train models effectively for those languages. As a result, models might work better in languages with more data.

- Preprocessing Needs: Different languages need different preprocessing steps. For instance, breaking text into smaller parts (tokenization) and standardizing it (normalization) need to be adjusted for each language. This helps ensure the text is handled correctly for accurate understanding and response.
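Here is a minimal illustration of why tokenization has to be adapted per language. It uses only Python’s built-in str.split. The helper name whitespace_tokens is hypothetical. Chinese and Japanese do not put spaces between words, so splitting on whitespace returns the whole Chinese sentence as a single token. In practice you would use a language-specific segmenter for such languages (for example, jieba for Chinese), or rely on the LLM’s own subword tokenizer.

```python
def whitespace_tokens(text: str) -> list[str]:
    # Naive tokenizer: split wherever there is whitespace (punctuation stays attached)
    return text.split()

print(whitespace_tokens("How are you today?"))  # ['How', 'are', 'you', 'today?']
print(whitespace_tokens("你今天好吗？"))  # ['你今天好吗？'] (one token for the whole sentence)
```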

Understanding and tackling these challenges is key to building a successful multilingual chatbot. By being aware of these issues, you can plan and develop your chatbot to handle multiple languages more effectively.

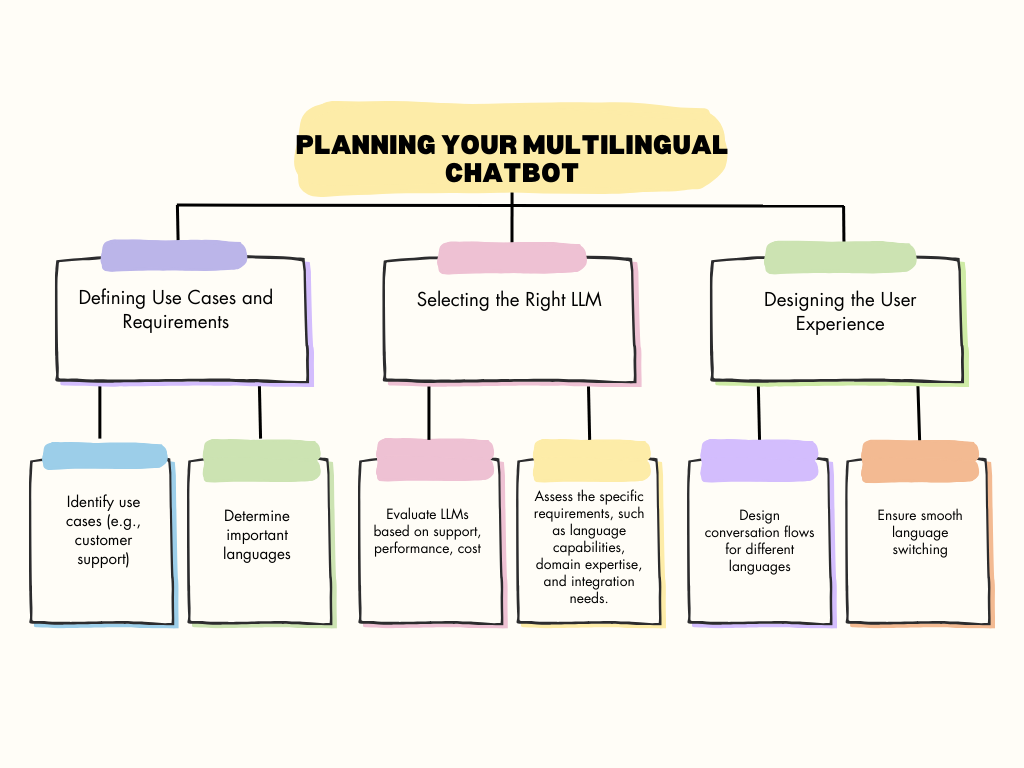

Planning Your Multilingual Chatbot

Defining Use Cases and Requirements

When you’re building a multilingual chatbot, the first thing to do is clearly outline what you want it to do. Here’s an easy way to approach this:

- Identify Primary Use Cases: Decide what you want your chatbot to handle. Will it be for customer support, giving out information, helping with transactions, or something else? Knowing what you want your chatbot to do will shape how you design and build it.

- Determine Language Needs: Think about which languages your chatbot should support based on your audience. If you have users from different countries, you might need to include several languages. Focus on the most important languages first, depending on where most of your users are from.

By defining these use cases and language needs, you can make sure your multilingual chatbot is designed to meet your specific goals and serve your users well.

Selecting the Right LLM

Picking the right LLM is really important when you’re making a multilingual chatbot. Here’s what to think about:

- Check Language Support: Different LLMs handle languages differently. Make sure the one you pick can work with all the languages you need. For example, models like GPT-4 can handle lots of languages well, but double-check to match your needs.

- Consider Performance and Cost: Look at how well each LLM performs. Check how accurate and fast it is with responses. Also, think about how much it costs to use. Some LLMs might cost more, especially if you need extra features or use it a lot.

- Choose On-Premises or Cloud-Based: Decide if you want to install and manage the LLM on your own servers (on-premises) or use a cloud-based service. Cloud options are usually easier to set up and handle, but they might come with ongoing costs.

Picking the right LLM sets the stage for your chatbot to work well in different languages and give users a smooth experience.

Designing the User Experience

When designing a multilingual chatbot, keep these key points in mind:

- Design Conversation Flows: Plan how the chatbot will handle conversations in different languages. Make sure it can understand and respond correctly in each language. You might need different dialogue paths for each language.

- Language Switching: Ensure that users can switch languages easily without interrupting their chat. The chatbot should let users change their preferred language smoothly.

- Consider Cultural Differences: Be aware of cultural differences and language subtleties. Different cultures communicate in unique ways, so you might need to adjust phrases or responses to fit these cultural contexts.

Focusing on these details will help you create a chatbot that feels natural and user-friendly in any language, making it more effective and appealing to people around the world.

Setting Up the Development Environment

Choosing the Development Platform

Python is a great choice for building a multilingual chatbot because it has lots of useful libraries and a strong community. Here’s how to set up your Python environment:

Why Use Python?

Python is popular for chatbot development because:

- Extensive Libraries: Python has many libraries that help with natural language processing (NLP), machine learning, and connecting to APIs.

- Community Support: There’s a big Python community with lots of resources, tutorials, and forums to help you with your chatbot project.

Setting Up Your Python Environment

1. Create a Virtual Environment

A virtual environment keeps your project’s dependencies organized. To create one, use this command:

python -m venv chatbot-env

This sets up a new virtual environment named chatbot-env.

2. Activate the Virtual Environment

Activating the virtual environment ensures any libraries you install are only for this project. Here’s how to activate it depending on your operating system:

- On Windows:

chatbot-env\Scripts\activate

- On macOS/Linux:

source chatbot-env/bin/activate

3. Install Necessary Libraries

With the virtual environment active, install the libraries you need. For instance, to use OpenAI’s API, install the openai library with:

pip install openai

This installs OpenAI’s Python library, which you’ll use to call its large language models (LLMs) through the API.

Integrating LLM APIs

After setting up your development environment, the next step is to connect your chatbot to a Large Language Model (LLM) API. OpenAI’s API is a popular choice, so this guide uses it for the examples:

1. Set Up Your API Key

To use OpenAI’s API, you need an API key. This key lets your application securely access OpenAI’s services. Here’s how to set it up in your code:

- Import the OpenAI Library:

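First, import the OpenAI client class from the openai library. Version 1.0 or later is what pip install openai installs today:

from openai import OpenAI

- Create a Client with Your API Key:

You’ll need to provide your API key to authenticate your requests. Replace 'your-api-key-here' with the actual API key you got from OpenAI:

# Create a client that sends your API key with every request
client = OpenAI(api_key='your-api-key-here')

The client uses this key for all future API requests. Avoid hard-coding keys in real projects. If you store the key in the OPENAI_API_KEY environment variable instead, you can call OpenAI() with no arguments and the client will read it automatically.

2. Generate Responses Using the API

With your client set up, you can use OpenAI’s API to get responses. Here’s how to create a function that sends a prompt to the API and gets a response:

- Define the Response Generation Function:

Create a function called generate_response that takes a prompt and gets a response from OpenAI’s Chat Completions API:

def generate_response(prompt):
    response = client.chat.completions.create(
        model="gpt-4o-mini",  # Example model name; use any chat model available to your account
        messages=[{"role": "user", "content": prompt}],
        max_tokens=150,
    )
    return response.choices[0].message.content.strip()

In this code:

- model="gpt-4o-mini" specifies which model to use. It is only an example; choose a model based on the languages you need, response quality, and price. Older tutorials use openai.Completion.create(engine="davinci", ...), but that interface was removed in version 1.0 of the openai library, and the original davinci models have been retired.
- messages=[...] wraps your prompt as a single user message, which is the format chat models expect.
- max_tokens=150 limits the length of the response. You can change this number based on how long you want the responses to be.
- response.choices[0].message.content.strip() gets the text of the reply and removes leading and trailing whitespace.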

3. Test the Function

Testing ensures your function works correctly. Call the function with a sample prompt and print the result:

- Test Example:

# Test the function

print(generate_response("Hello, how are you?"))

This code sends the prompt “Hello, how are you?” to the API and prints the response from the model.


Data Preparation and Preprocessing

To build a good multilingual chatbot, you need to prepare and preprocess your data properly. This step makes sure your chatbot can understand and respond in different languages. Here’s how to handle multilingual datasets:

1. Collecting Multilingual Datasets

First, gather text data in all the languages your chatbot will use. This data can come from customer interactions, public datasets, or translated documents. Aim for a mix of texts that reflect the languages and topics your chatbot will cover.

2. Text Preprocessing

Preprocessing text involves cleaning and preparing your data so it’s ready for analysis or training. Here’s what you need to do:

- Tokenization: Break the text into smaller pieces, like words or phrases, to make it easier for the model to handle.

- Normalization: Standardize the text, such as converting all characters to lowercase, to keep things consistent.

- Removing Special Characters: Get rid of extra characters that don’t add meaning to the text.

Example: Text Preprocessing

Here’s how to preprocess text in Python using common techniques:

- Import Necessary Libraries

You’ll need libraries like re for regular expressions and nltk for tokenization:

import re
import nltk
from nltk.tokenize import word_tokenize

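- Download NLTK Data

The nltk library needs extra tokenizer data for word_tokenize. Newer NLTK releases look for a resource called punkt_tab, while older releases use punkt. Downloading both is the simplest way to cover either case:

nltk.download('punkt')
nltk.download('punkt_tab')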

- Define a Preprocessing Function

Create a function to convert text to lowercase, remove special characters, and tokenize it:

def preprocess_text(text):
    # Convert to lowercase
    text = text.lower()
    # Remove special characters
    text = re.sub(r'\W', ' ', text)
    # Tokenize text
    tokens = word_tokenize(text)
    return tokens

In this function:

- text.lower(): Converts all letters to lowercase to avoid case sensitivity.
- re.sub(r'\W', ' ', text): Replaces every character that isn’t a letter, digit, or underscore with a space. In Python 3 this pattern is Unicode-aware, so accented letters and non-Latin scripts are kept.
- word_tokenize(text): Splits the cleaned text into individual words or tokens.

- Test the Preprocessing Function

Check if your function works with a sample text:

# Test the preprocessing function

print(preprocess_text("Hello, how are you?"))

This will show a list of tokens from the sample text, demonstrating how the text is cleaned and split.
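For multilingual text, lower() alone is not always enough. The same visible character can be stored in different ways: "é" can be one code point, or "e" followed by a combining accent. Some letters also have case rules that lower() does not fold, such as German "ß". Below is a minimal normalization sketch using only the standard library (unicodedata.normalize with NFKC, and str.casefold). The function name normalize_text is hypothetical, and whether NFKC suits your data is an assumption you should check.

```python
import re
import unicodedata

def normalize_text(text: str) -> str:
    # NFKC merges equivalent encodings (e.g. "e" + combining accent -> "é")
    # and folds compatibility forms such as full-width letters into standard ones
    text = unicodedata.normalize("NFKC", text)
    # casefold() is a stronger lower() meant for caseless matching ("ß" -> "ss")
    text = text.casefold()
    # Replace anything that is not a word character or whitespace with a space;
    # \w is Unicode-aware in Python 3, so letters like "é" or "中" are kept
    text = re.sub(r"[^\w\s]", " ", text)
    # Collapse runs of whitespace into single spaces
    return " ".join(text.split())

print(normalize_text("¿Cómo estás?"))        # cómo estás
print(normalize_text("Ｈｅｌｌｏ, Straße!"))  # hello strasse
```

Normalizing before removing special characters matters here. Without NFKC, the regular expression would strip a separate combining accent and turn "café" into "cafe".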

Building the Multilingual Chatbot

Creating the Core Bot Logic

After setting up your development environment and integrating the LLM API, the next step is to build the core functions of your multilingual chatbot. This involves making sure your chatbot can handle basic tasks like greetings and providing help. Here’s how to do it in a simple way.

1. Implement Basic Functionalities

Start by creating functions for common tasks. This will make your chatbot more useful and easy to interact with. For example, you might want it to greet users or provide help when needed.

Example: Basic Bot Logic

Here’s a simple example of how to code these functions in Python:

Define Functions for Basic Responses

First, create functions to handle specific types of user input:

def handle_greeting():
    return "Hello! How can I assist you today?"

def handle_help():
    return "I can help with various tasks. Please ask your question."

- handle_greeting(): This function sends a friendly greeting when the user starts a conversation.
- handle_help(): This function provides help information when the user asks for it.

Main Chatbot Function

Next, create a function to handle user input and decide which response to give:

def chatbot_response(user_input):
    if 'hello' in user_input.lower():
        return handle_greeting()
    elif 'help' in user_input.lower():
        return handle_help()
    else:
        # Anything that is not a greeting or a help request goes to the LLM
        return generate_response(user_input)

- user_input.lower(): Converts the user’s input to lowercase so the function doesn’t miss anything due to capitalization.
- if 'hello' in user_input.lower(): Checks whether the input contains “hello” and, if so, responds with a greeting.
- elif 'help' in user_input.lower(): Checks whether the input contains “help” and, if so, responds with help information.
- return generate_response(user_input): For all other inputs, this sends the text to the LLM to generate a response.

Test the Chatbot Function

Finally, test your chatbot to make sure it works correctly:

# Test the chatbot function

print(chatbot_response("Hello")) # Should output: "Hello! How can I assist you today?"

print(chatbot_response("I need help")) # Should output: "I can help with various tasks. Please ask your question."
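The substring checks in chatbot_response have two weaknesses. First, 'hello' also matches inside words like "othello", and 'help' matches "helpful". Second, only English keywords are recognized. Below is a minimal sketch of whole-word matching with keywords in several languages. GREETINGS, HELP_WORDS, and detect_intent are hypothetical names, and the keyword lists are only examples to extend with the phrases your users actually type.

```python
import re

# Hypothetical keyword lists; extend them for the languages you support
GREETINGS = {"hello", "hi", "bonjour", "salut", "hola"}
HELP_WORDS = {"help", "aide", "ayuda"}

def tokenize_words(text: str) -> set[str]:
    # \w+ is Unicode-aware in Python 3, so "¿ayuda?" yields "ayuda"
    return set(re.findall(r"\w+", text.casefold()))

def detect_intent(user_input: str) -> str:
    words = tokenize_words(user_input)
    if words & GREETINGS:   # at least one whole-word greeting
        return "greeting"
    if words & HELP_WORDS:  # at least one whole-word help keyword
        return "help"
    return "llm"            # everything else goes to the LLM

print(detect_intent("Bonjour !"))        # greeting
print(detect_intent("This is helpful"))  # llm
```

In chatbot_response you would then call handle_greeting(), handle_help(), or generate_response(user_input), depending on the label returned.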

Implementing Multilingual Support

To make your chatbot truly multilingual, it needs to recognize the language of the user’s input and switch between languages as needed. This ensures that your chatbot can respond correctly in the language the user is using. Here’s a straightforward guide on how to do this with Python and the langdetect library.

1. Detect the Language of User Input

Identifying the language of the text is key for your chatbot to respond accurately.

Example: Language Detection

To detect the language of user input, follow these steps:

- Install and Import the langdetect Library

First, install the langdetect library if you haven’t already:

pip install langdetect

Then, import the library into your Python script:

from langdetect import detect

- Create a Function to Detect Language

Write a function to use langdetect to identify the language of the input text:

def detect_language(text):

return detect(text)

The detect(text) function takes the input text and returns the language code (like ‘en’ for English or ‘fr’ for French).

- Test Language Detection

Check if the language detection works correctly with different texts:

# Test language detection

print(detect_language("Bonjour, comment ça va ?")) # Output: 'fr'

print(detect_language("Hello, how are you?")) # Output: 'en'

- detect_language("Bonjour, comment ça va ?") should output ‘fr’ because the text is in French.
- detect_language("Hello, how are you?") should output ‘en’ because the text is in English.

Integrating Language Detection into Your Chatbot

To make your chatbot respond in the correct language:

- Detect the Language of User Input

Before generating a response, use the detect_language function to find out what language the user is using.

- Switch Between Languages

Once you know the language, set up your chatbot to generate responses in that language. You might need different models or APIs for each language to ensure accurate responses.
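Detection can fail, or it can return a language your bot does not support. langdetect raises LangDetectException for text it cannot analyze. It also returns region-qualified codes for some languages, such as 'zh-cn'. Below is a minimal fallback sketch. SUPPORTED_LANGUAGES, DEFAULT_LANGUAGE, and pick_language are hypothetical. The detector is passed in as a function, so you can plug in langdetect’s detect or a stand-in for testing.

```python
from typing import Callable, Optional

# Hypothetical configuration: the languages your bot actually supports
SUPPORTED_LANGUAGES = {"en", "fr", "es"}
DEFAULT_LANGUAGE = "en"

def pick_language(text: str,
                  detector: Callable[[str], str],
                  previous: Optional[str] = None) -> str:
    """Return the language code the bot should reply in."""
    try:
        code = detector(text)
    except Exception:
        # langdetect raises LangDetectException for text it cannot analyze
        code = None
    if code:
        # Drop region suffixes, e.g. 'zh-cn' -> 'zh'
        code = code.split("-")[0].lower()
    if code in SUPPORTED_LANGUAGES:
        return code
    # Unsupported or undetectable: keep the user's last language, else the default
    return previous or DEFAULT_LANGUAGE
```

For example, pick_language(user_input, detect, previous=user_context.get(user_id, {}).get('language')) keeps a French speaker in French even when a one-word message is misdetected as an unsupported language.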

By following these steps, you can build a chatbot that effectively handles multiple languages and provides a smooth user experience.

Handling User Inputs

To make your multilingual chatbot work well, you need to handle user inputs carefully. This means understanding what users are asking, keeping track of their preferences, and managing the flow of the conversation. Here’s how to do this:

1. Understand and Process User Queries

The first step is to figure out what the user is asking. This involves detecting the language they’re using and keeping track of their previous interactions.

Example: Managing User Inputs with Context

Here’s a basic approach to handling user inputs while keeping track of context:

- Create a Context Dictionary

Use a dictionary to store information about each user, like their preferred language and other important details. This helps your chatbot remember things about individual users.

# Context dictionary to store user data

user_context = {}

The user_context dictionary will keep details for each user, identified by their user_id.

- Define a Function to Handle User Input

Create a function that processes user input, detects the language, and updates the user’s context.

def handle_user_input(user_id, user_input):
    language = detect_language(user_input)  # Find out the language of the input
    # Store the detected language without discarding anything else saved for this user
    user_context.setdefault(user_id, {})['language'] = language
    return chatbot_response(user_input)  # Get and return the chatbot’s response

- detect_language(user_input) uses the langdetect library to identify the language.
- user_context.setdefault(user_id, {})['language'] = language creates an entry for a new user if needed and records the detected language, while keeping any other details already stored for that user.
- chatbot_response(user_input) generates the reply. Note that it does not yet use the stored language; the Generating Responses section below shows how to ask the LLM to answer in a specific language.

- Test Handling User Inputs

Check how well your chatbot manages different inputs and maintains context:

# Test handling user inputs

print(handle_user_input(1, "Hello"))

print(handle_user_input(1, "I need help"))

- handle_user_input(1, "Hello") detects the language and stores it in the context for user ID 1.
- handle_user_input(1, "I need help") detects the language again, updates the same user’s context, and returns the help message.

By following these steps, you can make sure your chatbot understands and responds to users effectively while keeping track of their preferences and context.
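The user_context dictionary above only stores the detected language. To keep track of previous interactions, so the LLM can resolve follow-ups like “and tomorrow?”, one option is a bounded per-user message history. Below is a minimal in-memory sketch. MAX_TURNS, conversation_history, remember_turn, and history_as_messages are hypothetical names. The data is lost on restart, so a real deployment would likely need a database or cache.

```python
from collections import defaultdict, deque

MAX_TURNS = 10  # hypothetical limit on how many messages to remember per user

# Each user ID maps to a bounded deque; once it is full, the oldest message is dropped
conversation_history = defaultdict(lambda: deque(maxlen=MAX_TURNS))

def remember_turn(user_id, role, text):
    """Record one message; role is 'user' or 'assistant'."""
    conversation_history[user_id].append({"role": role, "content": text})

def history_as_messages(user_id):
    """Return the stored messages as a list of role/content dicts, oldest first."""
    return list(conversation_history[user_id])
```

You could call remember_turn for both the user’s message and the bot’s reply inside handle_user_input. You could then pass history_as_messages(user_id) to a chat-style API that accepts a list of role/content messages.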

Generating Responses

After detecting the user’s language and understanding their query, the next step is to generate an appropriate response in the correct language. This involves using the Large Language Model (LLM) to create natural language responses. Here’s how to generate multilingual responses effectively:

1. Use the LLM to Generate Responses

To ensure the responses are in the appropriate language, adjust the prompt you send to the LLM based on the detected language.

Example: Generating Multilingual Responses

Here’s how to generate responses in different languages using the LLM:

Define the Response Generation Function

Create a function that takes the user’s prompt and the detected language as inputs. Adjust the prompt to include language-specific instructions if necessary.

def generate_multilingual_response(prompt, language):
    # Add language-specific instructions if necessary
    if language == 'fr':
        prompt = "Répondez en français : " + prompt
    elif language == 'es':
        prompt = "Responde en español: " + prompt
    # Generate response using LLM
    response = generate_response(prompt)
    return response

- The function checks the detected language and adjusts the prompt to instruct the LLM to respond in that language.

- For example, if the language is French (‘fr’), the prompt is prefixed with “Répondez en français : ” to ensure the response is in French.

- Similarly, for Spanish (‘es’), the prompt is prefixed with “Responde en español: ”.

Generate Responses Using the LLM

Reuse the generate_response function defined earlier in this guide. It sends the prompt to the LLM and returns the generated text, so there is no need to define it again here.

Test the Response Generation Function

Test the function to see how it generates responses in different languages:

# Test generating multilingual responses

print(generate_multilingual_response("Hello, how are you?", 'en'))

print(generate_multilingual_response("Bonjour, comment ça va ?", 'fr'))

- generate_multilingual_response("Hello, how are you?", 'en') generates a response in English.
- generate_multilingual_response("Bonjour, comment ça va ?", 'fr') generates a response in French.

By following these steps, your chatbot can generate appropriate and natural responses in multiple languages, making it more effective and user-friendly.
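The if/elif chain in generate_multilingual_response grows with every language you add, and it mixes the instruction into the user’s text. With chat models, a common alternative is to put the language instruction in a separate system message. Below is a minimal sketch that only builds the message list. LANGUAGE_NAMES and build_chat_messages are hypothetical, and the wording of the instruction is an assumption you would tune for your model.

```python
# Hypothetical mapping from language codes to names the model understands
LANGUAGE_NAMES = {"en": "English", "fr": "French", "es": "Spanish", "de": "German"}

def build_chat_messages(prompt: str, language: str) -> list[dict]:
    """Build a chat-style message list that tells the model which language to answer in."""
    name = LANGUAGE_NAMES.get(language, "the same language the user writes in")
    system_instruction = f"You are a helpful assistant. Always reply in {name}."
    return [
        {"role": "system", "content": system_instruction},
        {"role": "user", "content": prompt},
    ]
```

You would pass the returned list as the messages argument of a chat-style completion call, such as OpenAI’s Chat Completions API. Adding a language then only requires a new entry in LANGUAGE_NAMES.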

Advanced Features and Enhancements

Incorporating Translation Services

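To make your multilingual chatbot effective, you may need to include translation services. This ensures users receive replies in their preferred language. Here’s how to add Google Translate to your chatbot using googletrans, an unofficial third-party library. It relies on Google’s public web service rather than the official Google Cloud Translation API, so it can break without notice. Consider the official API for production use.

Example: Integrating Google Translate with googletrans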

1. Install the Required Library

First, install the googletrans library, which makes it easy to use Google Translate services.

pip install googletrans==4.0.0-rc1

2. Import and Initialize the Translator

Import the Translator class from googletrans and create a translator instance.

from googletrans import Translator

translator = Translator()

3. Define the Translation Function

Write a function that uses the translator object to translate text into the desired language.

def translate_text(text, dest_language):
    translation = translator.translate(text, dest=dest_language)
    return translation.text

- This function takes text and the target language code (dest_language) as inputs.
- It uses the translator’s translate method to translate the text.
- It returns the translated text.

4. Test the Translation Function

Test the function to ensure it works correctly.

# Test translation

print(translate_text("Hello, how are you?", 'fr'))  # e.g. 'Bonjour, comment ça va ?'

This example translates the English text “Hello, how are you?” into French. The exact wording depends on the translation service, so your output may differ slightly from “Bonjour, comment ça va ?”.
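Translation services are often used in a “pivot language” setup. You translate the user’s message into the one language your bot logic or knowledge base is written in, produce the answer there, and translate it back. Below is a minimal sketch of that flow, assuming English as the pivot. reply_via_pivot is a hypothetical helper. translate and respond are passed in as functions, so you can plug in translate_text and generate_response, or stand-ins for testing.

```python
from typing import Callable

def reply_via_pivot(user_text: str,
                    user_lang: str,
                    translate: Callable[[str, str], str],
                    respond: Callable[[str], str],
                    pivot_lang: str = "en") -> str:
    # Translate the incoming message into the pivot language only when needed
    if user_lang != pivot_lang:
        user_text = translate(user_text, pivot_lang)
    # The bot logic works entirely in the pivot language
    answer = respond(user_text)
    # Translate the answer back so the user reads it in their own language
    if user_lang != pivot_lang:
        answer = translate(answer, user_lang)
    return answer
```

For example: reply_via_pivot(user_input, detect_language(user_input), translate_text, generate_response). Each non-English message then costs two extra translation calls and may lose nuance. For LLMs that already answer well in the user’s language, asking the model directly is often simpler.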

Personalization and Context Management

To make your chatbot more engaging and user-friendly, it’s important to remember user preferences and context. This helps the chatbot give responses based on the user’s history and preferences, making the conversation more personalized and relevant. Here’s how to manage user context and personalize responses.

Example: Context Management

1. Define Functions to Update and Retrieve User Context

Update User Context

You need functions to update and get user-specific information. This involves using a dictionary to store user contexts based on their unique IDs.

Retrieve User Context

Fetch context information using the get method on the user’s context dictionary, providing a default value of None if the key does not exist.

# Dictionary to store user context
user_context = {}

# Function to update user context
def update_user_context(user_id, key, value):
    if user_id not in user_context:
        user_context[user_id] = {}
    user_context[user_id][key] = value

# Function to get user context
def get_user_context(user_id, key):
    return user_context.get(user_id, {}).get(key, None)

- user_id: Unique identifier for the user.
- key: Context key (e.g., ‘last_question’).
- value: Context value (e.g., ‘How can I help you?’).

- user_context: A dictionary that holds context data for each user.
- update_user_context: Updates the user context with a key-value pair. If the user does not exist yet, it creates a new entry first.
- get_user_context: Retrieves the value associated with a specific key from the user’s context. If the user or the key does not exist, it returns None.

2. Test the Context Management Functions

Test the functions by updating a value for a user and then reading it back:

# Test context management

update_user_context(1, 'last_question', 'How can I help you?')

print(get_user_context(1, 'last_question')) # Output: 'How can I help you?'

- update_user_context(1, 'last_question', 'How can I help you?'): Updates the context for user ID 1, setting ‘last_question’ to ‘How can I help you?’.
- print(get_user_context(1, 'last_question')): Retrieves ‘last_question’ for user 1, which should output ‘How can I help you?’.

Adding Multimodal Capabilities

To make your chatbot more interactive and user-friendly, you can add multimodal capabilities. This means your chatbot can handle different types of inputs and outputs, like text, voice, and visual elements. This approach makes the chatbot more engaging and accessible.

Example: Adding Voice Input

You can use the SpeechRecognition library to let your chatbot understand voice inputs. This library helps the chatbot recognize and process spoken language.

Step-by-Step Explanation

1. Install the SpeechRecognition Library

First, install the SpeechRecognition library using pip:

pip install SpeechRecognition

2. Import the Library and Set Up the Recognizer

Import the speech_recognition module and create a recognizer object. This object will help you capture and process audio input.

import speech_recognition as sr

def recognize_speech():
    recognizer = sr.Recognizer()
    with sr.Microphone() as source:
        print("Say something!")
        audio = recognizer.listen(source)

- sr.Recognizer(): Creates a recognizer object.
- sr.Microphone(): Uses the microphone as the audio source. Microphone input also requires the PyAudio package to be installed.
- recognizer.listen(source): Listens to the audio from the microphone.

3. Recognize and Process the Speech

Use the recognizer object to convert the audio input into text. Handle possible errors like unrecognized speech or issues with the recognition service. The following code continues inside recognize_speech, after the with block:

    try:
        text = recognizer.recognize_google(audio)
        print("You said: " + text)
        return text
    except sr.UnknownValueError:
        return "Sorry, I could not understand the audio."
    except sr.RequestError:
        return "Sorry, there was a problem with the speech recognition service."

- recognizer.recognize_google(audio): Uses Google’s speech recognition to convert audio to text. It assumes US English by default. For other languages, pass a language tag, for example recognizer.recognize_google(audio, language="fr-FR").
- sr.UnknownValueError: Catches errors when the speech is not understood.
- sr.RequestError: Catches errors related to the speech recognition service.

4. Test the Voice Input Function

Call the recognize_speech function to test it. Speak into the microphone and see if the recognized text matches what you said.

# Test voice input

print(recognize_speech())

This function will prompt you to say something, then it will listen to your speech and attempt to convert it to text.

Testing and Debugging

Testing is a critical part of developing any software, including chatbots. It ensures that each part of your chatbot works as expected and that the whole system functions smoothly. You can write test cases for different functionalities and automate these tests to make continuous integration (CI) easier.

Example: Unit Testing with pytest

Unit testing focuses on testing individual components of your chatbot to make sure each part works correctly. Here, we will use the pytest framework, which is simple and powerful for writing and running tests.

Step-by-Step Explanation

1. Install pytest

First, ensure you have pytest installed. You can install it using pip:

pip install pytest

2. Create a Test File

Create a file named test_chatbot.py where you will write your test cases.

# test_chatbot.py
import pytest

from chatbot import handle_greeting, handle_help

def test_handle_greeting():
    assert handle_greeting() == "Hello! How can I assist you today?"

def test_handle_help():
    assert handle_help() == "I can help with various tasks. Please ask your question."

if __name__ == "__main__":
    pytest.main()

Detailed Explanation

Creating the Test File

Create a new Python file called test_chatbot.py for your test cases. This file will contain functions to test different parts of your chatbot.

Importing Necessary Modules and Functions

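Import pytest to use its testing tools, and import the specific functions you want to test from your chatbot code. This example assumes that code lives in a file named chatbot.py next to the test file. Importing a module runs its top-level code, so wrap any test calls in chatbot.py (such as the print statements used earlier) in an if __name__ == "__main__": block. Otherwise, running the tests will also trigger live API requests.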

Importing pytest and Chatbot Functions

- import pytest: This imports the pytest module, which provides tools for writing and running tests.
- from chatbot import handle_greeting, handle_help: This imports the functions you want to test from your chatbot code.

Writing Test Cases

- def test_handle_greeting(): This function tests if handle_greeting returns the expected greeting message.
- assert handle_greeting() == "Hello! How can I assist you today?": This checks if the actual output matches the expected greeting.
- def test_handle_help(): This function tests if handle_help returns the expected help message.
- assert handle_help() == "I can help with various tasks. Please ask your question.": This checks if the actual output matches the expected help message.

Running the Tests

To run your tests, execute the test_chatbot.py file. Including pytest.main() in the if __name__ == "__main__": block allows the tests to run when you run the file. Alternatively, you can run all tests in your project by using the pytest command in your terminal:

pytest
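The tests above only cover handle_greeting and handle_help. The other branch of chatbot_response calls Google Translate and OpenAI, which makes it slow and flaky to test. One option, sketched here as an assumption rather than the guide's own design, is to pass the network-bound helpers in as parameters so tests can substitute fakes. The factory make_chatbot_response and the fake helpers are hypothetical, and the canned replies are inlined so the sketch runs on its own.

```python
from typing import Callable


def make_chatbot_response(
    detect: Callable[[str], str],
    translate: Callable[[str, str], str],
    generate: Callable[[str, str], str],
) -> Callable[[str], str]:
    """Build a chatbot_response-style function from injectable helpers (hypothetical refactor)."""

    def chatbot_response(user_input: str) -> str:
        language = detect(user_input)
        lowered = user_input.lower()
        # Same keyword routing as the guide's chatbot_response
        if "hello" in lowered:
            return "Hello! How can I assist you today?"
        if "help" in lowered:
            return "I can help with various tasks. Please ask your question."
        # Translate to English, generate a reply, then translate the reply back
        english_reply = generate(translate(user_input, "en"), "en")
        return translate(english_reply, language)

    return chatbot_response


def test_other_input_is_translated_both_ways():
    calls = []

    def fake_translate(text: str, dest: str) -> str:
        calls.append((text, dest))  # record each call for the assertions below
        return f"[{dest}] {text}"

    respond = make_chatbot_response(
        detect=lambda text: "fr",
        translate=fake_translate,
        generate=lambda prompt, lang: f"reply to {prompt}",
    )
    result = respond("Bonjour, comment ça va ?")
    assert calls[0] == ("Bonjour, comment ça va ?", "en")  # input goes to English first
    assert calls[1][1] == "fr"  # the reply is translated back to French
    assert result == "[fr] reply to [en] Bonjour, comment ça va ?"


def test_greeting_does_not_call_translation_or_model():
    def must_not_be_called(*args):
        raise AssertionError("network helper should not be called")

    respond = make_chatbot_response(
        detect=lambda text: "en",
        translate=must_not_be_called,
        generate=must_not_be_called,
    )
    assert respond("Hello there") == "Hello! How can I assist you today?"
```

pytest collects any function whose name starts with test_, so these run with the same pytest command. The design choice is dependency injection: production code passes the real detect_language, translate_text, and generate_response, while tests pass fakes that never touch the network.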

Multilingual Testing and User Feedback

After setting up your multilingual chatbot, it’s important to ensure it works well across different languages and to gather feedback for continuous improvement. Here’s how you can approach these tasks:

Multilingual Testing

To make sure your chatbot performs correctly in multiple languages, you need to test it with a variety of language inputs. This involves checking how well the chatbot understands and responds in different languages and ensuring that it maintains consistency.

Why Multilingual Testing is Important

- Accuracy Across Languages: Your chatbot should understand and respond correctly in each supported language.

- Coherence of Responses: Responses should be natural and relevant, regardless of the language.

How to Perform Multilingual Testing

- Prepare Diverse Test Cases: Create a set of inputs in all the languages your chatbot supports. For instance, if your chatbot supports English, French, and Spanish, prepare a range of questions and phrases in these languages.

- Automate Testing: Use automated testing tools or scripts to test these inputs consistently. Ensure the chatbot responds appropriately in each language.

- Manual Testing: Conduct manual tests by interacting with the chatbot in different languages. This helps catch awkward phrasing, tone problems, or errors that automated tests might miss.

Example Testing Process

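```python
# Sample code for testing responses in different languages.
# Assumes generate_multilingual_response(message, lang) is the response
# function from your chatbot code.
def test_multilingual_responses():
    test_cases = {
        'en': "Hello, how are you?",
        'fr': "Bonjour, comment ça va ?",
        'es': "Hola, ¿cómo estás?",
    }
    for lang, message in test_cases.items():
        response = generate_multilingual_response(message, lang)
        print(f"Response in {lang}: {response}")
        # Fail the test if the chatbot returns nothing for this language
        assert isinstance(response, str) and response.strip(), f"Empty response for {lang}"


# Run the test directly (pytest also collects it automatically)
if __name__ == "__main__":
    test_multilingual_responses()
```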

In Practice

- Ensure that the chatbot’s responses are accurate and contextually appropriate in each language.

- Verify that any translation services used are working correctly and that the chatbot’s natural language understanding is effective.
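An automated check can go further than printing responses: for each test message, detect the language of the reply and compare it with the language you expected. The sketch below takes the response function and the language detector as parameters (for example your response function and the detect_language helper from the case study); the name check_language_consistency and the fake replies in the demo are hypothetical. Keep in mind that language detection is unreliable on very short replies, so use messages that produce replies of at least a sentence.

```python
from typing import Callable, Dict, List, Tuple


def check_language_consistency(
    test_cases: Dict[str, List[str]],
    respond: Callable[[str, str], str],
    detect: Callable[[str], str],
) -> List[Tuple[str, str, str]]:
    """Return (expected_lang, message, detected_lang) for every reply in the wrong language.

    test_cases maps a language code ('en', 'fr', ...) to messages in that language;
    respond(message, lang) is the chatbot's reply function; detect(text) returns a
    language code for a piece of text.
    """
    failures = []
    for expected_lang, messages in test_cases.items():
        for message in messages:
            reply = respond(message, expected_lang)
            if not reply or not reply.strip():
                failures.append((expected_lang, message, "<empty>"))
                continue
            detected = detect(reply)
            if detected != expected_lang:
                failures.append((expected_lang, message, detected))
    return failures


if __name__ == "__main__":
    cases = {
        "en": ["Hello, how are you?"],
        "fr": ["Bonjour, comment ça va ?"],
        "es": ["Hola, ¿cómo estás?"],
    }
    # Offline fakes: the Spanish reply is deliberately English to show a failure
    replies = {"en": "I'm fine, thanks!", "fr": "Je vais bien, merci !", "es": "I'm fine!"}
    detected = {"I'm fine, thanks!": "en", "Je vais bien, merci !": "fr", "I'm fine!": "en"}
    for failure in check_language_consistency(cases, lambda m, lang: replies[lang], detected.get):
        print("Language mismatch:", failure)
```

In this demo only the Spanish case is reported, because its fake reply is in English. Returning the failures instead of asserting inside the loop lets you see every mismatch in one run.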

User Feedback and Iteration

Once you have tested your chatbot, the next step is to gather feedback from real users. This helps to identify any issues and areas where the chatbot can be improved.

Why Gather Feedback

- Identify Issues: Real users may encounter problems or have suggestions that weren’t apparent during testing.

- Improve Performance: User feedback can highlight areas where the chatbot’s performance or user experience can be enhanced.

How to Collect and Use Feedback

- Beta Testing: Launch your chatbot to a small group of beta users. These users can provide valuable insights into the chatbot’s performance and usability.

- Gather Feedback: Collect feedback through surveys, interviews, or direct interactions. Ask users about their experience, any difficulties they encountered, and suggestions for improvement.

- Analyze Feedback: Review the feedback to identify common issues or areas for improvement. Look for patterns in the feedback that indicate specific problems or opportunities.

- Iterate and Improve: Make changes to your chatbot based on the feedback. This could involve fixing bugs, improving responses, or adding new features.
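To make the "Analyze Feedback" step concrete, here is a minimal sketch that groups feedback by language and flags languages whose average rating falls below a threshold. The record fields (language code, a rating on a 1 to 5 scale, an optional issue tag), the 3.5 threshold, and the sample data are illustrative assumptions, not part of the guide's chatbot.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Optional


@dataclass
class Feedback:
    language: str                # language code of the conversation, e.g. "fr"
    rating: int                  # 1 (poor) to 5 (excellent); hypothetical survey scale
    issue: Optional[str] = None  # free-form tag such as "wrong language"


def summarize_feedback(records: List[Feedback], threshold: float = 3.5) -> Dict[str, dict]:
    """Per language: number of records, average rating, top issues, and a review flag."""
    by_language: Dict[str, List[Feedback]] = defaultdict(list)
    for record in records:
        by_language[record.language].append(record)

    summary = {}
    for language, items in by_language.items():
        average = mean(r.rating for r in items)
        issues = Counter(r.issue for r in items if r.issue)
        summary[language] = {
            "count": len(items),
            "average_rating": round(average, 2),
            "top_issues": issues.most_common(3),
            "needs_review": average < threshold,
        }
    return summary


if __name__ == "__main__":
    sample = [
        Feedback("en", 5),
        Feedback("en", 4),
        Feedback("fr", 2, "wrong language"),
        Feedback("fr", 3, "wrong language"),
        Feedback("es", 4, "slow reply"),
    ]
    for language, stats in summarize_feedback(sample).items():
        print(language, stats)
```

With this sample, French is flagged for review (average 2.5) and "wrong language" shows up as its most common issue, which is the kind of pattern the Analyze Feedback step is looking for.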

Deployment and Maintenance

Deploying the Chatbot

After developing your multilingual chatbot, the next step is to deploy it so that users can interact with it. Deployment involves choosing a platform where your chatbot will run and setting up monitoring to ensure it works smoothly.

Deploying on Heroku

Heroku is a cloud platform that allows you to deploy and manage applications easily. Here’s how you can deploy your chatbot on Heroku:

1. Prepare Your Application:

- Create a Procfile: This file tells Heroku how to run your chatbot application. It contains the command that starts your chatbot; in this case, it runs a Python script named chatbot.py as a web process. A web process must accept HTTP requests on the port Heroku supplies in the PORT environment variable, so the script needs a web entry point rather than only console or microphone input.

echo "web: python chatbot.py" > Procfile

- List Your Dependencies: Heroku recognizes a Python app and installs its packages from a requirements.txt file in the project root.

pip freeze > requirements.txt

2. Deploy Your Chatbot:

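- Create a Heroku App: This command creates a new application on Heroku named multilingual-chatbot. App names are unique across Heroku, so you may need to choose a different one.

heroku create multilingual-chatbot

- Push Your Code: Upload your code to Heroku’s servers. Heroku builds from the master or main branch, so push main instead if that is your branch name.

git push heroku master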

- Scale Your Application: Ensure that one web dyno (a lightweight container for running web applications) is active.

heroku ps:scale web=1
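As written, chatbot.py reads console and microphone input, so it has no HTTP endpoint for a Heroku web dyno to serve. A minimal sketch of such a front end using only Python's standard library follows. The /chat path, the JSON shape ({"message": ...} in, {"reply": ...} out), the file name server.py, and the echo_reply placeholder are all assumptions; in your app you would pass chatbot_response instead of echo_reply.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Callable, Tuple


def handle_chat_payload(body: bytes, reply: Callable[[str], str]) -> Tuple[int, dict]:
    """Validate a JSON request body and return (HTTP status, JSON-serializable response)."""
    try:
        payload = json.loads(body.decode("utf-8"))
    except (UnicodeDecodeError, json.JSONDecodeError):
        return 400, {"error": "body must be UTF-8 encoded JSON"}
    message = payload.get("message") if isinstance(payload, dict) else None
    if not isinstance(message, str) or not message.strip():
        return 400, {"error": "'message' must be a non-empty string"}
    return 200, {"reply": reply(message)}


def echo_reply(message: str) -> str:
    # Placeholder so the sketch runs on its own; swap in chatbot_response from chatbot.py
    return f"You said: {message}"


class ChatHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/chat":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, data = handle_chat_payload(self.rfile.read(length), echo_reply)
        body = json.dumps(data, ensure_ascii=False).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


# Start only when PORT is set: Heroku always sets it, and locally you can run
# PORT=8000 python server.py
if __name__ == "__main__" and os.environ.get("PORT"):
    HTTPServer(("0.0.0.0", int(os.environ["PORT"])), ChatHandler).serve_forever()
```

With this file in place, the Procfile line would become web: python server.py, and you can try it locally with curl -X POST localhost:8000/chat -d '{"message": "Hola"}'. Keeping the request validation in handle_chat_payload, separate from the HTTP handler, means it can be unit-tested without starting a server.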

Choose a Deployment Platform: While Heroku is a good choice for beginners, you might also consider other platforms like AWS or Google Cloud, which offer more advanced features and scalability.

Set Up Monitoring: After deployment, monitor your chatbot to ensure it is running as expected. This involves setting up logging and performance monitoring.

Monitoring Performance

Monitoring is important to keep track of how well your chatbot performs and to ensure a good user experience. You should focus on key metrics:

- Response Time: Measure how quickly the chatbot responds to user queries.

- User Satisfaction: Collect feedback on how satisfied users are with their interactions.

Using Analytics Tools:

- Track Metrics: Use tools like Google Analytics, New Relic, or custom logging to monitor these metrics.

- Gain Insights: Analyze the data to understand user behavior and identify areas for improvement.
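Response time is the easiest of these metrics to capture yourself before wiring up an external tool. A minimal sketch, assuming you wrap a function such as chatbot_response: a decorator logs how long each call takes and keeps the numbers so you can report the median and 95th percentile. The names track_latency and latency_report and the in-memory list are illustrative; a production setup would send these values to your monitoring service instead.

```python
import functools
import logging
import statistics
import time
from typing import Callable, List

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("chatbot.metrics")

latencies_ms: List[float] = []  # in-memory store; fine for a sketch, not for production


def track_latency(func: Callable) -> Callable:
    """Log and record the wall-clock duration of each call to func, in milliseconds."""

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            # Recorded even when func raises, so slow failures are visible too
            elapsed_ms = (time.perf_counter() - start) * 1000
            latencies_ms.append(elapsed_ms)
            logger.info("%s took %.1f ms", func.__name__, elapsed_ms)

    return wrapper


def latency_report(samples: List[float]) -> dict:
    """Median and 95th-percentile latency; needs at least two samples."""
    # 19 cut points at 5% steps; index 18 is the 95th percentile
    cut_points = statistics.quantiles(samples, n=20, method="inclusive")
    return {"count": len(samples), "p50_ms": statistics.median(samples), "p95_ms": cut_points[18]}


if __name__ == "__main__":
    @track_latency
    def fake_chatbot_response(text: str) -> str:
        time.sleep(0.01)  # stand-in for translation and model calls
        return text

    for _ in range(5):
        fake_chatbot_response("hola")
    print(latency_report(latencies_ms))
```

Percentiles are more useful than averages here, because a few slow translation or model calls can hide behind a healthy-looking mean.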

Continuous Improvement

To keep your chatbot relevant and useful, you need to continuously improve it. This involves updating the model, adding new data, and scaling the application.

- Update the Model: Move to newer model versions as they become available, and refine your prompts (or your fine-tuning data, if you fine-tune) using what you learn from real conversations.

- Add Features: Introduce new features and support additional languages as needed.

- Scale Your Application: Increase resources to handle more users or improve performance.

Case Studies and Practical Examples

Case Study: Customer Support Multilingual Chatbot

Here’s a complete example of a Customer Support Multilingual Chatbot using Python. This chatbot will handle basic customer support queries in multiple languages, integrate with OpenAI’s API for generating responses, use Google Translate for language translation, and accept spoken input through the SpeechRecognition library.

Full Source Code

1. Setting Up Your Environment

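```bash
# Create a virtual environment
python -m venv chatbot-env

# Activate the virtual environment
# On Windows
chatbot-env\Scripts\activate
# On macOS/Linux
source chatbot-env/bin/activate

# Install necessary libraries
pip install openai==0.28.1 googletrans==4.0.0-rc1 speechrecognition pyaudio nltk
```

The openai package is pinned below 1.0 because googletrans 4.0.0-rc1 requires httpx 0.13.3, which conflicts with the httpx version that openai 1.x needs. PyAudio is required for microphone input in speech_recognition; on macOS and Linux it also needs the PortAudio system library.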

2. Chatbot Code: chatbot.py

```python
import os
import re

import nltk
import openai  # requires the pre-1.0 SDK: pip install "openai<1.0"
import speech_recognition as sr
from googletrans import Translator
from nltk.tokenize import word_tokenize

# Read the OpenAI API key from an environment variable instead of hard-coding it
openai.api_key = os.getenv("OPENAI_API_KEY")

# Initialize Google Translate (unofficial googletrans client)
translator = Translator()

# Download tokenizer data for word_tokenize
# (older NLTK releases use 'punkt', newer ones 'punkt_tab')
nltk.download('punkt', quiet=True)
nltk.download('punkt_tab', quiet=True)

# Context dictionary to store user data (not used yet in this example)
user_context = {}


def generate_response(prompt, language):
    # Ask the model to answer in the target language when it is not English
    if language == 'fr':
        prompt = "Répondez en français : " + prompt
    elif language == 'es':
        prompt = "Responde en español: " + prompt
    # The legacy Completion endpoint and the "davinci" model are retired,
    # so this uses the chat endpoint of the pre-1.0 openai SDK
    response = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",  # replace with any chat model your account can use
        messages=[{"role": "user", "content": prompt}],
        max_tokens=150,
    )
    return response["choices"][0]["message"]["content"].strip()


def preprocess_text(text):
    # Lowercase, replace non-word characters with spaces, then tokenize
    text = text.lower()
    text = re.sub(r'\W', ' ', text)
    return word_tokenize(text)


def detect_language(text):
    return translator.detect(text).lang


def translate_text(text, dest_language):
    return translator.translate(text, dest=dest_language).text


def handle_greeting():
    return "Hello! How can I assist you today?"


def handle_help():
    return "I can help with various tasks. Please ask your question."


def chatbot_response(user_input):
    language = detect_language(user_input)
    # Simple keyword routing; 'hello' is checked before 'help'
    if 'hello' in user_input.lower():
        return handle_greeting()
    elif 'help' in user_input.lower():
        return handle_help()
    # Otherwise translate to English, ask the model, and translate the reply back
    translated_input = translate_text(user_input, 'en')
    response = generate_response(translated_input, 'en')
    return translate_text(response, language)


def recognize_speech():
    # Capture one utterance from the default microphone (requires PyAudio)
    recognizer = sr.Recognizer()
    with sr.Microphone() as source:
        print("Say something!")
        audio = recognizer.listen(source)
    try:
        # Defaults to US English; pass language="fr-FR" etc. for other languages
        text = recognizer.recognize_google(audio)
        print("You said: " + text)
        return text
    except sr.UnknownValueError:
        print("Sorry, I could not understand the audio.")
    except sr.RequestError:
        print("Sorry, there was a problem with the speech recognition service.")
    # Return None on failure so the error message is not sent to the chatbot
    return None


# Main function to test the chatbot
if __name__ == "__main__":
    # Test with text input
    user_input = "Bonjour, comment ça va ?"
    print("Input: ", user_input)
    print("Response: ", chatbot_response(user_input))

    # Test with speech input
    print("Testing voice input...")
    voice_input = recognize_speech()
    if voice_input:
        print("Voice Input: ", voice_input)
        print("Response: ", chatbot_response(voice_input))
```

Output

When you run this script, you’ll see output similar to the following (the model’s wording varies from run to run):

Text Input Testing:

Input: Bonjour, comment ça va ?

Response: a reply in French generated by the model

In this case, the chatbot translates the French input into English, generates a response, and then translates it back into French.

Voice Input Testing:

When you test the voice input, you’ll be prompted to say something. The speech recognition library will convert your speech into text. For example:

Testing voice input...

Say something!

You said: Hello, how can I help you?

Voice Input: Hello, how can I help you?

Response: Hello! How can I assist you today?

Because chatbot_response checks for "hello" before "help", this sentence triggers the greeting handler rather than the help handler.

This setup demonstrates how to build a multilingual customer support chatbot using Python, OpenAI’s API, Google Translate, and speech recognition. Adjust the API keys and model configurations as needed for your application.
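The case-study code declares user_context but never uses it. One way to put it to work, sketched here under assumptions (a per-user history capped at a fixed number of turns, and OpenAI's chat message format of dictionaries with role and content keys), is to keep recent turns per user and send them with each request. The class ConversationStore, its method names, and the max_turns default are hypothetical.

```python
from collections import deque
from typing import Deque, Dict, List


class ConversationStore:
    """Keep only the most recent turns of each user's conversation (hypothetical helper)."""

    def __init__(self, max_turns: int = 5):
        # One turn = one user message plus one assistant reply
        self.max_messages = max_turns * 2
        self.histories: Dict[str, Deque[dict]] = {}

    def add(self, user_id: str, role: str, content: str) -> None:
        # deque(maxlen=...) drops the oldest message once the limit is reached
        history = self.histories.setdefault(user_id, deque(maxlen=self.max_messages))
        history.append({"role": role, "content": content})

    def messages_for(self, user_id: str, new_input: str, language: str) -> List[dict]:
        """Build a chat message list: instructions, recent history, then the new input."""
        system = {
            "role": "system",
            "content": f"You are a customer support assistant. Reply in language '{language}'.",
        }
        history = list(self.histories.get(user_id, []))
        return [system, *history, {"role": "user", "content": new_input}]


if __name__ == "__main__":
    store = ConversationStore(max_turns=2)
    store.add("user-42", "user", "Where is my order?")
    store.add("user-42", "assistant", "Could you share your order number?")
    for message in store.messages_for("user-42", "It's 12345", "en"):
        print(message["role"], "->", message["content"])
```

After each exchange, call add() for both the user's message and the bot's reply, and pass the result of messages_for() as the messages of a chat-completion request. Capping the history keeps requests within the model's context window and limits cost.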

Future Trends in Multilingual Chatbots

The world of multilingual chatbots is changing fast. New technologies are improving how these chatbots work, making them smarter and more useful. Here are some future trends and their impact on multilingual chatbots.

1. Advanced Language Models

Large Language Models (LLMs) like GPT-4 are getting better at understanding and generating human-like text in many languages. As these models improve:

- They will handle more complex questions more accurately.

- They will keep the context better in long conversations.

- They will give more accurate and culturally sensitive responses.

Building a multilingual chatbot with the latest LLMs will be easier and more effective, allowing developers to create smarter and more responsive chatbots.

2. Real-Time Translation

Real-time translation technology is getting better. Adding this to chatbots means:

- Users can speak in their preferred language, and the chatbot can respond in the same or a different language.

- Translation accuracy will improve, reducing misunderstandings.

This will enhance the user experience and make chatbots more accessible to a global audience.

3. Multimodal Interaction

Chatbots are starting to support more than just text. They will soon include voice and visual inputs too. This trend will:

- Allow users to talk to chatbots using speech or images.

- Make interactions more natural and accessible, especially for those with disabilities or who prefer voice communication.

Creating chatbots with LLMs that support multimodal inputs will become standard, making chatbots more versatile.

4. Personalization and Context Awareness

Future chatbots will be more personalized and aware of context. They will:

- Remember user preferences and past interactions.

- Provide more relevant and customized responses based on the user’s history and context.

This will increase user satisfaction and engagement by making interactions more meaningful.

5. Enhanced Emotional Intelligence

Chatbots with emotional intelligence will become more common. These chatbots will:

- Recognize and respond to the user’s emotions and tone of voice.

- Provide empathetic responses, improving user trust and comfort.

- Handle sensitive topics better and offer support in a more human-like manner.

Advances in natural language processing will drive the development of emotionally intelligent chatbots, making them more relatable and effective.

6. Integration with IoT and Smart Devices

Chatbots will soon work with the Internet of Things (IoT) and smart devices. This will:

- Allow chatbots to control smart home devices, providing a more integrated and convenient user experience.

- Enable new use cases, like voice-activated control of household items or real-time updates from connected devices.

Multilingual AI chatbots integrated with IoT will offer users seamless interaction with their environment.

7. Improved Security and Privacy

As chatbots handle more sensitive data, security and privacy measures will improve. Future developments will focus on:

- Ensuring user data is encrypted and stored securely.

- Providing transparent privacy policies to gain user trust.

- Implementing strict data governance and complying with regulations.

Developing chatbots with LLMs will prioritize security, ensuring that user interactions remain private and secure.

Conclusion

The future of multilingual AI chatbots is promising, with new technologies set to enhance their capabilities significantly. From advanced language models and real-time translation to multimodal interaction and personalization, these trends will make chatbots smarter, more versatile, and user-friendly. Developers should stay updated with these trends to build cutting-edge chatbots that meet the evolving needs of users worldwide.

By focusing on these future trends, you’ll be ready to build a chatbot with an LLM that is prepared for the next generation of user interactions, making your multilingual chatbot development more innovative and effective.

External Resources for Building and Enhancing Multilingual Chatbots

When creating multilingual chatbots, using external resources can provide useful information, tools, and support. Here are some helpful resources to guide you through the development process:

1. Documentation and Guides

- OpenAI API Documentation

Find out how to use the OpenAI API to build smart chatbots.

- Google Cloud Translation API Documentation

Learn how to integrate Google Translate to support multiple languages in your chatbot. This is Google’s official translation API; the googletrans package used in the case study is an unofficial client for the Google Translate website.

- NLTK Documentation

Get guides on natural language processing tasks like tokenization and text preprocessing.

- SpeechRecognition Library Documentation

Find instructions on adding voice recognition features to your chatbot.

Frequently Asked Questions (FAQs) on Multilingual Chatbots

What is a multilingual chatbot?

A multilingual chatbot is an AI-powered conversational agent designed to understand and interact with users in multiple languages. It can switch between languages based on user input, offering a seamless experience for users around the world.

How do multilingual chatbots work?

Multilingual chatbots use language detection and translation services to identify and process user input in various languages. They might integrate with language models and APIs to generate responses in the user’s preferred language.

What technologies are used to build multilingual chatbots?

Technologies for building multilingual chatbots include large language models (LLMs) like OpenAI’s GPT, translation APIs (e.g., Google Translate), natural language processing (NLP) libraries (e.g., NLTK), and speech recognition tools.

How can I make sure my chatbot responds accurately in every language?

To ensure accuracy, use high-quality translation APIs and continuously test the chatbot with diverse language inputs. Regularly update the chatbot with new data and feedback to improve its language processing capabilities.

Can multilingual chatbots support voice interactions?

Yes, multilingual chatbots can support voice interactions by integrating with speech recognition APIs. This allows users to interact with the chatbot using spoken language, which can be translated and processed in real time.

What are the best practices for building a multilingual chatbot?

Best practices include:

- Implementing robust language detection and translation mechanisms.

- Testing extensively with native speakers of the supported languages.

- Using context management to maintain conversation history.

- Gathering user feedback to continuously improve language handling and response accuracy.
