How to Generate Synthetic Data using GenAI

Introduction

Purpose of the Post

The purpose of this post is to explain the importance and applications of synthetic data. Synthetic data is becoming more and more important in the field of machine learning. Understanding synthetic data helps us see how it can be used to improve machine learning models and make data collection easier and more efficient.

Overview of Synthetic Data

Synthetic data is artificial data generated by computers. It is created to look and behave like real-world data. In machine learning, synthetic data is used to train models. It is especially useful when there is not enough real data available or when using real data is not possible due to privacy concerns.

Generating synthetic data with AI helps overcome challenges related to data scarcity and privacy. It allows researchers and developers to create large, high-quality datasets that are similar to real-world data. This can improve the performance of machine learning models without the need for extensive data collection efforts.

Core Concepts

- Synthetic Data Generation: The process of creating artificial data using computers.

- AI: Artificial intelligence, used to generate and analyze synthetic data.

- Machine Learning: A field of study focused on teaching computers to learn from data.

- Artificial Data: Data that is created by computers to mimic real-world data.

- Training Data: Data used to teach machine learning models to make predictions.

Importance of Synthetic Data

Enhancing Model Performance

Synthetic data can improve the performance of machine learning models. Generating additional data gives a model more examples to learn from, which can lead to better accuracy and more reliable predictions, provided the synthetic data reflects the patterns found in real data.

Addressing Data Privacy Concerns

In many cases, using real data is not possible due to privacy concerns. For example, healthcare, financial, or personal data cannot always be used for training models. Synthetic data can be used as an alternative, providing the needed information without compromising privacy.

Overcoming Data Scarcity

Data scarcity is a common problem in machine learning. Sometimes, collecting enough real data is difficult or expensive. Synthetic data generation helps fill these gaps, ensuring that models have enough data to learn from.

Facilitating Data Sharing

Sharing real data between organizations can be risky due to privacy and security issues. Synthetic data can be shared more freely, allowing for better collaboration and innovation across different fields.

Applications of Synthetic Data

Healthcare

In healthcare, synthetic data can be used to create patient records for research and training purposes. This helps in developing better diagnostic tools and treatments without compromising patient privacy.

Autonomous Vehicles

Synthetic data is crucial for training autonomous vehicles. Real-world data on driving scenarios is limited and sometimes dangerous to collect. Synthetic data can simulate various driving conditions, helping improve the safety and performance of autonomous systems.

Finance

In finance, synthetic data can be used to simulate market conditions and test trading algorithms. This helps in developing robust financial models without risking real money.

Marketing

Synthetic data can help in creating and testing marketing strategies. By simulating customer behaviors and preferences, businesses can develop more effective campaigns.

Robotics

Robotics applications use synthetic data to train robots in various tasks, from manufacturing to home assistance. This speeds up the development process and improves the robots’ abilities.

Why Use Synthetic Data?

Data Privacy and Security

One of the biggest challenges in fields like healthcare and finance is ensuring the privacy and security of sensitive information. Imagine a researcher needing patient records to study a new treatment. Sharing real patient data is risky because it could expose personal information. Synthetic data offers a solution by creating artificial records that mimic real ones without using any actual patient details. This approach allows researchers to use data that mimics real-world behavior without risking privacy. It’s like using a look-alike in a movie scene to avoid putting the real star in danger.

Cost Efficiency

Collecting and labeling real-world data can be incredibly expensive and time-consuming. Think about the effort and cost involved in gathering financial transaction data for fraud detection. You’d have to get permission, make sure the data is secure, and manually label each transaction as either legitimate or fraudulent. With synthetic data, you can create it as needed, customized to your requirements, and avoid much of that extra cost. It’s like having a 3D printer that makes any tool you need instantly, without the hassle of ordering from far away.

Overcoming Data Scarcity

Finally, overcoming data scarcity is crucial in fields where real data is hard to gather. Autonomous vehicle development is a perfect example. Real-world driving data, especially for rare events like sudden road closures or extreme weather, is limited. Synthetic data allows developers to create endless variations of these scenarios, ensuring the AI learns how to handle them. It’s similar to how pilots use flight simulators to practice handling emergencies they might rarely encounter in real life.

Methods of Generating Synthetic Data

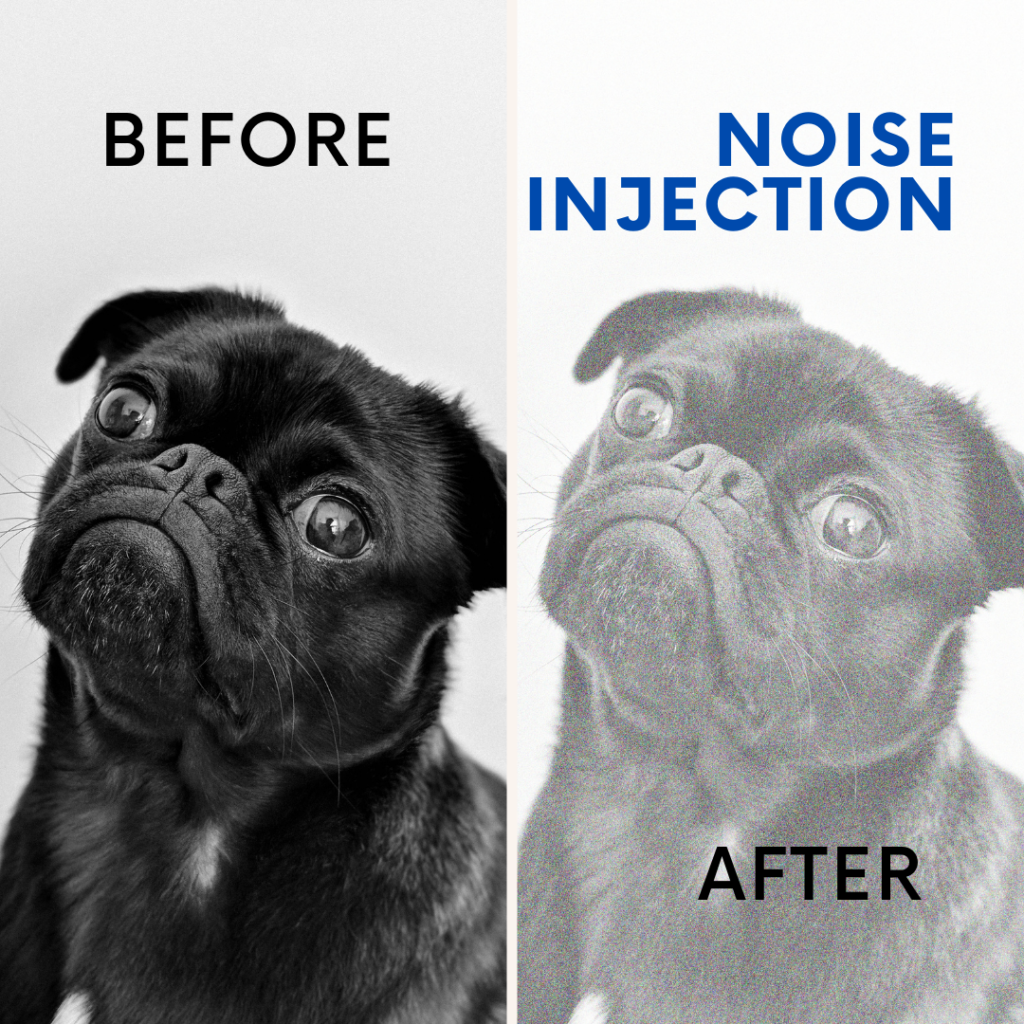

Noise Injection

What is Noise Injection?

Noise injection is a technique used to add random variations or “noise” to existing data. This creates new, slightly different samples that still resemble the original data but with some variations. This is useful for making synthetic data that mimics real data in a realistic way but with enough differences to test various scenarios.

How Does It Work?

Imagine you have a photo of a cat, and you want to create new images that look similar but are not identical. By adding noise, you slightly change the pixel colors in the photo. These changes are small and random, so the cat still looks like a cat, but each image will have a unique appearance.

Example: Adding Noise to an Image

Let’s say you want to add noise to an image using Python. Here’s a simple example of how you can do it:

1. Load the Image: First, you need to load the image you want to work with. You use a library called cv2 (part of OpenCV) to read the image from your computer.

import cv2

image = cv2.imread('image.jpg')

2. Generate Noise: Next, you create random noise. This noise is a matrix (a grid of numbers) where each number represents a random change to the image’s pixel values. The np.random.normal function generates this noise from a normal distribution with mean 0; the standard deviation (in this case, 25) controls how strong the noise is. The noise is kept as floating-point values because roughly half of it is negative, and casting negative numbers to np.uint8 would wrap them around to large positive values.

import numpy as np

noise = np.random.normal(0, 25, image.shape)

3. Add Noise to the Image: You then combine the original image with the noise. The sum is computed in floating point, clipped to the valid pixel range of 0 to 255 with np.clip, and converted back to np.uint8. This process creates a new image with the noise added.

noisy_image = np.clip(image.astype(np.float32) + noise, 0, 255).astype(np.uint8)

4. Save or Display the Noisy Image: Finally, you can save the new noisy image or display it. This new image will look similar to the original but with added variations due to the noise.

cv2.imwrite('noisy_image.jpg', noisy_image)

In Simple Terms

- Noise Injection: Adding random changes to data to make it look a bit different but still recognizable.

- Image Example: Start with a photo, add random changes to the pixel colors, and you get a new image that’s similar but not the same as the original.

This technique is helpful in various fields, like training machine learning models, to ensure they can handle slightly different versions of the same data.
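The same idea can be wrapped in a small reusable function. The sketch below is only an illustration: the helper name add_gaussian_noise and its std and seed parameters are assumptions made for this example, and a flat gray array stands in for a loaded photo so the snippet runs without an image file.

```python
import numpy as np

def add_gaussian_noise(image, std=25.0, seed=None):
    """Return a noisy copy of a uint8 image array (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    # Work in float so negative noise values do not wrap around in uint8.
    noisy = image.astype(np.float32) + rng.normal(0.0, std, image.shape)
    # Clip back to the valid pixel range before converting to uint8.
    return np.clip(noisy, 0, 255).astype(np.uint8)

# Stand-in for a loaded photo: a flat mid-gray 64x64 RGB image.
image = np.full((64, 64, 3), 128, dtype=np.uint8)
noisy_image = add_gaussian_noise(image, std=25.0, seed=0)
print(noisy_image.shape, noisy_image.dtype)
```

Calling the function several times with different seeds gives several distinct noisy copies of the same source image.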

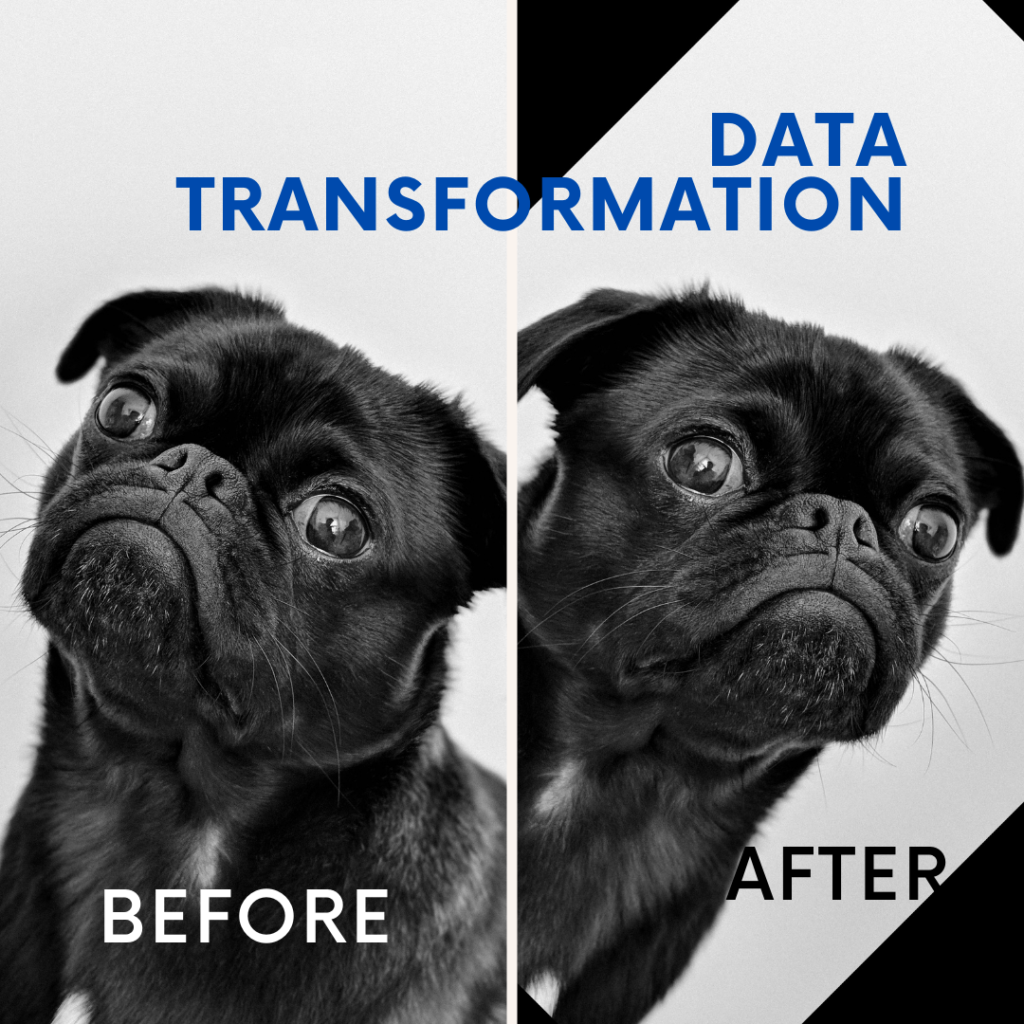

Data Transformation

What is Data Transformation?

Data transformation involves changing your data in various ways to create new versions of it. This can include actions like scaling, rotating, or flipping data. The goal is to make the data more varied and diverse. For example, if you have a set of images, you can rotate or flip them to create new images that can be used for training a model. This helps the model learn to recognize objects from different angles or orientations.

How Does It Work?

Let’s look at how you can rotate and flip an image using Python. This example will help you understand how data transformation works:

Example: Rotating and Flipping Images

1. Load the Image: First, you need to load the image you want to transform. You use the cv2.imread function to read the image from your computer.

import cv2

image = cv2.imread('image.jpg')

2. Rotate the Image: To rotate the image, you need to:

- Find the Center: Determine the center of the image so you can rotate it around that point. This is done by dividing the image’s width and height by 2.

center = (image.shape[1] // 2, image.shape[0] // 2)

- Create a Rotation Matrix: This matrix helps in performing the rotation. You specify the center, the angle of rotation (45 degrees in this case), and the scale (1.0 means no scaling).

rotation_matrix = cv2.getRotationMatrix2D(center, 45, 1.0)

- Apply the Rotation: Use the rotation matrix to rotate the image. The cv2.warpAffine function applies the rotation to the image.

rotated_image = cv2.warpAffine(image, rotation_matrix, (image.shape[1], image.shape[0]))

3. Flip the Image: After rotating, you can flip the image horizontally. The cv2.flip function is used for this purpose. The parameter 1 indicates horizontal flipping.

flipped_image = cv2.flip(rotated_image, 1)

4. Save or Display the Transformed Image: Finally, save or display the new transformed image. This image will be rotated and flipped compared to the original.

cv2.imwrite('transformed_image.jpg', flipped_image)

In Simple Terms

- Data Transformation: Changing your data in different ways to create new versions of it.

- Image Example: Start with a photo, rotate it by 45 degrees, then flip it horizontally, and you get a new version of the photo that looks different but is still based on the original.

Data transformation helps in creating a more diverse dataset, which can improve the performance of machine learning models by making them more robust to variations in the data.
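Flips and quarter-turn rotations can also be done with NumPy alone, which is handy when images are already loaded as arrays. This is a minimal sketch rather than a full augmentation pipeline; the helper name augment is made up for this example, and a tiny 2x2 array is used so the effect of each transform is easy to read.

```python
import numpy as np

def augment(image):
    """Return simple transformed copies of an image array (hypothetical helper)."""
    return {
        "flip_horizontal": np.fliplr(image),  # mirror left-right
        "flip_vertical": np.flipud(image),    # mirror top-bottom
        "rotate_90": np.rot90(image, k=1),    # 90 degrees counterclockwise
        "rotate_180": np.rot90(image, k=2),   # 180 degrees
    }

image = np.array([[1, 2],
                  [3, 4]])
variants = augment(image)
for name, variant in variants.items():
    print(name, variant.tolist())
```

Each original image yields four extra training samples here. Whether a given transform is appropriate depends on the task; for example, flipping images of handwritten digits can change their meaning.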

Generative Models

What are Generative Models?

Generative models are advanced techniques in machine learning that create new data from scratch. They learn from existing data and then generate new, similar data. There are two popular types of generative models: Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs).

VAEs (Variational Autoencoders)

How Do VAEs Work?

VAEs are another type of generative model. They work by encoding the input data into a smaller, compressed representation called the latent space, and then decoding it back to create new data. This process involves two main steps:

- Encoder: Compresses the input data into the latent space.

- Decoder: Reconstructs the data from the latent space.

Let’s explore both kinds of generative models, GANs and VAEs, in detail with examples.

Generative Adversarial Networks (GANs)

What are GANs?

Generative Adversarial Networks (GANs) are a type of AI model that creates new data which looks similar to real data. A GAN consists of two neural networks that behave like two players in a contest, each improving its skills by competing against the other.


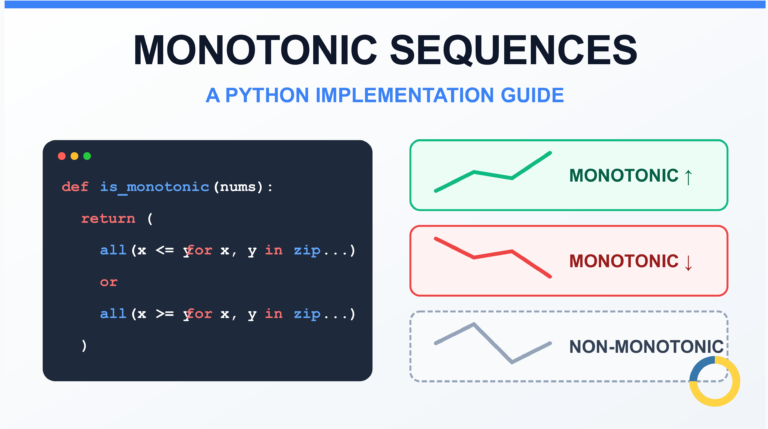


The Two Main Parts of GANs

- Generator

  - What it Does: The generator’s job is to create fake data. Think of it as an artist trying to draw or paint something that looks like a real piece of art.

  - How it Works: It starts with random noise (like an empty canvas) and tries to generate data that looks realistic, such as images of faces or landscapes.

- Discriminator

  - What it Does: The discriminator’s job is to tell apart real data from the fake data created by the generator. Imagine it as an art critic who looks at a painting and decides if it’s a real masterpiece or a fake.

  - How it Works: It learns to recognize the differences between real data and the fake data, getting better at spotting what is genuine and what is not.

How They Work Together

- Training Together: Both the generator and the discriminator are trained at the same time in a back-and-forth process.

- Generator’s Goal: The generator aims to make its fake data look so realistic that the discriminator cannot tell it’s fake.

- Discriminator’s Goal: The discriminator aims to become better at spotting fake data and distinguishing it from real data.

The Competitive Process

- Generator vs. Discriminator: They are in a kind of competition. As the generator improves at making realistic data, the discriminator improves at identifying what is fake.

- Learning from Each Other: The generator learns from the feedback it gets from the discriminator about how to make better data. In turn, the discriminator learns from the generator’s improvements on how to spot better fakes.

In simple terms, GANs are like a pair of competitors where one is trying to create convincing fakes (generator) and the other is trying to detect those fakes (discriminator). They push each other to improve, leading to better and more realistic synthetic data over time.
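The competition can be made concrete with the loss each side tries to minimize. The sketch below uses plain NumPy and made-up discriminator outputs (the numbers are illustrative assumptions, not results from a trained model) to show how binary cross-entropy scores the discriminator and the generator on the same predictions.

```python
import numpy as np

def binary_cross_entropy(labels, probs, eps=1e-7):
    """Mean binary cross-entropy between 0/1 labels and predicted probabilities."""
    probs = np.clip(probs, eps, 1 - eps)  # avoid log(0)
    return float(-np.mean(labels * np.log(probs) + (1 - labels) * np.log(1 - probs)))

# Hypothetical discriminator outputs: probability that each sample is real.
d_on_real = np.array([0.9, 0.8, 0.95])
d_on_fake = np.array([0.2, 0.1, 0.3])

# Discriminator objective: real samples are labeled 1, fake samples 0.
d_loss = (binary_cross_entropy(np.ones(3), d_on_real)
          + binary_cross_entropy(np.zeros(3), d_on_fake))

# Generator objective: it wants the discriminator to output 1 on its fakes.
g_loss = binary_cross_entropy(np.ones(3), d_on_fake)

print(f"discriminator loss: {d_loss:.3f}, generator loss: {g_loss:.3f}")
```

In this made-up situation the discriminator is winning, so its loss is low and the generator's loss is high. As the generator improves, the discriminator's outputs on fakes move toward 1 and the balance shifts.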

Example Code Explanation

Here’s a simple example using TensorFlow and Keras to set up a GAN:

import tensorflow as tf

from tensorflow.keras.layers import Dense, Reshape, Flatten, LeakyReLU, Dropout

from tensorflow.keras.models import Sequential

# Build the generator

def build_generator():

    model = Sequential()

    model.add(Dense(128, input_dim=100)) # Start with a dense layer; input is a random noise vector of 100 dimensions

    model.add(LeakyReLU(alpha=0.01)) # Apply a LeakyReLU activation function for better training

    model.add(Dense(784, activation='tanh')) # Output layer to produce an image of 784 pixels (28x28)

    model.add(Reshape((28, 28, 1))) # Reshape the output to 28x28 pixels with a single color channel

    return model

# Build the discriminator

def build_discriminator():

    model = Sequential()

    model.add(Flatten(input_shape=(28, 28, 1))) # Flatten the input image (28x28 pixels) into a 1D vector

    model.add(Dense(128)) # Dense layer to process the flattened data

    model.add(LeakyReLU(alpha=0.01)) # Apply a LeakyReLU activation function

    model.add(Dense(1, activation='sigmoid')) # Output layer to classify the image as real or fake

    return model

# Instantiate and compile the discriminator

discriminator = build_discriminator()

discriminator.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

# Instantiate the generator

generator = build_generator()

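# Freeze the discriminator inside the combined model so that training the GAN updates only the generator

discriminator.trainable = False

# Create the GAN by stacking the generator and discriminator

gan = Sequential()

gan.add(generator) # Add the generator

gan.add(discriminator) # Add the discriminator (frozen in this combined model)

gan.compile(loss='binary_crossentropy', optimizer='adam') # Compile the GAN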

Step-By-Step Explanation

Importing Libraries

import tensorflow as tf

from tensorflow.keras.layers import Dense, Reshape, Flatten, LeakyReLU, Dropout

from tensorflow.keras.models import Sequential

- TensorFlow: An open-source machine learning library used for building and training neural networks.

- Layers:

- Dense: Fully connected layer.

- Reshape: Changes the shape of the data.

- Flatten: Converts multi-dimensional data to a 1D vector.

- LeakyReLU: Activation function that allows a small gradient when the unit is not active.

- Dropout: Regularization technique to prevent overfitting.

- Sequential: A type of model where layers are stacked one after another.

Building the Generator

build_generator(): This function creates the generator model.

- Dense Layer: The generator starts with a dense layer that takes random noise (100 dimensions) as input.

- LeakyReLU: This activation function helps the generator learn better by allowing some negative values to pass through.

- Second Dense Layer: Expands the data to match the size of the image (784 pixels).

- Reshape: Converts the output into a 28×28 pixel image with a single color channel.

def build_generator():

    model = Sequential()

    model.add(Dense(128, input_dim=100)) # Start with a dense layer; input is a random noise vector of 100 dimensions

    model.add(LeakyReLU(alpha=0.01)) # Apply a LeakyReLU activation function for better training

    model.add(Dense(784, activation='tanh')) # Output layer to produce an image of 784 pixels (28x28)

    model.add(Reshape((28, 28, 1))) # Reshape the output to 28x28 pixels with a single color channel

    return model

build_generator(): Function that creates a generator model.

- Sequential(): Initializes the model.

- Dense(128, input_dim=100):

- 128: Number of neurons in this layer.

- input_dim=100: The input is a vector of 100 random values (noise).

- LeakyReLU(alpha=0.01): Activation function to introduce non-linearity and avoid the dying ReLU problem.

- Dense(784, activation='tanh'):

- 784: Output neurons corresponding to 784 pixels in the image (28×28).

- activation='tanh': Activation function that outputs values between -1 and 1.

- Reshape((28, 28, 1)): Converts the 784-dimensional vector to a 28×28 pixel image with 1 color channel (grayscale).
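To see how the data changes shape as it moves through these layers, the forward pass can be imitated in NumPy. This is only a shape walkthrough under stated assumptions: the weights are random stand-ins rather than trained values, and the variable names are chosen for this example.

```python
import numpy as np

rng = np.random.default_rng(0)

def leaky_relu(x, alpha=0.01):
    """Pass positive values through; scale negative values by alpha."""
    return np.where(x > 0, x, alpha * x)

# Randomly initialized weights stand in for trained ones (illustration only).
w1, b1 = rng.normal(0, 0.05, (100, 128)), np.zeros(128)
w2, b2 = rng.normal(0, 0.05, (128, 784)), np.zeros(784)

noise = rng.normal(0, 1, (4, 100))      # batch of 4 noise vectors
hidden = leaky_relu(noise @ w1 + b1)    # Dense(128) + LeakyReLU -> (4, 128)
flat = np.tanh(hidden @ w2 + b2)        # Dense(784, tanh)       -> (4, 784)
images = flat.reshape(-1, 28, 28, 1)    # Reshape                -> (4, 28, 28, 1)

print(noise.shape, hidden.shape, flat.shape, images.shape)
```

Because the last activation is tanh, every output pixel lies between -1 and 1, so real training images are usually scaled to the same range before being shown to the discriminator.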

Building the Discriminator

build_discriminator(): This function creates the discriminator model.

- Flatten Layer: Flattens the 28×28 pixel image into a single vector.

- Dense Layer: Processes this vector to help the discriminator make a decision.

- LeakyReLU: Helps the discriminator learn better by allowing some negative values.

- Output Dense Layer: Uses a sigmoid activation function to classify the image as either real (1) or fake (0).

def build_discriminator():

    model = Sequential()

    model.add(Flatten(input_shape=(28, 28, 1))) # Flatten the input image (28x28 pixels) into a 1D vector

    model.add(Dense(128)) # Dense layer to process the flattened data

    model.add(LeakyReLU(alpha=0.01)) # Apply a LeakyReLU activation function

    model.add(Dense(1, activation='sigmoid')) # Output layer to classify the image as real or fake

    return model

build_discriminator(): Function that creates a discriminator model.

- Sequential(): Initializes the model.

- Flatten(input_shape=(28, 28, 1)): Converts the 28×28 pixel image into a 1D vector.

- Dense(128): Dense layer with 128 neurons to process the flattened vector.

- LeakyReLU(alpha=0.01): Activation function for non-linearity.

- Dense(1, activation='sigmoid'):

- 1: Output neuron to classify the image.

- activation='sigmoid': Outputs a value between 0 and 1, representing the probability that the image is real.

Compiling the Discriminator

discriminator.compile(): Sets up the discriminator to learn by comparing its predictions to actual labels using binary cross-entropy loss. The Adam optimizer adjusts the weights during training.

discriminator = build_discriminator()

discriminator.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

- discriminator = build_discriminator(): Creates an instance of the discriminator model.

- discriminator.compile():

- loss='binary_crossentropy': Loss function for binary classification (real or fake).

- optimizer='adam': Optimization algorithm for updating weights.

- metrics=['accuracy']: Metrics to monitor during training.

Building the GAN

generator = build_generator()

- generator = build_generator(): Creates an instance of the generator model.

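discriminator.trainable = False # Freeze the discriminator inside the combined model

gan = Sequential()

gan.add(generator) # Add the generator

gan.add(discriminator) # Add the discriminator

gan.compile(loss='binary_crossentropy', optimizer='adam') # Compile the GAN

- discriminator.trainable = False: Freezes the discriminator’s weights within the combined model, so that training the GAN updates only the generator. The discriminator was compiled earlier, so it can still be trained on its own.

- gan = Sequential(): Initializes the GAN model.

- gan.add(generator): Adds the generator model to the GAN.

- gan.add(discriminator): Adds the discriminator model to the GAN.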

- gan.compile():

- loss='binary_crossentropy': Loss function used to train the GAN, which is typically binary cross-entropy.

- optimizer='adam': Optimization algorithm for updating the GAN’s weights.

By understanding and using GANs, you can generate synthetic data that helps in various applications like training machine learning models, creating realistic simulations, and more.
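The code above builds and compiles the models but does not train them. Training alternates between two kinds of batches, and the sketch below shows how those batches and their labels could be assembled with NumPy. The helper names discriminator_batch and generator_targets are hypothetical, and random arrays stand in for real images and generator output.

```python
import numpy as np

def discriminator_batch(real_images, fake_images):
    """Stack real and fake samples with labels 1 (real) and 0 (fake)."""
    x = np.concatenate([real_images, fake_images], axis=0)
    y = np.concatenate([np.ones(len(real_images)), np.zeros(len(fake_images))])
    return x, y

def generator_targets(batch_size):
    """Targets for a generator update: the generator wants fakes scored as real."""
    return np.ones(batch_size)

rng = np.random.default_rng(0)
real = rng.uniform(-1, 1, (8, 28, 28, 1))  # placeholder for real images scaled to [-1, 1]
fake = rng.uniform(-1, 1, (8, 28, 28, 1))  # placeholder for generator output

x, y = discriminator_batch(real, fake)
print(x.shape, y.tolist())
print(generator_targets(8).tolist())
```

In a Keras setup like the one above, one training iteration would typically pass x and y to discriminator.train_on_batch, then pass a fresh batch of noise together with generator_targets(batch_size) to gan.train_on_batch, and repeat. Treat that as an outline of the usual pattern rather than a tuned training loop.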

Variational Autoencoders (VAEs)

Overview of VAEs

Variational Autoencoders (VAEs) are a type of machine learning model used to generate new data that resembles real data. They work in two main steps:

- Encoding: VAEs take input data (like images) and convert it into a different space called the latent space. This latent space is a compressed version of the data, capturing its essential features in a smaller, more manageable form.

- Decoding: VAEs then reconstruct the original data from the latent space. The goal is to produce new samples that are similar to the original data but with some variations.

This process allows VAEs to generate synthetic data that closely mimics real-world data.

Training VAEs

Training a VAE involves two main goals:

- Minimizing Reconstruction Loss: Ensures that the data reconstructed from the latent space closely resembles the original input data.

- Minimizing KL Divergence: Ensures that the distribution of the latent space is close to a standard normal distribution. This helps in creating smooth and meaningful variations in the generated data.
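When the encoder outputs a mean and a log variance for each latent dimension, the KL divergence to a standard normal distribution has a simple closed form. The NumPy sketch below evaluates it for two hand-picked cases; the function name kl_to_standard_normal is an assumption made for this example.

```python
import numpy as np

def kl_to_standard_normal(z_mean, z_log_var):
    """KL divergence from N(z_mean, exp(z_log_var)) to N(0, 1), summed over latent dims."""
    return -0.5 * np.sum(1 + z_log_var - np.square(z_mean) - np.exp(z_log_var), axis=-1)

# Case 1: the encoder already outputs a standard normal (mean 0, variance 1).
matched = kl_to_standard_normal(np.zeros((1, 2)), np.zeros((1, 2)))

# Case 2: the mean is shifted to 1 in both latent dimensions.
shifted = kl_to_standard_normal(np.ones((1, 2)), np.zeros((1, 2)))

print(matched, shifted)
```

The penalty is zero when the latent distribution already matches the standard normal and grows as the mean or variance drifts away from it, which is what pulls the latent space toward a smooth, well-behaved shape.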

Example Code Explanation

Here’s a step-by-step explanation of the VAE code using TensorFlow and Keras:

import tensorflow as tf

from tensorflow.keras.layers import Input, Dense, Lambda, Flatten, Reshape

from tensorflow.keras.models import Model

from tensorflow.keras.losses import binary_crossentropy

from tensorflow.keras import backend as K

# Function to sample from the latent space

def sampling(args):

    z_mean, z_log_var = args

    batch = K.shape(z_mean)[0] # Number of samples in the batch

    dim = K.int_shape(z_mean)[1] # Dimension of the latent space

    epsilon = K.random_normal(shape=(batch, dim)) # Random noise

    return z_mean + K.exp(0.5 * z_log_var) * epsilon # Reparameterization trick: mean + standard deviation * noise

# Define the input shape of the data (28x28 grayscale images)

input_shape = (28, 28, 1)

inputs = Input(shape=input_shape)

# Encoder part: converts input data to latent space

x = Flatten()(inputs) # Flatten the image into a 1D vector

x = Dense(128, activation='relu')(x) # Dense layer with ReLU activation

z_mean = Dense(2)(x) # Mean of the latent space

z_log_var = Dense(2)(x) # Log variance of the latent space

# Sample from the latent space

z = Lambda(sampling, output_shape=(2,))([z_mean, z_log_var])

encoder = Model(inputs, [z_mean, z_log_var, z])

# Decoder part: converts latent space back to image

latent_inputs = Input(shape=(2,))

x = Dense(128, activation='relu')(latent_inputs) # Dense layer with ReLU activation

x = Dense(28 * 28, activation='sigmoid')(x) # Dense layer to output an image

outputs = Reshape((28, 28, 1))(x) # Reshape to the original image shape

decoder = Model(latent_inputs, outputs)

# Combine encoder and decoder to form the VAE

outputs = decoder(encoder(inputs)[2])

vae = Model(inputs, outputs)

# Compute the reconstruction loss

reconstruction_loss = binary_crossentropy(K.flatten(inputs), K.flatten(outputs))

reconstruction_loss *= 28 * 28 # Scale the loss to the size of the image

# Compute the KL divergence

kl_loss = 1 + z_log_var - K.square(z_mean) - K.exp(z_log_var)

kl_loss = K.sum(kl_loss, axis=-1) # Sum across dimensions

kl_loss *= -0.5 # Scale the loss

# Combine both losses

vae_loss = K.mean(reconstruction_loss + kl_loss)

vae.add_loss(vae_loss) # Add the loss to the VAE model

vae.compile(optimizer='adam') # Compile the model with Adam optimizer

This code defines and compiles a Variational Autoencoder (VAE) using TensorFlow and Keras; it does not yet train it. VAEs are generative models that learn to encode input data into a latent space and then decode it back to the original data format, enabling the generation of new data samples. Here’s a detailed step-by-step explanation of the code:

Step-by-Step Explanation of the code

1. Import Libraries

import tensorflow as tf

from tensorflow.keras.layers import Input, Dense, Lambda, Flatten, Reshape

from tensorflow.keras.models import Model

from tensorflow.keras.losses import binary_crossentropy

from tensorflow.keras import backend as K

- tensorflow: Library for building and training machine learning models.

- tensorflow.keras.layers: Provides layers used to construct the neural network.

- tensorflow.keras.models: Contains functions to create and manage models.

- tensorflow.keras.losses: Provides loss functions for training models.

- tensorflow.keras.backend: Offers backend functions to perform tensor operations.

2. Sampling Function

def sampling(args):

    z_mean, z_log_var = args

    batch = K.shape(z_mean)[0] # Number of samples in the batch

    dim = K.int_shape(z_mean)[1] # Dimension of the latent space

    epsilon = K.random_normal(shape=(batch, dim)) # Random noise

    return z_mean + K.exp(0.5 * z_log_var) * epsilon # Reparameterization trick: mean + standard deviation * noise

- Purpose: Samples from the latent space using the reparameterization trick.

- Inputs: z_mean and z_log_var are the mean and log variance of the latent space distribution.

- Process:

  - Batch Size: Number of samples in the current batch (batch).

  - Latent Dimensionality: Dimension of the latent space (dim).

  - Noise: Generate random noise (epsilon) to create variability.

- Output: Compute and return a sample from the latent space using the formula z_mean + K.exp(0.5 * z_log_var) * epsilon.
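The reparameterization formula can be checked numerically outside of Keras. The NumPy sketch below draws many samples for a chosen mean and log variance and confirms that they have the expected mean and standard deviation. The function name sample_latent and the specific numbers are assumptions made for this illustration.

```python
import numpy as np

def sample_latent(z_mean, z_log_var, rng):
    """Reparameterization trick: z = mean + std * epsilon, with epsilon ~ N(0, 1)."""
    epsilon = rng.standard_normal(z_mean.shape)
    return z_mean + np.exp(0.5 * z_log_var) * epsilon

rng = np.random.default_rng(0)
z_mean = np.full((100_000, 2), 2.0)
z_log_var = np.full((100_000, 2), np.log(0.25))  # variance 0.25, so std is 0.5

z = sample_latent(z_mean, z_log_var, rng)
print(z.mean(), z.std())  # both should be close to 2.0 and 0.5
```

Multiplying by exp(0.5 * z_log_var) converts the log variance into a standard deviation. Keeping the randomness in epsilon, separate from the mean and variance, is what lets gradients flow through z_mean and z_log_var during training.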

3. Encoder Network

input_shape = (28, 28, 1)

inputs = Input(shape=input_shape) # Input layer for 28x28 grayscale images

x = Flatten()(inputs) # Flatten the 2D image into a 1D vector

x = Dense(128, activation='relu')(x) # Dense layer with ReLU activation

z_mean = Dense(2)(x) # Dense layer to output the mean of the latent space

z_log_var = Dense(2)(x) # Dense layer to output the log variance of the latent space

z = Lambda(sampling, output_shape=(2,))([z_mean, z_log_var]) # Sample from latent space

encoder = Model(inputs, [z_mean, z_log_var, z]) # Define the encoder model

- Input Layer: Accepts 28×28 grayscale images.

- Flatten Layer: Converts the 2D image into a 1D vector.

- Dense Layers:

- First Dense Layer: Reduces dimensionality with ReLU activation.

- Mean and Log Variance: Outputs for the latent space.

- Sampling Layer: Uses the sampling function to generate samples from the latent space.

- Encoder Model: Defines the encoder that outputs z_mean, z_log_var, and the sampled latent vector z.

4. Decoder Network

latent_inputs = Input(shape=(2,)) # Input layer for the latent space

x = Dense(128, activation='relu')(latent_inputs) # Dense layer with ReLU activation

x = Dense(28 * 28, activation='sigmoid')(x) # Dense layer to produce an image

outputs = Reshape((28, 28, 1))(x) # Reshape output to the original image shape

decoder = Model(latent_inputs, outputs) # Define the decoder model

- Input Layer: Accepts latent vectors with a dimension of 2.

- Dense Layers:

- First Dense Layer: Expands the latent vector with ReLU activation.

- Second Dense Layer: Produces a flattened image with sigmoid activation.

- Reshape Layer: Converts the flattened image back to the original shape (28x28x1).

- Decoder Model: Defines the decoder that reconstructs images from the latent space.

5. Combine Encoder and Decoder

outputs = decoder(encoder(inputs)[2]) # Pass input through encoder and then decoder

vae = Model(inputs, outputs) # Define the VAE model

- Forward Pass: The inputs are first encoded to the latent space, then decoded back to the original image space.

- VAE Model: Combines encoder and decoder to form the full VAE.

6. Compute Loss Functions

Reconstruction Loss

reconstruction_loss = binary_crossentropy(K.flatten(inputs), K.flatten(outputs))

reconstruction_loss *= 28 * 28 # Scale the loss to the size of the image

- Purpose: Measures how well the reconstructed image matches the original image.

- Binary Crossentropy: Used because the input pixel values are assumed to be normalized to the range 0 to 1, which matches the range of the decoder’s sigmoid output.

- Scaling: The loss is scaled by the total number of pixels (28×28).
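The effect of the scaling is easier to see with numbers. The NumPy sketch below computes a per-image variant of this loss (mean binary cross-entropy over pixels, multiplied by the pixel count) for two extreme reconstructions. The function name and test images are assumptions for this example, and unlike the Keras snippet, which flattens the whole batch at once, it returns one value per image.

```python
import numpy as np

def reconstruction_loss(original, reconstructed, eps=1e-7):
    """Per-image binary cross-entropy, scaled from a per-pixel mean to a per-image sum."""
    x = original.reshape(len(original), -1)
    x_hat = np.clip(reconstructed.reshape(len(reconstructed), -1), eps, 1 - eps)
    bce = -(x * np.log(x_hat) + (1 - x) * np.log(1 - x_hat))
    return bce.mean(axis=1) * x.shape[1]  # mean over pixels times 784

rng = np.random.default_rng(0)
original = rng.integers(0, 2, (3, 28, 28, 1)).astype(np.float64)  # random black/white images

perfect = reconstruction_loss(original, original)
uninformative = reconstruction_loss(original, np.full_like(original, 0.5))
print(perfect, uninformative)
```

A perfect reconstruction gives a loss near zero, while a decoder that outputs 0.5 for every pixel gives 784 times ln 2 per image. Scaling by the pixel count keeps the reconstruction term on a comparable footing with the KL term, which is summed over latent dimensions.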

KL Divergence

kl_loss = 1 + z_log_var - K.square(z_mean) - K.exp(z_log_var)

kl_loss = K.sum(kl_loss, axis=-1) # Sum across dimensions

kl_loss *= -0.5 # Scale the loss

- Purpose: Ensures that the latent space follows a standard normal distribution.

- Formula: Computes the KL divergence between the learned latent space distribution and a standard normal distribution.

- Scaling: The summed term is multiplied by -0.5, which turns it into the KL divergence (a non-negative value).

Combine Losses

vae_loss = K.mean(reconstruction_loss + kl_loss) # Mean of the combined losses

vae.add_loss(vae_loss) # Add the combined loss to the VAE model

vae.compile(optimizer='adam') # Compile the model with Adam optimizer

- Combine: Adds both reconstruction and KL divergence losses.

- Compile: Configures the VAE model for training with the Adam optimizer.

This code sets up a Variational Autoencoder (VAE) using TensorFlow and Keras. It includes:

- Sampling Function: Reparameterization trick for generating samples from the latent space.

- Encoder: Encodes input images into a latent space.

- Decoder: Reconstructs images from the latent space.

- Loss Functions: Computes reconstruction loss and KL divergence to train the VAE.

- Model Definition: Combines encoder and decoder into a single VAE model and compiles it for training.

VAEs are powerful tools for generating synthetic data that can be used to train machine learning models, create realistic simulations, and explore data variations.

Evaluating Synthetic Data

Evaluating synthetic data is crucial to ensure that it is realistic and useful for your needs. The three methods below are commonly used, and they work best in combination:

1. Statistical Similarity

What It Is:

Statistical similarity means checking if the synthetic data looks like the real data in terms of its patterns and characteristics. It’s about comparing the numbers and distributions of both datasets to see if they match.

Why It Matters:

If synthetic data mirrors real data closely, it means the patterns in the synthetic data are similar to those in real-world data. This is important because it helps ensure that any model trained on this synthetic data will work well when applied to real-world problems.

How to Do It:

- Statistical Tests: Use tests like the Kolmogorov-Smirnov test to compare two sets of data. This test compares the cumulative distributions of the two samples and measures the largest gap between them.

- Similarity Metrics: Calculate and compare statistics like the mean (average value) and variance (how spread out the values are) of both datasets. If these statistics are similar, it suggests the data is comparable.
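Both checks can be sketched in a few lines of NumPy. The example below computes the two-sample Kolmogorov-Smirnov statistic by hand and compares it for a well-matched and a poorly matched synthetic sample. The data is randomly generated for illustration, and ks_statistic is a helper written for this example; in practice a library routine such as scipy.stats.ks_2samp, which also reports a p-value, is the more common choice.

```python
import numpy as np

def ks_statistic(sample_a, sample_b):
    """Largest gap between the empirical cumulative distributions of two samples."""
    a, b = np.sort(sample_a), np.sort(sample_b)
    grid = np.concatenate([a, b])
    cdf_a = np.searchsorted(a, grid, side="right") / len(a)
    cdf_b = np.searchsorted(b, grid, side="right") / len(b)
    return float(np.max(np.abs(cdf_a - cdf_b)))

rng = np.random.default_rng(0)
real = rng.normal(50, 10, 5000)            # stand-in for a real numeric column
good_synthetic = rng.normal(50, 10, 5000)  # same distribution as the real data
poor_synthetic = rng.normal(60, 10, 5000)  # mean shifted by one standard deviation

for name, synthetic in [("good", good_synthetic), ("poor", poor_synthetic)]:
    print(name,
          "KS:", round(ks_statistic(real, synthetic), 3),
          "mean gap:", round(abs(real.mean() - synthetic.mean()), 2),
          "variance gap:", round(abs(real.var() - synthetic.var()), 2))
```

A KS statistic near 0 means the two distributions are nearly indistinguishable, and a value near 1 means they barely overlap. Each numeric column of a tabular dataset can be checked this way, though column-by-column tests do not capture relationships between columns.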

2. Model Performance

What It Is:

Model performance evaluation involves training machine learning models on both synthetic and real datasets to see how well they perform. This method helps check if the synthetic data can effectively substitute real data for training purposes.

Why It Matters:

If a model trained on synthetic data performs as well as a model trained on real data, it indicates that the synthetic data is of good quality. This is especially valuable when real data is hard to get or expensive to obtain.

How to Do It:

- Train Models: Create and train machine learning models using both synthetic data and real data.

- Compare Metrics: After training, evaluate both models on the same held-out real test data using metrics such as accuracy (how often the model is correct), precision (the share of positive predictions that are actually correct), recall (the share of actual positive cases the model identifies), and F1 score (the harmonic mean of precision and recall). Comparing these metrics helps determine if synthetic data is a good substitute.
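The comparison itself needs only a few lines of plain Python. In the sketch below, the labels and the two sets of predictions are invented for illustration; in a real evaluation they would come from a model trained on real data and a model trained on synthetic data, both scored on the same real test set.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    correct = sum(1 for t, p in pairs if t == p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}

# Hypothetical labels and predictions on the same real test set.
y_true = [1, 1, 0, 0, 1, 0, 1, 0]
pred_trained_on_real = [1, 1, 0, 0, 1, 0, 0, 0]
pred_trained_on_synthetic = [1, 0, 0, 1, 1, 0, 1, 0]

real_scores = classification_metrics(y_true, pred_trained_on_real)
synthetic_scores = classification_metrics(y_true, pred_trained_on_synthetic)
print("trained on real:     ", real_scores)
print("trained on synthetic:", synthetic_scores)
```

If the scores of the model trained on synthetic data are close to those of the model trained on real data, the synthetic dataset is likely capturing the patterns that matter for the task.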

3. Visual Inspection

What It Is:

Visual inspection involves looking at samples from the synthetic data to judge if they look realistic and meet your expectations. It’s like checking a picture to see if it looks like the real thing.

Why It Matters:

In many cases, especially with image generation or other visual data, it’s important to see if the synthetic data looks like what you expect. Good visual quality is an encouraging sign, but it is a subjective check and works best alongside the statistical and model-based checks above.

How to Do It:

- Visualization Tools: Use tools or software to plot and display images or samples from the synthetic dataset.

- Compare Visually: Look at these samples and compare them with real data samples. Check for realistic details, patterns, and overall appearance.

To make sure synthetic data is useful and realistic, you should:

- Check Statistical Similarity: Compare the numerical patterns of synthetic and real data.

- Evaluate Model Performance: Train models on both types of data and compare their performance.

- Inspect Visually: Look at samples from the synthetic data to see if they look realistic.

By using these methods together, you can build confidence that your synthetic data will be valuable for training models and performing tasks in real-world scenarios.

Example Code: Visual Inspection

Here’s a Python code example for visual inspection of generated images using matplotlib:

import numpy as np
import matplotlib.pyplot as plt

# `generator` is assumed to be an already-trained GAN generator (for example,
# a Keras model) that maps 100-dimensional noise vectors to 28x28 grayscale images.

# Generate synthetic data (e.g., images) using the pre-trained generator
noise = np.random.normal(0, 1, (25, 100))  # Create random noise as input
generated_images = generator.predict(noise)  # Generate synthetic images

# Set up a grid for plotting images
fig, axs = plt.subplots(5, 5, figsize=(5, 5), sharey=True, sharex=True)

# Loop through the grid and plot each image
for i in range(5):
    for j in range(5):
        axs[i, j].imshow(generated_images[i * 5 + j].reshape((28, 28)), cmap='gray')
        axs[i, j].axis('off')  # Hide axes for a cleaner look

# Display the plot
plt.show()

Explanation

This code snippet is used to generate and visualize synthetic images created by a Generative Adversarial Network (GAN). Here’s a detailed explanation of what each part of the code does:

Import Libraries

import numpy as np

import matplotlib.pyplot as plt

- numpy (imported as np): A library used for numerical operations in Python. Here, it is used to create random noise.

- matplotlib.pyplot (imported as plt): A library used for creating visualizations. It is used here to plot the generated images.

Generate Synthetic Data

# Generate synthetic data (e.g., images) using a pre-trained generator

noise = np.random.normal(0, 1, (25, 100)) # Create random noise as input

generated_images = generator.predict(noise) # Generate synthetic images

np.random.normal(0, 1, (25, 100)): Creates an array of random noise.

- 0 is the mean of the normal distribution.

- 1 is the standard deviation.

- (25, 100) specifies the shape of the array: 25 samples, each with 100 dimensions.

generator.predict(noise): Uses the pre-trained GAN generator model to create synthetic images from the random noise. The predict method generates images based on the input noise. The output, generated_images, is an array where each entry is a synthetic image.

Set Up a Grid for Plotting Images

# Set up a grid for plotting images

fig, axs = plt.subplots(5, 5, figsize=(5, 5), sharey=True, sharex=True)

plt.subplots(5, 5, figsize=(5, 5), sharey=True, sharex=True): Creates a 5×5 grid of subplots (25 in total) where the images will be plotted.

- 5, 5 specifies the number of rows and columns in the grid.

- figsize=(5, 5) sets the size of the entire figure to 5×5 inches.

- sharey=True and sharex=True make all subplots share the same x and y axes, which helps keep the grid layout clean.

Loop Through the Grid and Plot Each Image

# Loop through the grid and plot each image

for i in range(5):
    for j in range(5):
        axs[i, j].imshow(generated_images[i * 5 + j].reshape((28, 28)), cmap='gray')
        axs[i, j].axis('off')  # Hide axes for a cleaner look

- for i in range(5) and for j in range(5): Loop through each subplot position in the 5×5 grid.

- generated_images[i * 5 + j]: Accesses each image from the generated_images array. i * 5 + j calculates the index of the image in the array.

- reshape((28, 28)): Reshapes the flat image data into a 28×28 pixel format. This is because each generated image is originally in a flat array format.

- axs[i, j].imshow(..., cmap='gray'): Displays the image in the subplot. cmap='gray' specifies that the image should be displayed in grayscale.

- axs[i, j].axis('off'): Hides the axes of the subplot for a cleaner visual presentation. This makes the plot look like a grid of images without extra axis lines or labels.

Display the Plot

# Display the plot

plt.show()

plt.show(): Renders and displays the plot with all the subplots. This command is used to actually see the visual representation of the synthetic images.

Evaluating synthetic data is essential to confirm that it can effectively replace real data. By examining its statistical properties, assessing model performance, and checking visual quality, you can assess whether the synthetic data meets your requirements.

Challenges and Limitations in Synthetic Data Generation

When working with synthetic data, there are several challenges and limitations to consider. These issues can impact the effectiveness and usability of the synthetic data for training machine learning models. Here’s a detailed look at these challenges:

1. Quality Control

Ensuring High-Quality Synthetic Data

Quality control is crucial to make sure that synthetic data is accurate and useful for training machine learning models. If the synthetic data isn’t high quality, it could lead to poor model performance or unreliable results.

- Why It Matters: High-quality data helps train models effectively, ensuring they learn patterns that are relevant and accurate.

- How to Address It: Regularly evaluate and validate the synthetic data. This means comparing it with real data to ensure it matches in terms of statistical properties and performance metrics. You can also use model performance to gauge data quality by testing how well models trained on synthetic data perform compared to those trained on real data.

2. Bias and Fairness

Addressing Bias in Synthetic Data

Synthetic data can sometimes reflect biases present in the real data or in the data generation process itself. This can lead to unfair or skewed results in machine learning models.

- Why It Matters: Bias in data can lead to biased models, which can make unfair or inaccurate predictions. Ensuring fairness helps in creating models that perform well across different groups and scenarios.

- How to Address It: Implement strategies to ensure diversity in the generated data. This involves careful design of the data generation process to include various scenarios, demographics, and conditions. Regularly audit the data for potential biases and adjust the generation techniques accordingly.
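One simple form of the audit described above is to compare how often each group appears in the real data versus the synthetic data. The sketch below assumes each record carries a single categorical group label; the labels, the counts, and the 5-percentage-point tolerance are all made up for illustration, and representation_gaps is a hypothetical helper name.

```python
from collections import Counter


def group_shares(labels):
    """Fraction of records belonging to each group."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def representation_gaps(real_labels, synthetic_labels, tolerance=0.05):
    """Return groups whose share in the synthetic data differs from the
    real data by more than `tolerance` (positive = over-represented)."""
    real = group_shares(real_labels)
    synth = group_shares(synthetic_labels)
    gaps = {}
    for group in sorted(set(real) | set(synth)):
        gap = synth.get(group, 0.0) - real.get(group, 0.0)
        if abs(gap) > tolerance:
            gaps[group] = round(gap, 3)
    return gaps


# Made-up group labels for illustration only.
real_labels = ["A"] * 50 + ["B"] * 30 + ["C"] * 20
synthetic_labels = ["A"] * 70 + ["B"] * 28 + ["C"] * 2

flagged = representation_gaps(real_labels, synthetic_labels)
print(flagged)  # {'A': 0.2, 'C': -0.18}
```

Here group C has nearly vanished from the synthetic data, which is the kind of drift that would call for adjusting the generation process. Matching group shares is only a first check; it does not show whether the real data was biased to begin with.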

3. Computational Resources

Managing Resource Demands

Generating synthetic data can be computationally expensive, requiring significant processing power and memory, especially for complex models or large datasets.

- Why It Matters: Efficient use of computational resources helps in managing costs and reducing processing times. This is crucial for scalability and practical implementation of data generation processes.

- How to Address It: Use efficient algorithms and optimize the data generation process. Consider parallel processing and leveraging cloud computing resources to handle large-scale data generation. Balancing resource use with the quality of data generated is key to maintaining efficiency.
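One inexpensive optimization is to generate data in batches instead of all at once, so memory use stays bounded no matter how large the final dataset is. The sketch below is an assumption-laden illustration: toy_generator is a hypothetical stand-in for a trained model's predict method, and generate_in_batches is a helper name invented for this example.

```python
import numpy as np


def generate_in_batches(generate_fn, total, batch_size, latent_dim, seed=0):
    """Yield synthetic samples one batch at a time.

    Only one batch of noise and output exists at a time, provided the
    caller processes or saves each batch before requesting the next.
    """
    rng = np.random.default_rng(seed)
    produced = 0
    while produced < total:
        n = min(batch_size, total - produced)
        noise = rng.normal(0.0, 1.0, size=(n, latent_dim))
        yield generate_fn(noise)
        produced += n


def toy_generator(noise):
    # Hypothetical stand-in for a trained generator: maps each noise vector
    # to a 4-value sample. Replace with your own model's predict call.
    return np.tanh(noise[:, :4])


batch_shapes = [
    batch.shape
    for batch in generate_in_batches(toy_generator, total=10, batch_size=4, latent_dim=100)
]
print(batch_shapes)  # [(4, 4), (4, 4), (2, 4)]
```

Because each batch is independent, the same structure extends to parallel or cloud-based generation by handing different batches to different workers.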

Conclusion

Synthetic data generation is a powerful tool in machine learning. It can help address data privacy and security concerns, reduce costs, and overcome data scarcity. When it mimics real-world data closely, synthetic data supports effective training of machine learning models, which is why it pays to evaluate it for statistical similarity, model performance, and visual quality, and to watch for quality, bias, and resource issues. Using AI for data generation opens up new possibilities for creating high-quality, diverse datasets, enhancing the development and performance of machine learning models.

Frequently Asked Questions

1. What is synthetic data?

Synthetic data is artificial data created by computers to mimic real-world data. It is used for various purposes, such as training machine learning models, when real data is not available or practical to use.

2. How is synthetic data generated using Generative AI?

Generative AI uses algorithms to create synthetic data. These algorithms learn patterns from real data and then generate new, artificial data that resembles the original data. Examples of such algorithms include Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs).

3. Why is synthetic data important?

Synthetic data is important because it helps overcome challenges like data scarcity, privacy concerns, and high data collection costs. It allows organizations to create large datasets without collecting more real data, which can be expensive or sensitive.

4. What are some common applications of synthetic data?

Synthetic data is used in many fields, including healthcare for simulating patient records, autonomous vehicles for creating driving scenarios, finance for testing trading algorithms, and marketing for simulating customer behavior.

5. Are there any privacy benefits to using synthetic data?

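Yes, synthetic data can provide privacy benefits because its records are generated rather than copied from real individuals. This helps protect individuals’ privacy while still allowing for effective training and testing of machine learning models. The protection is not automatic, though: a generative model can memorize and reproduce parts of its training data, so the output should be checked for records that closely match real ones.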

6. How does synthetic data compare to real data?

Synthetic data is designed to mimic the characteristics of real data, but it is not real. While it can effectively simulate many aspects of real data, it may not capture every detail. However, it is often used to supplement real data and improve the performance of machine learning models.
