How to Implement Generative Models Using Machine Learning Principles

Introduction

Generative models are machine learning algorithms designed to generate new data samples that resemble a given dataset. They are powerful tools in artificial intelligence, enabling applications like image synthesis, text generation, and data augmentation. This section provides an overview of how to implement these models using core machine learning principles.

Overview of Generative Models

1. Definition and Significance

Generative models are a class of machine learning algorithms that learn the underlying distribution of a dataset and use it to produce new samples that resemble the data. Unlike discriminative models, which focus on classification or prediction, generative models aim to capture how the data itself is distributed, so that new instances share the characteristics of the original ones.
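To make "learning the distribution and sampling from it" concrete, here is a minimal sketch of about the simplest generative model possible: a single multivariate Gaussian fitted with NumPy. The toy dataset and every variable name are assumptions made for illustration; real generative models such as GANs or VAEs learn far richer distributions.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Toy "real" dataset: 500 two-dimensional points (made up for illustration).
real_data = rng.multivariate_normal(mean=[2.0, -1.0],
                                    cov=[[1.0, 0.6], [0.6, 2.0]],
                                    size=500)

# "Training": estimate the parameters of the assumed data distribution.
mu_hat = real_data.mean(axis=0)
cov_hat = np.cov(real_data, rowvar=False)

# "Generation": draw brand-new samples from the fitted distribution.
new_samples = rng.multivariate_normal(mean=mu_hat, cov=cov_hat, size=5)
print(new_samples)
```

The same two phases, estimating a distribution and then sampling from it, carry over to deep generative models, where a neural network replaces the mean and covariance.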

Significance:

- Data Creation: Generative models can create new, synthetic data that is similar to real-world data. This is useful for applications such as data augmentation, where additional training data is needed to improve model performance.

- Creativity and Innovation: These models enable the generation of new content, including images, text, and music, fostering creativity and innovation in fields like art, design, and entertainment.

- Understanding Data: They help in understanding and visualizing complex data distributions, which can be valuable for exploratory data analysis and insights.

2. Role in Machine Learning and AI

Generative models play a crucial role in various aspects of machine learning and artificial intelligence:

- Data Generation: They are used to create synthetic datasets for training and testing models. This can be particularly valuable when dealing with scarce or sensitive data. For example, generating synthetic medical images to enhance diagnostic models.

- Feature Learning: Generative models can learn and extract features from data that are useful for downstream tasks. For instance, autoencoders can learn compact representations of data, which can be used for classification or clustering.

- Anomaly Detection: By learning the normal data distribution, generative models can identify anomalies or outliers. For example, a VAE trained on normal data tends to reconstruct unusual inputs poorly, so a high reconstruction error can be used to flag them.

- Simulation and Forecasting: They are employed in simulations and forecasting by generating realistic scenarios based on historical data. This includes predicting future trends or simulating different conditions for testing purposes.

- Enhancing Creativity: Generative models are widely used in creative applications, such as generating artwork, composing music, and writing text. They assist in exploring new ideas and producing content that may not be easily conceived by humans.

Generative models are powerful tools in machine learning and AI, offering the ability to generate new data, enhance creativity, and gain insights into complex data distributions. Their ability to create synthetic data and understand underlying patterns makes them invaluable for a wide range of applications and research areas.
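As a rough illustration of the anomaly-detection role described above, the sketch below fits a simple Gaussian density to "normal" data and flags points that are unusually far from it. The data, the 99th-percentile threshold, and the helper name `mahalanobis_sq` are all assumptions for this example; a VAE or another deep model would play the role of the density model in practice.

```python
import numpy as np

rng = np.random.default_rng(seed=1)

# Hypothetical "normal" data: 1,000 points from a standard 2-D Gaussian.
normal_data = rng.normal(loc=0.0, scale=1.0, size=(1000, 2))

# Fit the density model (here just a mean and a covariance matrix).
mu = normal_data.mean(axis=0)
cov_inv = np.linalg.inv(np.cov(normal_data, rowvar=False))

def mahalanobis_sq(points):
    """Squared Mahalanobis distance of each row to the fitted Gaussian."""
    diff = np.atleast_2d(points) - mu
    return np.einsum("ij,jk,ik->i", diff, cov_inv, diff)

# Threshold: the 99th percentile of the scores seen on normal data.
threshold = np.percentile(mahalanobis_sq(normal_data), 99)

candidates = np.array([[0.1, -0.2],   # close to the bulk of the data
                       [6.0, 6.0]])   # far from anything seen in training
is_anomaly = mahalanobis_sq(candidates) > threshold
print(is_anomaly)
```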

Importance of Understanding Machine Learning Principles

1. Relevance to Implementing Generative Models

Understanding machine learning (ML) principles is crucial for successfully implementing generative models. Here’s why:

- Model Selection and Design: A solid understanding of ML principles helps in choosing the appropriate generative model based on the problem at hand. For instance, knowing the differences between Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and autoregressive models allows for selecting the right model architecture for tasks like image synthesis or text generation.

- Training and Optimization: Implementing generative models requires knowledge of optimization techniques and loss functions. For example, training GANs involves balancing the generator and discriminator, which demands a thorough understanding of gradient descent and optimization strategies. Understanding how to fine-tune these models ensures better performance and stability.

- Data Representation: Machine learning principles provide insights into how data should be represented and processed. This includes normalization, feature scaling, and data augmentation, all of which are essential for preparing data for generative models.

- Evaluation Metrics: Evaluating the performance of generative models requires familiarity with metrics such as Inception Score (IS) and Fréchet Inception Distance (FID). Understanding these metrics helps in assessing the quality of generated data and making necessary adjustments to the models.
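To give a feel for what FID measures, the sketch below computes the Fréchet distance between two Gaussians from their means and covariances. In the actual metric these statistics come from Inception-network features of real and generated images; here they are placeholder values, and the function name `frechet_distance` is an assumption for this example.

```python
import numpy as np

def frechet_distance(mu1, sigma1, mu2, sigma2):
    """Fréchet distance between N(mu1, sigma1) and N(mu2, sigma2)."""
    mu1, mu2 = np.asarray(mu1, float), np.asarray(mu2, float)
    sigma1, sigma2 = np.asarray(sigma1, float), np.asarray(sigma2, float)
    mean_term = np.sum((mu1 - mu2) ** 2)
    # Tr((sigma1 sigma2)^(1/2)) equals the sum of the square roots of the
    # eigenvalues of sigma1 @ sigma2, which are real and non-negative for
    # covariance matrices (tiny negative or imaginary parts are numerical noise).
    eigvals = np.linalg.eigvals(sigma1 @ sigma2)
    sqrt_trace = np.sum(np.sqrt(np.clip(eigvals.real, 0.0, None)))
    return float(mean_term + np.trace(sigma1) + np.trace(sigma2) - 2.0 * sqrt_trace)

# Placeholder statistics standing in for real and generated feature sets.
identity = np.eye(2)
same = frechet_distance([0.0, 0.0], identity, [0.0, 0.0], identity)
shifted = frechet_distance([0.0, 0.0], identity, [3.0, 4.0], identity)
print(same, shifted)
```

A lower value means the two sets of statistics are closer, which is why a lower FID is read as better sample quality.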

2. Benefits of a Solid ML Foundation

Having a strong foundation in machine learning offers several advantages when working with generative models:

- Enhanced Problem-Solving Skills: A deep understanding of ML principles enables you to approach problems systematically and apply suitable techniques to solve them. This includes debugging issues, optimizing model performance, and developing innovative solutions.

- Efficient Model Development: Knowledge of ML fundamentals accelerates the model development process. You can make informed decisions about model architectures, hyperparameter tuning, and data preprocessing, leading to faster and more effective results.

- Adaptability to New Techniques: The field of generative AI is rapidly evolving, with new techniques and models emerging frequently. A solid ML foundation equips you with the ability to quickly grasp and implement these new advancements, keeping your skills and knowledge up-to-date.

- Improved Understanding of Model Behavior: Understanding the underlying principles of ML helps in interpreting and explaining the behavior of generative models. This includes understanding why a model might generate unrealistic samples or how to improve its performance.

- Effective Communication and Collaboration: A strong ML background allows you to communicate effectively with other data scientists, researchers, and stakeholders. It ensures that you can articulate the technical aspects of generative models and their applications clearly.

Understanding machine learning principles is essential for implementing and optimizing generative models. It provides the necessary knowledge to select appropriate models, train them effectively, and evaluate their performance. A solid ML foundation not only enhances problem-solving skills but also improves efficiency, adaptability, and communication in the field of generative AI.

Foundations of Machine Learning

1. Understanding Machine Learning (ML)

Definition and Key Concepts

Machine Learning (ML) is a branch of artificial intelligence (AI) that enables systems to learn from data and improve their performance over time without being explicitly programmed. ML focuses on developing algorithms and models that can recognize patterns, make predictions, and make decisions based on input data.

Key Concepts:

- Algorithms: Procedures or formulas for solving problems. In ML, algorithms are used to analyze data and build models. Common algorithms include linear regression, decision trees, and neural networks.

- Models: Mathematical representations of data patterns created by training algorithms. A model is the result of the learning process and is used to make predictions or decisions.

- Training: The process of feeding data to an algorithm to enable it to learn and adjust its parameters. During training, the model learns from the data and improves its accuracy.

- Testing: Evaluating a trained model on new, unseen data to assess its performance and generalizability.

- Features: The individual measurable properties or characteristics of the data used for model training. Features are the input variables that help in making predictions.

- Labels: The output or target variable that the model is trying to predict. Labels are used in supervised learning to guide the training process.
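The concepts above (features, labels, a model, and training) can be seen together in a small gradient-descent loop. This is a sketch on made-up data; the learning rate and step count are assumptions chosen so that this toy problem converges.

```python
import numpy as np

# Features (x) and labels (y) for a toy problem where y = 3x + 2 exactly.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 3.0 * x + 2.0

# The "model" is a line with two parameters, both starting at zero.
w, b = 0.0, 0.0
learning_rate = 0.05  # hypothetical value chosen for this toy data

for step in range(2000):
    y_hat = w * x + b                  # predictions with current parameters
    error = y_hat - y
    grad_w = 2.0 * np.mean(error * x)  # d(MSE)/dw
    grad_b = 2.0 * np.mean(error)      # d(MSE)/db
    w -= learning_rate * grad_w        # step against the gradient
    b -= learning_rate * grad_b

print(f"w = {w:.3f}, b = {b:.3f}")
```

Training a generative model follows the same pattern, with a different loss function and many more parameters.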

2. Overview of Machine Learning and Its Types

Machine learning can be broadly categorized into three main types based on the learning approach and the nature of the data:

- Supervised Learning: In this type, the model is trained on labeled data, where each input data point is associated with an output label. The goal is to learn a mapping from inputs to outputs that can be used to make predictions on new data. Common applications include classification (e.g., email spam detection) and regression (e.g., predicting house prices).

- Unsupervised Learning: Here, the model is trained on unlabeled data and must find hidden patterns or structures within the data. The goal is to identify underlying relationships without predefined labels. Common applications include clustering (e.g., customer segmentation) and dimensionality reduction (e.g., reducing the number of features in a dataset).

- Reinforcement Learning: In this type, the model learns by interacting with an environment and receiving feedback in the form of rewards or penalties. The goal is to learn a policy that maximizes cumulative rewards over time. This approach is commonly used in applications such as game playing and robotic control.

3. Importance of Machine Learning in Developing AI Systems

Machine learning is fundamental to developing advanced AI systems for several reasons:

- Automation: ML enables the automation of complex tasks by learning from data and making decisions without human intervention. This is crucial for applications such as self-driving cars and automated financial trading.

- Predictive Analytics: ML models can analyze historical data to make predictions about future events. This capability is valuable for industries such as healthcare (predicting disease outbreaks), finance (forecasting market trends), and marketing (personalizing customer recommendations).

- Pattern Recognition: ML excels at identifying patterns and trends in data that may not be immediately apparent. This ability is essential for tasks like image recognition, natural language processing, and anomaly detection.

- Adaptability: ML systems can adapt to new data and evolving conditions. This adaptability allows AI systems to continuously improve and stay relevant in dynamic environments.

- Personalization: ML enables the creation of personalized experiences by analyzing individual preferences and behaviors. This is widely used in recommendation systems for platforms like Netflix, Amazon, and social media.

Types of Machine Learning and Key Machine Learning Algorithms

1. Machine learning – Supervised Learning

Definition:

Supervised learning is a type of machine learning where the model is trained on a labeled dataset. Each training example includes an input and the corresponding output (label). The model learns to map inputs to outputs by minimizing the error between its predictions and the actual labels.

Examples:

- Classification:

- What It Is: This involves categorizing data into predefined classes.

- Example: Imagine you have an email dataset where each email is labeled as “spam” or “not spam.” A classification model would learn to identify whether a new email should be classified as “spam” or “not spam.”

- Regression:

- What It Is: This involves predicting a continuous value.

- Example: Suppose you want to predict house prices. You might use features like the size of the house, the number of rooms, and its location. A regression model would learn to predict the price based on these features.

Common Algorithms

Linear Regression in Machine learning

Concept: Linear regression is a statistical method used to understand and model the relationship between one or more independent variables (features) and a dependent variable (target). The primary goal is to predict the value of the dependent variable based on the independent variables by fitting a linear equation to the observed data.

Key Points:

1. Simple Linear Regression:

- What It Is: Involves modeling the relationship between a single independent variable and a dependent variable.

- Equation: The relationship is expressed as:

$$y = \beta_0 + \beta_1 x + \epsilon$$

Where:

- y is the dependent variable.

- x is the independent variable.

- β0 is the intercept (the value of y when x is zero).

- β1 is the slope of the line (the change in y for a one-unit change in x).

- ϵ is the error term (the difference between the predicted and actual values).
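For a single feature, the intercept and slope have a closed-form least-squares solution. The sketch below computes them with NumPy on made-up data; the variable names `beta0` and `beta1` simply mirror the intercept and slope described above.

```python
import numpy as np

x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1])  # made-up values, roughly y = 2x

# Least-squares estimates for y = beta0 + beta1 * x + epsilon.
beta1 = np.sum((x - x.mean()) * (y - y.mean())) / np.sum((x - x.mean()) ** 2)
beta0 = y.mean() - beta1 * x.mean()

residuals = y - (beta0 + beta1 * x)  # estimates of the error term epsilon
print(f"intercept = {beta0:.2f}, slope = {beta1:.2f}")
```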

2. Multiple Linear Regression:

- What It Is: Extends simple linear regression to include multiple independent variables.

- Equation: The relationship is modeled as:

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_n x_n + \epsilon$$

Where:

- x1, x2, …, xn are the independent variables.

- β1, β2, …, βn are the coefficients for each independent variable.
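With several features, the coefficients are usually found by solving a least-squares problem on a design matrix. A minimal sketch with NumPy follows, using noise-free made-up data so the recovered coefficients are easy to check; `X_design` is a name introduced for this example.

```python
import numpy as np

# Two features per sample; targets follow y = 1 + 2*x1 + 3*x2 with no noise.
X = np.array([[1.0, 2.0],
              [2.0, 1.0],
              [3.0, 4.0],
              [4.0, 3.0],
              [5.0, 5.0]])
y = 1.0 + 2.0 * X[:, 0] + 3.0 * X[:, 1]

# Prepend a column of ones so the first coefficient acts as the intercept.
X_design = np.column_stack([np.ones(len(X)), X])

# Solve the least-squares problem min ||X_design @ beta - y||^2.
beta, *_ = np.linalg.lstsq(X_design, y, rcond=None)
print(beta)  # intercept first, then one coefficient per feature
```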

Applications

- Predicting Sales:

- Example: Estimating future sales based on features like advertising budget, store location, and product pricing. For instance, you can use historical sales data and budget information to predict future sales.

- House Pricing:

- Example: Predicting house prices based on features such as size, number of bedrooms, and location. A model can help estimate a house’s value by analyzing these attributes.

- Economic Forecasting:

- Example: Analyzing economic indicators like GDP, inflation rates, and employment to forecast trends. Linear regression can help understand how different economic factors are related and predict future trends.

Implementation Steps Using Python

1. Import Libraries:

- Purpose: Load necessary libraries for data manipulation, model training, and evaluation.

import numpy as np

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LinearRegression

from sklearn.metrics import mean_squared_error, r2_score

2. Load Data:

- Purpose: Load your dataset and separate it into features (independent variables) and the target variable (dependent variable).

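# Sample data (ten rows, so the 20% test split holds two samples and R-squared is defined)

data = {

'Size': [1, 2, 3, 4, 5, 6, 7, 8, 9, 10],

'Price': [100, 200, 300, 400, 500, 600, 700, 800, 900, 1000]

}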

df = pd.DataFrame(data)

# Features and target variable

X = df[['Size']] # Features

y = df['Price'] # Target variable

3. Split Data:

- Purpose: Divide the dataset into training and testing sets to evaluate the model’s performance on unseen data.

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

4. Create and Train the Model:

- Purpose: Initialize and train the linear regression model on the training data.

# Model initialization

model = LinearRegression()

# Model training

model.fit(X_train, y_train)

5. Make Predictions:

- Purpose: Use the trained model to make predictions on the test set.

# Predictions

y_pred = model.predict(X_test)

6. Evaluate the Model:

- Purpose: Assess the model’s performance using metrics like Mean Squared Error (MSE) and R-squared (R^2) score.

# Model evaluation

mse = mean_squared_error(y_test, y_pred)

r2 = r2_score(y_test, y_pred)

print(f'Mean Squared Error: {mse}')

print(f'R-squared: {r2}')

7. Visualize Results (Optional):

- Purpose: Plot the results to visualize how well the regression line fits the data.

import matplotlib.pyplot as plt

# Plotting

plt.scatter(X_test, y_test, color='blue', label='Actual data')

plt.plot(X_test, y_pred, color='red', linewidth=2, label='Regression line')

plt.xlabel('Size')

plt.ylabel('Price')

plt.title('Linear Regression')

plt.legend()

plt.show()
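The two metrics from the evaluation step are simple enough to compute by hand, which helps when interpreting them. The sketch below uses NumPy and made-up predictions; the function names `mse` and `r_squared` are introduced for this example and are not scikit-learn functions.

```python
import numpy as np

def mse(y_true, y_pred):
    """Mean of the squared prediction errors."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean((y_true - y_pred) ** 2))

def r_squared(y_true, y_pred):
    """1 - (residual sum of squares / total sum of squares)."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)

y_true = [100, 200, 300, 400]
y_pred = [110, 190, 310, 390]
print(mse(y_true, y_pred), r_squared(y_true, y_pred))
```

Note that the total sum of squares is zero when the test set holds a single sample or constant targets, so R-squared is only meaningful with at least two distinct target values.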

Summary

Linear regression is a fundamental statistical technique used to model the relationship between a dependent variable and one or more independent variables. It helps in predicting outcomes and analyzing trends. By fitting a linear equation to the data, you can understand how changes in the independent variables affect the dependent variable, and make informed predictions based on this relationship.

Logistic Regression in Machine learning

Concept:

Logistic regression is a statistical method used for binary classification problems. Unlike linear regression, which predicts continuous values, logistic regression predicts the probability of a binary outcome (e.g., success/failure, yes/no, 0/1). The model estimates the probability that a given input belongs to a certain class.

Key Points:

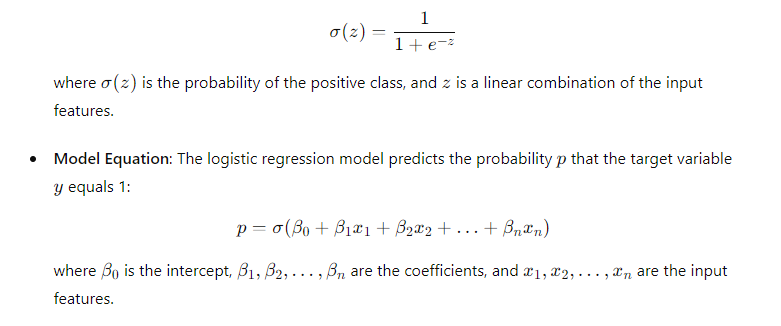

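- Logistic Function: The core of logistic regression is the logistic function (also called the sigmoid function), which maps any real-valued number into a value between 0 and 1. The function is defined as:

$$\sigma(z) = \frac{1}{1 + e^{-z}}$$

Here z is the linear combination of the input features and the model coefficients.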
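A short sketch of how the logistic function turns a linear score into a class probability is shown here. The intercept, weights, and feature values are hypothetical numbers picked for illustration, not fitted coefficients.

```python
import numpy as np

def sigmoid(z):
    """Logistic function: 1 / (1 + exp(-z)), applied element-wise."""
    z = np.asarray(z, dtype=float)
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical coefficients for a model with two features.
intercept = -4.0
weights = np.array([0.8, 0.5])

features = np.array([3.0, 2.0])
z = intercept + weights @ features        # linear score (log-odds)
probability = float(sigmoid(z))           # P(class = 1 | features)
predicted_class = int(probability >= 0.5) # default decision threshold of 0.5
print(probability, predicted_class)
```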

Applications:

- Spam Detection: Classifying emails as spam or not spam.

- Medical Diagnosis: Predicting the likelihood of a disease based on patient features.

- Credit Scoring: Evaluating the probability of a borrower defaulting on a loan.

Implementation

Here’s a step-by-step guide to implementing logistic regression using Python’s scikit-learn library:

Import Libraries:

Import the necessary libraries for data manipulation, model training, and evaluation.

import numpy as np

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import accuracy_score, confusion_matrix, classification_report

Load Data: Load your dataset and prepare the features and target variable.

# Sample data

data = {

'Feature1': [2, 3, 4, 5, 6],

'Feature2': [1, 2, 3, 4, 5],

'Target': [0, 0, 1, 1, 1]

}

df = pd.DataFrame(data)

# Features and target variable

X = df[['Feature1', 'Feature2']] # Features

y = df['Target'] # Target variable

Split Data: Split the dataset into training and testing sets.

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

Create and Train the Model: Initialize and train the logistic regression model.

# Model initialization

model = LogisticRegression()

# Model training

model.fit(X_train, y_train)

Make Predictions: Use the trained model to make predictions on the test set.

# Predictions

y_pred = model.predict(X_test)

Evaluate the Model: Assess the model’s performance using metrics like accuracy, confusion matrix, and classification report.

# Model evaluation

accuracy = accuracy_score(y_test, y_pred)

conf_matrix = confusion_matrix(y_test, y_pred)

class_report = classification_report(y_test, y_pred)

print(f'Accuracy: {accuracy}')

print('Confusion Matrix:')

print(conf_matrix)

print('Classification Report:')

print(class_report)

Summary

Logistic regression is a powerful and interpretable method for binary classification tasks. By modeling the probability of a binary outcome, it helps in making decisions based on the likelihood of different classes. Understanding and implementing logistic regression allows you to tackle classification problems effectively, providing insights into how features influence the target variable.


Decision Trees in Machine learning

Concept:

Decision trees are a type of supervised learning algorithm used for both classification and regression tasks. The model uses a tree-like structure to make decisions based on the input features. Each internal node of the tree represents a decision based on a feature, each branch represents the outcome of that decision, and each leaf node represents the final output or class label.

Key Points:

- Structure: A decision tree is made up of nodes, branches, and leaves. The root node is the topmost node, representing the first decision point. Each internal node represents a decision on a feature, leading to branches for each possible outcome. Leaf nodes represent the final prediction or class.

- Splitting: Decision trees use a splitting criterion (such as Gini impurity or entropy for classification, or variance reduction for regression) to decide the best way to split the data at each node. The goal is to create the most homogeneous branches possible.

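- Pruning: To avoid overfitting, decision trees can be pruned so that branches with little predictive power are not kept. In practice this is done either by limiting growth up front (for example, a maximum depth or a minimum number of samples per leaf node) or by removing branches after training (cost-complexity pruning).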
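The impurity measures named under Splitting can be computed in a few lines, which makes it easier to see why one split is preferred over another. This is a sketch with NumPy on made-up labels; the function names are introduced for this example.

```python
import numpy as np

def gini(labels):
    """Gini impurity: 1 - sum(p_k^2) over class proportions p_k."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(1.0 - np.sum(p ** 2))

def entropy(labels):
    """Shannon entropy in bits: -sum(p_k * log2(p_k))."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(-np.sum(p * np.log2(p)))

def split_impurity(left, right, criterion=gini):
    """Size-weighted impurity of the two child nodes after a split."""
    n = len(left) + len(right)
    return len(left) / n * criterion(left) + len(right) / n * criterion(right)

parent = [0, 0, 0, 1, 1, 1]
left, right = [0, 0, 0, 1], [1, 1]   # one candidate split of the parent

print(gini(parent), entropy(parent), split_impurity(left, right))
```

A tree-building algorithm evaluates many candidate splits this way and keeps the one with the lowest weighted impurity.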

Applications:

- Classification: Classifying emails as spam or not spam, diagnosing medical conditions, and predicting customer churn.

- Regression: Predicting house prices, estimating sales figures, and forecasting economic indicators.

Implementation:

Here’s a step-by-step guide to implementing a decision tree using Python’s scikit-learn library:

- Import Libraries: Import the necessary libraries for data manipulation, model training, and evaluation.

import numpy as np

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.tree import DecisionTreeClassifier, DecisionTreeRegressor

from sklearn.metrics import accuracy_score, mean_squared_error, classification_report

from sklearn import tree

import matplotlib.pyplot as plt

Load Data: Load your dataset and prepare the features and target variable.

# Sample data for classification

data = {

'Feature1': [1, 2, 3, 4, 5],

'Feature2': [5, 4, 3, 2, 1],

'Target': [0, 1, 0, 1, 0]

}

df = pd.DataFrame(data)

# Features and target variable

X = df[['Feature1', 'Feature2']] # Features

y = df['Target'] # Target variable

Split Data: Split the dataset into training and testing sets.

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

Create and Train the Model: Initialize and train the decision tree model. Here, we’ll use DecisionTreeClassifier for classification tasks.

# Model initialization

model = DecisionTreeClassifier()

# Model training

model.fit(X_train, y_train)

Make Predictions: Use the trained model to make predictions on the test set.

# Predictions

y_pred = model.predict(X_test)

Evaluate the Model: Assess the model’s performance using metrics like accuracy and classification report.

# Model evaluation

accuracy = accuracy_score(y_test, y_pred)

class_report = classification_report(y_test, y_pred)

print(f'Accuracy: {accuracy}')

print('Classification Report:')

print(class_report)

Visualize the Tree (Optional): Plot the decision tree to visualize its structure.

plt.figure(figsize=(10, 6))

# Pass the feature names as a plain list, which every scikit-learn version accepts

tree.plot_tree(model, filled=True, feature_names=list(X.columns), class_names=['Class 0', 'Class 1'])

plt.title('Decision Tree')

plt.show()

Summary:

Decision trees are intuitive and easy-to-interpret models that are useful for both classification and regression tasks. They work by recursively splitting the data based on feature values to make predictions. While powerful, decision trees can overfit, so techniques like pruning or ensemble methods (e.g., random forests) are often used to improve performance and generalization. Understanding and implementing decision trees enables you to build strong predictive models and gain insights into the decision-making process of your data.
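The overfitting risk mentioned in the summary can be observed by comparing an unrestricted tree with one whose growth is limited. The sketch below uses scikit-learn on synthetic data; the dataset parameters and the choice of `max_depth=3` and `min_samples_leaf=5` are assumptions for illustration, not recommended settings.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic, noisy classification data (all parameters are illustrative).
X, y = make_classification(n_samples=500, n_features=10, n_informative=4,
                           flip_y=0.1, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0)

# One tree grown without limits, one with restricted depth and leaf size.
full_tree = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)
shallow_tree = DecisionTreeClassifier(max_depth=3, min_samples_leaf=5,
                                      random_state=0).fit(X_train, y_train)

for name, model in [("unrestricted", full_tree), ("max_depth=3", shallow_tree)]:
    print(f"{name}: depth={model.get_depth()}, "
          f"train acc={model.score(X_train, y_train):.2f}, "
          f"test acc={model.score(X_test, y_test):.2f}")
```

A large gap between training and test accuracy for the unrestricted tree is the usual sign of overfitting.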

Supervised learning helps build models that can predict outcomes based on patterns learned from labeled data. The three algorithms covered here serve different purposes: linear regression predicts continuous values, logistic regression predicts binary outcomes, and decision trees can handle both classification and regression tasks.

Unsupervised Learning

Definition: Unsupervised learning is a type of machine learning where the model is trained using data that doesn’t have any labeled responses. Instead of learning from input-output pairs, the model tries to understand the underlying patterns or structures within the data itself. It’s like exploring a new dataset and trying to find natural groupings or patterns without having predefined categories.

Examples

- Clustering

- What It Is: Clustering involves grouping similar data points together based on their features.

- Example: In marketing, you might use clustering to segment customers into different groups based on their purchasing behavior. This helps in targeting marketing strategies more effectively for each customer segment.

- Dimensionality Reduction

- What It Is: This technique reduces the number of features or dimensions in the data while retaining as much important information as possible.

- Example: If you have a dataset with many features, dimensionality reduction can simplify the data for easier visualization or analysis, such as compressing high-dimensional data into fewer dimensions for better visualization.

Common Algorithms

k-Means Clustering in Machine learning

Concept:

k-Means clustering is an unsupervised machine learning algorithm used for partitioning a dataset into distinct groups (or clusters). The goal is to group similar data points together based on their features while ensuring that the clusters are as distinct as possible from one another. It is widely used in exploratory data analysis, customer segmentation, image compression, and more.

Key Points:

- Clustering Process: The k-means algorithm works by iteratively assigning data points to one of k clusters based on their features. The algorithm consists of the following steps:

- Initialization: Choose k initial centroids randomly from the dataset.

- Assignment: Assign each data point to the nearest centroid, creating k clusters.

- Update: Calculate the new centroids by taking the mean of all data points assigned to each cluster.

- Convergence: Repeat the assignment and update steps until the centroids no longer change significantly or until a predefined number of iterations is reached.

- Distance Metric: The most common distance metric used in k-means is the Euclidean distance, which matches the mean-based centroid update. Related algorithms such as k-medoids are typically used when another metric is needed.

- Choosing k: The number of clusters k is a hyperparameter that needs to be defined before running the algorithm. The Elbow Method is a common technique to determine the optimal number of clusters by plotting the within-cluster sum of squares (WCSS) against the number of clusters.
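The four steps listed above (initialization, assignment, update, convergence) can be sketched directly in NumPy. This is a bare-bones version for illustration, assuming Euclidean distance and random initialization from the data; the function names `assign` and `kmeans` are introduced here, and scikit-learn's `KMeans` adds smarter initialization and other refinements.

```python
import numpy as np

def assign(X, centroids):
    """Index of the nearest centroid (Euclidean distance) for each point."""
    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return dists.argmin(axis=1)

def kmeans(X, k, n_iters=100, seed=0):
    rng = np.random.default_rng(seed)
    # Initialization: pick k distinct data points as the starting centroids.
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iters):
        # Assignment: attach every point to its nearest centroid.
        labels = assign(X, centroids)
        # Update: each centroid moves to the mean of its assigned points
        # (an empty cluster keeps its previous centroid).
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        # Convergence: stop once the centroids no longer move.
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    labels = assign(X, centroids)
    # Within-cluster sum of squares, the quantity plotted by the Elbow Method.
    wcss = float(np.sum((X - centroids[labels]) ** 2))
    return labels, centroids, wcss

# Two well-separated toy blobs (made-up data).
rng = np.random.default_rng(42)
X = np.vstack([rng.normal(0.0, 0.5, size=(50, 2)),
               rng.normal(5.0, 0.5, size=(50, 2))])
labels, centroids, wcss = kmeans(X, k=2)
print(centroids, wcss)
```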

Applications:

- Customer Segmentation: Grouping customers based on purchasing behavior for targeted marketing.

- Image Compression: Reducing the number of colors in an image by clustering similar colors.

- Anomaly Detection: Identifying outliers in datasets.

Implementation:

Here’s a step-by-step guide to implementing k-Means clustering using Python’s scikit-learn library:

1. Import Libraries: Import the necessary libraries for data manipulation and clustering.

import numpy as np

import pandas as pd

from sklearn.cluster import KMeans

from sklearn.datasets import make_blobs

import matplotlib.pyplot as plt

from sklearn.metrics import silhouette_score

2. Load Data: Create or load a dataset. For illustration, we can generate synthetic data using make_blobs.

# Generate synthetic data

X, y = make_blobs(n_samples=300, centers=4, cluster_std=0.60, random_state=0)

# Convert to DataFrame for better visualization

df = pd.DataFrame(X, columns=['Feature1', 'Feature2'])

3. Choose the Number of Clusters (k): Use the Elbow Method to find the optimal number of clusters.

wcss = []

for i in range(1, 11):

    kmeans = KMeans(n_clusters=i, random_state=0)

    kmeans.fit(X)

    wcss.append(kmeans.inertia_)

# Plotting the elbow curve

plt.figure(figsize=(10, 6))

plt.plot(range(1, 11), wcss, marker='o')

plt.title('Elbow Method')

plt.xlabel('Number of clusters (k)')

plt.ylabel('WCSS')

plt.show()

4. Create and Train the Model: Initialize and train the k-means model with the chosen k.

# Choose k=4 based on the elbow method

k = 4

kmeans = KMeans(n_clusters=k, random_state=0)

y_kmeans = kmeans.fit_predict(X)

5. Visualize the Clusters: Plot the resulting clusters and their centroids.

# Plotting the clusters

plt.figure(figsize=(10, 6))

plt.scatter(X[:, 0], X[:, 1], c=y_kmeans, s=50, cmap='viridis')

centers = kmeans.cluster_centers_

plt.scatter(centers[:, 0], centers[:, 1], c='red', s=200, alpha=0.75, marker='X', label='Centroids')

plt.title('K-Means Clustering')

plt.xlabel('Feature 1')

plt.ylabel('Feature 2')

plt.legend()

plt.show()

6. Evaluate the Model: Assess the clustering performance using metrics like the silhouette score.

# Calculate silhouette score

silhouette_avg = silhouette_score(X, y_kmeans)

print(f'Silhouette Score: {silhouette_avg}')

Summary:

k-Means clustering is a powerful and straightforward algorithm used for grouping similar data points into clusters. It relies on minimizing the distance between points and their respective cluster centroids. By understanding and implementing k-means, you can effectively uncover hidden patterns in your data, making it a valuable tool for data analysis, segmentation, and exploratory data analysis.

Agglomerative Hierarchical Clustering in Machine learning

Concept:

Agglomerative hierarchical clustering is a bottom-up approach to clustering where each data point starts as its own cluster, and pairs of clusters are merged iteratively based on a predefined distance metric until all points belong to a single cluster or a specified number of clusters is reached. This method is called “agglomerative” because it agglomerates (merges) clusters.

Key Points:

- Linkage Criteria: The method for determining the distance between clusters. Common linkage criteria include:

- Single Linkage: The distance between the closest points of two clusters.

- Complete Linkage: The distance between the farthest points of two clusters.

- Average Linkage: The average distance between all points in the two clusters.

- Ward’s Method: Minimizes the variance within each cluster.

- Dendrogram: A tree-like diagram that records the sequence of merges or splits. The height of each branch represents the distance (or dissimilarity) between the clusters being merged. Dendrograms are useful for visualizing the structure and deciding the number of clusters.

- Distance Metrics: Commonly used metrics include Euclidean distance, Manhattan distance, and cosine distance. Ward's method is defined in terms of Euclidean distance.
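The linkage criteria above differ only in how they summarize the distances between two clusters. The sketch below computes each of them with NumPy for two tiny made-up clusters; the Ward value shown is the increase in within-cluster sum of squares caused by the merge, which is one common way to express that criterion.

```python
import numpy as np

cluster_a = np.array([[0.0, 0.0], [1.0, 0.0]])
cluster_b = np.array([[4.0, 0.0], [6.0, 0.0]])

# All pairwise Euclidean distances between points of A and points of B.
pairwise = np.linalg.norm(cluster_a[:, None, :] - cluster_b[None, :, :], axis=2)

single_link = float(pairwise.min())     # closest pair of points
complete_link = float(pairwise.max())   # farthest pair of points
average_link = float(pairwise.mean())   # mean over all pairs

# Ward: growth in within-cluster sum of squares if A and B were merged.
n_a, n_b = len(cluster_a), len(cluster_b)
centroid_gap = cluster_a.mean(axis=0) - cluster_b.mean(axis=0)
ward_increase = float(n_a * n_b / (n_a + n_b) * np.sum(centroid_gap ** 2))

print(single_link, complete_link, average_link, ward_increase)
```

At every step, agglomerative clustering merges the pair of clusters with the smallest value of the chosen criterion.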

Applications:

- Gene Expression Data Analysis: Grouping genes with similar expression patterns.

- Document Clustering: Grouping documents with similar content.

- Market Segmentation: Identifying customer segments with similar behaviors.

Implementation:

Here’s a step-by-step guide to implementing agglomerative hierarchical clustering using Python’s scikit-learn library:

1. Import Libraries: Import the necessary libraries for data manipulation, clustering, and visualization.

import numpy as np

import pandas as pd

from sklearn.datasets import make_blobs

from sklearn.cluster import AgglomerativeClustering

from scipy.cluster.hierarchy import dendrogram, linkage

import matplotlib.pyplot as plt

2. Load Data: Create or load a dataset. For illustration, we can generate synthetic data using make_blobs.

# Generate synthetic data

X, y = make_blobs(n_samples=300, centers=4, cluster_std=0.60, random_state=0)

# Convert to DataFrame for better visualization

df = pd.DataFrame(X, columns=['Feature1', 'Feature2'])

3. Visualize the Data: Plot the data points to understand the distribution.

plt.figure(figsize=(10, 6))

plt.scatter(X[:, 0], X[:, 1], s=50)

plt.title('Data Points')

plt.xlabel('Feature 1')

plt.ylabel('Feature 2')

plt.show()

4. Perform Hierarchical Clustering: Use linkage to perform hierarchical clustering and create a dendrogram.

# Perform hierarchical/agglomerative clustering

Z = linkage(X, method='ward')

# Plot the dendrogram

plt.figure(figsize=(12, 8))

dendrogram(Z, truncate_mode='level', p=5)

plt.title('Hierarchical Clustering Dendrogram')

plt.xlabel('Sample index')

plt.ylabel('Distance')

plt.show()

5. Determine the Number of Clusters: Decide the number of clusters by cutting the dendrogram at the desired level.

# Agglomerative Clustering

num_clusters = 4

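# Ward linkage always uses Euclidean distance, so no metric argument is needed

# (the older affinity argument is no longer accepted by newer scikit-learn releases)

clustering_model = AgglomerativeClustering(n_clusters=num_clusters, linkage='ward')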

cluster_labels = clustering_model.fit_predict(X)
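Cutting the dendrogram can also be done programmatically. The following is a minimal sketch, assuming SciPy's fcluster is acceptable for this purpose; the small two-group dataset and the cut height of 5.0 are illustrative assumptions, not values from the example above.

```python
import numpy as np
from scipy.cluster.hierarchy import linkage, fcluster

# Small synthetic dataset: two well-separated groups (illustrative values)
rng = np.random.default_rng(0)
group_a = rng.normal(loc=0.0, scale=0.3, size=(20, 2))
group_b = rng.normal(loc=5.0, scale=0.3, size=(20, 2))
X_demo = np.vstack([group_a, group_b])

Z_demo = linkage(X_demo, method='ward')

# Option 1: ask for a fixed number of flat clusters
labels_k = fcluster(Z_demo, t=2, criterion='maxclust')

# Option 2: cut the tree at a chosen merge distance (a height on the dendrogram)
cut_height = 5.0  # assumed threshold, read off the dendrogram by eye
labels_d = fcluster(Z_demo, t=cut_height, criterion='distance')

print(np.unique(labels_k))        # the flat cluster ids
print(len(np.unique(labels_d)))   # number of clusters produced by the height cut
```

The 'maxclust' criterion is convenient when the number of clusters is already known, while the 'distance' criterion mirrors drawing a horizontal line across the dendrogram.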

6. Visualize the Clusters: Plot the resulting clusters.

plt.figure(figsize=(10, 6))

plt.scatter(X[:, 0], X[:, 1], c=cluster_labels, s=50, cmap='viridis')

plt.title('Agglomerative Hierarchical Clustering')

plt.xlabel('Feature 1')

plt.ylabel('Feature 2')

plt.show()

Summary:

Agglomerative hierarchical clustering is an adaptable and interpretable clustering method that builds a hierarchy of clusters through successive merges. The dendrogram provides a visual representation of the clustering process and helps determine a suitable number of clusters. Understanding and implementing agglomerative hierarchical clustering allows you to analyze the hierarchical structure of your data, making it a valuable tool for various applications, from biology to market analysis.

Principal Component Analysis (PCA) in Machine Learning

Concept:

Principal Component Analysis (PCA) is a dimensionality reduction technique used to transform a dataset with potentially correlated features into a set of linearly uncorrelated components called principal components. The goal is to reduce the number of features while preserving as much variance (information) as possible in the data. PCA is widely used in data preprocessing, exploratory data analysis, and visualization.

Key Points:

- Principal Components: New features (components) that are orthogonal to each other and ordered by the amount of variance they explain in the data.

- Variance: PCA seeks to maximize the variance explained by the first few principal components. The first principal component explains the most variance, the second explains the next most, and so on.

- Orthogonality: Principal components are orthogonal (uncorrelated) to each other, which helps in reducing multicollinearity in the dataset.
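The three key points above can be checked numerically. The sketch below is one possible way to do so, using an eigen-decomposition of the covariance matrix in NumPy; the synthetic correlated data and all variable names are assumptions for illustration only.

```python
import numpy as np

rng = np.random.default_rng(42)
# Synthetic, correlated 2-D data (illustrative only)
x = rng.normal(size=200)
data = np.column_stack([x, 0.8 * x + 0.2 * rng.normal(size=200)])

# Center the data, then eigen-decompose its covariance matrix
centered = data - data.mean(axis=0)
cov = np.cov(centered, rowvar=False)
eigenvalues, eigenvectors = np.linalg.eigh(cov)  # eigh returns ascending eigenvalues

# Sort so the first component explains the most variance
order = np.argsort(eigenvalues)[::-1]
eigenvalues = eigenvalues[order]
components = eigenvectors[:, order].T  # one principal component per row

explained_ratio = eigenvalues / eigenvalues.sum()
projected = centered @ components.T

print(explained_ratio)                               # ordered from largest to smallest
print(np.round(components @ components.T, 6))        # identity matrix: components are orthonormal
print(np.round(np.cov(projected, rowvar=False), 6))  # off-diagonal entries near 0: uncorrelated
```

The last printout shows why orthogonal components reduce multicollinearity: after projection, the covariance between the new features is effectively zero.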

Applications:

- Dimensionality Reduction: Reducing the number of features in a dataset while retaining as much information as possible.

- Data Visualization: Projecting high-dimensional data into 2D or 3D for visualization.

- Noise Reduction: Filtering out noise by focusing on the components that explain the most variance.

Implementation:

Here’s a step-by-step guide to implementing PCA using Python’s scikit-learn library:

1. Import Libraries: Import the necessary libraries for data manipulation, PCA, and visualization.

import numpy as np

import pandas as pd

from sklearn.decomposition import PCA

from sklearn.datasets import load_iris

import matplotlib.pyplot as plt

2. Load Data: Create or load a dataset. For illustration, we’ll use the Iris dataset.

# Load the Iris dataset

iris = load_iris()

X = iris.data

y = iris.target

3. Standardize the Data: Standardize the dataset so that each feature has a mean of 0 and a standard deviation of 1. This step is crucial for PCA since it is sensitive to the scale of the features.

from sklearn.preprocessing import StandardScaler

# Standardize the data

scaler = StandardScaler()

X_scaled = scaler.fit_transform(X)

4. Apply PCA: Initialize PCA and fit it to the standardized data. Determine the number of components to keep.

# Apply PCA

pca = PCA(n_components=2) # Reduce to 2 dimensions for visualization

X_pca = pca.fit_transform(X_scaled)

# Explained variance ratio

explained_variance = pca.explained_variance_ratio_

print(f'Explained variance ratio for each component: {explained_variance}')

print(f'Total variance explained: {sum(explained_variance)}')
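When the goal is not visualization, the number of components is often chosen from the cumulative explained variance. The sketch below shows the arithmetic; the ratio values and the 90% threshold are made-up assumptions, not results from the Iris dataset.

```python
import numpy as np

# Hypothetical explained variance ratios, ordered from largest to smallest
explained_variance_ratio = np.array([0.60, 0.25, 0.10, 0.05])
threshold = 0.90  # assumed target: keep at least 90% of the variance

cumulative = np.cumsum(explained_variance_ratio)

# Index of the first component at which the running total reaches the threshold
n_components_needed = int(np.searchsorted(cumulative, threshold) + 1)

print(cumulative)
print(n_components_needed)  # 3
```

scikit-learn can apply the same rule directly: passing a float between 0 and 1 as n_components (for example PCA(n_components=0.90)) keeps as many components as needed to reach that share of the variance.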

5. Visualize the Results: Plot the data points in the new 2D space defined by the first two principal components.

plt.figure(figsize=(10, 6))

scatter = plt.scatter(X_pca[:, 0], X_pca[:, 1], c=y, cmap='viridis', edgecolor='k', s=50)

plt.xlabel('Principal Component 1')

plt.ylabel('Principal Component 2')

plt.title('PCA of Iris Dataset')

plt.colorbar(scatter, label='Species')

plt.show()

6. Interpret the Principal Components: Understand the contribution of each original feature to the principal components.

# Principal components

components = pca.components_

feature_names = iris.feature_names

print('Principal Components:')

for i, component in enumerate(components):

    print(f'Principal Component {i+1}:')

    for feature, weight in zip(feature_names, component):

        print(f' {feature}: {weight:.4f}')

Summary:

Principal Component Analysis (PCA) is a powerful dimensionality reduction technique that helps in transforming high-dimensional data into a lower-dimensional space while preserving as much variance as possible. By understanding and implementing PCA, you can simplify your dataset, improve computational efficiency, and gain insights into the structure of your data. PCA is an essential tool in data preprocessing, exploratory data analysis, and machine learning pipelines.

Unsupervised learning helps in understanding and summarizing data by finding hidden structures or patterns without predefined labels. Clustering techniques like k-Means and Hierarchical Clustering group data points based on similarity, while Dimensionality Reduction techniques like PCA simplify the data for analysis and visualization. Each algorithm has its specific use case and can be applied depending on the nature of the data and the goals of the analysis.

Reinforcement Learning (RL) in Machine Learning

Concept:

Reinforcement Learning (RL) is a type of machine learning where an agent learns to make decisions by performing actions in an environment to maximize cumulative rewards. Unlike supervised learning, where the model is trained on labeled data, RL involves learning from interactions with the environment and receiving feedback in the form of rewards or penalties. The agent’s goal is to learn a policy that maximizes long-term rewards.

Key Points:

Agent:

- Definition: The learner or decision-maker that performs actions within the environment.

- Role: The agent aims to learn how to act to maximize rewards.

Environment:

- Definition: The external system or context with which the agent interacts.

- Role: It provides feedback based on the agent’s actions, which helps the agent learn.

Actions:

- Definition: The set of choices available to the agent at each state.

- Examples: Moving up, down, left, or right in a grid world.

States:

- Definition: The different situations or configurations the agent can encounter in the environment.

- Role: States represent the current situation in which the agent makes decisions.

Rewards:

- Definition: Feedback received from the environment after performing an action.

- Role: Rewards indicate the success or failure of actions in achieving the goal. They guide the agent’s learning process.

Policy:

- Definition: A strategy or mapping from states to actions that the agent follows to make decisions.

- Role: The policy helps the agent decide which actions to take in different states.

Value Function:

- Definition: A function that estimates the expected cumulative reward from a given state or state-action pair.

- Role: It helps evaluate the desirability of states or actions and guides decision-making.
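The "expected cumulative reward" that a value function estimates is usually a discounted sum of future rewards. The sketch below computes that sum for a single reward sequence; the function name, the reward values, and the discount factor of 0.9 are assumptions chosen for illustration.

```python
def discounted_return(rewards, discount_factor):
    """Sum of rewards where the reward at step t is weighted by discount_factor ** t."""
    total = 0.0
    # Work backwards so each step adds its reward to the discounted future total
    for reward in reversed(rewards):
        total = reward + discount_factor * total
    return total

# Three steps costing -1 each, then a final reward of 0 on reaching the goal
rewards = [-1, -1, -1, 0]

print(discounted_return(rewards, 0.9))  # about -2.71
print(discounted_return(rewards, 1.0))  # -3.0 (no discounting)
```

A discount factor below 1 makes rewards that arrive sooner count more than rewards that arrive later, which is why shorter paths score better here.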

Applications:

- Game Playing: Training agents to play and master games like chess, Go, and video games.

- Robotics: Enabling robots to learn complex tasks such as walking, grasping, and navigation.

- Recommendation Systems: Personalizing recommendations based on user interactions and preferences.

- Finance: Optimizing trading strategies and portfolio management.

Types of RL:

- Model-Free vs. Model-Based RL:

- Model-Free RL: The agent learns directly from interactions with the environment without building a model of the environment. It includes methods like Q-Learning and Policy Gradients.

- Model-Based RL: The agent builds a model of the environment and uses it to make predictions and plan actions.

- Value-Based vs. Policy-Based Methods:

- Value-Based Methods: Focus on estimating the value function to determine the optimal policy. Examples include Q-Learning and SARSA.

- Policy-Based Methods: Directly learn the policy without estimating the value function. Examples include Policy Gradients and Actor-Critic methods.

Implementation:

Here’s a basic implementation of Q-Learning, a popular value-based RL algorithm, using Python:

1. Import Libraries:

Purpose: Load necessary libraries for RL and data manipulation.

import numpy as np

import random

import matplotlib.pyplot as plt

2. Define the Environment:

Purpose: Create a simple environment where the agent can learn. In this example, a grid world is used with basic reward structures.

class GridWorld:

    def __init__(self):

        self.grid_size = 4

        self.state_space = self.grid_size * self.grid_size

        self.action_space = 4 # Up, Down, Left, Right

        self.state = 0 # Start state

    def reset(self):

        self.state = 0

        return self.state

    def step(self, action):

        # Work in (row, column) coordinates so a move can never leave the grid or wrap between rows

        row, col = divmod(self.state, self.grid_size)

        if action == 0: # Up

            row = max(row - 1, 0)

        elif action == 1: # Down

            row = min(row + 1, self.grid_size - 1)

        elif action == 2: # Left

            col = max(col - 1, 0)

        elif action == 3: # Right

            col = min(col + 1, self.grid_size - 1)

        new_state = row * self.grid_size + col

        reward = -1 # Negative reward for each step to encourage shorter paths

        done = new_state == self.state_space - 1 # End state

        if done:

            reward = 0 # Reward of 0 for reaching the end

        self.state = new_state

        return new_state, reward, done

3. Q-Learning Algorithm:

Purpose: Implement the Q-Learning algorithm to update the Q-values and learn the optimal policy.

class QLearning:

    def __init__(self, env):

        self.env = env

        self.q_table = np.zeros((env.state_space, env.action_space))

        self.learning_rate = 0.1

        self.discount_factor = 0.9

        self.exploration_rate = 1.0

        self.exploration_decay = 0.995

        self.min_exploration_rate = 0.01

    def choose_action(self, state):

        if random.uniform(0, 1) < self.exploration_rate:

            return random.choice(range(self.env.action_space)) # Exploration

        else:

            return np.argmax(self.q_table[state]) # Exploitation

    def update_q_table(self, state, action, reward, next_state):

        best_next_action = np.argmax(self.q_table[next_state])

        td_target = reward + self.discount_factor * self.q_table[next_state, best_next_action]

        td_error = td_target - self.q_table[state, action]

        self.q_table[state, action] += self.learning_rate * td_error

    def train(self, episodes):

        for episode in range(episodes):

            state = self.env.reset()

            done = False

            while not done:

                action = self.choose_action(state)

                next_state, reward, done = self.env.step(action)

                self.update_q_table(state, action, reward, next_state)

                state = next_state

            # Decay exploration once per episode

            self.exploration_rate = max(self.min_exploration_rate, self.exploration_rate * self.exploration_decay)
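To make the update rule concrete, here is a single Q-Learning update worked through by hand. It is a standalone sketch: the q_update helper, the 3-state table, and the reward values are assumptions for illustration, using the same learning rate (0.1) and discount factor (0.9) as the class above.

```python
import numpy as np

def q_update(q_table, state, action, reward, next_state,
             learning_rate=0.1, discount_factor=0.9):
    """Apply one Q-Learning update in place and return the new Q-value."""
    # Target: immediate reward plus the discounted best value of the next state
    td_target = reward + discount_factor * np.max(q_table[next_state])
    td_error = td_target - q_table[state, action]
    q_table[state, action] += learning_rate * td_error
    return q_table[state, action]

# Hypothetical table with 3 states and 2 actions; only state 1 has learned values
q = np.zeros((3, 2))
q[1] = [2.0, 5.0]

# Taking action 1 in state 0 yields reward -1 and leads to state 1:
# td_target = -1 + 0.9 * 5.0 = 3.5, td_error = 3.5 - 0 = 3.5, update = 0.1 * 3.5
new_value = q_update(q, state=0, action=1, reward=-1.0, next_state=1)
print(new_value)  # approximately 0.35
```

Each update moves the stored value only a fraction (the learning rate) of the way toward the target, which is why many episodes are needed before the table settles.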

4. Train and Evaluate:

Purpose: Create the environment and Q-Learning agent, train the agent, and display the Q-table to evaluate learning.

# Create environment and Q-Learning agent

env = GridWorld()

agent = QLearning(env)

# Train the agent

agent.train(episodes=1000)

# Display Q-Table

print('Q-Table:')

print(agent.q_table)
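A raw Q-table is hard to read, so one common follow-up is to extract the greedy policy, that is, the highest-valued action in each state. The sketch below uses a hypothetical 2x2 Q-table rather than the trained agent; the greedy_policy helper and the values are assumptions for illustration.

```python
import numpy as np

ACTION_NAMES = ['Up', 'Down', 'Left', 'Right']  # same action order as the grid world

def greedy_policy(q_table, grid_size):
    """Return the index of the best action for each state, laid out as a grid."""
    best_actions = np.argmax(q_table, axis=1)
    return best_actions.reshape(grid_size, grid_size)

# Hypothetical Q-table for a 2x2 grid (states 0..3, goal at state 3)
q_demo = np.array([
    [-2.0, -1.0, -2.0, -1.5],  # state 0 (top-left)
    [-1.5,  0.0, -2.0, -1.0],  # state 1 (top-right)
    [-2.0, -1.0, -1.0,  0.0],  # state 2 (bottom-left)
    [ 0.0,  0.0,  0.0,  0.0],  # state 3 (goal; never updated, so its entry is meaningless)
])

policy = greedy_policy(q_demo, grid_size=2)
for row in policy:
    print([ACTION_NAMES[a] for a in row])
```

With the agent from the steps above, the same helper could be called as greedy_policy(agent.q_table, env.grid_size).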

Summary:

Reinforcement Learning is a powerful approach where an agent learns to make decisions through interactions with an environment, aiming to maximize rewards. It involves learning from feedback to develop a strategy or policy. Q-Learning, a value-based RL method, uses Q-values to learn the optimal actions. By understanding and implementing RL, you can develop systems that learn to perform complex tasks by continuously interacting with their environment.

Introduction to Generative Models

What Are Generative Models?

- Definition and Significance

- Overview of generative models.

- Applications in various domains.

- Comparison with Other AI Models

- Differences between generative models and traditional models.

Types of Generative Models

- Generative Adversarial Networks (GANs)

- Overview of GANs

- Definition and components (Generator and Discriminator).

- How GANs Work

- Training process and key concepts.

- Implementation Steps

- Setting up GAN architecture.

- Training and fine-tuning GAN models.

- Variational Autoencoders (VAEs)

- Overview of VAEs

- Definition and components (Encoder and Decoder).

- How VAEs Work

- Latent space representation and training process.

- Implementation Steps

- Building and training VAE models.

- Other Generative Models

- Normalizing Flows

- Concept, application, and implementation.

- Diffusion Models

- Concept, application, and implementation.

Practical Implementation of Generative Models

Implementing generative models involves several steps, from setting up the environment to deploying the models in real-world applications. Here’s a step-by-step guide with a real-life example of generating images using a Generative Adversarial Network (GAN). We’ll use the DCGAN (Deep Convolutional GAN) architecture to generate images of handwritten digits from the MNIST dataset.

Overview: Implementing Generative Models with DCGAN

In this overview, we’ll focus on implementing a GAN, specifically the Deep Convolutional GAN (DCGAN), to generate images of handwritten digits using the MNIST dataset.

Here’s a detailed step-by-step guide:

Step 1: Set Up the Environment

Before you start, you need to set up your programming environment with the necessary libraries. For this example, we’ll use Python along with popular libraries such as TensorFlow or PyTorch.

Choosing Tools and Libraries:

- TensorFlow: An open-source deep learning library for building and training neural networks.

- PyTorch: A popular deep learning framework known for its flexibility and ease of use.

- Libraries for Generative Models:

- TensorFlow/Keras: For building GANs and other neural network models.

- PyTorch: For its dynamic computational graph and extensive libraries for GANs.

Installing and Configuring Dependencies: Here’s how to set up the environment using Python and TensorFlow:

Install Libraries: Make sure you have the following libraries installed:

pip install tensorflow keras matplotlib

Step 2: Load and Prepare the Dataset

The MNIST dataset is a collection of handwritten digits (0-9) commonly used for training image processing systems. In this step, you’ll load and preprocess the dataset.

import numpy as np

import tensorflow as tf

from tensorflow.keras.datasets import mnist

from tensorflow.keras.preprocessing.image import img_to_array, array_to_img

# Load the MNIST dataset

(X_train, _), (_, _) = mnist.load_data()

# Preprocess the data: Normalize and reshape

X_train = X_train / 255.0 # Normalize pixel values to [0, 1]

X_train = np.expand_dims(X_train, axis=-1) # Add a channel dimension

Explanation:

- Loading the dataset: mnist.load_data() loads the MNIST dataset and splits it into training and testing sets. Here, we only need the training set, so we discard the labels and the test set.

- Normalization: Dividing by 255.0 scales the pixel values from 0-255 to 0-1, which helps in faster and more efficient training.

- Reshaping: The np.expand_dims function adds an extra dimension to the data to make it compatible with the convolutional layers, which expect 4D input (batch size, height, width, channels).
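The effect of these two preprocessing steps can be checked without downloading MNIST. The sketch below applies them to a small random array that stands in for a batch of images; the array and its batch size of 8 are assumptions for illustration.

```python
import numpy as np

# Stand-in for a batch of 8 grayscale 28x28 images with integer pixels in 0..255
rng = np.random.default_rng(0)
fake_batch = rng.integers(0, 256, size=(8, 28, 28), dtype=np.uint8)

scaled = fake_batch / 255.0                      # normalize to [0, 1]
with_channel = np.expand_dims(scaled, axis=-1)   # add the trailing channel axis

print(fake_batch.shape)     # (8, 28, 28)
print(with_channel.shape)   # (8, 28, 28, 1)
print(scaled.min() >= 0.0, scaled.max() <= 1.0)
```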

Step 3: Define the DCGAN Architecture

The DCGAN architecture consists of two main components:

- Generator: Generates new images from random noise.

- Discriminator: Classifies images as real (from the dataset) or fake (generated by the Generator).

Generator Network:

The Generator takes a random noise vector and generates an image.

from tensorflow.keras.layers import Dense, Reshape, Conv2DTranspose

from tensorflow.keras.models import Sequential

def build_generator():

    model = Sequential()

    model.add(Dense(7*7*256, activation='relu', input_dim=100)) # Input is a vector of 100 random numbers

    model.add(Reshape((7, 7, 256))) # Reshape into a 7x7x256 feature map

    # ReLU activations between layers keep the Generator from collapsing into a purely linear map

    model.add(Conv2DTranspose(128, kernel_size=3, strides=2, padding='same', activation='relu'))

    model.add(Conv2DTranspose(64, kernel_size=3, strides=2, padding='same', activation='relu'))

    model.add(Conv2DTranspose(1, kernel_size=3, activation='sigmoid', padding='same'))

    return model

Explanation:

- Dense Layer: The input to the generator is a 100-dimensional noise vector. This layer projects it to 7 x 7 x 256 = 12,544 values.

- Reshape Layer: Reshapes that flat vector into a 7x7x256 feature map.

- Conv2DTranspose Layers: These layers upsample the input, effectively increasing the spatial dimensions. The strides of 2 double the height and width of the feature maps. The final layer outputs a 28×28 image with one channel (grayscale) and a sigmoid activation to scale the pixel values to [0, 1].
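The spatial sizes described above can be verified with simple arithmetic. For a transposed convolution with padding='same', the output height and width equal the input size multiplied by the stride. The helper below is a hypothetical illustration of that rule, not a Keras function.

```python
def conv_transpose_same_output(size, stride):
    """Output height/width of a transposed convolution with padding='same'."""
    return size * stride

# Strides of the three Conv2DTranspose layers in the generator above
# (the last layer uses the default stride of 1)
size = 7
sizes = [size]
for stride in (2, 2, 1):
    size = conv_transpose_same_output(size, stride)
    sizes.append(size)

print(sizes)  # [7, 14, 28, 28]
```

Starting from the 7x7 feature map, two stride-2 layers reach 28x28, and the final stride-1 layer keeps that size while reducing the depth to a single channel.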

Discriminator Network:

The Discriminator takes an image and outputs a probability that the image is real.

from tensorflow.keras.layers import Conv2D, Flatten, Dropout, LeakyReLU

def build_discriminator():

    model = Sequential()

    model.add(Conv2D(64, kernel_size=3, strides=2, padding='same', input_shape=(28, 28, 1)))

    model.add(LeakyReLU()) # Nonlinearity after each convolution, as is common in DCGAN discriminators

    model.add(Conv2D(128, kernel_size=3, strides=2, padding='same'))

    model.add(LeakyReLU())

    model.add(Flatten())

    model.add(Dropout(0.4))

    model.add(Dense(1, activation='sigmoid'))

    return model

Explanation:

- Conv2D Layers: These layers downsample the input image, reducing its spatial dimensions while increasing the depth.

- Flatten Layer: Flattens the feature maps into a single vector.

- Dropout Layer: Helps prevent overfitting by randomly setting a fraction of input units to 0 at each update during training.

- Dense Layer: Outputs a single probability value (real or fake) using a sigmoid activation function.

Step 4: Compile the Models

After defining the Generator and Discriminator, we compile them with appropriate optimizers and loss functions.

from tensorflow.keras.optimizers import Adam

# Create the models

generator = build_generator()

discriminator = build_discriminator()

# Compile the Discriminator

discriminator.compile(optimizer=Adam(), loss='binary_crossentropy', metrics=['accuracy'])

# Build and compile the GAN

discriminator.trainable = False # Freeze the Discriminator during GAN training

gan_input = tf.keras.Input(shape=(100,))

x = generator(gan_input)

gan_output = discriminator(x)

gan = tf.keras.Model(gan_input, gan_output)

gan.compile(optimizer=Adam(), loss='binary_crossentropy')

Explanation:

- Compile the Discriminator: The Discriminator is compiled with the Adam optimizer and binary crossentropy loss since it is a binary classification problem.

- Freeze the Discriminator: Setting discriminator.trainable = False before building the combined model ensures that the Discriminator weights are not updated while the Generator is trained through the GAN.

- GAN Model: The GAN combines the Generator and the Discriminator. The input to the GAN is a noise vector, which is passed to the Generator to produce an image. This image is then passed to the Discriminator to determine if it is real or fake. The GAN is compiled with the Adam optimizer and binary crossentropy loss.
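To see what binary crossentropy measures for each network, the sketch below evaluates it by hand in NumPy. The binary_crossentropy helper and the discriminator output values are assumptions for illustration; Keras computes the equivalent quantity internally.

```python
import numpy as np

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary crossentropy between labels and predicted probabilities."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)  # avoid log(0)
    return float(np.mean(-(y_true * np.log(y_pred)
                           + (1.0 - y_true) * np.log(1.0 - y_pred))))

# Hypothetical Discriminator outputs (probability that an image is real)
d_on_real = np.array([0.9, 0.8])  # fairly confident the real images are real
d_on_fake = np.array([0.2, 0.1])  # fairly confident the fake images are fake

# Discriminator: real images labeled 1, fake images labeled 0
d_loss = 0.5 * (binary_crossentropy(np.ones(2), d_on_real)
                + binary_crossentropy(np.zeros(2), d_on_fake))

# Generator: the same fake images, but labeled 1 because it wants them judged real
g_loss = binary_crossentropy(np.ones(2), d_on_fake)

print(d_loss)  # small: the Discriminator is doing well
print(g_loss)  # large: the Generator is not yet fooling it
```

The two losses pull in opposite directions on the same predictions, which is the adversarial part of the setup.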

Step 5: Train the DCGAN

Training involves alternating between training the Discriminator and the Generator. The Discriminator is trained to distinguish between real and fake images, while the Generator is trained to fool the Discriminator.

def train_gan(epochs, batch_size=128):

    for epoch in range(epochs):

        # Train Discriminator

        idx = np.random.randint(0, X_train.shape[0], batch_size)

        real_imgs = X_train[idx]

        fake_imgs = generator.predict(np.random.randn(batch_size, 100))

        d_loss_real = discriminator.train_on_batch(real_imgs, np.ones((batch_size, 1)))

        d_loss_fake = discriminator.train_on_batch(fake_imgs, np.zeros((batch_size, 1)))

        d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

        # Train Generator

        g_loss = gan.train_on_batch(np.random.randn(batch_size, 100), np.ones((batch_size, 1)))

        print(f"Epoch {epoch} | Discriminator Loss: {d_loss[0]} | Generator Loss: {g_loss}")

        # Optionally save generated images

        if epoch % 100 == 0:

            save_generated_images(epoch)

def save_generated_images(epoch):

    noise = np.random.randn(16, 100)

    generated_images = generator.predict(noise)

    # The Generator ends in a sigmoid, so its output is already in [0, 1]; no rescaling is needed

    for i in range(16):

        img = array_to_img(generated_images[i])

        img.save(f"gan_image_{epoch}_{i}.png")

# Start training

train_gan(epochs=10000, batch_size=128)

Explanation:

- Training Loop: For each epoch:

- Train Discriminator:

- Select a random batch of real images from X_train.

- Generate fake images using the Generator.

- Train the Discriminator on real images with labels 1 (real) and fake images with labels 0 (fake).

- Calculate the loss for real and fake images and average them.

- Train Generator:

- Generate a batch of noise vectors.

- Train the GAN with labels 1, aiming to fool the Discriminator into thinking the generated images are real.

- Print Losses: Display the Discriminator and Generator losses for each epoch.

- Save Generated Images: Optionally save generated images every 100 epochs to visualize the progress.

Step 6: Deploy and Use the GAN

Once trained, you can use the Generator to produce new images from random noise. These images can be saved, displayed, or used in various applications.

# Generate new images

noise = np.random.randn(10, 100)

new_images = generator.predict(noise)

# Display or save the generated images

import matplotlib.pyplot as plt

for i in range(10):

    plt.imshow(new_images[i].reshape(28, 28), cmap='gray')

    plt.axis('off')

    plt.show()

Explanation:

- Generate New Images: Create a batch of random noise vectors and use the Generator to produce images.

- Display or Save Images: Use Matplotlib to display the generated images or save them for further use.

Output

2/2 ━━━━━━━━━━━━━━━━━━━━ 0s 33ms/step

2/2 ━━━━━━━━━━━━━━━━━━━━ 0s 46ms/step

2/2 ━━━━━━━━━━━━━━━━━━━━ 0s 42ms/step

2/2 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step

2/2 ━━━━━━━━━━━━━━━━━━━━ 0s 23ms/step

2/2 ━━━━━━━━━━━━━━━━━━━━ 0s 23ms/step

2/2 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step

2/2 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step

2/2 ━━━━━━━━━━━━━━━━━━━━ 0s 27ms/step

2/2 ━━━━━━━━━━━━━━━━━━━━ 0s 24ms/step

2/2 ━━━━━━━━━━━━━━━━━━━━ 0s 24ms/step

2/2 ━━━━━━━━━━━━━━━━━━━━ 0s 26ms/step

2/2 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step

By following these steps, you can build and train a DCGAN to generate realistic images similar to the ones in the MNIST dataset. This process involves loading and preprocessing data, defining and compiling models, training the models, and using the trained Generator to create new images.

Fine-Tuning and Optimization: Improving Model Performance

When working on machine learning projects, fine-tuning and optimizing your model is crucial to achieve the best possible performance. Here are some techniques for enhancing model accuracy and efficiency:

- Hyperparameter Tuning:

- Adjust learning rates, batch sizes, and the number of epochs to find the optimal settings.

- Use techniques like Grid Search or Random Search to explore different combinations of hyperparameters.

- Consider more advanced methods like Bayesian Optimization for more efficient searches.

- Regularization:

- L1 and L2 Regularization: Add penalties to the loss function to reduce overfitting by discouraging overly complex models.

- Dropout: Randomly drop neurons during training to prevent the model from becoming too dependent on specific nodes.

- Data Augmentation:

- Increase the diversity of your training data by applying transformations such as rotations, flips, and color changes.

- This helps the model generalize better to unseen data.

- Transfer Learning:

- Use pre-trained models on similar tasks and fine-tune them on your specific dataset.

- This approach can save time and computational resources while leveraging the knowledge learned by the pre-trained model.

- Early Stopping:

- Monitor the model’s performance on a validation set during training.

- Stop training when the performance stops improving to avoid overfitting.

- Model Pruning and Quantization:

- Prune unimportant weights from the model to reduce its size and speed up inference.

- Quantize the model to use lower precision (e.g., 8-bit integers) without significantly losing accuracy.

- Ensemble Methods:

- Combine the predictions of multiple models to improve overall performance.

- Techniques like bagging (e.g., Random Forest) and boosting (e.g., XGBoost) are popular ensemble methods.
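Of the techniques listed above, early stopping is simple enough to sketch in a few lines. The function below is a hypothetical, framework-independent illustration of the patience rule; the validation losses are made-up numbers.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the epoch at which training would stop.

    Training stops once the validation loss has failed to improve on its
    best value for `patience` consecutive epochs.
    """
    best_loss = float('inf')
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses) - 1  # never triggered: ran through every epoch

# Hypothetical validation losses recorded after each epoch
losses = [0.90, 0.70, 0.65, 0.66, 0.67, 0.64]

print(early_stopping_epoch(losses, patience=2))  # 4
print(early_stopping_epoch(losses, patience=3))  # 5
```

The example also shows the trade-off in choosing patience: with a patience of 2 training stops before the later improvement to 0.64 is seen, while a patience of 3 allows it. In Keras, the EarlyStopping callback offers this behavior through its monitor and patience arguments.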

Handling Overfitting and Underfitting: Strategies for Common Issues

Overfitting and underfitting are common challenges in model training. Here’s how to address them:

- Overfitting:

- More Data: Increase the size of your training dataset to help the model generalize better.

- Simplify the Model: Reduce the model complexity by decreasing the number of layers or neurons.

- Regularization: Apply L1/L2 regularization or dropout as mentioned above.

- Cross-Validation: Use k-fold cross-validation to ensure your model performs well on different subsets of the data.

- Underfitting:

- Increase Model Complexity: Add more layers or neurons so the model has enough capacity to capture the patterns in the data.

- Feature Engineering: Create new features or use more relevant ones to help the model learn better patterns.

- Longer Training: Train the model for more epochs to allow it to learn the underlying patterns better.

- Reduce Regularization: If using regularization, reduce its strength to allow the model to fit the data more closely.
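The k-fold cross-validation mentioned under overfitting can be sketched with NumPy alone. The k_fold_indices generator below is a hypothetical helper written for this illustration; in practice scikit-learn's KFold class serves the same purpose.

```python
import numpy as np

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_indices, validation_indices) pairs for k-fold cross-validation."""
    rng = np.random.default_rng(seed)
    indices = rng.permutation(n_samples)   # shuffle once up front
    folds = np.array_split(indices, k)     # k nearly equal parts
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, val_idx

splits = list(k_fold_indices(n_samples=10, k=5))
for train_idx, val_idx in splits:
    print(sorted(val_idx.tolist()), len(train_idx))
```

Every sample appears in exactly one validation fold, so averaging the k validation scores gives a less optimistic estimate than a single train/test split.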

By applying these techniques, you can enhance your model’s performance, ensuring it generalizes well to new data and achieves high accuracy.

Deployment and Integration: Deploying Generative Models

Deploying generative models in production environments involves several steps to ensure they function efficiently and reliably. Here are some methods for deploying these models:

- Containerization:

- Docker: Package your model and its dependencies into a Docker container. This ensures consistency across different environments.

- Kubernetes: Orchestrate your Docker containers using Kubernetes for scalable and managed deployments.

- Cloud Services:

- AWS SageMaker: Use Amazon Web Services to deploy your model with auto-scaling and monitoring features.

- Google Cloud Vertex AI (formerly AI Platform): Deploy on Google Cloud with support for various frameworks and automatic scaling.

- Azure Machine Learning: Microsoft’s platform provides tools for deploying and managing your model in the cloud.

- Model Serving Frameworks:

- TensorFlow Serving: Specifically designed for deploying TensorFlow models, providing high performance and flexibility.

- TorchServe: A serving library for PyTorch models, offering multi-model serving and scaling.

- FastAPI: A modern web framework for building APIs quickly, which can be used to serve models with high performance.

- API Development:

- RESTful APIs: Expose your model as a REST API using frameworks like Flask or Django, enabling easy integration with other services.

- GraphQL: Use GraphQL for more flexible queries and efficient data fetching, suitable for complex applications.

- Edge Deployment:

- Deploy models on edge devices like smartphones or IoT devices for low-latency applications. Use frameworks like TensorFlow Lite or ONNX Runtime for optimized performance on limited hardware.

Integrating Models into Applications: Practical Applications and Use Cases

Integrating generative models into applications can provide numerous innovative features and enhancements. Here are some practical applications and use cases:

- Chatbots and Virtual Assistants:

- Natural Language Generation (NLG): Use models like GPT-3 to generate human-like responses in chatbots and virtual assistants, providing more natural and engaging interactions.

- Content Creation:

- Text Generation: Automate the creation of articles, summaries, and reports. Tools like OpenAI’s GPT can be integrated to assist writers by generating drafts or enhancing content.

- Image and Video Generation: Use models like DALL-E or GANs (Generative Adversarial Networks) to create images or videos based on textual descriptions or other inputs.

- Personalization and Recommendations:

- Customized Content: Generate personalized recommendations for users in e-commerce, entertainment, and social media platforms. Generative models can create unique content tailored to individual preferences.

- Design and Art:

- Creative Tools: Integrate generative models into design software to help artists and designers create new artworks, patterns, and designs. These models can suggest variations or generate entirely new concepts.

- Healthcare and Life Sciences:

- Drug Discovery: Use generative models to predict molecular structures and suggest new compounds for drug development.

- Medical Imaging: Enhance medical imaging techniques by generating high-quality images from low-resolution scans, aiding in better diagnosis and treatment planning.

- Gaming and Entertainment:

- Procedural Content Generation: Create levels, characters, and storylines in video games dynamically. Generative models can provide endless variations, enhancing replayability and user engagement.

- Interactive Storytelling: Develop interactive stories and narratives where the plot adapts based on user input, providing a unique experience every time.

By deploying and integrating generative models into various applications, you can unlock new possibilities and improve user experiences across different domains.

Challenges and Best Practices: Common Challenges in Generative Model Implementation

Quality and Accuracy Issues

Common Challenges:

- Data Quality: Poor quality or biased training data can lead to inaccurate or undesirable outputs.

- Overfitting: Generative models might memorize training data instead of learning to generate new data.

- Mode Collapse: In GANs, the generator might produce limited variations, missing out on diverse outputs.

Strategies for Ensuring High-Quality Output:

- Data Preprocessing: Ensure your data is clean, well-labeled, and representative of the target distribution.

- Regularization: Use techniques like dropout or weight decay to prevent overfitting.

- Evaluation Metrics: Utilize metrics such as BLEU for text or FID for images to quantitatively assess output quality.

- Human Evaluation: Incorporate human feedback loops to evaluate and improve model outputs.

- Ensemble Methods: Combine multiple models to improve output quality and robustness.
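For the FID metric mentioned above, the core calculation is the Fréchet distance between two Gaussians fitted to feature vectors of real and generated images. The sketch below implements only that formula in NumPy. In the real metric the features come from a pretrained Inception network; here random vectors stand in for them, and the function name is an assumption for illustration.

```python
import numpy as np

def frechet_distance(features_a, features_b):
    """Fréchet distance between Gaussians fitted to two sets of feature vectors."""
    mu_a, mu_b = features_a.mean(axis=0), features_b.mean(axis=0)
    cov_a = np.cov(features_a, rowvar=False)
    cov_b = np.cov(features_b, rowvar=False)
    # Tr((cov_a cov_b)^(1/2)) equals the sum of the square roots of the
    # eigenvalues of cov_a @ cov_b, which are real and non-negative
    eigvals = np.linalg.eigvals(cov_a @ cov_b)
    trace_sqrt = np.sum(np.sqrt(np.clip(eigvals.real, 0.0, None)))
    mean_term = np.sum((mu_a - mu_b) ** 2)
    return float(mean_term + np.trace(cov_a) + np.trace(cov_b) - 2.0 * trace_sqrt)

# Stand-in "features": 200 vectors with 4 dimensions each
rng = np.random.default_rng(0)
real_features = rng.normal(size=(200, 4))
shifted_features = real_features + 3.0  # same spread, different mean

fid_same = frechet_distance(real_features, real_features)
fid_shifted = frechet_distance(real_features, shifted_features)

print(fid_same)     # approximately 0: identical distributions
print(fid_shifted)  # approximately 36: squared mean shift of 3 in each of 4 dimensions
```

Lower values indicate that generated samples are statistically closer to real ones; the score is only comparable between runs that use the same feature extractor and sample sizes.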

Computational Resources

Common Challenges:

- High Computational Demand: Generative models, especially deep ones, require significant computational power for training.

- Memory Constraints: Large models may exceed available memory, causing training to fail or slow down.

Managing Computational Requirements and Efficiency:

- Cloud Computing: Leverage cloud services like AWS, Google Cloud, or Azure for scalable resources.

- Model Optimization: Use techniques like model pruning, quantization, and mixed-precision training to reduce resource consumption.

- Distributed Training: Implement distributed training strategies to speed up the process by dividing the workload across multiple machines.

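- Efficient Architectures: Choose architectures designed for efficiency. MobileNets and EfficientNet are classification networks rather than generative models, but the techniques behind them, such as depthwise separable convolutions, can be borrowed to build lighter generators and discriminators.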

Ethical Considerations

Common Challenges:

- Bias in Generated Content: Models can perpetuate or even amplify biases present in the training data.

- Misuse of Technology: Generative models can be used to create misleading or harmful content.

- Privacy Concerns: Training on sensitive data can lead to privacy violations if the model memorizes and reproduces it.

Addressing Biases and Ethical Concerns:

- Diverse Datasets: Use diverse and representative datasets to minimize biases.

- Bias Detection and Mitigation: Implement techniques to detect and mitigate biases in your models, such as fairness-aware algorithms.

- Transparency and Explainability: Make model decisions transparent and provide explanations for generated content.

- Ethical Guidelines: Follow ethical guidelines and best practices for AI development, such as those provided by organizations like IEEE or AI ethics frameworks.

Best Practices for Developing Generative Models

Guidelines for Effective Model Development

- Define Clear Objectives: Clearly define what you want to achieve with your generative model, including specific use cases and desired outcomes.

- Iterative Development: Develop your model iteratively, starting with a simple version and gradually adding complexity based on performance evaluation.

- Robust Training Pipeline: Set up a robust training pipeline with automated data preprocessing, model training, and evaluation.

- Regular Monitoring: Continuously monitor model performance and retrain as needed to maintain quality and relevance.
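
The regular monitoring guideline can be sketched as a small rolling check on an evaluation score. This is an assumption-laden example: the class name `QualityMonitor`, the window size, and the threshold are all hypothetical, and the score could be any metric where higher means better.

```python
from collections import deque


class QualityMonitor:
    """Flags retraining when the rolling mean of a quality score drops."""

    def __init__(self, window=5, threshold=0.7):
        self.scores = deque(maxlen=window)  # keeps only the latest scores
        self.threshold = threshold

    def record(self, score):
        """Add a score and return True if retraining looks warranted."""
        self.scores.append(score)
        window_full = len(self.scores) == self.scores.maxlen
        mean = sum(self.scores) / len(self.scores)
        # Only raise the flag once a full window of evidence exists.
        return window_full and mean < self.threshold
```

Waiting for a full window before flagging avoids reacting to a single bad evaluation run.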

Tips for Successful Implementation and Deployment

- Experimentation and Tuning: Experiment with different model architectures, hyperparameters, and training techniques to find the best configuration.

- Scalability: Design your deployment infrastructure to scale with demand, ensuring reliability and performance.

- Version Control: Use version control for your models and code to track changes and facilitate collaboration.

- Documentation: Maintain comprehensive documentation of your model development process, including data sources, preprocessing steps, and evaluation metrics.

- User Feedback: Collect and incorporate user feedback to continuously improve your model and its outputs.

- Security Measures: Implement security measures to protect your model and data, including encryption and access controls.

- Compliance: Ensure compliance with relevant regulations and standards, especially when dealing with sensitive data.
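
For the version control and documentation tips, one lightweight option is to fingerprint each training configuration so that every model artifact can be traced back to the settings that produced it. The sketch below assumes a small set of fields; `ExperimentConfig`, its attributes, and `fingerprint` are hypothetical names chosen for illustration.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ExperimentConfig:
    model_name: str
    learning_rate: float
    batch_size: int
    dataset_version: str


def fingerprint(config):
    """Stable short hash of a config, usable as a run or model tag."""
    # sort_keys makes the JSON text independent of field order.
    payload = json.dumps(asdict(config), sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


config = ExperimentConfig("vae-small", 1e-3, 64, "v1")
tag = fingerprint(config)
```

The same configuration always yields the same tag, so the tag can be stored next to checkpoints and evaluation reports.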

By addressing these challenges and following best practices, you can develop, deploy, and maintain generative models that deliver high-quality, reliable, and ethically sound outputs.

Future Trends and Directions: Emerging Trends in Generative AI

Advancements in Model Architectures

New Developments and Innovations:

- Transformers and Attention Mechanisms:

- Transformers: Transformer-based models such as GPT-4 continue to dominate text generation because self-attention lets them handle long-range dependencies and generate coherent, contextually relevant content.

- Efficient Transformers: Innovations like sparse attention and linear transformers aim to reduce computational complexity while maintaining performance.

- Diffusion Models:

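- Denoising Diffusion Probabilistic Models (DDPMs): These models generate high-quality images by learning to reverse a gradual noising (diffusion) process. They often produce more diverse samples than GANs, though sampling is typically slower.

- Score-Based Generative Models: These models use score matching to estimate the gradient of the log data density and generate samples by following it. Training tends to be more stable than with GANs and less prone to mode collapse.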

- Multi-Modal Models:

- Cross-Modal Generation: Models like DALL-E generate images from textual descriptions. CLIP (Contrastive Language–Image Pre-training) does not generate content itself; it learns a shared embedding space for text and images, which is commonly used to guide or rank generated images.

- Unified Models: Emerging architectures aim to handle multiple modalities (text, image, audio) within a single framework, enhancing versatility and application potential.

- Meta-Learning and Few-Shot Learning:

- Meta-Learning: Enables models to learn how to learn, adapting quickly to new tasks with minimal data, crucial for generative tasks where data is scarce.

- Few-Shot Learning: Advances in this area help models generate relevant content with very few examples, reducing the need for large annotated datasets.
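
To make the diffusion bullet above more concrete, here is a minimal sketch of the forward (noising) half of a DDPM, the process that the trained model learns to reverse. It assumes a linear noise schedule with the commonly used range from 1e-4 to 0.02, treats a data point as a plain list of floats, and uses hypothetical function names. The learned reverse network is not shown.

```python
import math
import random


def linear_beta_schedule(steps, beta_start=1e-4, beta_end=0.02):
    """Noise variances beta_1..beta_T, linearly spaced."""
    if steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (steps - 1)
    return [beta_start + i * step for i in range(steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def add_noise(x0, t, alpha_bars, rng=random):
    """Sample x_t from N(sqrt(alpha_bar_t) * x0, (1 - alpha_bar_t) * I)."""
    a = alpha_bars[t]
    return [
        math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0)
        for x in x0
    ]


alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
noisy = add_noise([0.5, -0.2, 0.1], t=500, alpha_bars=alpha_bars)
```

As `t` grows, `alpha_bars[t]` shrinks toward zero, so the sample keeps less of the original data and more pure noise. Generation runs this in reverse, starting from noise.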

Potential Applications and Use Cases

Future Directions and Opportunities in Generative AI:

- Healthcare:

- Personalized Medicine: Generative models can propose candidate drug molecules and help tailor treatment plans based on individual genetic profiles and medical histories.

- Medical Imaging: Enhanced image generation and analysis for better diagnostics and treatment planning.

- Entertainment and Media:

- Virtual Reality and Gaming: Real-time content generation for immersive experiences, including dynamic storylines and environments.

- Automated Content Creation: Tools for generating high-quality music, videos, and graphics, supporting creators and reducing production costs.

- Education:

- Intelligent Tutoring Systems: Personalized learning experiences generated by understanding individual student needs and adapting content accordingly.

- Content Generation: Creating educational materials, exercises, and interactive scenarios tailored to different learning styles and levels.

- Business and Marketing:

- Market Analysis: Generative models can summarize trends and simulate market scenarios, aiding strategic decision-making.

- Personalized Marketing: Creating tailored advertisements and product recommendations based on consumer data.

- Environmental Science:

- Climate Modeling: Generating detailed simulations of climate change impacts and potential mitigation strategies.

- Resource Management: Optimizing the management and allocation of natural resources through generative data analysis.

Conclusion

Summary of Key Points

Recap of Generative Models and Machine Learning Principles:

- Generative models are capable of creating new data that resembles a given dataset, with applications in text, image, and other data types.

- Key principles include training on large, high-quality datasets, tuning model architectures, and leveraging techniques like transfer learning and regularization.

Overview of Practical Implementation Steps and Best Practices:

- Ensure data quality through preprocessing and augmentation.

- Optimize model performance via hyperparameter tuning, regularization, and advanced evaluation metrics.

- Deploy models efficiently using containerization, cloud services, and scalable frameworks.

- Address ethical concerns by mitigating biases, ensuring transparency, and following ethical guidelines.

Final Thoughts

The Impact of Generative Models on AI and Machine Learning:

- Generative models are revolutionizing fields like healthcare, entertainment, education, and business by enabling the creation of high-quality, contextually relevant content.

- Their ability to generate data has significant implications for research, innovation, and problem-solving across various domains.

The Importance of Continued Research and Development:

- Ongoing research is essential to overcome current limitations, improve model efficiency, and expand the range of applications.

- Ethical considerations and bias mitigation will remain critical as these models become more integrated into everyday applications.

- Collaboration between researchers, industry professionals, and policymakers will be crucial to harness the full potential of generative models while ensuring responsible and beneficial use.

By staying informed about emerging trends and best practices, professionals can leverage generative AI to drive innovation and create impactful solutions in their respective fields.

External Resources

Here’s a list of external resources that can help you learn how to implement generative models using machine learning principles:

“Generative Adversarial Nets” by Ian Goodfellow et al.

- Link

- This is the original paper on GANs, written by the creator of the concept. It provides a thorough explanation of the theory behind GANs.

“Tutorial on Variational Autoencoders” by Carl Doersch

- Tutorial

- This tutorial explains VAEs in detail, including their mathematical foundation and implementation.

“An Introduction to Variational Autoencoders” by Diederik P. Kingma and Max Welling

- Paper

- Written by the authors of the original VAE paper, this work provides a comprehensive introduction to the concept.

FAQs

1. What are generative models?

Generative models create new data samples similar to a given dataset. Examples include GANs and VAEs.

2. How do GANs work?

GANs have two networks: a generator creates fake data, and a discriminator distinguishes between real and fake data. They train together in a competitive process.

3. What’s the difference between GANs and VAEs?

GANs use a game-theoretic approach with a generator and discriminator, while VAEs use probabilistic methods to learn data distributions.

4. How should data be prepared for generative models?

Data should be collected, cleaned, normalized, and augmented to ensure quality and diversity for effective training.

5. What are common challenges in training generative models?

Challenges include mode collapse, training instability, and evaluating the quality of generated samples.
