How to Automate Data Cleaning with PyCaret

Introduction: Automating Data Cleaning with PyCaret

Data cleaning is often the most time-consuming and tedious part of any data project. Before you can analyze, visualize, or build models, you need to deal with missing values, duplicates, and inconsistent entries. It’s not the most exciting task, but it’s essential.

What if you could spend less time on cleaning and more time on exploring insights? That’s where PyCaret comes in. This powerful Python library isn’t just for machine learning; it also includes tools to simplify the data preparation process.

In this blog post, we’ll show you how to use PyCaret to automate data cleaning, covering everything from handling missing data to scaling and transforming features. By the end, you’ll see how easy it is to clean up messy datasets and get them ready for analysis in just a few lines of code.

So, if you’re tired of spending more time on cleaning than creating, keep reading—this post might just change the way you work with data forever!

What Is Data Cleaning and Why Is It Crucial for Machine Learning?

Data cleaning is the process of identifying and fixing issues in a dataset to ensure it’s accurate, consistent, and ready for analysis or modeling. This includes removing duplicates, handling missing values, correcting errors, and transforming data into a suitable format for machine learning. It’s like preparing ingredients before cooking—they need to be fresh and sorted before creating something amazing.
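To make these steps concrete, here is a minimal pandas sketch on a tiny made-up table. The column names and values are invented for illustration, and this is plain pandas rather than anything PyCaret-specific:

```python
import pandas as pd

# Tiny made-up dataset with a duplicate row, a missing value, and inconsistent text
raw = pd.DataFrame({
    "name": ["Ann", "Bob", "Bob", "Cara"],
    "age": [34.0, None, None, 29.0],
    "city": ["paris", "Paris ", "Paris ", "ROME"],
})

cleaned = raw.drop_duplicates().copy()                           # remove exact duplicate rows
cleaned["age"] = cleaned["age"].fillna(cleaned["age"].median())  # fill missing ages with the median
cleaned["city"] = cleaned["city"].str.strip().str.title()        # normalize spacing and casing

print(cleaned)
```

Each line maps to one of the tasks named above: removing duplicates, handling missing values, and correcting inconsistent entries.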

Why Is Data Cleaning Crucial for Machine Learning?

- Better Results

Machine learning models do their best when they learn from clean and clear data. If the data is messy, it can confuse the model and cause it to make incorrect predictions. Clean data helps the model work better and make smarter choices.

- Fewer Mistakes

Messy data is often full of errors, like missing pieces or incorrect entries. If you don’t fix these, your model might learn the wrong patterns. Cleaning the data ensures you avoid these problems.

- Makes Data Usable

Raw data often isn’t ready to use straight away. Data cleaning helps organize it so you can jump into analysis or build models without wasting time trying to make sense of it.

- Saves Time Later

Spending time upfront to clean your data saves headaches later. If you skip this step, you might end up fixing mistakes during modeling or dealing with bad results, which can waste even more time.

In short, data cleaning sets the stage for everything else. Without it, even the smartest machine learning model won’t work well. If you want accurate and reliable results, start by making sure your data is clean and ready to go.

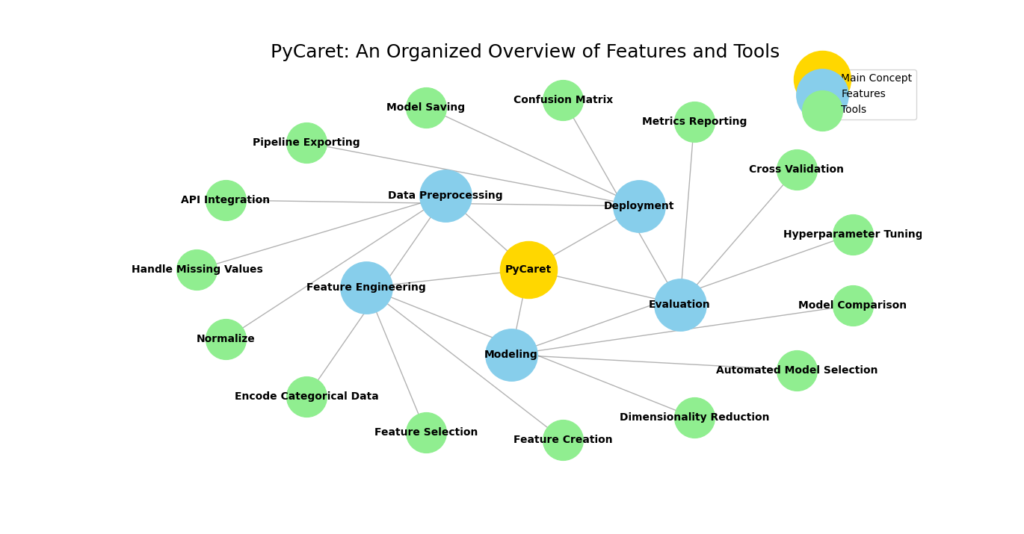

Introduction to PyCaret: A low-code machine learning library for automation

PyCaret is a low-code library designed to make machine learning faster and simpler for everyone. Whether you’re building predictive models, automating data preparation, or trying out different algorithms, PyCaret makes the process smooth and efficient.

PyCaret stands out because it takes care of the heavy lifting. With just a few lines of code, you can handle data preprocessing, compare models, tune hyperparameters, and even deploy your final model. It’s perfect for data scientists, business analysts, and beginners who want powerful results without getting stuck in complicated code.

How PyCaret simplifies the data preprocessing workflow

Getting your data ready for machine learning can feel like a lot of work. You have to deal with missing values, scale numbers, change text into numbers, and split your data into training and testing sets. It’s an important step, but it can take up a lot of time. That’s where PyCaret helps—it makes all of this faster and simpler.

Here’s how PyCaret makes your life easier:

- Fixing Missing Data Automatically

If your dataset has gaps or missing pieces, PyCaret spots them and gives you quick ways to fix them. You can fill the blanks with averages, use smarter methods, or just remove the rows, all with minimal effort.

- Quick Transformations

Need to scale numbers or turn text into something your model understands? PyCaret can do these transformations in just one line of code. Everything happens behind the scenes, so you don’t have to manually write a bunch of steps.

- Smart Feature Engineering

PyCaret can create new features from dates, group similar categories, or even come up with better ways to organize your data. It takes care of the tricky stuff so you can focus on building your model.

- Easy Data Splitting

Splitting data into training and testing sets can sometimes go wrong if preprocessing isn’t consistent. PyCaret ensures the same steps are applied to both, so your model gets the right data to learn from.

- Custom Preprocessing Options

If you need something specific, PyCaret lets you tweak the process. You can add or remove steps without diving into complex code.

By handling these tasks for you, PyCaret frees up your time and helps you focus on the fun parts of machine learning. Whether you’re just starting out or have years of experience, it makes preprocessing easy, reliable, and stress-free.
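To see why consistent preprocessing across the train and test splits matters, here is a rough scikit-learn sketch of the idea. It is not PyCaret’s internal code, and the data is randomly generated for illustration. The imputer and scaler learn their statistics from the training split only and are then reused unchanged on the test split:

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Made-up numeric data with a few gaps
rng = np.random.default_rng(0)
X = rng.normal(loc=50, scale=10, size=(100, 3))
X[::10, 0] = np.nan  # every 10th row is missing its first feature

X_train, X_test = train_test_split(X, test_size=0.3, random_state=0)

# Fit the preprocessing steps on the training split only...
prep = make_pipeline(SimpleImputer(strategy="mean"), StandardScaler())
X_train_clean = prep.fit_transform(X_train)
# ...then reuse the learned means and scales on the test split
X_test_clean = prep.transform(X_test)

print(X_train_clean.shape, X_test_clean.shape)
```

Fitting on the training split alone keeps information from the test rows out of the learned statistics, which is the kind of bookkeeping an automated pipeline takes off your hands.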

Setting Up PyCaret for Automated Data Cleaning

Installing PyCaret: A Quick Guide

Getting started with PyCaret is easy and only takes a few steps.

How to Install PyCaret in Python Using pip

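1. Open Your Terminal or Command Prompt

First, make sure you have Python installed. Older guides mention version 3.7 or above, but recent PyCaret releases require a newer Python, so check the installation docs for the supported range. Then open your terminal or command prompt.

2. Run the pip Install Command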

Type the following command to install PyCaret:

pip install pycaret

This will download and install all the necessary components for PyCaret.

3. Verify the Installation

Once the installation is complete, check if PyCaret was installed correctly by running:

import pycaret

print(pycaret.__version__)

If no errors appear and the version is printed, you’re ready to go!
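If you would rather check for the package without importing it (importing PyCaret can take a few seconds), a small standard-library sketch works too. The helper name installed_version is made up for this example:

```python
from importlib import metadata


def installed_version(package: str):
    """Return the installed version string, or None if the package is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


version = installed_version("pycaret")
print(version if version else "PyCaret is not installed in this environment")
```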

Prerequisites for Running PyCaret in Jupyter Notebook

To use PyCaret in Jupyter Notebook, there are a few additional steps to ensure everything runs smoothly:

1. Install Jupyter Notebook

If you haven’t already, install Jupyter Notebook using pip:

pip install notebook

2. Install Dependencies for Jupyter

PyCaret works best with certain libraries, so make sure they are installed:

pip install ipywidgets

3. Launch Jupyter Notebook

Start Jupyter Notebook by running:

jupyter notebook

4. Check Kernel Compatibility

Ensure the notebook kernel is linked to the Python environment where PyCaret is installed. If not, you can add the environment manually:

pip install ipykernel

python -m ipykernel install --user --name=pycaret_env

Now, you’re all set to start using PyCaret for your machine learning projects in Jupyter Notebook!

Basic Syntax and Functionality of PyCaret for Preprocessing

PyCaret is designed to make common preprocessing tasks quick and easy. Here’s an overview of how it works:

1. Loading a Dataset

Start by importing your dataset. You can use pandas to load your data into a DataFrame:

import pandas as pd

data = pd.read_csv("your_dataset.csv")

2. Setting Up PyCaret

The setup function is the heart of PyCaret. It initializes preprocessing tasks in one go. Here’s how you can set it up:

from pycaret.classification import setup

clf_setup = setup(data=data, target="target_column")

Replace "target_column" with the column you want your model to predict.

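3. What Happens in setup()

- Imputes missing values (by default, the mean for numeric columns and the most frequent value for categorical columns).

- Converts categorical variables to numeric.

- Scales numeric features, but only when you pass normalize=True.

- Splits data into training and testing sets.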

4. Preview the Changes

PyCaret will display a summary of the preprocessing steps it applied. Review these to understand how your data is being transformed.

Importing PyCaret Modules for Data Preparation and Cleaning

PyCaret has specialized modules for different tasks. For data preparation and cleaning, you’ll mostly work with the following:

1. Classification or Regression Module

- Import pycaret.classification for classification problems.

- Import pycaret.regression for regression problems.

Example:

from pycaret.classification import setup

2. Data Preparation Tools

Current PyCaret releases don’t expose a standalone preprocess function. If you want to use PyCaret just for data cleaning, run setup() and read the transformed data back with get_config():

from pycaret.datasets import get_data

from pycaret.classification import setup, get_config

3. Loading a Sample Dataset

PyCaret includes built-in datasets to practice:

data = get_data("credit")

With these steps, you can start preparing and cleaning your data for machine learning projects in just a few lines of code.


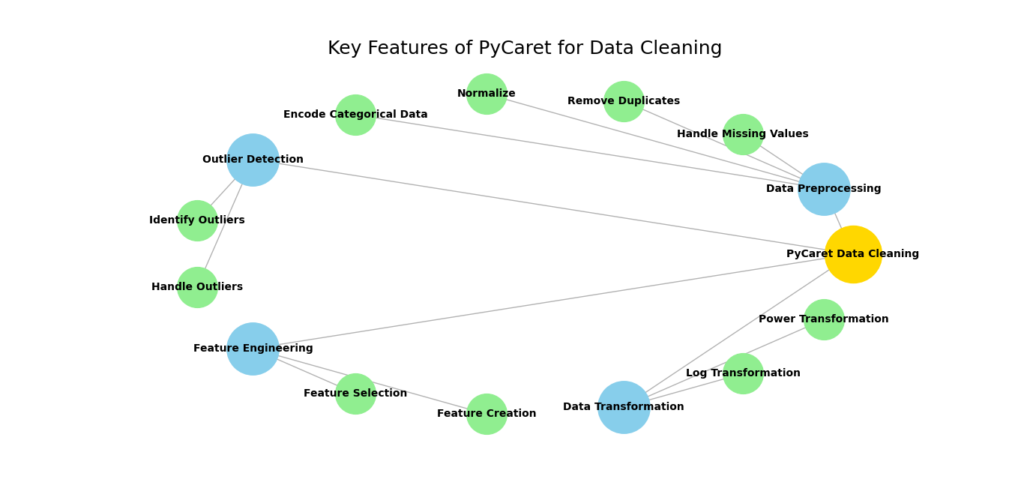

Key Features of PyCaret for Data Cleaning

Automatic Handling of Missing Data

When working with real-world datasets, missing data is a common issue. Whether caused by user input errors, data collection issues, or other reasons, missing values can disrupt machine learning workflows. Automating data cleaning, especially handling missing data, is one of PyCaret’s key strengths. It simplifies this tedious task, saving time and ensuring your data is ready for analysis.

How PyCaret Detects and Handles Missing Values

PyCaret makes identifying missing data hassle-free. When you call the setup() function, it infers each column’s type and adds imputation steps to the preprocessing pipeline. The summary it prints shows how much of the data has missing values and which imputation settings are in effect.

Steps in Missing Data Detection

1. Input Data Analysis

When you load your dataset into PyCaret, the first step is to initialize it using the setup() function. Here’s how it works:

from pycaret.classification import setup

from pycaret.datasets import get_data

# Load sample dataset

data = get_data("credit")

# Setup PyCaret

clf_setup = setup(data=data, target="default")

PyCaret infers which columns are numerical and which are categorical, and imputes each group with its own strategy.

2. Summary Report

After running setup(), PyCaret displays a summary showing:

- The share of rows that contain missing values.

- How missing values will be imputed (the imputation type and the numeric and categorical strategies).

Techniques Used by PyCaret for Missing Value Imputation

Imputation means filling in missing data to make the dataset usable for machine learning. PyCaret uses different methods depending on the type of data. Let’s look at the techniques:

| Data Type | Imputation Technique | Example |

|---|---|---|

| Numerical | Mean, Median, or Mode substitution | Missing age values replaced with mean age. |

| Categorical | Most frequent value substitution or a placeholder | Empty gender field replaced with “Male” (most common). |

| Advanced Methods | k-Nearest Neighbors (k-NN) or Iterative Imputation | Using patterns in other columns to predict missing values. |

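By default PyCaret uses simple imputation (the mean for numeric columns and the most frequent value for categorical ones). You can change this through the numeric_imputation, categorical_imputation, and imputation_type parameters.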
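For intuition, the two simplest rows of the table look like this when written by hand in pandas. The data is made up, and this sketch only illustrates the technique, not how PyCaret implements it:

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "age": [25.0, np.nan, 35.0, 40.0],
    "gender": ["Male", "Female", np.nan, "Male"],
})

# Numeric column: replace gaps with the column mean
df["age"] = df["age"].fillna(df["age"].mean())

# Categorical column: replace gaps with the most frequent value
df["gender"] = df["gender"].fillna(df["gender"].mode()[0])

print(df)
```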

Example: Missing Value Imputation

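Consider the credit dataset with a few missing values introduced into its AGE column. With default settings, PyCaret imputes these values using the mean:

from pycaret.datasets import get_data

from pycaret.classification import setup, get_config

# Load sample dataset

data = get_data('credit')

data.loc[10:15, 'AGE'] = None # Introduce missing values (the column is named AGE in this dataset)

# Setup PyCaret

clf_setup = setup(data=data, target='default')

# The original DataFrame is not modified, so inspect the transformed copy instead

# ('X_transformed' is the key name used by PyCaret 3.x)

X_clean = get_config('X_transformed')

print(X_clean['AGE'].isna().sum()) # No missing values should remain after imputation

PyCaret fills the gaps in the transformed AGE column with the mean or another technique you specify. The DataFrame you passed in keeps its missing values.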

Outlier Detection and Treatment

Outliers are unusual data points that are far away from the rest of the data. These can happen because of errors in data collection or unusual events, and they can confuse machine learning models. That’s why it’s important to handle them properly. PyCaret makes this easier by detecting and removing outliers for you during preprocessing when you enable the option.

How PyCaret Finds Outliers

PyCaret checks for outliers when you pass remove_outliers=True to the setup() function; the option is off by default. In PyCaret 3.x it fits an outlier detection model (Isolation Forest unless you choose another) and flags rows that don’t fit well with the rest of the data.

Steps PyCaret Follows:

- Scans for Outliers: When the option is enabled, PyCaret fits the detector on the training data.

- Removes Flagged Rows: Rows flagged as outliers are dropped from the training set. The test set is left as it is, so evaluation still reflects real-world data.

- Custom Options: You can adjust the way PyCaret detects outliers by changing the outliers_method and outliers_threshold settings during setup.

How PyCaret Fixes Outliers

Once outliers are flagged, there are two ways to deal with them:

- Removing Outliers: PyCaret can drop rows with outliers. This is helpful when the outliers are caused by mistakes or are irrelevant to your analysis.

- Adjusting Outliers: If removing data isn’t a good idea, you can cap or clip extreme values yourself (for example with pandas) before passing the data to setup(). PyCaret’s built-in option removes rows rather than adjusting them.

Methods PyCaret Uses to Handle Outliers

| Method (outliers_method in PyCaret 3.x) | What It Does | Example |

|---|---|---|

| iforest (default) | Isolation Forest: flags rows that are easy to isolate with random splits. | Removing a record with an age of 150. |

| ee | Elliptic Envelope: flags rows that lie far from a fitted Gaussian ellipse. | Removing records with extremely high incomes. |

| lof | Local Outlier Factor: flags rows that sit in unusually sparse neighborhoods. | Removing isolated, very high sales figures. |
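As a rough illustration of model-based outlier removal, here is a scikit-learn sketch using IsolationForest, which the PyCaret 3.x documentation lists as the default detector behind remove_outliers. The data is randomly generated, and this is a stand-alone example, not PyCaret’s own code:

```python
import numpy as np
from sklearn.ensemble import IsolationForest

rng = np.random.default_rng(42)
ages = rng.normal(loc=40, scale=10, size=(200, 1))
ages = np.vstack([ages, [[150.0]]])  # append one unrealistic age

# contamination is the share of rows to flag as outliers
detector = IsolationForest(contamination=0.05, random_state=0)
labels = detector.fit_predict(ages)  # -1 = outlier, 1 = inlier

kept = ages[labels == 1]
print(f"flagged {np.sum(labels == -1)} of {len(ages)} rows")
```

Note that a fixed contamination share always flags some rows, including legitimate but unusual ones, so it is worth looking at what was dropped.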

Example: Handling Outliers with PyCaret

Here’s how you can handle outliers with PyCaret step by step:

1. Load Your Data:

from pycaret.datasets import get_data

from pycaret.regression import setup

# Load dataset (insurance is a regression dataset; its target column is charges)

data = get_data('insurance')

2. Add Outliers for Testing:

Let’s add some fake outliers for demonstration:

data.loc[0, 'age'] = 150 # Add an unrealistic age

data.loc[1, 'bmi'] = 200 # Add an implausible BMI

3. Run PyCaret’s Setup:

reg_setup = setup(data=data, target='charges', remove_outliers=True)

The remove_outliers=True parameter tells PyCaret to drop rows flagged as outliers from the training data.

4. Check the Results:

After running the setup, the summary confirms that outlier removal is enabled. To see how many rows were dropped, you can compare the size of the training set before and after transformation using get_config().

Data Encoding with PyCaret

In machine learning, categorical variables can’t be used directly in most algorithms. These are values like “Male/Female” or “Red/Blue/Green” that represent categories, not numbers. To use them in your models, you need to convert them into a numeric format through data encoding. PyCaret makes this process simpler by automating encoding techniques, even for complex datasets with high-cardinality features.

Automating Categorical Variable Encoding with PyCaret

When you run the setup() function in PyCaret, it automatically detects categorical variables in your dataset and applies the right encoding method. You don’t have to write separate code for one-hot encoding or label encoding; PyCaret takes care of everything based on your data type and modeling requirements.

Steps PyCaret Follows:

- Identifies Categorical Variables: During setup, PyCaret automatically identifies columns with text or categorical values.

- Applies Suitable Encoding:

- Low-Cardinality Features: For columns with a small number of unique categories, PyCaret uses one-hot encoding, which creates binary columns for each category. In PyCaret 3.x the cutoff is set by the max_encoding_ohe parameter.

- High-Cardinality Features: For columns with many unique values (like ZIP codes), PyCaret switches to a more compact encoder to reduce complexity. In PyCaret 3.x this is a target-based encoder by default, and you can supply a different one through the encoding_method parameter.

- Displays Encoding Results: After setup, the summary shows how many categorical features were found and which encoding settings were used.
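For reference, this is what one-hot encoding produces when done by hand with pandas. It is a minimal sketch on made-up data, shown only to illustrate the output shape:

```python
import pandas as pd

df = pd.DataFrame({"color": ["Red", "Blue", "Green", "Blue"]})

# One binary column per category; dtype=int gives 0/1 instead of True/False
encoded = pd.get_dummies(df, columns=["color"], dtype=int)
print(encoded)
```

Three categories become three columns, which is why this approach gets expensive for columns with hundreds of unique values.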

Example: Automating Encoding

Here’s how you can see PyCaret’s automatic encoding in action:

from pycaret.datasets import get_data

from pycaret.regression import setup, get_config

# Load a dataset with categorical variables (sex, smoker, region)

data = get_data('insurance')

# Set up the environment with automatic encoding (insurance is a regression dataset)

reg_setup = setup(data=data, target='charges')

# Check how categorical features were handled

# ('X_train_transformed' is the key name used by PyCaret 3.x)

print(get_config('X_train_transformed').head())

You’ll notice that PyCaret has applied encoding to all categorical columns without any manual intervention.

Handling High-Cardinality Features Using PyCaret’s Encoding Techniques

High-cardinality features are those with too many unique values, like “Product ID” or “Customer Name.” These can make your dataset bulky and lead to overfitting. PyCaret handles them with more compact encodings than one-hot. Which technique is applied depends on your PyCaret version and on parameters such as encoding_method and ordinal_features.

Common Techniques:

| Encoding Method | What It Does | Example Use Case |

|---|---|---|

| Frequency Encoding | Replaces categories with their frequency counts. | Encoding ZIP codes in customer data. |

| Target Encoding | Maps categories to the average target value (for supervised learning). | Encoding product IDs in fraud detection. |

| Ordinal Encoding | Assigns ordered numeric values to categories (if order matters). | Encoding education levels. |

Why These Techniques Work:

- They reduce the number of features, which saves memory and speeds up training.

- They prevent the explosion of columns caused by one-hot encoding for high-cardinality variables.
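Frequency and target encoding are easy to picture with a few lines of pandas. This is a sketch on made-up data with invented column names, not PyCaret’s implementation:

```python
import pandas as pd

df = pd.DataFrame({
    "zip_code": ["10001", "10001", "94105", "60601", "94105", "10001"],
    "fraud": [1, 0, 0, 1, 0, 1],
})

# Frequency encoding: each category becomes its row count
df["zip_freq"] = df["zip_code"].map(df["zip_code"].value_counts())

# Target encoding: each category becomes the mean of the target within it
df["zip_target"] = df.groupby("zip_code")["fraud"].transform("mean")

print(df)
```

Either way, one text column becomes one numeric column. In a real project the target means should be learned from the training split only, otherwise the encoding leaks label information into the features.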

Example: Encoding High-Cardinality Features

Let’s see how PyCaret handles high-cardinality columns:

1. Load a Dataset and Add a High-Cardinality Column:

from pycaret.datasets import get_data

data = get_data('credit')

data['Customer_ID'] = [f'C{i:05d}' for i in range(len(data))] # Synthetic ID column, added for illustration

2. Run PyCaret Setup:

from pycaret.classification import setup

clf_setup = setup(data=data, target='default', ignore_features=['Customer_ID'])

The ignore_features parameter drops columns such as unique IDs that carry no predictive signal. PyCaret then encodes any remaining high-cardinality features using suitable techniques.

Feature Scaling and Normalization

In machine learning, feature scaling ensures that all numeric values in your dataset have a similar range. This is important for algorithms that rely on the relative magnitude of values, such as models trained with gradient descent or distance-based models like K-Nearest Neighbors. PyCaret simplifies this by scaling features for you during preprocessing once you set normalize=True, saving you time and effort.

Why Feature Scaling and Normalization Are Important

Without scaling, models can:

- Misinterpret features with large ranges as more important.

- Fail to converge properly during training.

- Produce suboptimal results, especially in distance-based algorithms.

By automating these tasks, PyCaret ensures that your machine learning pipeline is ready for accurate modeling.

PyCaret’s Approach to Automatic Feature Scaling

Scaling is off by default. When you pass normalize=True to the setup() function, PyCaret scales the numeric features with the method you choose.

Key Features of PyCaret’s Scaling Process:

- Automatic Application: PyCaret applies scaling to the numeric columns inside its preprocessing pipeline.

- Standardization by Default: With normalize=True, PyCaret uses standardization (z-score scaling) unless you choose another method.

- Option to Customize: You can enable or disable scaling by changing the normalize parameter.

Example: Automatic Scaling with PyCaret

from pycaret.datasets import get_data

from pycaret.regression import setup

# Load a dataset

data = get_data('boston')

# Initialize PyCaret with automatic scaling

reg_setup = setup(data=data, target='medv', normalize=True)

# Scaling is applied automatically; no manual steps required!

Choosing Between Standardization and Normalization

The choice between standardization and normalization depends on the type of data and the machine learning model being used. PyCaret allows you to switch between these methods with simple configuration.

What’s the Difference?

| Method | What It Does | When to Use |

|---|---|---|

| Standardization | Scales data to have a mean of 0 and a standard deviation of 1 (z-score). | A good default for linear models, SVMs, and other models that are sensitive to feature variance. |

| Normalization | Scales data to fit within a specific range, often [0, 1]. | Useful when features need a bounded range, for example for KNN or neural networks. |
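The two formulas are short enough to write out directly. A minimal NumPy sketch on made-up income values:

```python
import numpy as np

incomes = np.array([20_000.0, 35_000.0, 50_000.0, 95_000.0])

# Standardization (z-score): subtract the mean, divide by the standard deviation
standardized = (incomes - incomes.mean()) / incomes.std()

# Normalization (min-max): rescale so the smallest value is 0 and the largest is 1
normalized = (incomes - incomes.min()) / (incomes.max() - incomes.min())

print(standardized.round(3))
print(normalized.round(3))
```

Standardized values are centered on 0 and can be negative, while min-max values always stay between 0 and 1.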

How to Enable in PyCaret

- Standardization (z-score) is the default method once normalize=True is set.

- To use normalization, set the normalize_method='minmax' parameter in the setup() function:

reg_setup = setup(data=data, target='medv', normalize=True, normalize_method='minmax')

Example: Choosing the Right Scaling Method

1. Standardization for Regression:

When working with a regression problem:

reg_setup = setup(data=data, target='medv', normalize=True) # Default is z-score scaling

2. Normalization for KNN:

When working with a K-Nearest Neighbors classifier (shown here on the credit dataset, since the Boston housing data above has no class label):

from pycaret.datasets import get_data

from pycaret.classification import setup

credit = get_data('credit')

clf_setup = setup(data=credit, target='default', normalize=True, normalize_method='minmax')

Step-by-Step Guide: Automating Data Cleaning Tasks with PyCaret

1. Loading and Exploring Your Dataset

Before you clean data, it’s important to load it properly and understand its structure. PyCaret simplifies this process by providing built-in tools for loading and exploring datasets.

How to Load Raw Data into PyCaret for Preprocessing

- You can load a dataset using Python’s popular libraries like Pandas.

- Pass this data to PyCaret’s setup() function for preprocessing.

Example:

import pandas as pd

from pycaret.regression import setup

# Load raw data

data = pd.read_csv('raw_data.csv')

# Pass data to PyCaret

reg_setup = setup(data=data, target='Price')

Visualizing Data Insights Before Cleaning Using PyCaret’s EDA Tools

Exploratory Data Analysis (EDA) is an essential step. The summary that PyCaret prints during setup() gives you a quick overview of your dataset before cleaning:

- Data Type Summary: Shows how many features were detected as numeric or categorical.

- Missing Value Summary: Shows the share of rows with missing data.

- Preprocessing Settings: Lists the imputation, encoding, and outlier options that will be applied.

Key Steps in EDA with PyCaret:

- PyCaret automatically generates a summary during setup().

- Use the get_config() function to access specific details.

Example of a Per-Column Overview (illustrative values):

| Column | Data Type | Missing Values | Unique Values |

|---|---|---|---|

| Age | Numeric | 5% | 40 |

| Gender | Categorical | 0% | 2 |
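A per-column overview like the one above can be built with plain pandas before you call setup(). The helper name column_overview and the sample values are made up for this sketch:

```python
import numpy as np
import pandas as pd


def column_overview(df: pd.DataFrame) -> pd.DataFrame:
    """Summarize dtype, share of missing values, and unique count per column."""
    return pd.DataFrame({
        "Data Type": df.dtypes.astype(str),
        "Missing Values (%)": (df.isna().mean() * 100).round(1),
        "Unique Values": df.nunique(),
    })


sample = pd.DataFrame({
    "Age": [22, 35, np.nan, 41],
    "Gender": ["F", "M", "M", "F"],
})
overview = column_overview(sample)
print(overview)
```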

2. Applying PyCaret’s Preprocessing Pipeline

Once you’ve explored the dataset, the next step is to apply PyCaret’s preprocessing pipeline. This is where the magic of automating data cleaning happens.

How to Set Up a PyCaret Pipeline for Data Cleaning

PyCaret’s setup() function is the gateway to preprocessing. It handles:

- Missing data imputation.

- Encoding categorical variables.

- Scaling and normalization (when normalize=True).

- Outlier removal (when remove_outliers=True).

Example Setup:

# Setting up preprocessing pipeline

setup(data=data, target='Price', normalize=True, remove_outliers=True)

What Happens Automatically:

- Missing values are filled using mean, median, or mode.

- Categorical features are encoded.

- Numeric features are scaled.

- Outliers are detected and treated.

Executing PyCaret’s setup() Function for End-to-End Data Preprocessing

Running the setup() function not only applies transformations but also builds a preprocessing pipeline whose steps you can inspect afterwards (in PyCaret 3.x, for example, with get_config('pipeline')).

Step-by-Step Execution:

- Pass your dataset and target column to the function.

- Specify additional options like normalization or outlier removal.

- Let PyCaret handle the rest automatically.

3. Exporting the Cleaned Dataset

After preprocessing, you’ll want to save the cleaned dataset for further analysis or model training. PyCaret makes exporting data just as easy as cleaning it.

Saving Cleaned Data for Further Analysis

- Use the get_config() function to retrieve the cleaned dataset. Note that get_data() only loads PyCaret’s sample datasets; it does not return cleaned data.

- Save it to your preferred format using Pandas.

Example Code:

from pycaret.regression import get_config

# Retrieve cleaned data ('dataset_transformed' is the key name used by PyCaret 3.x)

cleaned_data = get_config('dataset_transformed')

# Save to CSV

cleaned_data.to_csv('cleaned_data.csv', index=False)

Exporting Transformed Data to CSV or Other Formats

The retrieved DataFrame has all transformations (scaling, encoding, etc.) applied, so you can export it like any other DataFrame.

Steps to Export Transformed Data:

- Retrieve the transformed dataset using get_config().

- Save it using Pandas, or export it to databases using supported libraries.
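Once you have the cleaned DataFrame, exporting is plain pandas. Here is a minimal sketch with a stand-in DataFrame (made-up values) that writes CSV text and a SQLite table. The table name cleaned_data is arbitrary, and both targets are in memory so the example leaves no files behind:

```python
import io
import sqlite3

import pandas as pd

# Stand-in for a cleaned DataFrame (made-up values)
cleaned_data = pd.DataFrame({"age": [0.5, -1.2, 0.7], "smoker_yes": [1, 0, 0]})

# CSV: written to an in-memory buffer here; pass a file path in real use
buffer = io.StringIO()
cleaned_data.to_csv(buffer, index=False)

# SQLite: pandas can write directly to a sqlite3 connection
conn = sqlite3.connect(":memory:")
cleaned_data.to_sql("cleaned_data", conn, index=False, if_exists="replace")
row_count = conn.execute("SELECT COUNT(*) FROM cleaned_data").fetchone()[0]
conn.close()

print(buffer.getvalue())
print(row_count)
```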

Advanced Automation Techniques in PyCaret

Tuning Data Cleaning Parameters in PyCaret

Automating data cleaning is convenient with PyCaret, but sometimes you need to customize certain steps to fit specific needs. PyCaret offers flexibility in data imputation, outlier handling, and feature scaling through its parameter settings. Here, we’ll explore how you can tweak these settings and even integrate PyCaret with other libraries for advanced workflows.

Customizing PyCaret’s Data Imputation Strategies

Handling missing values is one of the key aspects of data cleaning. While PyCaret automatically imputes missing values, it also allows customization to align with the nature of your dataset.

Common Imputation Strategies:

- Mean Imputation: For numeric data, replaces missing values with the column’s mean.

- Median Imputation: Suitable for skewed data, replacing missing values with the column’s median.

- Mode Imputation: Used for categorical features, replacing missing values with the most frequent value.

Customizing in PyCaret:

You can specify the imputation method in the setup() function using the numeric_imputation and categorical_imputation parameters.

Example Code:

from pycaret.classification import setup

# Custom imputation

clf_setup = setup(

data=my_data,

target='Outcome',

numeric_imputation='median',

categorical_imputation='Unknown' # In PyCaret 3.x, a string other than 'mode' or 'drop' is used as the fill value

)

Tips for Choosing the Right Imputation Strategy:

- Use mean or median for numeric columns based on data distribution.

- Apply constant imputation for categorical features when you want to assign a placeholder value like “Unknown.”

Advanced Parameter Settings for Outlier Removal and Feature Scaling

Outliers and feature scaling are critical for model performance. PyCaret provides parameters to configure these aspects during preprocessing.

Outlier Removal:

PyCaret detects and removes outliers with a model-based detector rather than fixed IQR or Z-score rules. You can enable this feature using remove_outliers=True in the setup() function and further adjust how many rows are removed.

Key Parameters for Outlier Handling:

- outliers_threshold: The share of training rows to treat as outliers (0.05 by default). Higher values remove more rows.

- remove_outliers: Enables or disables outlier removal.

Example Code:

# Custom outlier removal

clf_setup = setup(

data=my_data,

target='Outcome',

remove_outliers=True,

outliers_threshold=0.1 # Remove about 10% of training rows (the default is 0.05)

)

Feature Scaling:

PyCaret supports both standardization and normalization for feature scaling.

- Standardization: Scales data to have a mean of 0 and a standard deviation of 1.

- Normalization: Scales data to fall within a specific range, such as [0, 1].

Example Code:

# Enable normalization

clf_setup = setup(

data=my_data,

target='Outcome',

normalize=True,

normalize_method='zscore' # Alternative: 'minmax', 'maxabs'

)

Integrating PyCaret with Other Libraries for Preprocessing

While PyCaret is powerful on its own, it works well alongside libraries like Pandas and scikit-learn for customized workflows.

Combining PyCaret with Pandas for Advanced Data Manipulation

Pandas excels at data manipulation tasks such as grouping, merging, and filtering. You can preprocess your data in Pandas and then pass it to PyCaret for automation.

Example Workflow:

- Use Pandas to clean specific columns or apply advanced filters.

- Pass the manipulated DataFrame to PyCaret for further preprocessing.

Code Example:

import pandas as pd

from pycaret.classification import setup

# Advanced filtering with Pandas

data = pd.read_csv('raw_data.csv')

data = data[data['Age'] >= 18] # Remove rows where Age is less than 18

# Pass to PyCaret

clf_setup = setup(data=data, target='Outcome')

How to Integrate PyCaret with scikit-learn for Customized Preprocessing Workflows

If you prefer using custom transformers or models from scikit-learn, you can combine them with PyCaret’s automated pipeline.

Steps to Combine PyCaret and scikit-learn:

- Preprocess data in PyCaret to handle missing values and scaling.

- Retrieve the transformed features using get_config().

- Apply scikit-learn transformers or models to the cleaned data.

Code Example:

from pycaret.classification import setup, get_config

from sklearn.preprocessing import PolynomialFeatures

# Preprocess data with PyCaret

clf_setup = setup(data=my_data, target='Outcome')

# Transformed training features, without the target

# ('X_train_transformed' is the key name used by PyCaret 3.x)

X_clean = get_config('X_train_transformed')

# Apply scikit-learn transformer

poly = PolynomialFeatures(degree=2)

transformed_data = poly.fit_transform(X_clean)

Key Takeaways: Tuning Parameters and Integrations in PyCaret

| Aspect | Key Features in PyCaret | Customization Options |

|---|---|---|

| Data Imputation | Mean, Median, Mode | numeric_imputation, categorical_imputation |

| Outlier Handling | Model-based detection (Isolation Forest by default in PyCaret 3.x) | remove_outliers, outliers_method, outliers_threshold |

| Feature Scaling | Standardization, Normalization | normalize, normalize_method |

| Library Integration | Works with Pandas and scikit-learn | Export data, apply advanced workflows |

Benefits of Using PyCaret for Data Cleaning

Time Savings Through Automated Preprocessing

One of the standout advantages of PyCaret is its ability to automate repetitive preprocessing tasks, drastically reducing the time spent on manual efforts.

Common Time-Consuming Tasks Automated by PyCaret:

- Handling Missing Values: Automatically detects and imputes missing values.

- Outlier Removal: Identifies and handles outliers without manual inspection.

- Feature Scaling and Encoding: Applies standardization, normalization, or encoding techniques with minimal setup.

How It Works:

Instead of writing separate code for each preprocessing step, you can use PyCaret’s setup() function. This single command automates an entire pipeline of data cleaning tasks.

Example Code:

from pycaret.classification import setup

clf_setup = setup(

data=my_data,

target='Target',

remove_outliers=True,

normalize=True

)

With PyCaret, a process that could take hours can be completed in minutes, letting you quickly move to modeling and analysis.

Reducing Human Error in Data Cleaning Workflows

Manual data cleaning often introduces errors, especially in large datasets. Mislabeling columns, overlooking outliers, or forgetting to scale features can lead to flawed models. PyCaret minimizes these risks by standardizing the cleaning process.

How PyCaret Reduces Human Error:

- Consistency: Ensures that every preprocessing step follows the same logic across datasets.

- Automation: Eliminates the need for manual code for repetitive tasks, reducing mistakes.

- Validation Checks: Built-in checks warn about potential data issues during the setup phase.

Example:

When you enable outlier removal or missing value imputation, PyCaret automatically applies the same logic to all applicable columns, ensuring no inconsistency creeps in.

How PyCaret Improves Data Quality and Model Performance

Clean data is the foundation of any good machine learning model. PyCaret ensures high-quality data, which directly translates to better model performance.

Key Features Enhancing Data Quality:

- Accurate Handling of Missing Values: Imputation strategies prevent data loss.

- Outlier Management: Reduces noise in the data, making patterns more detectable.

- Feature Scaling and Encoding: Ensures that all variables contribute meaningfully to the model.

Impact on Model Performance:

- Models trained on cleaned datasets are less prone to overfitting or underfitting.

- Scaling and encoding prevent issues with algorithms that are sensitive to feature magnitudes or categorical data.

Why Choose PyCaret for Automating Data Cleaning?

| Benefit | How PyCaret Helps |

|---|---|

| Time Savings | Automates preprocessing steps, reducing setup time. |

| Error Reduction | Standardizes workflows and eliminates manual errors. |

| Improved Data Quality | Ensures consistent, high-quality data preparation. |

| Better Model Performance | Enhances the accuracy and reliability of machine learning models. |

Challenges and Limitations of PyCaret in Data Cleaning

Scenarios Where PyCaret’s Automation May Fall Short

PyCaret’s automation is powerful, but certain situations require more nuanced approaches that its default settings may not address effectively.

Complex Domain-Specific Data Cleaning

- PyCaret applies general cleaning techniques. However, domain-specific preprocessing (e.g., handling scientific notations in research data or special date formats in financial datasets) often requires custom handling.

- Example: Cleaning text-heavy data or specialized fields like genomics or IoT sensor logs might not align with PyCaret’s automated workflows.
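As an illustration of the kind of custom handling meant here, the sketch below uses pandas to parse values stored as scientific-notation text and dates stored in a fixed `DDMMYYYY` layout. The column names and sample values are hypothetical.

```python
import pandas as pd

# Hypothetical raw data: measurements stored as text in scientific notation
# and trade dates stored as DDMMYYYY strings.
raw = pd.DataFrame({
    "measurement": ["1.5e-3", "2.0E+2", "n/a"],
    "trade_date": ["31012024", "01022024", "15032024"],
})

clean = raw.copy()
# Convert scientific-notation strings to floats; unparseable entries become NaN.
clean["measurement"] = pd.to_numeric(clean["measurement"], errors="coerce")
# Parse the custom date layout explicitly rather than relying on inference.
clean["trade_date"] = pd.to_datetime(clean["trade_date"], format="%d%m%Y")
```

After this step the columns have numeric and datetime types, so PyCaret can treat them like any other tabular input.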

Handling Unstructured Data

- PyCaret focuses on tabular data and lacks built-in capabilities for unstructured data types like text, images, or audio.

- Workaround: Preprocess unstructured data using other libraries (like NLTK or OpenCV) before feeding it into PyCaret.
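The workaround can be sketched with plain pandas string methods instead of NLTK, which keeps the example dependency-light. It turns a free-text column into a couple of numeric features; the dataset and feature names are hypothetical, and a real project would likely use richer text features.

```python
import pandas as pd

# Hypothetical dataset with one free-text column and a label.
reviews = pd.DataFrame({
    "text": ["Great product, works well", "Broken on arrival", ""],
    "label": [1, 0, 0],
})

# Derive simple numeric features from the text with pandas string methods.
features = pd.DataFrame({
    "char_count": reviews["text"].str.len(),
    "word_count": reviews["text"].str.split().str.len(),
    "label": reviews["label"],
})
# `features` is now purely tabular and could be passed to PyCaret's setup().
```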

Limited Flexibility with Rare Scenarios

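- Extreme Outliers: PyCaret’s outlier removal flags a share of rows based on a general-purpose threshold, so it can miss edge cases that only a domain-specific rule would catch, or drop extreme values that are actually legitimate.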

- Highly Imbalanced Data: PyCaret can rebalance classes through the fix_imbalance option in setup, which uses SMOTE by default, but variants such as SMOTE-NC for datasets with categorical variables come from external libraries like imbalanced-learn.
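A rough sketch of the imbalance case: first measure how rare the minority class is, then, if needed, oversample with SMOTE-NC from the external imbalanced-learn library. The helper names `minority_share` and `oversample_mixed` are hypothetical, and the second helper assumes imbalanced-learn is installed.

```python
import pandas as pd


def minority_share(y):
    """Return the fraction of rows that belong to the rarest class."""
    return pd.Series(y).value_counts(normalize=True).min()


def oversample_mixed(X, y, categorical_columns):
    """Hypothetical helper: oversample with SMOTE-NC from imbalanced-learn.

    `categorical_columns` lists the positions of the categorical columns in X.
    """
    from imblearn.over_sampling import SMOTENC  # requires imbalanced-learn

    sampler = SMOTENC(categorical_features=categorical_columns, random_state=42)
    return sampler.fit_resample(X, y)


labels = [0] * 95 + [1] * 5
print(minority_share(labels))
```

Oversampling should only be applied to the training portion of the data, so that evaluation still reflects the real class balance.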

Over-Automation Risks

- Automation can mask underlying issues in your data. For example, PyCaret imputes missing values automatically, but the strategy it applies (such as mean imputation for numeric columns) might not fit the context of your dataset, for instance when a column is heavily skewed.
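To see why the choice of strategy matters, here is a small pandas sketch comparing mean and median imputation on a skewed column. The income values are made up for illustration.

```python
import pandas as pd

# Hypothetical income column with one missing value and one extreme earner.
income = pd.Series([30_000, 32_000, 35_000, 1_000_000, None], name="income")

mean_fill = income.fillna(income.mean())
median_fill = income.fillna(income.median())

print(mean_fill.iloc[-1])    # pulled upward by the extreme value
print(median_fill.iloc[-1])  # stays close to typical incomes
```

Here the mean fills the gap with 274250.0 while the median fills it with 33500.0, so it is worth checking a column’s distribution before accepting an automated choice.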

Custom Cleaning Techniques PyCaret Doesn’t Handle Natively

Sometimes, you’ll need to go beyond PyCaret’s built-in capabilities. These situations often call for custom preprocessing with libraries like Pandas or NumPy.

Advanced Feature Engineering

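- PyCaret’s setup includes generic options such as polynomial features and power transformations, but it doesn’t create domain-specific features for you, and targeted steps like applying a log transform to selected columns still need to be done manually.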

Specialized Data Transformations

- Example: If your data contains log-transformed or exponential variables, you’ll need to manually revert transformations or engineer new features before using PyCaret.
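For instance, reverting a log transformation before handing data to PyCaret might look like the sketch below. It assumes the column was stored as `log(1 + x)`; the column names are hypothetical.

```python
import numpy as np
import pandas as pd

# Hypothetical column that was stored as log(1 + price) upstream.
df = pd.DataFrame({"log_price": np.log1p([0.0, 9.0, 99.0])})

# Revert the transformation so the column is back on its original scale.
df["price"] = np.expm1(df["log_price"])
```

If the upstream step used a plain natural log instead, `np.exp` would be the matching inverse.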

Data Cleaning for Highly Custom Rules

- Removing entries based on conditional logic isn’t natively supported. For example, deleting rows where two columns have specific combined values must be handled manually.

- Code Example Using Pandas:

# Custom rule-based cleaning in Pandas

import pandas as pd

df = pd.read_csv('data.csv')

# Keep only rows where Age is over 18 and Income is over 30000; all other rows are dropped

df_cleaned = df[(df['Age'] > 18) & (df['Income'] > 30000)]

Integration of Multiple Datasets

- PyCaret doesn’t natively handle merging datasets or resolving conflicts in multi-source data cleaning workflows.
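Merging is usually done in pandas before PyCaret sees the data. The sketch below combines two hypothetical sources and resolves conflicting values with a simple "prefer the first source" rule, which is an assumption made for illustration rather than a general recommendation.

```python
import pandas as pd

# Hypothetical sources that describe the same customers.
crm = pd.DataFrame({"customer_id": [1, 2, 3], "email": ["a@x.com", None, "c@x.com"]})
billing = pd.DataFrame({"customer_id": [2, 3, 4], "email": ["b@y.com", "c@y.com", "d@y.com"]})

# An outer merge keeps customers found in either source;
# `indicator=True` adds a `_merge` column recording where each row came from.
merged = crm.merge(billing, on="customer_id", how="outer",
                   suffixes=("_crm", "_billing"), indicator=True)

# Conflict rule (an assumption for this sketch): prefer the CRM value, fall back to billing.
merged["email"] = merged["email_crm"].combine_first(merged["email_billing"])
merged = merged.drop(columns=["email_crm", "email_billing"]).sort_values("customer_id")
```

The `_merge` column is useful for auditing which rows exist in only one source before the combined table is passed on.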

Overcoming Limitations: Combining PyCaret with Other Tools

To address these gaps, you can integrate PyCaret with other libraries.

Using Pandas for Preprocessing

- Clean data manually before passing it to PyCaret for automation.

import pandas as pd

from pycaret.classification import setup

# Custom preprocessing

df = pd.read_csv('data.csv')

df['NewFeature'] = df['Feature1'] / df['Feature2']

# Load into PyCaret

clf_setup = setup(data=df, target='Target')

Leveraging Scikit-Learn for Custom Pipelines

- Combine PyCaret with Scikit-Learn pipelines for advanced transformations.

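import pandas as pd

from sklearn.preprocessing import PolynomialFeatures

from pycaret.regression import setup

df = pd.read_csv('data.csv')

# Generate polynomial features and keep them in a DataFrame with readable column names

poly = PolynomialFeatures(degree=2, include_bias=False)

poly_array = poly.fit_transform(df[['Feature1', 'Feature2']])

poly_data = pd.DataFrame(poly_array, columns=poly.get_feature_names_out(), index=df.index)

# Add the target column back so PyCaret can find it

poly_data['Target'] = df['Target']

# Use in PyCaret

reg_setup = setup(data=poly_data, target='Target')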

Conclusion: Simplify Data Cleaning with PyCaret

Data cleaning is a crucial step in building reliable machine learning models, and PyCaret has emerged as a tool that makes this process faster and easier. Whether you’re handling missing values, detecting outliers, or encoding categorical variables, PyCaret simplifies these tasks with its powerful automation capabilities.

Let’s revisit some key insights and explore why automating data cleaning with PyCaret is a game-changer for data scientists today.

Key Takeaways on Automating Data Cleaning Tasks

- Time-Saving Automation: PyCaret handles tedious preprocessing tasks, allowing you to focus on model building and analysis.

- Improved Data Quality: The tool ensures your datasets are cleaned efficiently, which directly impacts the performance of machine learning models.

- Accessible for Beginners: Its low-code interface makes it beginner-friendly while still providing advanced customization for seasoned professionals.

- Comprehensive Preprocessing: From missing data imputation to outlier removal and feature scaling, PyCaret covers a broad range of cleaning tasks.

Future Trends in Automated Data Preprocessing

Automation in data cleaning is continuously evolving. Here are some trends that could shape the future of tools like PyCaret:

- AI-Driven Cleaning Recommendations: Future tools may use machine learning models to suggest optimal cleaning strategies based on dataset characteristics.

- Integration with Real-Time Pipelines: Enhanced compatibility with streaming data will allow preprocessing on the fly, useful for IoT and live analytics.

- Domain-Specific Modules: Expanding support for industry-specific datasets like genomics, healthcare, or finance could broaden PyCaret’s usability.

Why PyCaret is a Game-Changer for Data Scientists in 2024

PyCaret stands out as a must-have tool for modern data scientists because:

- Low-Code Advantage: It significantly lowers the barrier to entry for data cleaning, empowering beginners to handle complex datasets with minimal effort.

- All-in-One Solution: It consolidates multiple preprocessing tasks into one unified framework, reducing reliance on multiple tools or manual coding.

- Consistent Updates: With ongoing improvements, PyCaret stays relevant to the latest trends and challenges in data science.

Automating data cleaning is no longer a luxury; it’s a necessity in today’s data-driven world. PyCaret offers a blend of simplicity and functionality that ensures your preprocessing workflows are efficient and accurate. As data science continues to grow, tools like PyCaret will become even more indispensable, helping professionals and beginners alike to unlock the full potential of their data.

FAQs about Automating Data Cleaning with PyCaret

Can PyCaret handle large datasets efficiently?

PyCaret works well with datasets that fit comfortably in memory, but performance depends on system resources and dataset size. For extremely large data, consider sampling or distributed processing.
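One way to sample without distorting the class balance is a stratified sample in pandas, sketched below on a hypothetical dataset. The 10% fraction is an arbitrary choice for the example.

```python
import pandas as pd

# Hypothetical large dataset with an imbalanced target column.
df = pd.DataFrame({
    "feature": range(1000),
    "target": [0] * 900 + [1] * 100,
})

# Sample 10% of the rows within each class so the class ratio is preserved.
sample = df.groupby("target", group_keys=False).sample(frac=0.1, random_state=42)
```

The smaller `sample` frame can be used to try out preprocessing settings quickly before running them on the full data.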

What preprocessing tasks can PyCaret automate?

PyCaret automates tasks like missing value imputation, outlier removal, feature scaling (normalization), and encoding of categorical variables, which covers most routine preprocessing for tabular data.

Is PyCaret suitable for beginners in data science?

Absolutely! PyCaret’s low-code approach and user-friendly documentation make it an excellent choice for beginners while offering advanced options for experienced users.

External Resources

Official PyCaret Documentation

- Link: PyCaret Docs

- Description: Comprehensive guides on all PyCaret features, including preprocessing, imputation, and pipeline setups.

PyCaret GitHub Repository

- Link: PyCaret on GitHub

- Description: Explore the source code, examples, and detailed updates about the library.

Kaggle Notebooks Using PyCaret

- Link: PyCaret on Kaggle

- Description: Real-world examples of PyCaret for data cleaning and machine learning pipelines.
