Top Data Science Skills You Must Master in 2025

Introduction: Why Data Science Skills Are Crucial in 2025 and Beyond

Data is everywhere. From the apps we use to the decisions shaping businesses, data drives the world. But raw data isn’t enough. It needs someone to analyze it, find patterns, and turn it into valuable insights. That’s where data science skills come in.

As we move into 2025 and beyond, these skills are more important than ever. Businesses, hospitals, and governments rely on data science to solve problems and plan for the future. It helps improve customer experiences, streamline processes, and even fight diseases.

In today’s fast-paced world, data science isn’t just for tech experts. It’s becoming important for everyone who wants to stay ahead. Let’s look at why these skills matter so much and how they’re shaping the future.

Technical Skills Every Data Scientist Needs in 2025

In the ever-evolving world of data science, programming skills are the backbone of success. As we approach 2025, mastering Python and R continues to be important for data scientists. These languages have shaped how we analyze data, create visualizations, and build predictive models. While new languages are emerging, Python and R remain the foundation of most data science workflows. Let’s explore why these languages are irreplaceable, what new languages are gaining attention, and how you can stay ahead with the right data science skills.

Why Python and R Are Indispensable for Data Scientists

1. Python: A Multipurpose Powerhouse

Python’s simplicity and flexibility make it a go-to language for data scientists. It is widely used for data cleaning, analysis, and even machine learning. Python’s extensive libraries provide tools for every stage of the data science pipeline.

Key Python Libraries for Data Science Skills:

- Pandas: For data manipulation and analysis.

- NumPy: For numerical computations.

- Matplotlib and Seaborn: For creating stunning visualizations.

- Scikit-learn: For machine learning.

- TensorFlow and PyTorch: For deep learning.

Here’s a quick example of how Python simplifies tasks like creating a linear regression model:

```python
import pandas as pd
from sklearn.linear_model import LinearRegression

# Sample data
data = {'Hours_Studied': [1, 2, 3, 4], 'Scores': [40, 50, 60, 70]}
df = pd.DataFrame(data)

# Model training
model = LinearRegression()
model.fit(df[['Hours_Studied']], df['Scores'])

# Prediction: pass a DataFrame with the same column name used during training
print(model.predict(pd.DataFrame({'Hours_Studied': [5]})))  # Predict score for 5 hours of study
```

This short snippet shows how little code Python needs to go from raw data to a trained model and a prediction.

2. R: The Statistical Expert

While Python excels in general tasks, R shines when it comes to statistical analysis and visualization. It was specifically designed for data analysis, making it a favorite among statisticians and researchers.

Key R Libraries for Data Science Skills:

- ggplot2: For advanced visualizations.

- dplyr: For data manipulation.

- caret: For machine learning models.

- Shiny: For building interactive dashboards.

Example: Visualizing data trends with ggplot2:

```r
library(ggplot2)

# Sample data
data <- data.frame(Hours_Studied = c(1, 2, 3, 4), Scores = c(40, 50, 60, 70))

# Plot the points with a fitted linear trend line
ggplot(data, aes(x = Hours_Studied, y = Scores)) +
  geom_point() +
  geom_smooth(method = "lm") +
  labs(title = "Study Hours vs Scores", x = "Hours Studied", y = "Scores")
```

The scatter plot with a fitted regression line makes the linear trend in the data easy to spot.

New Programming Languages Gaining Traction in Data Science

While Python and R dominate the field, new languages are gaining popularity for niche applications:

| Language | Use Case | Why It’s Trending |
|---|---|---|
| Julia | High-performance computing, numerical analysis | Compiles to fast native code, so numerical loops often run much faster than in pure Python |
| Scala | Big data (used with Apache Spark) | Spark’s native language; efficient for large-scale data processing |
| Go | Building scalable applications | Combines speed and simplicity |

These languages are not replacing Python or R but complementing them in specific scenarios. For example, Julia is ideal for computations in finance or engineering where speed matters.

Mastering Machine Learning Algorithms for Data Science Skills: Top Techniques to Know for 2025

Machine learning algorithms are at the heart of modern data science skills. To stay relevant in 2025, understanding the most effective techniques is important for professionals and students alike. Among these, reinforcement learning and transfer learning are gaining significant attention due to their transformative potential in solving complex problems. This section will explore critical algorithms, why they matter, and how you can apply them in real-world scenarios.

Top Machine Learning Techniques for 2025

Machine learning has numerous approaches. Here are the top ones you need to focus on as part of your data science skills:

1. Supervised Learning

This technique involves training a model on labeled data. It’s widely used in tasks like predicting stock prices, classifying emails as spam, or diagnosing diseases.

- Examples:
  - Regression: Predicting house prices.
  - Classification: Identifying whether an email is spam or not.

Python Example for Classification:

```python
from sklearn.model_selection import train_test_split
from sklearn.ensemble import RandomForestClassifier

# Sample data
X = [[1, 2], [2, 3], [3, 4], [4, 5]]
y = [0, 0, 1, 1]

# Train-test split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

# Model training
model = RandomForestClassifier()
model.fit(X_train, y_train)

# Prediction
print(model.predict(X_test))
```

2. Unsupervised Learning

This technique is used to find patterns in data without labels. It’s commonly applied in clustering and dimensionality reduction.

- Examples:
  - Clustering: Grouping customers based on buying behavior.
  - Dimensionality Reduction: Visualizing high-dimensional data.
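To make these two unsupervised tasks concrete, here is a minimal scikit-learn sketch on made-up customer data: `KMeans` groups customers into segments without using any labels, and `PCA` projects the features down to two dimensions for plotting. The three features, the two customer profiles, and the choice of two clusters are illustrative assumptions, not real data.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

# Hypothetical customer features: [orders per month, average basket value, days since last purchase]
rng = np.random.default_rng(0)
frequent = rng.normal([8, 40, 5], [1, 5, 2], size=(50, 3))
occasional = rng.normal([2, 90, 40], [1, 10, 8], size=(50, 3))
X = np.vstack([frequent, occasional])

# Put features on a common scale so no single feature dominates the distances
X_scaled = StandardScaler().fit_transform(X)

# Clustering: group customers without using any labels
kmeans = KMeans(n_clusters=2, n_init=10, random_state=0)
segments = kmeans.fit_predict(X_scaled)

# Dimensionality reduction: project 3 features onto 2 principal components for plotting
X_2d = PCA(n_components=2).fit_transform(X_scaled)

print(np.bincount(segments))  # number of customers in each segment
print(X_2d.shape)             # one 2-D point per customer
```

In practice you rarely know the number of clusters in advance; comparing a few values of `n_clusters` with a metric such as the silhouette score is one common way to choose it.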

3. Reinforcement Learning

Reinforcement learning (RL) involves training an agent to make decisions by rewarding positive outcomes. It’s used in robotics, self-driving cars, and gaming AI.

Key Components of RL:

- Agent: The decision-maker.

- Environment: Where the agent interacts.

- Reward: Feedback for actions taken.

How It Works:

- The agent takes an action based on the environment.

- A reward (or penalty) is given based on the result of the action.

- The agent learns to maximize cumulative rewards.

Example: Training a robot to navigate a maze.
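The agent, environment, and reward loop described above can be sketched with tabular Q-learning, one classic RL algorithm. This is a simplified stand-in for a maze: a hypothetical one-dimensional corridor of six cells with the exit at the right end. The reward values, learning rate (`alpha`), discount factor (`gamma`), and exploration rate (`epsilon`) are assumptions chosen for illustration.

```python
import random

# Hypothetical one-dimensional "maze": cells 0..5, start at 0, exit at 5
N_STATES, GOAL = 6, 5
ACTIONS = (-1, +1)  # move left or move right

def step(state, action):
    """Environment: apply a move and return (next_state, reward, done)."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    if nxt == GOAL:
        return nxt, 1.0, True   # reward for reaching the exit
    return nxt, -0.01, False    # small penalty for every other step

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # Q-table: q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Agent: explore with probability epsilon, otherwise pick the best-known action
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = max(range(2), key=lambda i: q[state][i])
            nxt, reward, done = step(state, ACTIONS[a])
            # Update toward reward + discounted value of the best next action
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
    return q

q = train()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(GOAL)]
print(policy)
```

After training, the learned policy should point right in every cell, because the small per-step penalty teaches the agent that the shortest route to the exit maximizes cumulative reward.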

4. Transfer Learning

Transfer learning uses knowledge from one task to improve performance on a different but related task. It’s particularly useful when you have limited data for the new task.

Example:

A pre-trained image recognition model such as ResNet can be fine-tuned to classify a new kind of image, such as medical scans.

Python Code Example for Transfer Learning:

```python
from tensorflow.keras.applications import ResNet50
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Dense, Flatten

# Load pre-trained ResNet50 model without its ImageNet classification head
base_model = ResNet50(weights='imagenet', include_top=False, input_shape=(224, 224, 3))

# Add custom layers for a new 10-class problem
x = Flatten()(base_model.output)
output = Dense(10, activation='softmax')(x)
model = Model(inputs=base_model.input, outputs=output)

# Freeze base layers so only the new head is trained
for layer in base_model.layers:
    layer.trainable = False

# Compile; training would follow with model.fit() on your own labeled images
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
```

This code uses a pre-trained model to adapt to a new classification problem.

Comparison of Key Techniques

Here’s a comparison of these machine learning techniques:

| Technique | Best For | Example Use Cases |
|---|---|---|
| Supervised Learning | Labeled data | Predicting stock prices, spam filtering |
| Unsupervised Learning | Unlabeled data | Customer segmentation, anomaly detection |
| Reinforcement Learning | Decision-making problems | Robotics, gaming, self-driving cars |
| Transfer Learning | Limited data | Medical imaging, speech recognition |

Why Reinforcement Learning and Transfer Learning Are Crucial

Reinforcement Learning:

- RL is well suited to problems that require sequential, dynamic decision-making.

- For example, RL is being explored for autonomous driving, where agents can learn driving policies in simulation before being tested in the real world.

Transfer Learning:

- It saves time and resources by reusing pre-trained models.

- This approach is invaluable in fields like healthcare, where labeled data is scarce.

How to Master These Techniques

To master these techniques as part of your data science skills:

- Practice Real-World Problems: Build projects like a chatbot or a recommendation system.

- Take Specialized Courses: Focus on reinforcement learning and transfer learning courses.

- Stay Updated: Follow research papers and attend conferences.

Deep Learning and Neural Networks: Redefining Artificial Intelligence in 2025

Deep learning and neural networks are at the forefront of artificial intelligence advancements. These technologies have not only improved traditional machine learning but have also introduced groundbreaking capabilities. In 2025, transformers are expected to dominate the landscape, shaping everything from natural language processing to computer vision. To strengthen your data science skills, understanding these concepts and their applications is essential.

What is Deep Learning?

Deep learning is a subset of machine learning. It uses artificial neural networks to process and analyze complex data. Unlike traditional models, deep learning can automatically extract features from raw data, which makes it ideal for tasks like image recognition, natural language understanding, and autonomous driving.


How Neural Networks Work

Neural networks are the backbone of deep learning. These networks consist of layers of interconnected nodes, or “neurons,” inspired by the human brain. Each neuron processes information and passes it to the next layer.

Here’s a simple explanation of a feedforward neural network:

- Input Layer: Receives the data.

- Hidden Layers: Process the data through weights and biases.

- Output Layer: Produces the prediction or classification.

Example: Simple Neural Network for Digit Recognition

```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Flatten, Input
from tensorflow.keras.datasets import mnist

# Load MNIST dataset
(X_train, y_train), (X_test, y_test) = mnist.load_data()
X_train, X_test = X_train / 255.0, X_test / 255.0  # Normalize pixel values to [0, 1]

# Build the neural network
model = Sequential([
    Input(shape=(28, 28)),
    Flatten(),
    Dense(128, activation='relu'),
    Dense(10, activation='softmax')
])

# Compile and train the model, then check accuracy on unseen test images
model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])
model.fit(X_train, y_train, epochs=5)
model.evaluate(X_test, y_test)
```

This example demonstrates how to create a simple network to classify handwritten digits using the MNIST dataset.

How Deep Learning is Redefining Artificial Intelligence

Deep learning is transforming tasks that were once thought to be beyond the reach of machines:

- Natural Language Processing (NLP): Chatbots, translators, and content generation tools rely on deep learning to understand and respond like humans.

- Healthcare: Algorithms assist in diagnosing diseases by analyzing medical images.

- Autonomous Vehicles: Self-driving cars depend on neural networks to identify objects and make decisions in real-time.

- Creative Applications: Deep learning powers tools for creating realistic images, music, and even writing stories.

Understanding Transformers

Transformers are a deep learning architecture introduced in 2017 in the paper “Attention Is All You Need.” They have become a game-changer in NLP and beyond.

Key Components of Transformers:

- Self-Attention Mechanism: Allows the model to focus on relevant parts of the input sequence.

- Positional Encoding: Adds information about the order of the sequence.

- Feedforward Neural Network: Processes data after applying attention.
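The three components above can be sketched in NumPy for a single attention head. This is a teaching sketch under simplifying assumptions: the weights are random rather than trained, and real transformers add multiple heads, residual connections, and layer normalization, all omitted here.

```python
import numpy as np

def positional_encoding(seq_len, d_model):
    """Sinusoidal positional encoding: sin on even dimensions, cos on odd ones."""
    pos = np.arange(seq_len)[:, None]
    i = np.arange(d_model)[None, :]
    angles = pos / np.power(10000, (2 * (i // 2)) / d_model)
    return np.where(i % 2 == 0, np.sin(angles), np.cos(angles))

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))  # subtract the max for numerical stability
    return e / e.sum(axis=-1, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # relevance of every position to every other
    weights = softmax(scores)                 # each row sums to 1
    return weights @ v, weights

def feed_forward(x, w1, b1, w2, b2):
    """Position-wise feedforward network with ReLU, applied to each position independently."""
    return np.maximum(0, x @ w1 + b1) @ w2 + b2

# Toy input: 4 tokens with 8-dimensional embeddings and random (untrained) weights
rng = np.random.default_rng(0)
seq_len, d_model, d_ff = 4, 8, 16
x = rng.normal(size=(seq_len, d_model)) + positional_encoding(seq_len, d_model)
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))
attended, weights = self_attention(x, w_q, w_k, w_v)
out = feed_forward(attended, rng.normal(size=(d_model, d_ff)), np.zeros(d_ff),
                   rng.normal(size=(d_ff, d_model)), np.zeros(d_model))

print(weights.round(2))  # attention weights: row i shows where token i "looks"
print(out.shape)
```

Because every token attends to every other token in one matrix multiplication, the whole sequence is processed in parallel rather than step by step.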

Applications of Transformers in 2025

Transformers are widely used in areas that require context understanding:

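- Language Models: OpenAI’s ChatGPT generates text, while Google’s BERT improved text comprehension tasks such as classification and question answering.

- Image Processing: Vision Transformers (ViT) are used for image classification, and transformer-based models are also applied to object detection and segmentation.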

- Code Understanding: Tools like Codex help in code generation and debugging.


Comparing Traditional Neural Networks and Transformers

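| Aspect | Traditional Neural Networks (MLPs, CNNs, RNNs) | Transformers |
|---|---|---|
| Architecture | Stacked dense, convolutional, or recurrent layers | Stacked self-attention and feedforward layers |
| Data Processing | MLPs and CNNs typically expect fixed-size input; RNNs process sequences one step at a time | Process variable-length sequences in parallel |
| Applications | Image recognition, tabular data, sequence modeling | NLP, vision, and multimodal tasks |
| Performance | RNNs struggle with long-range dependencies | Excellent at capturing long-range dependencies |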

Why Deep Learning Is Important for Your Data Science Skills

- Broad Applications: From text to images, deep learning is used in many areas.

- High Demand: Industries need professionals who can work with state-of-the-art models like transformers.

- Accessible Tools: Libraries like TensorFlow, PyTorch, and Hugging Face make it easy to implement complex models.

Data Engineering and Big Data Analytics: Key Skills for 2025

As the world generates more data, data engineering and big data analytics have become important in the data science landscape. In 2025, platforms like Apache Spark are expected to play a pivotal role in how data is processed and analyzed. Understanding the ETL (Extract, Transform, Load) process, data pipelines, and the tools that make these processes efficient is crucial to building data science skills that are in high demand.

The Role of Big Data Platforms like Apache Spark in 2025

In 2025, the role of big data platforms like Apache Spark is more significant than ever. These platforms allow companies to process vast amounts of data efficiently, enabling real-time analytics, machine learning, and data-driven decision-making.

Why Apache Spark?

Apache Spark is an open-source, distributed computing system for large-scale data processing. It is often much faster than Hadoop MapReduce and also supports near-real-time stream processing. Here’s why it’s critical:

- Speed: By keeping intermediate data in memory instead of writing it to disk between steps, Spark can run some workloads, especially iterative ones, many times faster than Hadoop MapReduce (speedups of up to 100x are often quoted).

- Scalability: It can handle petabytes of data, which is crucial for big data analytics.

- Ease of Use: With APIs in languages like Python, Java, and Scala, Apache Spark makes it easy for data scientists and engineers to interact with large datasets.

Example: Basic Apache Spark Setup in Python

```python
from pyspark.sql import SparkSession

# Initialize Spark session
spark = SparkSession.builder.appName("SparkExample").getOrCreate()

# Load a sample dataset (replace the placeholder path with your own CSV file)
df = spark.read.csv("path_to_file.csv", header=True, inferSchema=True)

# Perform some basic operations
df.show()  # Display the data
df.describe().show()  # Get summary statistics
```

Data Engineering Skills: ETL Processes, Data Pipelines, and Beyond

Data engineering is the backbone of any successful data science operation. It involves creating and maintaining data pipelines that transform raw data into clean, analysis-ready datasets. ETL processes (Extract, Transform, Load) are central to this work.

What is ETL?

ETL is a process that involves three steps:

- Extracting data from different sources.

- Transforming it into a format that’s easy to use.

- Loading it into a data warehouse or database for storage and analysis.

In simple terms, ETL helps gather, clean, and organize data so it’s ready to be used.

Why is ETL Important for Data Science?

Without a solid ETL process, your data would be incomplete or disorganized, making analysis difficult. Proper ETL processes ensure that data is clean, consistent, and ready for data science work.

Data Pipelines: Automating the Flow of Data

A data pipeline automates the flow of data through different stages, including collection, processing, and storage. A well-designed pipeline ensures that data is available in real-time, or at least in time for decision-making. Key skills related to building data pipelines include:

- Data Integration: Combining data from multiple sources, whether structured or unstructured.

- Data Transformation: Cleaning, filtering, and processing data for analysis.

- Data Storage: Storing the data in a data warehouse or lake that is optimized for querying.

Example: Building a Simple ETL Pipeline in Python

```python
import sqlite3
import pandas as pd

# Step 1: Extract data
data = pd.read_csv('data_source.csv')

# Step 2: Transform the data
data_cleaned = data.dropna()  # Remove rows with missing values

# Step 3: Load data into a database (e.g., SQLite)
conn = sqlite3.connect('database.db')
data_cleaned.to_sql('cleaned_data', conn, if_exists='replace', index=False)
conn.close()
```

This Python code demonstrates a simple ETL process using pandas for data extraction and transformation and sqlite3 for data storage.

Key Components of Data Engineering in 2025

As data continues to grow, so will the complexity of the data engineering tools and processes used to handle it. Here are the key components to focus on:

- Cloud Platforms: Tools like AWS, Google Cloud, and Azure are important for scalable data storage and processing.

- Batch vs. Real-Time Processing: Understanding when to use batch processing (for large volumes of data) versus real-time processing (for immediate insights) is crucial.

- Data Warehouses and Lakes: These storage systems are optimized for handling massive datasets efficiently.

- Orchestration Tools: Technologies like Apache Airflow help automate and schedule workflows within data pipelines.

- Data Governance: Ensuring that data is accurate, secure, and compliant with regulations.
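The batch-versus-real-time distinction can be illustrated with a simple average. In this sketch, which uses hypothetical order values, the batch version waits for the complete dataset, while the streaming version updates its answer after every event using an incremental mean, so it never needs to store the history.

```python
from typing import Iterable, Iterator

def batch_average(values: list[float]) -> float:
    """Batch: wait until all data is collected, then compute the result once."""
    return sum(values) / len(values)

def streaming_average(events: Iterable[float]) -> Iterator[float]:
    """Real-time: update the result as each event arrives, without storing past events."""
    count, mean = 0, 0.0
    for value in events:
        count += 1
        mean += (value - mean) / count  # incremental mean update
        yield mean

# Hypothetical order values arriving over time
orders = [20.0, 35.0, 50.0, 15.0]
print(batch_average(orders))             # 30.0
print(list(streaming_average(orders)))   # [20.0, 27.5, 35.0, 30.0]
```

Production systems usually rely on engines such as Apache Spark rather than hand-written loops, but the trade-off is the same: batch results are computed once over complete data, while streaming results are always current but provisional.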

Future Trends in Data Engineering

| Trend | Description |
|---|---|
| Serverless Data Processing | Serverless services such as AWS Lambda are expected to simplify infrastructure management. |
| Data Mesh | A decentralized approach to data architecture in which business domains own their data. |
| Machine Learning in ETL | Machine learning models are expected to help automate data transformation and anomaly detection. |

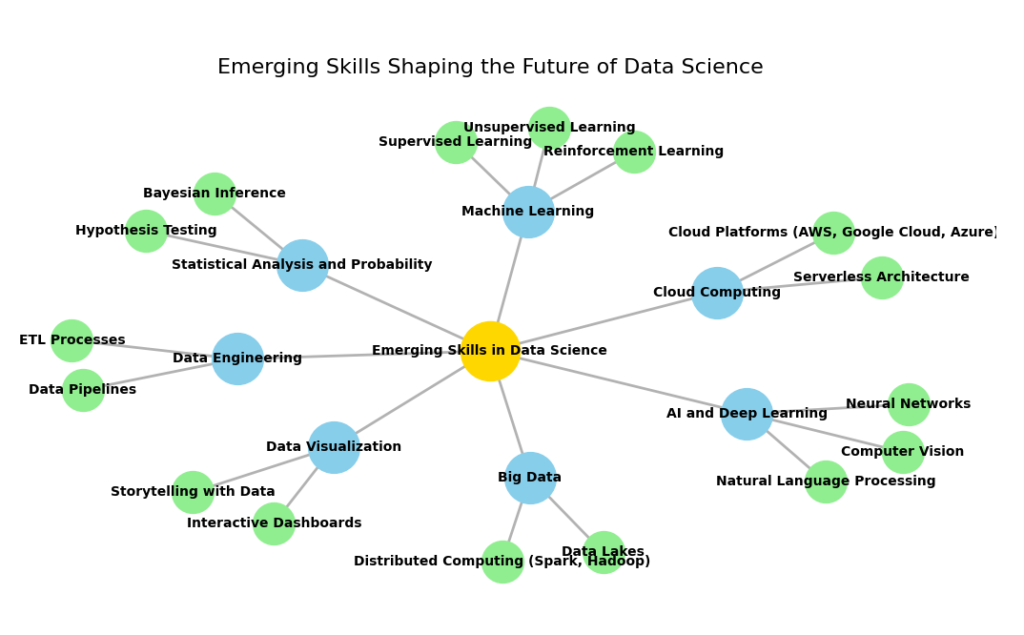

Emerging Skills Shaping the Future of Data Science

The world of data science is rapidly evolving, with new skills, tools, and techniques emerging that are shaping the future of the field. As we look toward 2025 and beyond, several key areas are gaining momentum, including MLOps, AI ethics, and quantum computing. These innovations are not just buzzwords—they are the foundation of data science skills that will drive the next wave of technological advancements.

MLOps: The Future of Machine Learning Operations

As machine learning (ML) models become more complex and integrated into business operations, there is an increasing need for systems that manage these models effectively. This is where MLOps comes in. MLOps (Machine Learning Operations) is the practice of combining machine learning with DevOps principles to manage the lifecycle of models.

Automating Model Deployment and Monitoring with MLOps Tools

One of the primary goals of MLOps is to automate the deployment, monitoring, and management of machine learning models. This is crucial because, unlike traditional software applications, machine learning models need to be updated and monitored continuously to ensure they remain accurate and effective over time.

Key MLOps Tools

- Kubeflow: An open-source platform that helps manage ML workflows.

- TensorFlow Extended (TFX): A framework for deploying and managing ML pipelines at scale.

- MLflow: A tool to track experiments, model deployment, and versioning.

By using these tools, data scientists can build scalable ML pipelines that automate the deployment of models and monitor their performance over time.

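Example: Logging a Model with MLflow

```python
import mlflow
import mlflow.sklearn
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Synthetic data so the example is self-contained
X, y = make_classification(n_samples=500, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)

# Train the model, then log a test metric and the model itself inside an MLflow run
with mlflow.start_run():
    model = RandomForestClassifier(random_state=42)
    model.fit(X_train, y_train)
    mlflow.log_metric("test_accuracy", model.score(X_test, y_test))
    mlflow.sklearn.log_model(model, "model")
```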

This simple example shows how to log and track a machine learning model using MLflow. By doing this, it becomes easy to manage multiple models and monitor their performance.
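Logging a model covers tracking; monitoring a deployed model also means checking whether production data still resembles the data it was trained on. One common drift metric is the Population Stability Index (PSI). The sketch below is a plain NumPy implementation, not an API from Kubeflow, TFX, or MLflow, and the synthetic feature values are assumptions for illustration.

```python
import numpy as np

def population_stability_index(expected, actual, bins=10):
    """PSI between a feature's training (expected) and production (actual) values."""
    # Interior cut points from training-data quantiles, so training data is spread evenly across bins
    cuts = np.quantile(expected, np.linspace(0, 1, bins + 1))[1:-1]
    e = np.bincount(np.digitize(expected, cuts), minlength=bins) / len(expected)
    a = np.bincount(np.digitize(actual, cuts), minlength=bins) / len(actual)
    e, a = np.clip(e, 1e-6, None), np.clip(a, 1e-6, None)  # avoid log(0) for empty bins
    return float(np.sum((a - e) * np.log(a / e)))

rng = np.random.default_rng(42)
train_feature = rng.normal(0, 1, 10_000)
same = population_stability_index(train_feature, rng.normal(0, 1, 10_000))
shifted = population_stability_index(train_feature, rng.normal(0.5, 1, 10_000))
print(f"same distribution: {same:.3f}, shifted mean: {shifted:.3f}")
```

A commonly quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift that may warrant retraining, though thresholds should be tuned to each use case.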

The Significance of DevOps Skills in Data Science Workflows

While MLOps focuses specifically on machine learning, DevOps principles are equally important in data science workflows. DevOps is a set of practices that combines software development and IT operations, helping teams deliver applications faster and more reliably. For data scientists, DevOps skills are important for automating the management of data pipelines and ensuring continuous integration and deployment (CI/CD) for models.

Key DevOps Skills for Data Scientists

- Version control (Git): Managing changes to code and data pipelines.

- Containerization (Docker): Packaging code and models in containers to ensure consistency across environments.

- CI/CD pipelines: Automating the process of testing, building, and deploying models.
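As an illustration of the CI/CD point, here is a sketch of a model quality check that a CI job could run on every commit, for example with pytest, which collects functions whose names start with `test_`. The Iris dataset, the logistic regression model, and the `MIN_ACCURACY` threshold are all assumptions chosen for the example.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

MIN_ACCURACY = 0.9  # hypothetical quality bar agreed with the team

def train_model():
    """Train the model exactly as the production pipeline would (simplified here)."""
    X, y = load_iris(return_X_y=True)
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.3, random_state=0, stratify=y)
    model = LogisticRegression(max_iter=1000).fit(X_train, y_train)
    return model, X_test, y_test

def test_model_meets_accuracy_bar():
    """Fails the CI run if held-out accuracy drops below the agreed bar."""
    model, X_test, y_test = train_model()
    assert model.score(X_test, y_test) >= MIN_ACCURACY

if __name__ == "__main__":
    test_model_meets_accuracy_bar()
    print("model quality check passed")
```

If a code or data change pushes accuracy below the bar, the test fails and the pipeline can block the deployment.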

By integrating DevOps principles with MLOps, data scientists can create more efficient and scalable workflows for machine learning model deployment and management.

AI Ethics and Responsible AI Practices

As artificial intelligence (AI) continues to evolve, it brings with it new ethical challenges. Understanding AI ethics is becoming increasingly important for data scientists, as AI models have a significant impact on society, including issues around privacy, bias, and fairness.

Why Understanding AI Ethics Is Important in 2025

In 2025, AI ethics is likely to be not only a critical part of the data science field but also a competitive advantage for professionals who know how to build ethical models. With AI systems being used in healthcare, finance, and even legal settings, it is important to ensure that these models are fair, transparent, and responsible.

Key Ethical Considerations

- Bias and Fairness: AI models can perpetuate or even amplify biases present in the training data, leading to unfair outcomes.

- Transparency: Understanding how a model makes decisions (i.e., model interpretability) is important for building trust.

- Privacy: AI systems must respect user privacy and comply with regulations like GDPR.

By staying informed about AI ethics, data scientists can build AI systems that not only perform well but also make a positive social impact.

Example: Mitigating Bias in Machine Learning Models

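```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.ensemble import RandomForestClassifier

# Load data (hypothetical stand-in for load_data(): synthetic features plus a binary group attribute)
X, y = make_classification(n_samples=1000, random_state=0)
group = np.random.default_rng(0).integers(0, 2, size=len(y))

# Split data (and group membership) into train and test sets
X_train, X_test, y_train, y_test, g_train, g_test = train_test_split(
    X, y, group, test_size=0.3, random_state=0)

# Train a model
model = RandomForestClassifier(random_state=0)
model.fit(X_train, y_train)

# Assess fairness: compare error rates across groups; a large gap signals the model needs adjusting
errors = model.predict(X_test) != y_test
for g in (0, 1):
    print(f"Group {g} error rate: {errors[g_test == g].mean():.3f}")
```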

Comparing error rates across groups is only a starting point: assessing and adjusting a model for fairness is an ongoing task for data scientists, and it is especially crucial when AI is used in sensitive areas like hiring or loan approval.

The Impact of AI Governance on Data Science Careers

AI governance is an emerging field that is concerned with creating policies and frameworks to ensure that AI systems are developed, deployed, and used responsibly. This includes establishing guidelines for transparency, accountability, and fairness. As AI becomes more prevalent, AI governance will become an important aspect of data science careers.

Data scientists who are well-versed in AI governance will have a competitive edge, as companies seek professionals who can develop ethical, transparent, and reliable AI systems.

Quantum Computing in Data Science

In 2025, quantum computing may begin to play a more significant role in data science. Quantum computers are designed to solve certain complex problems that are currently out of reach for classical computers. This could revolutionize areas like cryptography, optimization, and machine learning.

An Introduction to Quantum Computing for Data Scientists

Quantum computing uses principles of quantum mechanics, such as superposition and entanglement, to tackle certain problems that are intractable for classical computers. While it is still in its early stages, data scientists may benefit from learning about quantum algorithms, since these could become important tools for processing complex datasets.

Key Concepts in Quantum Computing

- Qubits: Qubits are the fundamental units of quantum computing. Like classical bits, they are measured as 0 or 1, but before measurement they can exist in a superposition of both states.

- Quantum superposition: The ability of a qubit to be in a combination of 0 and 1 states.

- Quantum entanglement: A phenomenon where qubits become linked, such that the state of one qubit depends on the state of another.
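Superposition and entanglement can be made concrete with a few lines of linear algebra. The sketch below simulates qubits classically with NumPy state vectors rather than a quantum computing SDK: a Hadamard gate puts one qubit into an equal superposition, and adding a CNOT gate produces an entangled Bell state.

```python
import numpy as np

# Single-qubit basis state |0> and two standard gates
ket0 = np.array([1.0, 0.0])
H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)  # Hadamard: creates an equal superposition
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]])               # flips qubit 2 when qubit 1 is |1>

# Superposition: H|0> has equal amplitudes for |0> and |1>
plus = H @ ket0
print(np.abs(plus) ** 2)   # measurement probabilities for |0>, |1>

# Entanglement: start from |00>, apply H to qubit 1, then CNOT
state = CNOT @ np.kron(plus, ket0)
print(np.abs(state) ** 2)  # probabilities for |00>, |01>, |10>, |11>
```

The first printout shows equal probabilities for 0 and 1. The second shows that only `|00>` and `|11>` can occur, so measuring one qubit immediately tells you the state of the other, which is the correlation that entanglement describes.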

How Quantum Algorithms Are Transforming Data Analysis

Quantum algorithms promise to speed up data analysis for certain types of problems. For example, Grover’s algorithm offers a quadratic speedup for unstructured search, while Shor’s algorithm factors integers dramatically faster than the best known classical algorithms, which matters for cryptography because widely used schemes such as RSA rely on factoring being hard.

Example: Quantum Search Algorithm (Grover’s Algorithm)

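```python
import numpy as np

# Classical NumPy simulation of Grover's search (not real quantum hardware)
def grover_search(n_qubits, target):
    n = 2 ** n_qubits
    state = np.full(n, 1 / np.sqrt(n))            # Initialize: uniform superposition
    for _ in range(int(np.pi / 4 * np.sqrt(n))):  # About (pi/4) * sqrt(N) iterations
        state[target] *= -1                       # Oracle: flip the sign of the marked item
        state = 2 * state.mean() - state          # Diffusion: reflect amplitudes about the mean
    return int(np.argmax(state ** 2))             # Measure: most likely outcome

print(grover_search(4, target=11))  # prints 11
```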

Practical quantum advantage for data science is still largely theoretical, but the impact of quantum algorithms could be profound, particularly in optimization and machine learning.

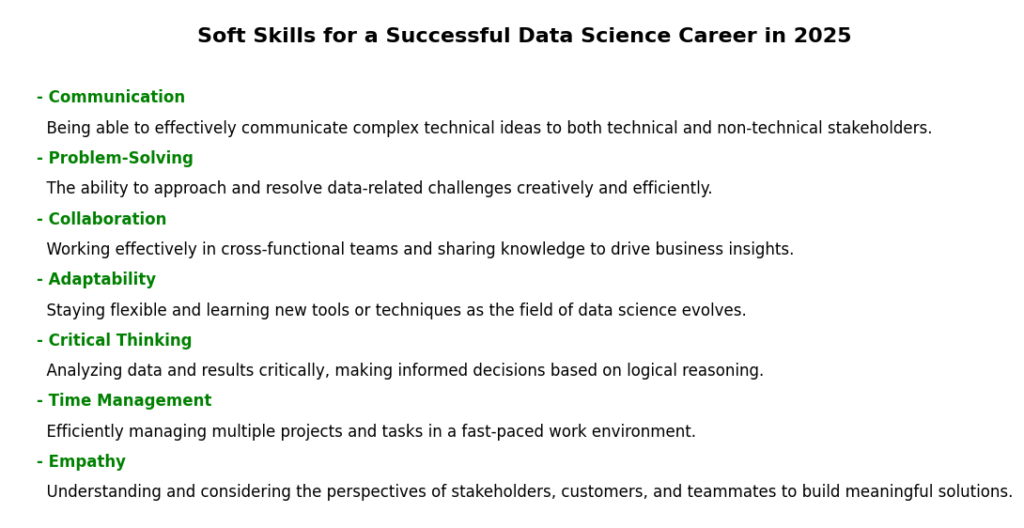

Soft Skills for a Successful Data Science Career in 2025

Data Storytelling and Visualization

In the fast-paced world of data science, the ability to turn raw data into compelling stories is a game-changer. Data storytelling is about more than just presenting numbers—it’s about creating a narrative that explains the insights behind the data in a way that resonates with your audience.

Why Storytelling is a Game-Changer in Data Science Communication

Storytelling has always been at the heart of human communication, and it’s no different when it comes to data science. The most sophisticated models and analyses won’t be useful unless you can convey their insights clearly and persuasively. In 2025, being able to tell a compelling story with data will be important for influencing business decisions, shaping strategies, and driving action.

Effective data storytelling combines visuals, narratives, and context to make data understandable and actionable for diverse audiences, from executives to technical teams.

Example: Using a Story to Explain Data Insights

Imagine you have a dataset showing customer satisfaction scores across various regions. Instead of just displaying a table or graph of the scores, you could tell a story like this:

- “Our satisfaction scores have dipped in the last quarter, especially in the Eastern region, where we see a sharp decline in scores from 85% to 70%. After investigating, we found that customers in this region have had issues with product delivery times, which have been consistently higher than the national average.”

This type of storytelling helps contextualize the data and drives action, such as improving delivery processes in the Eastern region.

Mastering Tools like Tableau, Power BI, and Matplotlib

To tell these stories, data visualization tools like Tableau, Power BI, and Matplotlib are important. These tools help transform complex datasets into clear, visually engaging reports that are easy to understand.

Tableau and Power BI

- Both are user-friendly tools that allow you to create interactive dashboards, making it easier to explore and share data insights.

- With Tableau, you can quickly connect to various data sources and create beautiful visualizations with drag-and-drop ease.

- Power BI, Microsoft’s business analytics tool, is tightly integrated with Microsoft products, making it perfect for organizations that use tools like Excel and Azure.

Matplotlib (for Python)

- For those who prefer coding their visualizations, Matplotlib is a powerful Python library for creating static, animated, and interactive visualizations.

- Whether you’re creating line charts, histograms, or even complex heatmaps, Matplotlib gives you the flexibility to create exactly what you need.
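As a sketch of how the Eastern-region story above could become a chart, the Matplotlib code below plots quarterly satisfaction by region, emphasizes the region in question, and annotates the drop. Apart from the 85% to 70% decline taken from the story, all scores and region names are hypothetical.

```python
import matplotlib
matplotlib.use("Agg")  # render to a file; no display needed
import matplotlib.pyplot as plt

# Hypothetical quarterly satisfaction scores (%); only East's Q3-to-Q4 drop (85 to 70) comes from the story
quarters = ["Q1", "Q2", "Q3", "Q4"]
scores = {"East": [84, 86, 85, 70], "West": [82, 83, 84, 84], "North": [80, 81, 81, 82]}
positions = list(range(len(quarters)))

fig, ax = plt.subplots(figsize=(6, 4))
for region, values in scores.items():
    # Emphasize the region the story is about and mute the others
    ax.plot(positions, values, marker="o", label=region,
            linewidth=3 if region == "East" else 1)
ax.set_xticks(positions)
ax.set_xticklabels(quarters)
ax.annotate("Delivery delays", xy=(3, 70), xytext=(1.5, 73),
            arrowprops={"arrowstyle": "->"})
ax.set_title("Eastern region satisfaction fell sharply in Q4")
ax.set_ylabel("Satisfaction (%)")
ax.legend()
fig.tight_layout()
fig.savefig("satisfaction_by_region.png")
```

The title states the insight, and the line emphasis plus the annotation steer the audience to the one trend that matters.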

By mastering these tools, data scientists can create visualizations that tell clear, actionable stories.

Collaboration and Leadership

As data science becomes more integrated into various business functions, the ability to work well with others and lead teams becomes vital. Being a strong collaborator and leader can significantly enhance the success of any data-driven project.

Teamwork in Cross-Functional Data Science Projects

In today’s data-driven world, cross-functional collaboration is a must. Data scientists often work alongside business analysts, software engineers, product managers, and marketers. In 2025, teamwork in these projects will be crucial as businesses look to use data science to solve complex problems.

Effective collaboration requires more than just technical knowledge. It’s about being able to communicate data insights to non-technical team members, understanding their needs, and working together to find solutions.

Key Aspects of Teamwork in Data Science Projects

- Clear Communication: Sharing complex ideas in a way that everyone on the team can understand.

- Problem Solving: Working together to identify challenges and figure out the best approach to solving them.

- Adaptability: Being flexible and open to feedback and ideas from other team members.

Leadership Skills for Managing AI-Driven Initiatives

Leading a team that’s working on AI-driven projects requires a unique skill set. Leaders must understand both the technical side of AI and the business implications of the work being done. They also need to be great communicators, able to explain the value of AI projects to stakeholders and drive innovation.

Key Leadership Skills for AI Initiatives

- Vision: A strong leader needs to have a clear vision for how AI can be applied to solve business problems.

- Delegation: A good leader knows when and how to delegate tasks to ensure the project runs smoothly.

- Risk Management: AI projects often involve a degree of uncertainty, and leaders must be able to navigate risks effectively.

In 2025, data scientists who possess leadership skills and the ability to manage AI-driven initiatives will be in high demand.

Adaptability and Lifelong Learning

In this fast-changing environment, adaptability and a commitment to lifelong learning will be important for success.

Keeping Up with the Fast-Evolving Data Science Landscape

To remain competitive, data scientists need to keep their skills up to date. Whether it’s learning new tools, staying informed about the latest AI trends, or mastering emerging programming languages, continuous learning is key.

How to Keep Learning in Data Science

- Online Courses: Platforms like Coursera, edX, and Udacity offer a wealth of courses to help you stay current with new technologies and concepts.

- Workshops and Conferences: Attending industry workshops and conferences is an excellent way to network and learn about the latest advancements in data science.

- Reading Blogs and Research Papers: Staying informed by following thought leaders and reading academic research papers will give you an edge in the ever-changing field of data science.

Online Resources and Communities for Continuous Skill Development

Joining online communities can provide valuable insights and networking opportunities for data scientists. Some popular communities include:

- Kaggle: A platform for data science competitions and projects, where you can improve your skills by working on real-world datasets.

- Stack Overflow: A go-to resource for getting answers to your coding questions and collaborating with fellow data scientists.

- GitHub: A place to share your projects and collaborate on open-source data science initiatives.

These resources help data scientists stay sharp and adapt to new developments in the field.

Domain-Specific Knowledge: An Underrated Skill

Specialized Skills for Industry Applications

While foundational skills in data science—such as machine learning, statistics, and data visualization—are important, applying them effectively requires knowledge of the specific challenges and needs within various industries. Below, we will explore how specialized data science skills can be used in industries like healthcare, finance, and environmental science, focusing on how these skills directly impact decision-making and innovations in these sectors.

Data Science in Healthcare: Skills for Predictive Analytics

Healthcare is one of the most data-rich industries, yet it has a unique set of challenges that make data science applications particularly complex. With increasing access to patient data and the rise of technologies like wearable devices, there is a growing need for data scientists who can apply predictive analytics to solve healthcare problems.

Key Skills for Data Science in Healthcare

- Predictive Modeling: The ability to predict health outcomes using data from patient histories, lab results, and lifestyle factors is crucial. Techniques such as logistic regression, decision trees, and support vector machines (SVM) are commonly used for predictive healthcare analytics.

- Natural Language Processing (NLP): Much of healthcare data comes in unstructured forms, such as medical records or doctor’s notes. Skills in NLP help data scientists extract useful information from these text-heavy datasets to make better-informed predictions and decisions.

- Data Privacy and Ethics: Understanding regulations like HIPAA (Health Insurance Portability and Accountability Act) is important for working with sensitive health data. Data scientists need to know how to balance innovation with privacy concerns.

- Time Series Analysis: Predicting trends over time, such as patient health deterioration or disease outbreaks, requires advanced time series analysis techniques. Knowing how to forecast future health events based on historical data is vital for creating predictive models.

By mastering these skills, data scientists can help predict disease outbreaks, improve patient care, and even reduce healthcare costs through more efficient use of resources.

Example: Predicting Patient Readmissions

Using predictive analytics, hospitals can reduce patient readmission rates by identifying patients at high risk of returning within 30 days. Data scientists use logistic regression models to analyze factors like age, medical history, and previous admissions, helping healthcare providers offer preventive care or intervention strategies.
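A minimal sketch of that workflow with scikit-learn is shown below. The features (age, prior admissions, chronic conditions) and the data are synthetic assumptions generated on the spot, and the 0.5 risk threshold is arbitrary; a real readmission model would use actual patient records, many more variables, and a threshold chosen with clinicians.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
n = 1000

# Synthetic stand-in for patient records (hypothetical features, not real data):
# age in years, admissions in the past year, number of chronic conditions.
age = rng.integers(20, 90, size=n)
prior_admissions = rng.poisson(1.0, size=n)
chronic_conditions = rng.integers(0, 5, size=n)
X = np.column_stack([age, prior_admissions, chronic_conditions])

# Simulate 30-day readmission from a made-up logistic relationship.
logit = -6.0 + 0.04 * age + 0.8 * prior_admissions + 0.5 * chronic_conditions
y = rng.random(n) < 1 / (1 + np.exp(-logit))

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)

# Estimated probability of readmission for each held-out patient.
risk = model.predict_proba(X_test)[:, 1]
print("ROC AUC:", round(roc_auc_score(y_test, risk), 3))

# Flag the highest-risk patients for follow-up (threshold chosen arbitrarily here).
high_risk = risk >= 0.5
print("Patients flagged:", int(high_risk.sum()))
```

Because logistic regression outputs probabilities and per-feature coefficients, care teams can see both who is at risk and which factors drive that risk.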

Data Science in Finance: Skills for Data-Driven Investment Strategies

In the finance and investment sectors, data science has become an indispensable tool. With the rise of big data and machine learning, financial analysts and investors are increasingly relying on sophisticated models to make decisions.

Key Skills for Data Science in Finance

- Quantitative Analysis: Financial data analysis often involves complex statistical models. Data scientists need to understand stochastic processes, probability theory, and statistical inference to build models for pricing options and other derivatives or for managing portfolios.

- Risk Management: Predicting and mitigating financial risk, increasingly with the help of machine learning, is crucial. Risk measures such as Value-at-Risk (VaR), often estimated with Monte Carlo simulations, are used to assess potential losses under different market scenarios.

- Time Series Forecasting: Financial markets are highly dynamic, and forecasting stock prices, interest rates, and currency fluctuations requires advanced techniques in time series analysis. Models such as ARIMA (AutoRegressive Integrated Moving Average) and LSTM (Long Short-Term Memory) networks are commonly used to model market trends.

- Fraud Detection: Machine learning algorithms are increasingly being used to detect unusual patterns that could indicate fraudulent activities. Algorithms like decision trees, support vector machines, and neural networks help banks and financial institutions identify fraud in real time.
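As an illustration of the risk-management bullet, the sketch below estimates a one-day Value-at-Risk by Monte Carlo simulation. It assumes daily log returns are independent and normally distributed; the portfolio value, mean return, and volatility are made-up inputs, and `monte_carlo_var` is a hypothetical helper name. Real desks typically use historical or fatter-tailed return models instead of a plain normal.

```python
import numpy as np

def monte_carlo_var(value, mu, sigma, horizon_days=1, confidence=0.99,
                    n_sims=100_000, seed=42):
    """Estimate Value-at-Risk by simulating portfolio returns.

    Assumes daily log returns are i.i.d. normal with mean `mu` and
    standard deviation `sigma` (a simplifying assumption).
    """
    rng = np.random.default_rng(seed)
    # Simulate the total log return over the horizon for each scenario.
    log_returns = rng.normal(mu * horizon_days,
                             sigma * np.sqrt(horizon_days), size=n_sims)
    # Convert each scenario to a profit/loss in currency units.
    pnl = value * (np.exp(log_returns) - 1)
    # VaR is the loss at the (1 - confidence) quantile, reported as a positive number.
    return -np.quantile(pnl, 1 - confidence)

var_99 = monte_carlo_var(value=1_000_000, mu=0.0005, sigma=0.02)
print(f"1-day 99% VaR: {var_99:,.0f}")
```

Read the result as "on 99% of simulated days, the loss does not exceed this amount". Swapping the normal draw for resampled historical returns is a common way to relax the distributional assumption.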

With the right mix of financial knowledge and technical expertise, data scientists can contribute to smarter investment strategies, improved fraud detection, and enhanced financial risk management.

Example: Credit Scoring Using Machine Learning

Machine learning is widely used for credit scoring, where a model can predict whether an individual is likely to default on a loan. By analyzing historical transaction data and financial behavior, data scientists build models to assess the creditworthiness of potential borrowers.
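A minimal sketch of such a model is below. The borrower features (income, debt-to-income ratio, late payments), the synthetic default process, and the applicant are all hypothetical, and gradient boosting is just one common choice of algorithm. Production credit models also need fairness and explainability checks that this sketch omits.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import cross_val_score

rng = np.random.default_rng(1)
n = 2000

# Hypothetical borrower features (synthetic, for illustration only).
income = rng.normal(55_000, 15_000, size=n).clip(10_000, None)
debt_to_income = rng.uniform(0.0, 0.6, size=n)
late_payments = rng.poisson(0.5, size=n)
X = np.column_stack([income, debt_to_income, late_payments])

# Made-up default process: higher DTI and more late payments raise risk.
logit = -3.0 - income / 50_000 + 6.0 * debt_to_income + 0.9 * late_payments
y = (rng.random(n) < 1 / (1 + np.exp(-logit))).astype(int)

model = GradientBoostingClassifier(random_state=0)

# 5-fold cross-validated ROC AUC gives a rough sense of how well the model ranks risk.
auc = cross_val_score(model, X, y, cv=5, scoring="roc_auc")
print("Mean CV ROC AUC:", auc.mean().round(3))

# Fit on all data and score a new (hypothetical) applicant.
model.fit(X, y)
applicant = np.array([[42_000, 0.45, 2]])
p_default = model.predict_proba(applicant)[0, 1]
print(f"Estimated probability of default: {p_default:.2%}")
```

Lenders usually convert the predicted probability of default into a score band or an approve/review/decline decision according to their own risk appetite.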

Geospatial Analytics and Climate Modeling

The intersection of geospatial analytics and climate modeling has gained significant attention in recent years, especially as concerns about climate change grow. By analyzing geospatial data and using machine learning to model climate trends, data scientists are playing a critical role in understanding and mitigating environmental issues.

Using GIS Tools for Geospatial Data Analysis

Geographic Information Systems (GIS) are powerful tools for analyzing spatial data, such as weather patterns, land use, and population density. By integrating GIS with machine learning, data scientists can extract meaningful insights to inform decision-making on topics ranging from urban planning to climate change.

Key GIS Tools and Skills

- ArcGIS: One of the leading GIS platforms, used to visualize and analyze geographic data.

- QGIS: An open-source alternative that allows for powerful spatial data analysis and mapping.

- Spatial Statistics: Data scientists must understand how to use spatial data to look for patterns and correlations, such as changes in temperature or rainfall in different regions.
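One standard spatial statistic for "looking for patterns" is Moran's I, which measures whether neighboring locations have similar values. The sketch below computes global Moran's I with NumPy on a regular grid, assuming simple rook-contiguity weights (cells sharing an edge are neighbors); the helper names are hypothetical. Libraries such as PySAL provide full implementations with significance tests.

```python
import numpy as np

def rook_weights(n_rows, n_cols):
    """Binary rook-contiguity weights for the cells of an n_rows x n_cols grid."""
    n = n_rows * n_cols
    w = np.zeros((n, n))
    for r in range(n_rows):
        for c in range(n_cols):
            i = r * n_cols + c
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < n_rows and 0 <= cc < n_cols:
                    w[i, rr * n_cols + cc] = 1.0
    return w

def morans_i(values, w):
    """Global Moran's I: near +1 means similar values cluster, near -1 means they alternate."""
    x = np.asarray(values, dtype=float).ravel()  # row-major, matching rook_weights
    z = x - x.mean()
    return (x.size / w.sum()) * (z @ w @ z) / (z @ z)

# A smooth west-to-east gradient (e.g. temperature rising across a region).
gradient = np.tile(np.arange(5, dtype=float), (5, 1))
print(round(morans_i(gradient, rook_weights(5, 5)), 3))  # → 0.75

# A checkerboard, where every neighbor has the opposite value.
checkerboard = np.indices((4, 4)).sum(axis=0) % 2
print(round(morans_i(checkerboard, rook_weights(4, 4)), 3))  # → -1.0
```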

Example: Mapping Urban Heat Islands

Geospatial analytics can be used to map urban heat islands—areas in cities that experience higher temperatures than surrounding rural areas due to human activity. Using GIS tools and temperature data, data scientists can analyze these heat patterns and recommend changes in urban design or green space development to combat rising temperatures.
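A toy version of that analysis is shown below. The 3 x 3 temperature grid, the urban/rural mask, and the 2 °C hotspot threshold are invented for illustration; in practice the grid would come from satellite land-surface temperature data and the mask from land-cover layers in a GIS. Urban-minus-rural mean temperature is one simple, commonly used definition of heat-island intensity.

```python
import numpy as np

# Hypothetical 3 x 3 grid of land-surface temperatures (deg C) and land-cover mask.
temperature = np.array([
    [29.0, 29.5, 29.0],
    [30.0, 33.0, 32.0],
    [29.5, 32.5, 29.0],
])
is_urban = np.array([
    [False, False, False],
    [False, True, True],
    [False, True, False],
])

rural_mean = temperature[~is_urban].mean()
urban_mean = temperature[is_urban].mean()

# Heat-island intensity: urban mean minus rural mean temperature.
intensity = urban_mean - rural_mean
print(f"Heat-island intensity: {intensity:.2f} deg C")  # → 3.17

# Flag cells more than 2 deg C warmer than the rural baseline (illustrative threshold).
hotspots = temperature > rural_mean + 2.0
print(hotspots.astype(int))
```

The flagged cells are natural candidates for interventions such as added tree cover or reflective roofing.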

Using Machine Learning for Climate Data Predictions in 2025

Machine learning is increasingly used for climate modeling, helping scientists and policymakers predict future climate conditions and assess the impact of human activities on global warming. With access to vast amounts of climate data, including satellite imagery and weather station readings, machine learning models can help predict trends like sea-level rise, temperature fluctuations, and extreme weather events.

Key Skills for Machine Learning in Climate Modeling

- Data Preprocessing: Climate data is often messy and unstructured, requiring substantial preprocessing before machine learning algorithms can be applied. Cleaning and normalizing data are important for accurate predictions.

- Deep Learning for Climate Predictions: Deep learning models, particularly convolutional neural networks (CNNs) and recurrent neural networks (RNNs), are increasingly used to predict weather patterns, climate anomalies, and even ocean temperature changes.

- Model Evaluation: Climate models require careful validation to ensure accuracy. Cross-validation and error analysis are crucial steps in confirming that the machine learning models are predicting climate data correctly.
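The sketch below ties preprocessing and evaluation together on a synthetic monthly temperature-anomaly series (trend, annual cycle, and noise are made-up values). It fills missing readings by interpolation, scales features inside a pipeline, and uses scikit-learn's `TimeSeriesSplit` so every test fold lies after its training data. A small Ridge regression stands in for the CNN/RNN models mentioned above, purely to keep the example short.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import TimeSeriesSplit
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(7)

# Synthetic monthly temperature-anomaly series (made-up values):
# slow trend + annual cycle + noise, with a few readings missing.
t = np.arange(240)
series = 0.002 * t + 0.3 * np.sin(2 * np.pi * t / 12) + rng.normal(0, 0.1, t.size)
series[rng.choice(t.size, size=10, replace=False)] = np.nan

# Preprocessing step 1: fill gaps by linear interpolation over time.
missing = np.isnan(series)
series[missing] = np.interp(t[missing], t[~missing], series[~missing])

# Preprocessing step 2: supervised framing, previous 12 months -> next month.
lags = 12
X = np.column_stack([series[i:i - lags] for i in range(lags)])
y = series[lags:]

# Scaling lives inside the pipeline, so it is fit on each training fold only.
model = make_pipeline(StandardScaler(), Ridge(alpha=1.0))

# TimeSeriesSplit always trains on the past and tests on the future,
# avoiding the leakage a shuffled k-fold split would introduce.
errors = []
for train_idx, test_idx in TimeSeriesSplit(n_splits=5).split(X):
    model.fit(X[train_idx], y[train_idx])
    errors.append(mean_absolute_error(y[test_idx], model.predict(X[test_idx])))

print("MAE per fold:", np.round(errors, 3))
```

Looking at the error per fold, rather than only the average, is a simple form of error analysis: a fold with unusually high error points to a period the model handles poorly.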

Example: Predicting Sea-Level Rise Using Machine Learning

By analyzing satellite data on sea levels, scientists can use machine learning to predict future sea-level rise based on current trends. These models take into account various factors, such as temperature, ice melt rates, and ocean currents, to predict how coastlines might change in the coming decades.
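The simplest form of "predicting based on current trends" is fitting a trend line and extrapolating it, as in the sketch below. The annual sea-level values are synthetic (a made-up 3.3 mm/yr slope plus noise), not real satellite measurements. The multi-factor models described above would add drivers such as temperature and ice-melt rates as features, and a straight line cannot capture any acceleration in the rate of rise.

```python
import numpy as np

rng = np.random.default_rng(3)

# Synthetic annual mean sea level (mm, relative to the first year).
# The 3.3 mm/yr slope and the noise level are made-up illustration values.
years = np.arange(1993, 2024)
sea_level_mm = 3.3 * (years - years[0]) + rng.normal(0, 4, years.size)

# Fit a straight line: sea_level ≈ slope * year + intercept.
slope, intercept = np.polyfit(years, sea_level_mm, deg=1)
print(f"Estimated trend: {slope:.2f} mm/yr")

# Extrapolate the fitted line (assumes the rate stays constant).
future = np.array([2030, 2050])
projection = slope * future + intercept
print(dict(zip(future.tolist(), np.round(projection, 1).tolist())))
```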

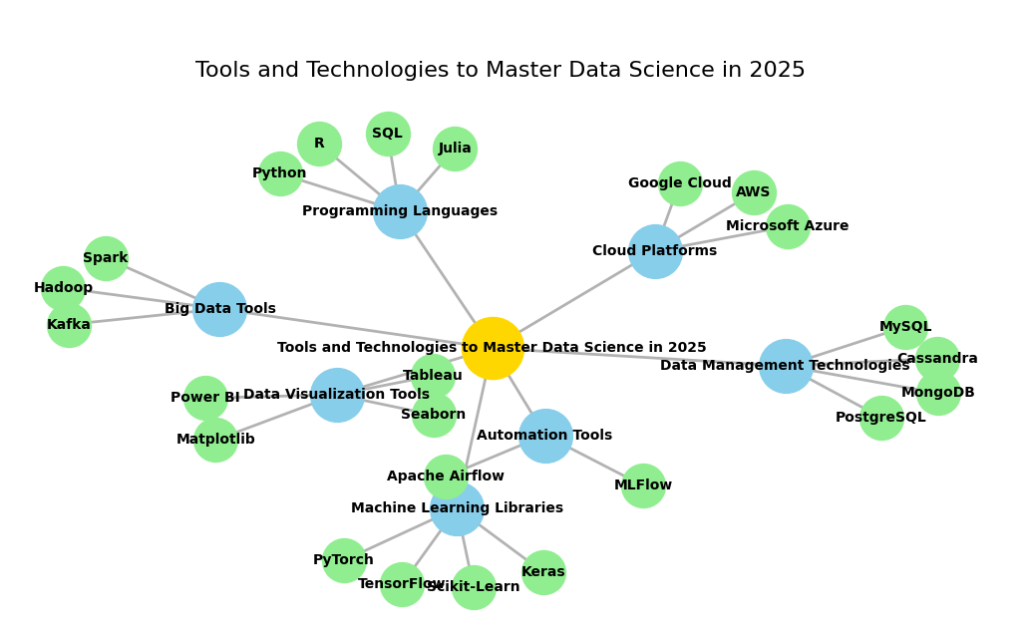

Tools and Technologies to Master Data Science in 2025

AutoML Platforms

AutoML (Automated Machine Learning) is changing how machine learning models are built, deployed, and maintained. In the past, building machine learning models required a deep understanding of algorithms, data preprocessing, and model optimization. Today, AutoML platforms automate many of these steps, enabling both novice and experienced data scientists to create powerful models with minimal effort.

How AutoML is Simplifying Machine Learning Workflows

The main advantage of AutoML is its ability to simplify the entire machine learning lifecycle. Instead of spending countless hours on feature engineering, hyperparameter tuning, or model selection, data scientists can rely on AutoML platforms to automate these processes. This frees up time to focus on more complex tasks, such as understanding the business problem or interpreting model results.

Key Benefits of AutoML

- Faster Model Development: AutoML tools can quickly generate models, significantly reducing the time spent on the initial setup.

- Improved Accuracy: By automating the hyperparameter tuning process, AutoML can help fine-tune models to achieve better performance.

- Accessibility: Even those without extensive machine learning experience can use AutoML platforms to create models, lowering the barrier to entry.

For instance, an AutoML tool can automatically select the best algorithm, preprocess data, and tune parameters without requiring you to manually adjust every step.
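To make "select the best algorithm and tune parameters" concrete, the sketch below performs a tiny version of that search with scikit-learn's `GridSearchCV`, treating the choice of estimator itself as a hyperparameter. This is not how any particular AutoML platform works internally; real platforms also automate feature engineering, ensembling, and deployment. The synthetic data from `make_classification` stands in for a real tabular dataset such as customer churn records.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for a tabular dataset such as churn records.
X, y = make_classification(n_samples=500, n_features=10, n_informative=5,
                           random_state=0)

# The "model" step is a placeholder that the search replaces.
pipe = Pipeline([("scale", StandardScaler()), ("model", LogisticRegression())])

# Search over both the algorithm and its hyperparameters.
search_space = [
    {"model": [LogisticRegression(max_iter=1000)], "model__C": [0.1, 1.0, 10.0]},
    {"model": [RandomForestClassifier(random_state=0)],
     "model__n_estimators": [50, 200], "model__max_depth": [None, 5]},
]

search = GridSearchCV(pipe, search_space, cv=5, scoring="roc_auc")
search.fit(X, y)

print("Best model:", search.best_params_["model"])
print("Best CV ROC AUC:", round(search.best_score_, 3))
```

Even this toy search fits 35 models (7 configurations times 5 folds), which shows why AutoML platforms rely on smarter search strategies and scalable compute.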

Example: AutoML for Predictive Analytics

Suppose you’re asked to build a model that predicts whether customers will leave a telecommunications company. Instead of doing everything yourself, you use AutoML. You upload the raw customer data, and the AutoML platform takes care of cleaning the data, selecting features, and choosing a model. Often within minutes, you have a churn-prediction model ready to evaluate, without adjusting each step manually.

Top AutoML Tools for Data Scientists in 2025

- Google Cloud AutoML: This platform offers a range of tools for building machine learning models with minimal code. It provides tools for vision, language, and structured data tasks, making it a versatile option for many types of projects.

- H2O.ai: Known for its open-source machine learning tools, H2O.ai also offers AutoML capabilities that automate the machine learning pipeline while providing detailed insights into model performance.

- DataRobot: A popular AutoML platform, DataRobot simplifies the end-to-end process of building machine learning models and deploying them into production.

- Microsoft Azure AutoML: Part of the Azure ecosystem, this tool allows data scientists to automate the entire machine learning process, from data preprocessing to model deployment.

- TPOT: An open-source AutoML library in Python, TPOT uses genetic algorithms to automate the process of selecting and tuning machine learning models.

With AutoML, you can focus on higher-level tasks like data analysis and interpretation, while the platform handles the technical details.

Real-Time Analytics Tools

As businesses generate more data than ever before, the need to process that data in real time has become important. Whether it’s monitoring customer interactions, tracking supply chain logistics, or analyzing sensor data from IoT devices, real-time analytics is critical for modern industries.

The Importance of Real-Time Data Processing in Modern Industries

Real-time data processing enables businesses to make faster, more informed decisions. In fields like finance, healthcare, and e-commerce, real-time insights can lead to competitive advantages, enabling companies to react to trends, detect anomalies, and make predictions quickly.

Benefits of Real-Time Analytics

- Immediate Decision Making: Real-time insights allow companies to make decisions instantly, which is crucial in fast-paced environments like stock trading or emergency response.

- Enhanced Customer Experience: By analyzing customer behavior as it happens, companies can offer personalized recommendations and solutions instantly, improving the customer experience.

- Operational Efficiency: Real-time analytics can optimize operations by monitoring processes as they run, detecting bottlenecks, and automating certain actions.

For example, in a retail environment, real-time analytics can help track inventory levels, analyze sales trends, and adjust pricing as customer demand changes.

Example: Real-Time Fraud Detection in Finance

Banks use real-time data processing tools to monitor transactions for signs of fraud. As soon as a suspicious transaction is detected, the system can alert the bank and even prevent the transaction from being completed. This kind of immediate response is crucial to protecting both the bank and its customers.
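To illustrate the "detect it as soon as it arrives" idea, here is a minimal sketch of a per-transaction anomaly check. It assumes a simple z-score rule on transaction amounts, a stand-in for the trained fraud models banks actually use, and keeps a running mean and variance with Welford's online algorithm so no transaction history has to be stored. The class name, threshold, and warm-up length are hypothetical.

```python
import math
import random

class StreamingAnomalyDetector:
    """Flag amounts that deviate strongly from the running mean (z-score rule).

    Uses Welford's online algorithm, so each update is O(1) and no history
    is stored. Threshold and warm-up length are illustrative choices.
    """

    def __init__(self, threshold=4.0, warmup=30):
        self.threshold = threshold
        self.warmup = max(warmup, 2)  # need at least 2 points for a sample variance
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, amount):
        """Score one transaction, then fold it into the statistics if it looks normal."""
        flagged = False
        if self.count >= self.warmup:
            std = math.sqrt(self.m2 / (self.count - 1))
            if std > 0 and abs(amount - self.mean) / std > self.threshold:
                flagged = True
        if not flagged:
            self.count += 1
            delta = amount - self.mean
            self.mean += delta / self.count
            self.m2 += delta * (amount - self.mean)
        return flagged

# Simulated stream: 200 ordinary purchases followed by one extreme amount.
random.seed(0)
detector = StreamingAnomalyDetector()
stream = [random.gauss(50, 10) for _ in range(200)] + [5_000.0]
alerts = [i for i, amount in enumerate(stream) if detector.update(amount)]
print("Flagged transaction indices:", alerts)
```

A deliberate design choice here is that flagged transactions are not added to the running statistics, so a burst of fraudulent activity does not quietly shift the baseline toward "normal".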

Mastering Tools Like Kafka, Flink, and AWS Kinesis

To implement real-time analytics effectively, it’s important to master the right tools. Here are three of the top tools data scientists should be familiar with in 2025:

- Apache Kafka: Kafka is an open-source distributed event streaming platform for building real-time data pipelines and streaming applications. It’s widely used to move and process high-throughput data in real time, particularly in large-scale systems.

  - Key Features: Distributed, fault-tolerant, and scalable.

  - Use Cases: Event-driven architectures, real-time data processing in IoT systems, and log aggregation.

- Apache Flink: Flink is another open-source tool for real-time data processing. It’s designed for stream processing, meaning it can handle large volumes of real-time data with low latency.

  - Key Features: High throughput, low latency, and support for batch processing.

  - Use Cases: Real-time analytics for monitoring social media, web traffic, and other streams of unstructured data.

- AWS Kinesis: A managed service provided by Amazon Web Services, Kinesis enables real-time processing of streaming data. It offers tools to collect, process, and analyze data streams.

  - Key Features: Scalable, integrates with AWS services, and supports video, audio, and data analytics.

  - Use Cases: Real-time analytics for sensor data, media streaming, and application logs.
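A core operation these engines run continuously is windowed aggregation over an event stream. The plain-Python sketch below shows the idea behind a tumbling window (fixed, non-overlapping time intervals, the term Flink itself uses) on a small in-memory list. It does not use the Kafka, Flink, or Kinesis APIs, the events are hypothetical, and real engines add what this omits: late-arriving events, distributed state, and fault tolerance.

```python
from collections import defaultdict

def tumbling_window_counts(events, window_seconds=60):
    """Group (timestamp, key) events into fixed, non-overlapping time windows.

    Returns {window_start: {key: count}}. Timestamps are assumed to be in seconds.
    """
    windows = defaultdict(lambda: defaultdict(int))
    for ts, key in events:
        # Every timestamp maps to exactly one window start.
        window_start = int(ts // window_seconds) * window_seconds
        windows[window_start][key] += 1
    return {start: dict(counts) for start, counts in sorted(windows.items())}

# Hypothetical click-stream events: (epoch seconds, event type).
events = [(0, "click"), (12, "view"), (59, "click"), (61, "click"), (125, "view")]
print(tumbling_window_counts(events))
# → {0: {'click': 2, 'view': 1}, 60: {'click': 1}, 120: {'view': 1}}
```

A streaming engine emits each window's result as soon as the window closes instead of waiting for the whole dataset, which is what makes dashboards and alerts update in near real time.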

Cloud Computing and Data Storage

As businesses increasingly rely on cloud computing for scalability and efficiency, understanding how to use the cloud for data storage and processing is critical for data scientists. Cloud platforms have drastically changed the way companies store, process, and analyze data, enabling greater flexibility and cost-efficiency.

The Growing Role of Cloud Platforms in Data Science

Cloud computing has become an important part of modern data science workflows. It allows for on-demand access to virtually unlimited computing resources, which is particularly important for big data processing, machine learning model training, and collaboration across teams.

Key Benefits of Cloud Computing in Data Science

- Scalability: Cloud platforms allow you to scale your data storage and processing capabilities as your needs grow, without having to invest in expensive physical hardware.

- Cost Efficiency: Pay-as-you-go pricing models mean that companies only pay for the resources they use, making it an affordable option for both small startups and large enterprises.

- Collaboration: Cloud platforms provide collaboration features, allowing teams to work together on projects regardless of their physical location.

Example: Cloud Storage for Big Data

Suppose you’re working with large healthcare datasets, such as electronic medical records or imaging data. With cloud storage services like Amazon S3 or Google Cloud Storage, you can store and access this data securely and at scale, without worrying about managing physical servers.

Key Skills for Using AWS, Google Cloud, and Azure Effectively

To make the most of cloud platforms, it’s important to understand how to work with the leading cloud providers: AWS, Google Cloud, and Microsoft Azure. Each offers unique tools and services for data storage, processing, and machine learning.

- AWS: Known for its comprehensive suite of tools, AWS offers services like S3 for storage, Lambda for serverless computing, and SageMaker for machine learning. Learning how to navigate these tools will be invaluable for data scientists.

- Google Cloud: Google Cloud offers tools like BigQuery for fast data analysis, Vertex AI for machine learning, and Google Cloud Storage for scalable data storage. Its close integration with open-source tools such as TensorFlow makes it popular for machine learning projects.

- Azure: Microsoft Azure provides a suite of cloud services tailored to data science. With tools like Azure Machine Learning and Azure Databricks, it’s a strong contender for businesses looking for integrated machine learning and data analytics solutions.

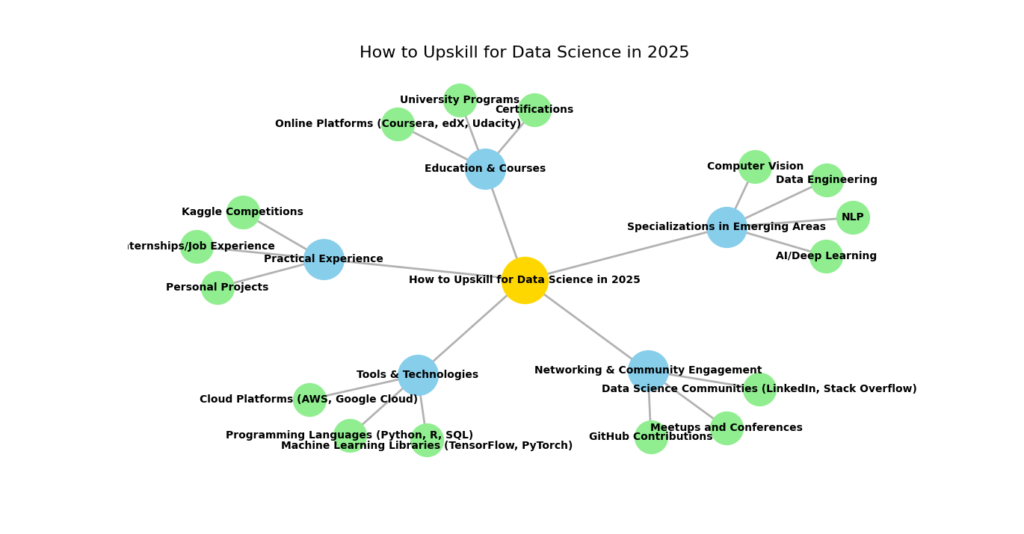

How to Upskill for Data Science in 2025

Top Certifications to Validate Your Skills

Certifications are an excellent way to validate your data science skills and demonstrate your expertise to potential employers. They can help you stand out in a competitive job market and show your commitment to staying updated with the latest industry trends. Below are some of the best data science certifications to pursue in 2025.

Best Data Science Certifications to Pursue in 2025

1. Certified Data Scientist (CDS) – Data Science Council of America (DASCA)

The Certified Data Scientist certification is one of the most recognized certifications for data scientists. It focuses on the core skills necessary for a data science role, including data analysis, machine learning, big data, and cloud computing. The certification helps you stand out to employers looking for a comprehensive understanding of data science.

Key Skills Covered: Machine learning, data analysis, statistics, big data, data visualization

Why it’s valuable: Provides a comprehensive curriculum covering key data science skills, recognized globally by employers.

2. Microsoft Certified: Azure Data Scientist Associate

This certification focuses on using Microsoft Azure for data science tasks, including data preparation, model training, and deployment. With the increasing demand for cloud computing skills, this certification can give you an edge in the job market.

Key Skills Covered: Data preparation, machine learning, model deployment using Azure

Why it’s valuable: Perfect for those working with or aiming to work with Microsoft’s cloud computing platform.

3. Google Cloud Professional Data Engineer

Google Cloud’s certification for data engineers focuses on data design, machine learning models, and data processing. If you’re working in cloud computing or looking to specialize in this area, Google Cloud is a great platform to master.

Key Skills Covered: Data architecture, machine learning on Google Cloud, big data tools

Why it’s valuable: Google’s platform is widely used, and this certification can help validate your cloud data expertise.

4. Data Science Professional Certificate – IBM (Coursera)

IBM offers a comprehensive data science certification that spans a wide range of topics, from basic data analysis and statistics to more advanced techniques like machine learning and AI. This certification is highly recommended for anyone looking to start or advance their data science career.

Key Skills Covered: Data visualization, machine learning, Python programming, data analysis

Why it’s valuable: This is an ideal starting point for newcomers to data science, with real-world projects and hands-on experience.

5. Certified Analytics Professional (CAP)

The Certified Analytics Professional certification is designed for professionals in analytics who want to take their skills to the next level. It’s perfect for those who already have a strong foundation in data analysis and want to focus on the business application of data science.

Key Skills Covered: Problem framing, analytics methodology, deployment, and data management

Why it’s valuable: It’s ideal for those looking to integrate data science with business strategies and analytics.

How Certifications Can Boost Your Data Science Career Prospects

Obtaining certifications in data science can significantly enhance your career prospects. Here’s how:

- Validation of Skills: Certifications show employers that you possess the required knowledge and skills in specific areas of data science. For example, machine learning, data visualization, and cloud computing are critical areas in modern data science roles.

- Increased Job Opportunities: With certifications from respected organizations, you’re more likely to be considered for data science roles. Many companies prefer candidates who have validated their skills through reputable certification programs.

- Confidence in Your Abilities: Earning a certification can boost your confidence in your data science capabilities. It also provides a structured learning path, so you know exactly what to focus on.

- Access to Networking and Career Resources: Some certifications provide access to exclusive networking groups, events, and job boards, which can help you find new opportunities or get advice from other professionals in the industry.

Online Resources for Learning Data Science Skills

The internet offers a wealth of resources for aspiring data scientists. From structured online courses to free resources, there are countless opportunities to learn and improve your data science skills. Below, we highlight some of the top online platforms and free resources for learning data science in 2025.

Popular Platforms Like Coursera, edX, and DataCamp

- Coursera

Coursera is one of the most popular platforms for online learning, offering courses from top universities and institutions worldwide. Whether you want to learn the fundamentals of data science or dive into specialized topics like deep learning or natural language processing, Coursera has something for everyone.

Recommended Courses:

  - IBM Data Science Professional Certificate: A series of courses that cover all aspects of data science, from Python programming to machine learning.

  - University of California Data Science Certificate: Focuses on the core skills needed for a data science role, such as data wrangling and visualization.

- edX

edX offers high-quality courses from prestigious universities like Harvard, MIT, and Stanford. These courses provide in-depth learning experiences on a variety of data science topics, and many come with a certification upon completion.

Recommended Courses:

  - Data Science MicroMasters Program: A series of courses offered by UC San Diego that cover advanced topics such as machine learning, data visualization, and statistical analysis.

  - HarvardX Data Science Professional Certificate: An excellent starting point for those new to data science, covering everything from basic programming in R to machine learning.

- DataCamp

DataCamp focuses specifically on data science and analytics. It’s a fantastic resource for those looking to learn programming languages like Python, R, or SQL, as well as skills in data manipulation, machine learning, and data visualization.

Recommended Courses:

  - Introduction to Python: A beginner-friendly course to learn Python programming for data science.

  - Machine Learning with Tree-based Models: A more advanced course focusing on machine learning algorithms.

Free Resources to Learn Data Science in 2025

For those who are looking to get started without any financial investment, many free resources are available to help you build your data science skills.

- Kaggle: Kaggle is a hub for data science and machine learning. The platform provides free datasets, competitions, and tutorials that allow you to practice your data science skills.

- Google’s Machine Learning Crash Course: Google offers a free, high-quality crash course on machine learning. It’s a great starting point for beginners and covers the basics of machine learning, deep learning, and building models.

- Fast.ai: Fast.ai offers free courses designed to teach deep learning and machine learning through hands-on examples. These courses are aimed at practitioners who want to dive deeper into AI and machine learning.

- YouTube: YouTube is an excellent platform for finding tutorials on every aspect of data science. Channels like Data School, StatQuest, and Corey Schafer provide clear, easy-to-follow tutorials on Python, R, and machine learning.

Conclusion

Upskilling for data science in 2025 is more accessible than ever before. By pursuing the right certifications and using top online learning platforms, you can enhance your knowledge and position yourself as a sought-after professional in this growing field. Whether you’re learning from scratch or looking to refine your skills, the resources outlined here will provide you with the tools you need to succeed. As data science skills continue to be in high demand, now is the perfect time to invest in your future career.

FAQs About Data Science Skills for 2025

What are the top in-demand data science skills for 2025?

In 2025, the top data science skills include machine learning, deep learning, AI programming (Python, R), data visualization (Tableau, Power BI), cloud computing (AWS, Google Cloud), and real-time analytics tools like Kafka and Flink. Familiarity with MLOps, AI ethics, and quantum computing will also be highly valued.

How can I start learning data science as a beginner in 2025?

Start by learning the basics of Python or R and understanding key concepts in statistics and data analysis. Platforms like Kaggle, Coursera, and edX offer beginner-friendly courses, many of which are free or can be audited for free. Focus on practical projects and build a strong foundation in data visualization and machine learning through hands-on practice.

Are certifications necessary for a career in data science?

Certifications can validate your data science skills and boost your credibility, but they are not strictly necessary. Practical experience, such as working on projects or participating in competitions like those on Kaggle, is just as important. However, certifications from reputable platforms like Coursera, edX, and Google can help you stand out to employers.
