What is Data Wrangling in Data Science? An Ultimate Guide

Introduction to Data Wrangling

Have you ever worked with messy data and not known where to start? If so, you’re not alone. Data wrangling is the work of cleaning and organizing raw data so it’s ready to use. It’s a big part of what data scientists do, and it’s an important skill if you want to get useful insights from data.

But let’s face it—working with messy data can feel overwhelming. It might have missing values, confusing formats, or just too much information. The good news is, with the right steps, you can turn that chaos into something simple and organized. And once you do, analyzing data becomes much easier.

In this guide, we’ll walk you through everything you need to know about data wrangling. We’ll cover fixing missing data, combining datasets, and other tricks to make your data clean and ready to use. By the end, you’ll have the skills to handle messy data with confidence.

Ready to make sense of messy data? Let’s get started!

What is Data Wrangling?

Data wrangling, in simple terms, is the process of transforming messy or raw data into a clean, organized format that can be used for analysis. It involves tasks like fixing errors, filling in missing values, removing duplicates, and converting data into a consistent structure.

Here’s an example to make it clear:

If you receive a spreadsheet with inconsistent dates, missing information, or incorrect labels, data wrangling is the step where you clean it up so it’s accurate and ready to work with.

Key steps in data wrangling include:

- Removing errors: Fixing typos or incorrect data entries.

- Filling gaps: Addressing missing values.

- Reformatting data: Standardizing formats (e.g., dates, currencies).

- Filtering: Keeping only the relevant parts of the data.

In short, data wrangling helps you turn chaotic data into a valuable resource for insights!
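To make the four steps concrete, here is a minimal pandas sketch on a small made-up spreadsheet. The column names (`Region`, `Sales`, `Date`), the typo being corrected, and the choice of median imputation are illustrative assumptions, not a fixed recipe.

```python
import pandas as pd

# Hypothetical messy spreadsheet: a typo in a label, a missing value,
# and dates stored as text
raw = pd.DataFrame({
    "Region": ["North", "Nroth", "South", "North"],
    "Sales": [120.0, 80.0, None, 95.0],
    "Date": ["2024-01-05", "2024-01-06", "2024-01-07", "2024-01-08"],
})

df = raw.copy()
# Removing errors: correct a known typo in the Region labels
df["Region"] = df["Region"].replace({"Nroth": "North"})
# Filling gaps: replace the missing Sales value with the column median
df["Sales"] = df["Sales"].fillna(df["Sales"].median())
# Reformatting: parse the text dates into real datetime values
df["Date"] = pd.to_datetime(df["Date"], format="%Y-%m-%d")
# Filtering: keep only the rows relevant to this analysis
north = df[df["Region"] == "North"]

print(north)
```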

Importance of Data Wrangling: Why It’s a Crucial Step Before Data Analysis

When working with data, you often start with something messy—full of missing values, duplicate records, and inconsistencies. Data wrangling in data science is the process of fixing these issues to make your data clean, organized, and ready for analysis. Without this crucial step, your analysis might lead to incorrect conclusions or unreliable insights. Let’s explore why data wrangling is so essential and how it shapes the success of your data projects.

Why Is Data Wrangling Important?

1. Ensures Data Accuracy

Raw data often contains errors, like typos, missing values, or mismatched formats. If you skip cleaning this data, your results will reflect these mistakes. For example:

- A sales dataset may have missing entries for some regions.

- A customer data file might use both “USA” and “United States” for the same country.

By handling these errors, data wrangling in data science ensures that your analysis is based on accurate and consistent information.
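A minimal sketch of the “USA” vs “United States” fix, assuming a hypothetical `Country` column and a hand-written mapping of known variants. In practice you would build the mapping by inspecting the actual values, for example with `value_counts()`.

```python
import pandas as pd

customers = pd.DataFrame({"Country": ["USA", "United States", "U.S.A.", "Canada", " usa "]})

# Hypothetical mapping of known spellings (lowercase) to one canonical label
country_map = {
    "usa": "United States",
    "u.s.a.": "United States",
    "united states": "United States",
}

# Normalize whitespace and case first so the mapping only needs lowercase keys
normalized = customers["Country"].str.strip().str.lower()
# Map known variants; values not in the mapping keep their (stripped) original text
customers["Country"] = normalized.map(country_map).fillna(customers["Country"].str.strip())

print(customers["Country"].value_counts())
```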

2. Prepares Data for Analysis

Messy data cannot be directly used for analysis or machine learning. Wrangling prepares your data by:

- Removing duplicates.

- Converting dates or text to a usable format.

- Filling or removing missing values.

For instance, in Python, you can fill missing values in a dataset with the following code:

```python
import pandas as pd

# Sample data
data = {'Name': ['Alice', 'Bob', None], 'Age': [25, None, 30]}
df = pd.DataFrame(data)

# Filling missing values: a placeholder for Name, the column mean for Age
df.fillna({'Name': 'Unknown', 'Age': df['Age'].mean()}, inplace=True)

print(df)
```

This ensures your dataset is ready for further analysis.

3. Saves Time Later

While data wrangling in data science can feel time-consuming upfront, it actually saves you time later. Clean data reduces the chances of errors during analysis or modeling. Plus, if your data is well-organized, it’s easier to reuse for future projects.

4. Improves Decision-Making

Bad data leads to bad decisions. For example:

- A company analyzing sales data with missing information might misjudge their most profitable regions.

- A healthcare study with inconsistent patient records could produce unreliable conclusions.

By prioritizing data wrangling, you ensure that insights drawn from your data are reliable, leading to better decisions.

How Does Data Wrangling Shape Data Science Projects?

1. Structured Workflow

Wrangling provides a solid foundation for any data science project. The workflow looks like this:

| Step | Action | Example |
|---|---|---|
| Data Collection | Gather data from multiple sources. | Sales records, social media, surveys. |
| Data Wrangling | Clean and prepare the data. | Handle missing or duplicate data. |
| Data Analysis/Modeling | Extract insights or build models. | Predict customer behavior. |
| Decision-Making | Use insights to drive action. | Improve marketing strategies. |

Understanding the Data Wrangling Process

What Does Data Wrangling Involve?

Data wrangling in data science is all about preparing raw, unorganized data to make it clean and usable for analysis. This process involves multiple steps, each playing a critical role in transforming messy datasets into valuable resources for insights. Here’s a detailed overview of the key activities involved in data wrangling.

Key Activities in Data Wrangling

1. Identifying and Understanding the Data

Before you start cleaning, it’s important to understand the data you’re working with. This involves:

- Exploring the dataset to identify errors, missing values, or inconsistencies.

- Checking for duplicate records or irrelevant information.

- Understanding the data structure and relationships between variables.

For example, let’s say you’re working on sales data. You might notice missing sales figures for certain months or inconsistent formats for dates (e.g., “01-01-2024” and “Jan 1, 2024”).

Code Example:

```python
import pandas as pd

# Load dataset
data = pd.read_csv('sales_data.csv')

# Column names, dtypes, and non-null counts (info() prints directly and returns None)
data.info()

# Number of missing values in each column
print(data.isnull().sum())
```

2. Handling Missing Data

Missing data is one of the most common problems in datasets. You can handle it in different ways, depending on the context:

- Fill missing values: Use the mean, median, or a constant value to fill gaps.

- Remove rows/columns: Eliminate entries if the missing data is excessive.

- Predict missing values: Use algorithms to estimate the missing information.

Example: In a customer database, if the “Age” column has missing values, you could fill them with the average age.

Code Example:

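```python
# Fill missing ages with the average; assigning the result back avoids
# calling fillna(inplace=True) on a column, which newer pandas versions warn about
data['Age'] = data['Age'].fillna(data['Age'].mean())
```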
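The other strategies from the list above can be sketched the same way. This example uses made-up columns and an assumed rule of dropping any column that is more than half empty; the right threshold depends on your data.

```python
import pandas as pd

data = pd.DataFrame({
    "Age": [25, None, 40, 35],
    "Income": [50000, 62000, None, 58000],
    "Fax": [None, None, None, "555-0100"],
})

# Median imputation: less sensitive to extreme values than the mean
data["Age"] = data["Age"].fillna(data["Age"].median())

# Drop columns where more than half of the values are missing (assumed threshold)
missing_share = data.isna().mean()
data = data.loc[:, missing_share <= 0.5]

# Drop any remaining rows that still contain missing values
data = data.dropna()
print(data)
```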

3. Removing Duplicates

Duplicate records can lead to biased analysis. Identifying and removing them is essential for maintaining data quality.

Code Example:

```python
# Remove rows that are identical in every column
data.drop_duplicates(inplace=True)
```
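`drop_duplicates()` only removes rows that match in every column. When the same entity appears with slightly different values (here, a hypothetical `LastUpdated` field), a common approach is to deduplicate on a key column and decide which copy to keep. A sketch, assuming the newest record is the one you want:

```python
import pandas as pd

customers = pd.DataFrame({
    "CustomerID": [101, 102, 101, 103],
    "Email": ["a@x.com", "b@x.com", "a@x.com", "c@x.com"],
    "LastUpdated": ["2024-01-01", "2024-01-03", "2024-02-01", "2024-01-02"],
})

# The two 101 rows differ in LastUpdated, so a plain drop_duplicates() keeps both.
# Sort so the newest record comes last (ISO date strings sort correctly),
# then keep the last row for each CustomerID.
deduped = (
    customers.sort_values("LastUpdated")
    .drop_duplicates(subset="CustomerID", keep="last")
    .sort_values("CustomerID")
)
print(deduped)
```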

4. Transforming Data

Transformations ensure consistency and make the dataset suitable for analysis. This step includes:

- Standardizing formats: Ensuring dates, currencies, and other fields are uniform.

- Encoding categorical variables: Converting text labels into numerical values.

- Normalizing data: Adjusting values to a common scale for comparisons.

Example: Standardizing dates in Python:

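```python
# Convert a 'Date' column of 'YYYY-MM-DD' strings into datetime values.
# For mixed formats such as "01-01-2024" and "Jan 1, 2024", pandas 2.0+
# also accepts format='mixed', which infers the format of each value separately.
data['Date'] = pd.to_datetime(data['Date'], format='%Y-%m-%d')
```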
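For the encoding step, a minimal sketch with made-up `Subscribed` and `Plan` columns: a direct mapping works for yes/no labels, and `pd.get_dummies` produces one indicator column per category.

```python
import pandas as pd

data = pd.DataFrame({
    "Subscribed": ["Yes", "No", "Yes"],
    "Plan": ["Basic", "Pro", "Basic"],
})

# Binary labels can be mapped directly to 1/0
data["Subscribed"] = data["Subscribed"].map({"Yes": 1, "No": 0})

# Multi-valued categories become one 0/1 column per category (one-hot encoding)
encoded = pd.get_dummies(data, columns=["Plan"], dtype=int)
print(encoded)
```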

5. Filtering and Organizing Data

Not all data is useful for your analysis. Filtering helps in selecting only the relevant information. This might involve:

- Removing unnecessary columns.

- Selecting specific rows based on conditions.

Example: Keeping only customers from a specific region:

```python
# Filter data for customers in 'North' region
filtered_data = data[data['Region'] == 'North']
```

6. Merging and Joining Data

Often, data comes from multiple sources. You may need to combine datasets to get a complete picture. Common operations include:

- Merging datasets: Combining two datasets based on a shared key (e.g., Customer ID).

- Appending datasets: Stacking data from multiple files or sources.

Code Example:

```python
# Merge customer and sales data on 'CustomerID'
# (customers and sales are DataFrames that both contain a CustomerID column)
merged_data = pd.merge(customers, sales, on='CustomerID')
```
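Appending and merge checks can be sketched together. The tables below are hypothetical; `pd.concat` stacks rows that share the same columns, and `indicator=True` on `pd.merge` adds a `_merge` column showing which rows found a match.

```python
import pandas as pd

sales_q1 = pd.DataFrame({"CustomerID": [1, 2], "Amount": [100, 250]})
sales_q2 = pd.DataFrame({"CustomerID": [2, 4], "Amount": [80, 40]})
customers = pd.DataFrame({"CustomerID": [1, 2, 3], "Name": ["Ana", "Ben", "Cy"]})

# Appending: stack the quarterly files into one table with a fresh index
sales = pd.concat([sales_q1, sales_q2], ignore_index=True)

# Merging: a left join keeps every sale; indicator=True adds a _merge column
merged = pd.merge(sales, customers, on="CustomerID", how="left", indicator=True)

# Sales whose CustomerID has no match in the customer table
unmatched = merged[merged["_merge"] == "left_only"]
print(unmatched)
```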

7. Data Validation

Validation ensures that the cleaned and transformed data meets expectations. You can check for:

- Outliers that could skew results.

- Consistency across rows and columns.

For example, if “Age” has values greater than 120, it’s likely an error.

Code Example:

```python
# Check for implausible values in the 'Age' column
print(data[data['Age'] > 120])
```

Tabular Overview of Key Activities

| Activity | Description | Example Task |
|---|---|---|
| Identifying Data | Understanding errors, formats, and relationships. | Checking for missing values. |
| Handling Missing Data | Filling, removing, or predicting missing values. | Filling gaps in the “Age” column. |
| Removing Duplicates | Eliminating repeated entries to ensure accuracy. | Removing duplicate customer records. |
| Transforming Data | Standardizing and converting data for consistency. | Encoding “Yes/No” as 1/0. |
| Filtering Data | Keeping only relevant rows and columns. | Selecting data for a specific region. |
| Merging Data | Combining multiple datasets for complete analysis. | Joining sales and customer data. |
| Validating Data | Ensuring the cleaned data meets expectations. | Checking for outliers in numerical columns. |

Must Read

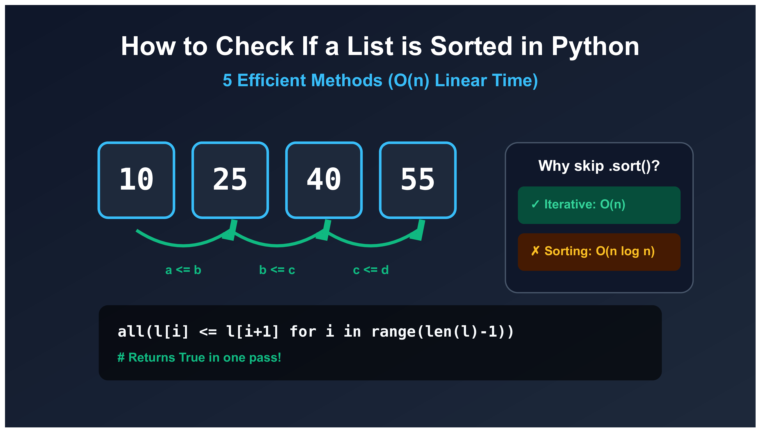

- How to Check If a List Is Sorted in Python (Without Using sort()) – 5 Efficient Methods

- How Python Searches Data: Linear Search, Binary Search, and Hash Lookup Explained

- I Implemented Every Sorting Algorithm in Python — The Results Nobody Talks About (Benchmarked on CPython)

- How to Reverse a String in Python: Performance, Memory, and the Tokenizer Trap

- How to Check Palindrome in Python: 5 Efficient Methods (2026 Guide)

Key Terms Related to Data Wrangling

When learning data wrangling in data science, it helps to understand the key terms used in this field. These terms often overlap but have distinct meanings depending on context. I’ll add some examples and tips to help solidify the concepts.

1. Data Wrangling

Data wrangling is the overarching process of cleaning, organizing, and transforming raw data into a usable format for analysis. It’s like preparing ingredients before cooking—they need to be washed, cut, and measured before making a dish.

Key Activities in Data Wrangling:

- Handling missing values.

- Identifying outliers.

- Converting data types (e.g., dates, categories).

- Combining datasets for a unified view.

Why It Matters:

Without proper wrangling, your analysis can lead to inaccurate or misleading conclusions. For instance, if dates in a sales dataset are not properly formatted, trends over time will be impossible to detect.

2. Data Munging

Data munging is often used interchangeably with data wrangling, but it specifically emphasizes the process of manually handling and reshaping data.

Characteristics of Data Munging:

- Requires hands-on manipulation.

- Focuses on irregular or messy datasets.

- Often involves quick, iterative adjustments.

Example:

You might receive survey data where responses are stored as “Yes,” “No,” and “Y.” Data munging would involve standardizing these responses into a consistent format like 1 for “Yes” and 0 for “No.”

Code Snippet:

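```python
import pandas as pd

# Sample survey responses with inconsistent labels
data = pd.DataFrame({'Response': ['Yes', 'No', 'Y', 'Yes']})

# Standardizing survey responses: "Yes"/"Y" become 1, "No" becomes 0
data['Response'] = data['Response'].replace({'Yes': 1, 'Y': 1, 'No': 0})
```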

3. Data Cleaning

Data cleaning is a key step in data wrangling in data science. It focuses on detecting and correcting errors, inaccuracies, and inconsistencies. Think of it as tidying up a cluttered room so you can easily find what you need.

Common Data Cleaning Tasks:

- Filling or removing missing values.

- Deleting duplicates.

- Fixing inconsistent formatting (e.g., “January 1, 2024” vs. “01/01/2024”).

- Removing irrelevant or noisy data.

Example:

In a sales dataset, some records might show negative quantities due to data entry errors. These need to be identified and corrected.
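A minimal sketch of finding and handling such entries, assuming a hypothetical `Quantity` column in which negative values are known to be invalid:

```python
import pandas as pd

sales = pd.DataFrame({"OrderID": [1, 2, 3, 4], "Quantity": [3, -2, 5, -1]})

# Flag impossible values so they can be reviewed
invalid = sales["Quantity"] < 0
print(sales[invalid])

# Safer default: mark invalid entries as missing so they can be imputed or checked later.
# If you know the sign is a typing error, sales["Quantity"].abs() is an alternative.
sales["QuantityClean"] = sales["Quantity"].mask(invalid)
```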

4. Data Transformation

Data transformation involves converting data from one format or structure to another. It’s like rearranging a bookshelf so all books are organized by genre or author, making them easier to access.

Types of Transformations:

- Scaling: Adjusting data to a common range, such as normalizing between 0 and 1.

- Encoding: Converting categorical data into numerical values.

- Aggregating: Summarizing data (e.g., calculating monthly sales from daily records).
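Aggregating daily records into monthly totals might look like this in pandas; the dates and sales figures are made up for illustration.

```python
import pandas as pd

daily = pd.DataFrame({
    "Date": pd.to_datetime(["2024-01-15", "2024-01-20", "2024-02-03", "2024-02-28"]),
    "Sales": [100, 150, 200, 50],
})

# Assign each date to its calendar month, then sum the sales per month
monthly = daily.groupby(daily["Date"].dt.to_period("M"))["Sales"].sum()
print(monthly)
```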

Example: Normalizing age data to improve machine learning model performance.

Code Snippet:

```python
from sklearn.preprocessing import MinMaxScaler

# Normalize the Age column to the 0-1 range
scaler = MinMaxScaler()
data['Age'] = scaler.fit_transform(data[['Age']])
```

5. Other Key Terms

| Term | Definition | Example |
|---|---|---|
| Data Imputation | Replacing missing values with estimates, such as the mean, median, or predicted values. | Filling missing ages with the column mean. |
| Data Integration | Combining data from multiple sources into a single dataset. | Merging customer and sales data by Customer ID. |
| Data Enrichment | Adding additional data to enhance the original dataset. | Adding weather data to sales data to analyze weather’s impact on sales trends. |
| Data Validation | Ensuring data meets accuracy and quality standards before analysis. | Checking for values outside the expected range, like ages over 120. |
| Feature Engineering | Creating new variables from existing data to improve machine learning models. | Extracting the “Year” from a “Date” column for time-based analysis. |

The Six Important Steps of Data Wrangling

1. Data Discovery: The First Step in Data Wrangling in Data Science

Data discovery is the initial phase of data wrangling in data science, where data is explored and assessed to understand its quality, structure, and content. This phase is crucial in identifying data quality issues, which can impact the accuracy and reliability of insights generated from the data. In this section, we will discuss the techniques, tools, and methods for effective data discovery.

What is Data Discovery?

Data discovery involves manually reviewing and exploring the data to gain insights into its quality, accuracy, and relevance. It’s like being a detective, searching for clues to understand the data’s strengths and weaknesses. During this phase, data scientists examine the data’s structure, format, and content to identify potential issues, such as missing values, inconsistencies, and errors.

Techniques for Effective Data Discovery

Several techniques can be employed for effective data discovery:

- Data Profiling: This involves creating summary statistics and visualizations to understand the data’s distribution, central tendency, and variability. Data profiling helps identify outliers, missing values, and data inconsistencies.

- Visualizing Data: Visualizing data using plots, charts, and heatmaps enables data scientists to quickly identify patterns, trends, and correlations.

- Data Querying: Writing SQL queries or using data manipulation libraries like pandas in Python lets data scientists extract specific subsets of the data and examine them closely.

- Data Sampling: Examining a random sample of data can provide insights into the data’s overall quality and characteristics.

Tools and Methods for Identifying Data Quality Issues

Several tools and methods can be used to identify data quality issues during data discovery:

- Data Quality Metrics: Metrics like data completeness, accuracy, and consistency can be calculated to evaluate data quality.

- Data Lineage Tools: Tools like Apache Atlas and AWS Glue provide data lineage capabilities, enabling data scientists to track data provenance and identify data quality issues.

- Data Profiling Tools: Tools like Pandas Profiling (since renamed ydata-profiling) and Data Quality provide automated data profiling capabilities.

- Data Visualization Tools: Tools like Tableau, Power BI, and D3.js enable data scientists to create interactive visualizations.
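Data quality metrics can be as simple as a few ratios. A sketch, assuming a hypothetical dataset and an assumed plausible age range of 0-120: completeness is the share of non-missing values, and the range check gives a rough validity score (true accuracy usually needs a trusted reference to compare against).

```python
import pandas as pd

data = pd.DataFrame({
    "Email": ["a@x.com", None, "c@x.com", "d@x.com"],
    "Age": [34, 29, None, -5],
})

# Completeness: share of non-missing values in each column
completeness = data.notna().mean()

# Validity: share of recorded ages inside the assumed plausible range
ages = data["Age"].dropna()
age_validity = ages.between(0, 120).mean()

print(completeness)
print(f"Age validity: {age_validity:.2f}")
```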

Example: Data Discovery Using Pandas

Let’s consider an example using Pandas in Python. Suppose we have a dataset containing customer information, including name, age, and address.

```python
import pandas as pd

# Load the data
data = pd.read_csv('customer_data.csv')

# Examine the data
print(data.head())      # First five rows
data.info()             # Column names, dtypes, and non-null counts (prints directly)
print(data.describe())  # Summary statistics for the numeric columns
```

Data Discovery Best Practices

To ensure effective data discovery, follow these best practices:

- Document Data Sources: Record data sources and collection methods.

- Track Data Provenance: Use data lineage tools to track data changes.

- Use Data Quality Metrics: Calculate metrics to evaluate data quality.

- Visualize Data: Use plots and charts to understand data distribution.

2. Data Structuring: Organizing Raw Data into a Usable Format

Data structuring is a critical step in data wrangling in data science. It involves organizing raw data into a usable format, making it easier to analyze and extract insights. In this section, we will discuss the importance of structuring data, common structuring techniques, and examples of how to apply these techniques.

Importance of Structuring Data

Raw data is often unorganized and difficult to analyze. Structuring data helps to:

- Improve Data Quality: Organized data is less prone to errors and inconsistencies.

- Enhance Data Analysis: Structured data enables faster and more accurate analysis.

- Support Data Visualization: Organized data is easier to visualize, making insights more accessible.

How Structuring Helps

Structuring data helps to transform raw data into a usable format. This involves:

- Data Transformation: Converting data from one format to another (e.g., CSV to Excel).

- Aggregating Data: Grouping data by categories or attributes.

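- Data Normalization: Organizing data to reduce redundancy (for example, storing each customer’s details once and referencing them by ID) so values stay consistent.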

Common Structuring Techniques

Several techniques can be employed to structure data:

- Tabular Formats: Organizing data into tables with rows and columns.

- Hierarchical Formats: Organizing data into hierarchical structures (e.g., trees).

- Relational Formats: Organizing data into relational databases.
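Hierarchical data often has to be flattened into a table before analysis. A sketch using `pd.json_normalize` on hypothetical nested order records (the field names are assumptions):

```python
import pandas as pd

# Hypothetical nested records, e.g. as returned by a web API
orders = [
    {"order_id": 1, "customer": {"name": "Ana", "city": "Lima"},
     "items": [{"sku": "A", "qty": 2}, {"sku": "B", "qty": 1}]},
    {"order_id": 2, "customer": {"name": "Ben", "city": "Oslo"},
     "items": [{"sku": "A", "qty": 5}]},
]

# One row per item; order- and customer-level fields are repeated on each row
flat = pd.json_normalize(
    orders,
    record_path="items",
    meta=["order_id", ["customer", "name"], ["customer", "city"]],
)
print(flat)
```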

Examples of Structuring Techniques

Converting to Tabular Formats

Suppose we have raw data in a text file:

```text
Name,Age,City
John,25,New York
Alice,30,San Francisco
Bob,35,Chicago
```

We can structure this data into a tabular format using Pandas in Python:

```python
import pandas as pd

# Load the data
data = pd.read_csv('raw_data.txt')

# Print the structured data
print(data)
```

Output:

```text
    Name  Age           City
0   John   25       New York
1  Alice   30  San Francisco
2    Bob   35        Chicago
```

Data Aggregation

Suppose we have data on customer purchases:

```text
Customer ID,Product,Quantity
1,A,10
1,B,20
2,C,30
3,A,40
```

We can structure this data by aggregating the quantity by product:

```python
import pandas as pd

# Load the data
data = pd.read_csv('purchases.txt')

# Aggregate the data: total quantity per product
aggregated_data = data.groupby('Product')['Quantity'].sum()

# Print the aggregated data
print(aggregated_data)
```

Output:

```text
Product
A    50
B    20
C    30
Name: Quantity, dtype: int64
```

Data Structuring Best Practices

To ensure effective data structuring, follow these best practices:

- Document Data Structures: Record data structures and formats.

- Use Standard Formats: Employ standard formats (e.g., CSV, JSON).

- Validate Data: Verify data accuracy and consistency.

3. Data Cleaning: Ensuring Accuracy in Data Analysis

In this section, we will discuss the importance of data cleaning, common cleaning techniques, and examples of how to apply these techniques.

Understanding Data Cleaning

Data cleaning entails reviewing and correcting data to ensure accuracy and reliability. This process is necessary because:

- Errors and Inconsistencies: Data entry errors, missing values, and inconsistencies can lead to inaccurate insights.

- Data Quality: Poor data quality can impact model performance and decision-making.

- Data Integrity: Ensuring data integrity maintains trust in the data and analysis.

Why Data Cleaning is Necessary

Data cleaning is necessary for accurate analysis because:

- Prevents Biased Insights: Incorrect data leads to biased insights and poor decision-making.

- Improves Model Performance: Clean data enhances model accuracy and reliability.

- Enhances Data Visualization: Clean data enables effective data visualization.

Common Cleaning Techniques

Several techniques can be employed to clean data:

- Handling Missing Values: Imputing, interpolating, or deleting missing values.

- Duplicate Detection: Identifying and removing duplicate records.

- Inconsistency Detection: Identifying and correcting inconsistent data.

Handling Missing Values

Missing values can be handled using:

- Mean/Median Imputation: Replacing missing values with the mean or median.

- Regression Imputation: Using regression models to predict missing values.

- Listwise Deletion: Deleting records with missing values.
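Regression imputation can be sketched with a straight-line fit. This example assumes a hypothetical `Salary` column that depends roughly linearly on `YearsExperience`, and uses `numpy.polyfit` for the fit; a library model such as scikit-learn’s `LinearRegression` would play the same role.

```python
import numpy as np
import pandas as pd

data = pd.DataFrame({
    "YearsExperience": [1, 3, 5, 7, 9],
    "Salary": [40000, 50000, np.nan, 70000, np.nan],
})

known = data[data["Salary"].notna()]
missing = data["Salary"].isna()

# Fit Salary = intercept + slope * YearsExperience on the complete rows only
slope, intercept = np.polyfit(known["YearsExperience"], known["Salary"], deg=1)

# Fill each missing salary with the value predicted by the fitted line
data.loc[missing, "Salary"] = intercept + slope * data.loc[missing, "YearsExperience"]
print(data)
```

Because every imputed value sits exactly on the fitted line, this method understates the natural spread of the data, which is worth keeping in mind before modeling.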

Example: Handling Missing Values using Pandas

Suppose we have data with missing values:

```python
import pandas as pd

# Load the data (in this file, Alice's Age is missing)
data = pd.read_csv('data.csv')

# Print the data
print(data)
```

Output:

```text
    Name   Age           City
0   John  25.0       New York
1  Alice   NaN  San Francisco
2    Bob  35.0        Chicago
```

We can impute missing values using mean imputation:

```python
# Impute the missing Age with the column mean (mean imputation only applies to numeric columns)
data['Age'] = data['Age'].fillna(data['Age'].mean())

# Print the cleaned data
print(data)
```

Output:

```text
    Name   Age           City
0   John  25.0       New York
1  Alice  30.0  San Francisco
2    Bob  35.0        Chicago
```

Duplicate Detection

Duplicates can be detected using:

- Unique Identifiers: Identifying unique records using IDs.

- Data Matching: Matching records based on multiple attributes.
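Data matching usually means normalizing fields before comparing them. A sketch, assuming hypothetical `Name` and `BirthDate` columns and that a match on both is enough to treat two records as duplicates:

```python
import pandas as pd

people = pd.DataFrame({
    "Name": ["John Smith", "john smith ", "Alice Wong", "John Smith"],
    "BirthDate": ["1990-04-01", "1990-04-01", "1985-07-12", "1979-02-20"],
})

# Normalize the name so case and stray spaces don't hide a match
key_name = people["Name"].str.strip().str.lower()

# A row is a duplicate only if name AND birth date match an earlier row
is_dup = pd.DataFrame({"name": key_name, "birth": people["BirthDate"]}).duplicated()
print(people[is_dup])
```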

Example: Duplicate Detection using Pandas

Suppose we have data with duplicates:

```python
import pandas as pd

# Load the data
data = pd.read_csv('data.csv')

# Print the data
print(data)
```

Output:

```text
    Name  Age           City
0   John   25       New York
1  Alice   30  San Francisco
2    Bob   35        Chicago
3   John   25       New York
```

We can detect duplicates using Pandas:

```python
# Flag rows that repeat an earlier row in every column
duplicates = data.duplicated()

# Print the duplicates
print(duplicates)
```

Output:

```text
0    False
1    False
2    False
3     True
dtype: bool
```

Data Cleaning Best Practices

To ensure effective data cleaning, follow these best practices:

- Document Cleaning Steps: Record cleaning steps and techniques.

- Use Data Quality Metrics: Track data quality metrics (e.g., accuracy, completeness).

- Validate Cleaned Data: Verify cleaned data accuracy.

4. Data Enrichment

Data enrichment is an important part of data wrangling in data science. It involves enhancing a dataset by adding new, relevant information from various sources. This step makes the dataset richer and more informative, enabling better insights and more accurate predictions. Let’s explore this concept in detail, understand how it’s done, and look at real-world examples to make the idea more tangible.

What is Data Enrichment?

In simple terms, data enrichment is the process of supplementing an existing dataset with additional data to fill gaps, provide more context, or improve accuracy. For example, adding customer demographic information (like age or income) to a sales dataset can help identify trends and target the right audience.

It’s like taking a skeleton and giving it muscles and skin so it becomes functional and meaningful.

Methods for Enriching Data

Data enrichment can be performed using various methods depending on the type of data and the goals of your analysis. Here are some common approaches:

1. Joining External Datasets

Merging your dataset with external data sources is one of the most effective ways to enrich your data. For instance:

- Adding weather data to sales data to understand how weather affects purchases.

- Integrating census data with a customer database to identify target demographics.

Code Example:

```python
import pandas as pd

# Sales data
sales_data = pd.DataFrame({
    'Date': ['2024-01-01', '2024-01-02'],
    'City': ['New York', 'Chicago'],
    'Sales': [200, 150]
})

# Weather data
weather_data = pd.DataFrame({
    'Date': ['2024-01-01', '2024-01-02'],
    'City': ['New York', 'Chicago'],
    'Temperature': [30, 20]
})

# Merging datasets on both Date and City
enriched_data = pd.merge(sales_data, weather_data, on=['Date', 'City'])

print(enriched_data)
```

Result:

| Date | City | Sales | Temperature |
|---|---|---|---|
| 2024-01-01 | New York | 200 | 30 |
| 2024-01-02 | Chicago | 150 | 20 |

2. Deriving New Features

Creating new variables from existing data is another enrichment method. For example:

- Extracting the day of the week from a date column.

- Calculating customer lifetime value based on purchase history.

Code Example:

```python
# Adding a day-of-week column derived from the Date strings
enriched_data['Day'] = pd.to_datetime(enriched_data['Date']).dt.day_name()

print(enriched_data)
```

Result:

| Date | City | Sales | Temperature | Day |
|---|---|---|---|---|
| 2024-01-01 | New York | 200 | 30 | Monday |
| 2024-01-02 | Chicago | 150 | 20 | Tuesday |
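Customer lifetime value can be modeled in many ways; a simple historical proxy is total spend per customer. A sketch on hypothetical purchase records (a full CLV model would also forecast future purchases):

```python
import pandas as pd

purchases = pd.DataFrame({
    "CustomerID": [1, 1, 2, 3, 3, 3],
    "Amount": [50.0, 70.0, 200.0, 20.0, 30.0, 25.0],
})

# Per-customer total spend, number of orders, and average order value
clv = purchases.groupby("CustomerID")["Amount"].agg(
    TotalSpend="sum", Orders="count", AvgOrder="mean"
)
print(clv)
```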

3. Using APIs for Real-Time Data

APIs (Application Programming Interfaces) are useful for fetching live data, such as stock prices, news, or social media trends, and adding them to your dataset.

Example Sources:

- Google Maps API for location-based data.

- OpenWeatherMap API for weather information.

- Twitter API for sentiment analysis.

Examples of Sources for Data Enrichment

To enhance your dataset effectively, it’s essential to know where to find complementary data. Below are some common sources:

| Source | Use Case |
|---|---|
| Public Databases | Government datasets for demographics, economic data, or healthcare statistics. |
| APIs | Real-time updates like weather, stock prices, or traffic conditions. |
| Third-Party Tools | Marketing tools like HubSpot or Salesforce to fetch customer-related data. |
| Internal Data | Data from within your organization, such as customer feedback or product inventory. |

Why Data Enrichment is Important

Adding additional information during data wrangling in data science improves the quality and depth of insights. Let me share a real-world example to explain:

When working on a customer segmentation project, I initially had only transaction data (date, amount, customer ID). It was challenging to identify purchasing behaviors. By enriching the dataset with demographic details and marketing engagement scores, I was able to create more targeted and effective marketing strategies.

5. Data Validation

Data validation is an important part of data wrangling in data science. It ensures the dataset’s quality, accuracy, and reliability before any analysis begins. Poor data quality can lead to misleading insights and flawed decision-making. Let’s break down why data validation is critical and how you can perform it effectively.

Why Validate Your Data?

Validating data is like double-checking the foundation before building a house. If the foundation (data) isn’t solid, the structure (your analysis or model) will collapse. Here’s why data validation is important:

- Accuracy Matters: Even small errors in the dataset can lead to incorrect conclusions. Validation helps ensure every data point is reliable.

- Consistency: Inconsistent data (like different formats or missing values) can cause issues during analysis. Validation ensures uniformity across the dataset.

- Compliance: Many industries, like healthcare or finance, require datasets to meet specific standards. Validation helps adhere to these requirements.

- Saving Time and Resources: Detecting and fixing issues early prevents wasted effort on faulty analyses.

Validation Techniques

There are several techniques to validate your data during data wrangling in data science. Each method ensures a different aspect of quality and accuracy.

1. Checking for Missing Values

Missing data can skew results. It’s essential to identify and handle these gaps properly.

Code Example (Finding Missing Values):

```python
import pandas as pd

# Sample dataset
data = pd.DataFrame({
    'Name': ['Alice', 'Bob', None],
    'Age': [25, None, 30],
    'Salary': [50000, 60000, 70000]
})

# Checking for missing values (True marks a missing cell)
print(data.isnull())

# Summary of missing values per column
print(data.isnull().sum())
```

Output:

| Column | Missing Values |
|---|---|
| Name | 1 |
| Age | 1 |
| Salary | 0 |

2. Validating Data Types

Each column in a dataset should have the correct data type. For example, an age column should contain numbers, not text.

Code Example (Checking Data Types):

```python
# Checking column data types
print(data.dtypes)
```

Output:

| Column | Data Type |
|---|---|
| Name | object |
| Age | float64 |
| Salary | int64 |

3. Range Validation

Checking whether values fall within an expected range is essential. For instance, a column for “Age” shouldn’t contain negative numbers.

Code Example (Validating Ranges):

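```python
# Validating age range: True when 0 <= Age <= 120
# (a missing Age also comes out False, which is why row 1 fails)
valid_age = data['Age'].between(0, 120)

print(valid_age)
```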

Output:

| Index | Valid Age |
|---|---|
| 0 | True |
| 1 | False |
| 2 | True |

4. Removing Duplicates

Duplicate records can inflate metrics or skew analysis. Identifying and removing duplicates ensures accuracy.

Code Example (Removing Duplicates):

```python
# Sample dataset with duplicates
data = pd.DataFrame({
    'Name': ['Alice', 'Bob', 'Alice'],
    'Age': [25, 30, 25],
    'Salary': [50000, 60000, 50000]
})

# Removing duplicates (the first occurrence is kept)
data = data.drop_duplicates()

print(data)
```

Output:

| Name | Age | Salary |
|---|---|---|
| Alice | 25 | 50000 |
| Bob | 30 | 60000 |

5. Cross-Field Validation

Some fields should align with others. For instance, if a “Joining Date” column exists, the “Exit Date” should not precede it.
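A sketch of this check, assuming hypothetical `JoiningDate` and `ExitDate` columns in which an empty exit date means the person is still employed:

```python
import pandas as pd

staff = pd.DataFrame({
    "Name": ["Ana", "Ben", "Cy"],
    "JoiningDate": pd.to_datetime(["2020-03-01", "2021-06-15", "2022-01-10"]),
    "ExitDate": pd.to_datetime(["2023-05-01", "2020-12-31", None]),
})

# An exit date earlier than the joining date is a logical error.
# Comparisons with a missing date (NaT) evaluate to False, so current staff are not flagged.
bad_rows = staff[staff["ExitDate"] < staff["JoiningDate"]]
print(bad_rows)
```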

Validation Checklist

Here’s a quick checklist to ensure your data meets quality standards:

| Validation Task | Why It Matters |
|---|---|
| Check for missing values | Ensures no gaps in critical information. |
| Validate data types | Prevents processing errors. |
| Remove duplicates | Avoids inflated metrics. |
| Verify value ranges | Maintains logical consistency. |
| Perform cross-field validation | Ensures relationships between fields are accurate. |

6. Data Publishing

Once you’ve successfully cleaned and wrangled your data, the final step is data publishing. This step prepares your dataset for analysis or sharing with others. Proper publishing ensures that your work is accessible, useful, and ready to be used in a variety of contexts. In this section, we’ll explore how to effectively prepare your data for publication and share best practices for making it usable for others.

Final Steps in Data Publishing

When you finish data wrangling in data science, you may feel like your work is done. However, before sharing your cleaned and structured dataset with others or using it for analysis, there are a few final steps you need to take to ensure that the data is presented properly. These steps are crucial for ensuring that the data can be used by others easily and accurately.

Here’s how you can prepare your dataset for publication:

1. Final Review of Your Dataset

Before publishing, double-check your data. This step involves reviewing the entire dataset to ensure that all cleaning and transformation steps have been properly applied.

- Check for missing values, duplicates, and outliers once again.

- Verify that all the columns are properly labeled, and the data types are correct.

- Confirm that no data is accidentally excluded or mishandled during cleaning.

2. Standardize the Format

To make sure your data is accessible, it’s important to standardize the format before publishing. This includes:

- Choosing the Right File Format: Choose the format that best suits the intended use of the data. Common formats include CSV, Excel, or JSON. If the data is to be used in databases, SQL files might be appropriate.

- Consistency in Units: Ensure that measurements (like currency, temperature, or distance) are consistent throughout your dataset.

Code Example (Saving Data to CSV):

import pandas as pd

# Example cleaned dataset
data = pd.DataFrame({
    'Product': ['Laptop', 'Phone', 'Tablet'],
    'Price': [1000, 500, 300],
    'Stock': [150, 200, 120]
})

# Saving cleaned data to CSV
data.to_csv('cleaned_data.csv', index=False)
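JSON is also listed above as a common format. This sketch writes the same table as both CSV and JSON, then reads each file back to confirm that nothing changed on the way out. The file names are illustrative, and the files go into a temporary directory.

```python
import os
import tempfile

import pandas as pd

data = pd.DataFrame({
    "Product": ["Laptop", "Phone", "Tablet"],
    "Price": [1000, 500, 300],
    "Stock": [150, 200, 120],
})

out_dir = tempfile.mkdtemp()  # illustrative output location
csv_path = os.path.join(out_dir, "cleaned_data.csv")
json_path = os.path.join(out_dir, "cleaned_data.json")

# CSV without the index column; JSON as a list of row objects
data.to_csv(csv_path, index=False)
data.to_json(json_path, orient="records", indent=2)

# Read both files back and compare them with the in-memory table
from_csv = pd.read_csv(csv_path)
from_json = pd.read_json(json_path, orient="records")
pd.testing.assert_frame_equal(from_csv, data)
pd.testing.assert_frame_equal(from_json, data)
print("Both files match the cleaned dataset")
```

A round-trip check like this catches common publishing slips, such as an index column written by accident.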

3. Metadata and Documentation

Good documentation is key when publishing data. This includes:

- Dataset Description: Provide a short description of the dataset, explaining what it represents, its source, and its intended use.

- Variables Explanation: Include an explanation of each column and its possible values.

- Data Collection Methods: Mention how the data was collected and any assumptions made during data wrangling.

- Known Issues: If there are any known issues with the data, such as missing entries or biases, it’s helpful to document those as well.
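One simple way to ship this documentation is a small metadata file published alongside the data. In the sketch below, the file name `cleaned_data.metadata.json` and all the description text are placeholders. The assertion checks that every column is documented.

```python
import json
import os
import tempfile

import pandas as pd

data = pd.DataFrame({
    "Product": ["Laptop", "Phone", "Tablet"],
    "Price": [1000, 500, 300],
    "Stock": [150, 200, 120],
})

# Illustrative metadata; replace each placeholder with real details
metadata = {
    "description": "Product prices and stock levels (example dataset)",
    "source": "Hypothetical inventory export",
    "collection_method": "Describe how the data was gathered and cleaned",
    "known_issues": ["List missing entries, biases, or caveats here"],
    "columns": {
        "Product": "Product name",
        "Price": "Unit price (state the currency)",
        "Stock": "Units in stock at export time",
    },
    "row_count": len(data),
}

# Every column in the data should be documented, and nothing extra
assert set(metadata["columns"]) == set(data.columns)

meta_path = os.path.join(tempfile.mkdtemp(), "cleaned_data.metadata.json")
with open(meta_path, "w", encoding="utf-8") as f:
    json.dump(metadata, f, indent=2)
```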

Best Practices for Effective Data Publishing

Once your data is cleaned, formatted, and documented, there are a few best practices you should follow to ensure your published data is accessible, usable, and easily understood by others.

1. Ensure Accessibility

- Use Open Data Formats: If possible, choose open formats like CSV or JSON, which are widely accepted and can be easily opened with a variety of tools.

- Cloud Platforms for Sharing: Upload your data to a cloud platform like Google Drive, AWS, or a public data repository. This allows for easy access and collaboration.

- Clear Naming Conventions: Ensure your dataset is easily identifiable by naming the files clearly and consistently. Use descriptive names for both the dataset and its columns.

2. Make the Data Usable

The goal of publishing data is to make it easy for others to use. This means:

- Data Cleaning Information: If possible, share the steps you took to clean and process the data. This way, others know how to handle the data correctly.

- Provide Sample Outputs: If relevant, include examples of the kind of analysis or results that can be achieved with the data. This shows potential users how they can interact with the dataset.

- Offer Contact Information: Include a way for others to reach out if they have questions or need clarification about the data.

3. Version Control

If your dataset undergoes future updates or changes, it’s helpful to track those changes with version control so users can refer to the correct version of the data at any given time. Git repositories hosted on platforms like GitHub work well for scripts and smaller data files. For large files, extensions such as Git LFS or tools such as DVC are commonly used.

Summary of Best Practices for Data Publishing

Here’s a quick checklist of best practices to follow when publishing data:

| Best Practice | Why It Matters |
|---|---|
| Choose open data formats | Ensures accessibility across platforms. |
| Upload to a public platform | Increases data sharing and collaboration. |
| Use clear naming conventions | Makes the dataset easy to find and identify. |
| Include detailed documentation | Helps others understand and use the data correctly. |
| Maintain version control | Tracks changes and ensures correct versions. |

Tools and Technologies for Data Wrangling

Popular Tools for Data Wrangling

When it comes to data wrangling in data science, the tools you use can make a big difference in how quickly and effectively you can clean, transform, and analyze your data. There are several popular tools out there that can help with different aspects of data wrangling, and each has its own strengths. In this section, we’ll explore some of the most commonly used tools for data wrangling, such as Python (Pandas), R, Trifacta, and Alteryx, and help you choose the right one for your needs.

Overview of Tools for Data Wrangling

1. Python (Pandas)

Python, with its powerful Pandas library, is one of the most widely used tools for data wrangling. It’s a go-to choice for many data scientists because of its flexibility, ease of use, and extensive community support. Pandas allows you to easily manipulate large datasets, clean missing values, and convert data types.

Why Use Pandas?

- DataFrame Structure: Pandas uses a DataFrame, which is a table-like structure that makes data wrangling tasks much easier.

- Efficient Handling of Missing Data: It provides built-in methods to deal with missing data, like dropna() or fillna().

- Data Transformation: You can reshape, merge, and aggregate data with just a few lines of code.

Example Code:

import pandas as pd

# Example dataset
data = {'Name': ['Alice', 'Bob', 'Charlie'],
        'Age': [25, None, 30],
        'City': ['New York', 'Los Angeles', 'Chicago']}
df = pd.DataFrame(data)

# Filling missing values
df['Age'] = df['Age'].fillna(df['Age'].mean())
print(df)

This snippet fills the missing value in the “Age” column with the average of the other values.
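To illustrate the claim that Pandas can reshape, merge, and aggregate data in a few lines, here is a short sketch. It uses hypothetical `sales` and `regions` tables and shows one call for each operation.

```python
import pandas as pd

# Hypothetical sales records and a lookup table of store regions
sales = pd.DataFrame({
    "store": ["S1", "S1", "S2", "S2"],
    "month": ["Jan", "Feb", "Jan", "Feb"],
    "revenue": [100, 120, 80, 90],
})
regions = pd.DataFrame({"store": ["S1", "S2"], "region": ["East", "West"]})

# Merge: attach each store's region to its sales rows
merged = sales.merge(regions, on="store", how="left")

# Aggregate: total revenue per region
totals = merged.groupby("region", as_index=False)["revenue"].sum()

# Reshape: one row per store, one column per month
wide = sales.pivot(index="store", columns="month", values="revenue")

print(totals)
print(wide)
```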

2. R

R is another popular tool, especially for statisticians and data scientists in academia. It’s well-known for its wide range of statistical packages, making it an excellent choice for more advanced data wrangling, especially when you need to combine it with statistical analysis.

Why Use R?

- Great for Statistical Analysis: If your wrangling task is part of a larger statistical analysis, R is ideal.

- Tidyverse: The tidyverse, a collection of R packages that includes dplyr, tidyr, and ggplot2, is designed specifically for data manipulation and visualization.

- Integration with Other Tools: R can easily interface with other data sources and software like SQL, Hadoop, and Excel.

Example Code:

# Install the tidyverse once (skip this line if it is already installed)
install.packages("tidyverse")

# Load the tidyverse packages
library(tidyverse)

# Create a dataframe
data <- data.frame(Name = c("Alice", "Bob", "Charlie"),
                   Age = c(25, NA, 30),
                   City = c("New York", "Los Angeles", "Chicago"))

# Fill missing ages with the mean of the known ages
data <- data %>%
  mutate(Age = replace_na(Age, mean(Age, na.rm = TRUE)))

print(data)

This example uses dplyr’s mutate() and tidyr’s replace_na(), both part of the tidyverse, to fill the missing age with the mean of the known ages.

3. Trifacta

Trifacta is a tool focused entirely on data wrangling and is designed to help both technical and non-technical users clean and prepare data. It uses a visual interface, making it easier for those who prefer not to write code.

Why Use Trifacta?

- User-Friendly Interface: Its visual interface allows you to drag-and-drop data transformations, making it easy for beginners.

- Automation: It can automatically detect and suggest the most common cleaning actions, saving time.

- Integration with Cloud Services: Trifacta integrates well with cloud platforms like Google Cloud and AWS, making it a good choice for cloud-based data wrangling.

4. Alteryx

Alteryx is another tool that’s popular for data wrangling in data science. It is known for its ability to handle large datasets and its strong set of data transformation tools. Like Trifacta, Alteryx uses a visual workflow but offers more advanced features for experienced data professionals.

Why Use Alteryx?

- Advanced Data Blending: Alteryx excels in data blending, allowing you to combine data from multiple sources effortlessly.

- Predictive Analytics: It includes predictive analytics tools, which can be a plus if you’re not only cleaning but also analyzing the data.

- Automation and Scaling: Alteryx allows for workflow automation and is designed to scale with large datasets.

Choosing the Right Tool for Your Needs

Now that we’ve gone over some popular tools for data wrangling, the next question is: Which tool should you choose? The choice largely depends on the specific requirements of your project, your team’s skillset, and how you intend to use the data. Here are a few factors to consider when selecting a tool for data wrangling in data science:

1. Complexity of the Task

- For Advanced Data Manipulation: If your project requires complex transformations, Python with Pandas or R (especially with Tidyverse) may be your best bet.

- For Simple, Visual Wrangling: If you prefer not to write code and need a user-friendly tool, both Trifacta and Alteryx offer intuitive drag-and-drop interfaces.

2. Team Skill Set

- Experienced Coders: If your team is experienced in coding and prefers full control, Pandas (Python) or R would be ideal.

- Non-Coders: For non-technical teams or when you want to speed up the process, Trifacta or Alteryx are great choices.

3. Project Size and Scope

- Large Datasets: If you’re working with large datasets, Alteryx and Trifacta offer cloud integration that can make scaling easier. If you prefer code, Python libraries such as Dask or PySpark can take over when data no longer fits in memory.

- Smaller, Local Projects: If the dataset is relatively small and doesn’t require heavy processing, Pandas or R will work just fine.

4. Budget and Licensing

- Free Options: Python (Pandas) and R are both open-source and free to use, which can be very appealing for individuals and small teams.

- Paid Tools: If you have the budget, Trifacta and Alteryx offer powerful features for enterprise-level data wrangling.

Summary of Popular Data Wrangling Tools

Here’s a comparison of the tools discussed:

| Tool | Strengths | Best For | Cost |
|---|---|---|---|
| Pandas (Python) | Flexible, extensive community support, fast | Complex wrangling, automation | Free |
| R (Tidyverse) | Excellent for statistics, strong packages | Advanced statistical analysis | Free |
| Trifacta | User-friendly, visual interface | Non-technical users, cloud data | Paid (with free trial) |
| Alteryx | Data blending, predictive analytics | Large datasets, enterprise work | Paid |

Choosing the right tool for data wrangling in data science depends on your project’s needs, your skill level, and the size of the data you’re working with. Whether you choose a coding-heavy tool like Python (Pandas) or a more visual solution like Trifacta or Alteryx, the goal remains the same: to clean, transform, and prepare data for analysis. Each tool has its strengths, and understanding those strengths will help you make an informed decision for your project.

Best Practices in Data Wrangling

When it comes to data wrangling in data science, applying the right techniques and following best practices is crucial. Ensuring that data is clean, structured, and ready for analysis requires careful planning and attention to detail. In this section, we’ll discuss two key best practices: understanding your audience and objectives, and documenting your work throughout the process. Both practices help make the data usable, reliable, and transparent.

Understanding Your Audience and Objectives

One of the most important aspects of data wrangling in data science is understanding who will use the data and why. Before you even begin cleaning or transforming the data, it’s essential to have a clear understanding of the end goals. Are you preparing the data for a machine learning model? Are you presenting the data to decision-makers in your organization? Or are you cleaning data for use in a public report? These objectives will determine how you handle the data at each step of the wrangling process.

Why is this important?

Knowing the audience and the intended purpose of the data helps you:

- Determine the level of detail: Depending on the end use, you might need to keep detailed raw data or only provide summary-level insights.

- Choose the right transformations: If the data is meant for machine learning, you may need to normalize or scale it. For reporting purposes, you might need to aggregate it and present it in a more digestible format.

- Ensure compatibility: Different audiences might require different file formats or data structures. For example, a data analyst might prefer working with CSV files, while a machine learning engineer may prefer data in a JSON or Parquet format.

Example: If you’re preparing data for a machine learning model, your primary focus will be on data quality and ensuring that features are clean, normalized, and free of inconsistencies. You may perform operations like filling missing values with imputation methods or encoding categorical variables. However, if your data is being prepared for a report, the focus might shift towards summarizing the data and presenting trends, where a visual representation (such as graphs or tables) could be more beneficial.
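To make the contrast concrete, this sketch prepares one hypothetical `df` in two ways. The first path targets a machine learning model and uses median imputation, min-max scaling, and one-hot encoding. The second path produces a per-segment summary for a report. The column names and values are invented for illustration.

```python
import pandas as pd

# Hypothetical customer data used for two different audiences
df = pd.DataFrame({
    "segment": ["retail", "online", "retail", "online"],
    "spend": [200.0, 300.0, 600.0, None],
})

# Path 1: features for a machine learning model
ml = df.copy()
ml["spend"] = ml["spend"].fillna(ml["spend"].median())  # impute the missing value
spend_min, spend_max = ml["spend"].min(), ml["spend"].max()
ml["spend"] = (ml["spend"] - spend_min) / (spend_max - spend_min)  # min-max scale to [0, 1]
ml = pd.get_dummies(ml, columns=["segment"])  # one-hot encode the category

# Path 2: a summary table for decision-makers
report = df.groupby("segment")["spend"].agg(["count", "mean"]).reset_index()
print(report)
```

In a real modeling workflow, the median, minimum, and maximum would usually be computed on the training data only and then reused for new data.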

Key Takeaways for Understanding Your Audience:

- Know the audience’s technical skill level: Is your audience technical, like a data scientist, or non-technical, like a business leader? Tailor your data wrangling steps accordingly.

- Know the intended use case: Is the data being used for reporting, machine learning, or business analysis? This will shape your wrangling approach.

Documentation Throughout the Process

Documentation is another critical aspect of data wrangling in data science. It’s easy to forget what changes were made after several rounds of data cleaning and transformation, but keeping track of these changes is important for several reasons:

- Transparency: When working with large datasets, it’s essential to know what was changed, why, and how. This transparency helps ensure that any transformations are reproducible and can be verified by others.

- Collaboration: In team-based data wrangling projects, documentation allows team members to understand what has already been done and what steps still need to be taken. It also helps avoid duplicating efforts.

- Future Reference: Often, you’ll need to revisit the data wrangling process months or even years later. Documentation allows you to understand your past decisions and adapt your approach if needed.

Why is Documentation Crucial?

- Track Changes: During data wrangling, you will make several transformations—whether it’s handling missing values, removing duplicates, or transforming variables. Keeping a log of these steps makes it easier to understand and reproduce the process later.

- Communicate Decisions: If you are working in a team or sharing your work, documenting the decisions you make (e.g., why you chose to impute missing data with the mean rather than using the median) ensures clarity and helps others follow your logic.

- Prevent Errors: When transformations are not properly documented, it’s easy to overlook previous steps or make conflicting changes that compromise the integrity of the data.

How to Document Your Data Wrangling Process:

- Version Control: Use version control tools like Git to track changes to your scripts or notebooks. This way, you can see the history of changes made to the code and the dataset itself.

- Commenting in Code: Whether you’re using Python, R, or another tool, it’s good practice to comment on what each part of your code is doing. For example, before cleaning a dataset, write a brief note on the reason for the cleaning step.

- Log Files: Keep a log file (in text or spreadsheet format) that lists all data wrangling actions performed, with timestamps and notes on why each step was necessary.

Example: If you’re filling missing values in a dataset, a simple comment in Python might look like this:

# Filling missing values in 'Age' column with the mean value
df['Age'] = df['Age'].fillna(df['Age'].mean())

Additionally, you could document the step in a text file:

[Date: 2024-11-28]

Step: Filling missing values in 'Age' column with the mean value

Reason: The 'Age' column had missing values that needed to be imputed. The mean was chosen as it is a simple imputation method and appropriate for this dataset.
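Log entries like the one above can also be written from inside the wrangling script, so the log stays in step with the code. This is a minimal sketch using Python’s standard `logging` module. The logger name `wrangling` and the file name `wrangling_log.txt` are illustrative choices.

```python
import logging
import os
import tempfile

import pandas as pd

# Send wrangling notes to a log file, one timestamped line per entry
log_path = os.path.join(tempfile.mkdtemp(), "wrangling_log.txt")
logger = logging.getLogger("wrangling")
logger.setLevel(logging.INFO)
logger.propagate = False  # keep these notes out of any root-level handlers
handler = logging.FileHandler(log_path, encoding="utf-8")
handler.setFormatter(logging.Formatter("[%(asctime)s] %(message)s", datefmt="%Y-%m-%d"))
logger.addHandler(handler)

df = pd.DataFrame({"Age": [25, None, 30]})

# Perform the step, then record what was done and why
missing_before = int(df["Age"].isna().sum())
df["Age"] = df["Age"].fillna(df["Age"].mean())
logger.info("Step: filled %d missing value(s) in 'Age' with the mean", missing_before)
logger.info("Reason: simple imputation judged appropriate for this dataset")
```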

Key Documentation Practices:

- Keep a Log of Changes: Use a file to track all transformations, along with the reasons behind them.

- Explain Why and How: Write clear comments in your code and document the rationale behind your decisions.

- Use Version Control: Regularly commit changes to version control systems like Git to track the history of your data wrangling efforts.

Challenges in Data Wrangling

Data wrangling in data science comes with its own set of challenges. Whether you are dealing with large datasets, inconsistent data formats, or missing values, these issues can slow down your progress and affect the accuracy of your results. In this section, we will explore some of the common challenges data scientists face during data wrangling and discuss practical strategies for overcoming them.

Common Challenges Faced by Data Scientists

1. Dealing with Large Datasets

Problem: One of the biggest hurdles in data wrangling is handling large datasets that don’t fit in memory. Working with big data often leads to slow processing times, crashes, or the inability to perform certain operations like sorting or filtering.

Example: A dataset containing millions of rows of customer transaction data might not load easily into memory, leading to delays in the wrangling process.

2. Inconsistent Data Formats

Problem: Data often comes from multiple sources, and these sources might use different formats or units of measurement. For example, one dataset may use “YYYY-MM-DD” format for dates, while another uses “MM/DD/YYYY.” This inconsistency can make it difficult to merge or compare data.

Example: You may have customer data in one sheet with dates formatted as “MM-DD-YYYY,” and in another sheet, dates might be in “YYYY/MM/DD” format, which could create errors when trying to merge these datasets.

3. Handling Missing Data

Problem: Missing data is a common issue in many real-world datasets. Some values may be missing entirely, while others may be incorrectly entered as placeholders like “NaN” or “NULL.”

Example: In a medical dataset, patient age might be missing for some records, while others have incorrect placeholder values like “999” or “unknown.”

4. Data Duplication

Problem: Duplicated records can arise from multiple data entry points or errors during data collection. Having duplicate records in your dataset can lead to biased results or overestimation of certain values.

Example: If customer orders are entered twice by mistake, you might end up with duplicated sales data, leading to inflated revenue figures.

5. Outliers and Noise

Problem: Outliers (data points that fall far outside the expected range) can distort statistical analyses and machine learning models. Detecting and handling outliers is a key challenge in data wrangling.

Example: A dataset on customer spending habits might have an entry showing a customer spent $1,000,000 in one transaction, which could be a mistake or an outlier that needs to be addressed.

6. Merging and Joining Data

Problem: Combining data from different sources is often required, but mismatches in column names, types, or missing keys can complicate this process.

Example: When merging two datasets, one may have “customer_id” while the other uses “customerID.” These mismatches could prevent a successful merge and result in lost or mismatched data.

Strategies to Overcome These Challenges

Now that we’ve covered some common challenges, let’s look at some practical strategies for overcoming them. These approaches will help you manage the complexities of data wrangling in data science and ensure your dataset is clean, reliable, and ready for analysis.

1. Efficiently Handling Large Datasets

- Use Chunking: If your dataset is too large to fit into memory, chunking can help. Chunking allows you to break down the dataset into smaller, manageable pieces and process each piece one at a time.

- Example in Python (Pandas):

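import pandas as pd

chunk_size = 10000  # Number of rows per chunk

def process_chunk(chunk):
    # Hypothetical placeholder: replace with your own cleaning steps
    print(f"Processing {len(chunk)} rows")

for chunk in pd.read_csv('large_dataset.csv', chunksize=chunk_size):
    process_chunk(chunk)  # Perform data wrangling on each chunk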

- Leverage Distributed Computing: Tools like Dask or Apache Spark allow you to distribute the data across multiple machines, enabling faster processing of large datasets.

- Optimize Data Types: When dealing with large data, optimizing data types can save memory. For example, using category data type for categorical columns instead of object can help reduce memory usage.
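The data-type tip above can be measured directly. This sketch builds a hypothetical text column with only four distinct values and compares its memory use before and after conversion to `category`. A categorical column stores each distinct string once, plus a small integer code for each row.

```python
import pandas as pd

# Hypothetical column with a few distinct values repeated many times
n = 100_000
df = pd.DataFrame({"city": ["New York", "Los Angeles", "Chicago", "Houston"] * (n // 4)})

before = df["city"].memory_usage(deep=True)
df["city"] = df["city"].astype("category")  # distinct strings stored once, plus integer codes
after = df["city"].memory_usage(deep=True)

print(f"text: {before:,} bytes, category: {after:,} bytes")
```

The savings depend on how many distinct values the column holds. A column where nearly every value is unique gains little from this conversion.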

2. Handling Inconsistent Data Formats

- Standardize Formats: Before starting any analysis, it’s important to standardize the data formats. This can be done by converting date columns to a common format or ensuring numerical columns use the same units.

- Example in Python (Pandas):

# Parse date strings written as 'YYYY-MM-DD' into pandas datetime values
# (format describes how the input text is laid out)
df['date_column'] = pd.to_datetime(df['date_column'], format='%Y-%m-%d')

- Use Data Transformation Tools: Tools like Trifacta and Alteryx are specifically designed to help standardize and clean data from various sources, making the wrangling process smoother.
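To handle the two-source case described earlier, parse each column with its own explicit format before combining them. In this sketch, `source_a` uses “YYYY-MM-DD” and `source_b` uses “MM/DD/YYYY”. Both tables and their columns are hypothetical. Once parsed, the dates can be compared directly.

```python
import pandas as pd

# Hypothetical extracts from two systems that write dates differently
source_a = pd.DataFrame({"customer_id": [1, 2], "signup": ["2024-03-05", "2024-11-28"]})
source_b = pd.DataFrame({"customer_id": [1, 2], "last_order": ["03/20/2024", "12/01/2024"]})

# Give each column its own explicit format so months and days are never swapped
source_a["signup"] = pd.to_datetime(source_a["signup"], format="%Y-%m-%d")
source_b["last_order"] = pd.to_datetime(source_b["last_order"], format="%m/%d/%Y")

# After parsing, the columns share one datetime type and can be combined
combined = source_a.merge(source_b, on="customer_id")
combined["days_to_order"] = (combined["last_order"] - combined["signup"]).dt.days
print(combined)
```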

3. Handling Missing Data

- Imputation: When dealing with missing values, imputation techniques can be used to fill in the gaps. Common methods include replacing missing values with the mean, median, or mode of the column, or using more sophisticated techniques like regression imputation or k-Nearest Neighbors (k-NN).

- Example in Python (Pandas):

# Filling missing values with the mean
df['age'] = df['age'].fillna(df['age'].mean())

- Drop Missing Values: In some cases, you might choose to drop rows or columns that have too many missing values. This approach is useful when the missing data cannot be reliably imputed.

- Example in Python (Pandas):

# Dropping rows with missing values in 'age' column
df = df.dropna(subset=['age'])
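Placeholders like “999” or “unknown” from the medical-data example are not recognized as missing until you convert them. This sketch uses hypothetical patient records. It turns both placeholders into real missing values and then imputes with the median.

```python
import numpy as np
import pandas as pd

# Hypothetical patient records with placeholders for unknown ages
df = pd.DataFrame({"patient": ["p1", "p2", "p3", "p4"],
                   "age": [34, 999, "unknown", 51]})

# Non-numeric text such as "unknown" becomes NaN; inspect the column first,
# because errors="coerce" also hides any other unexpected text
df["age"] = pd.to_numeric(df["age"], errors="coerce")
df["age"] = df["age"].replace(999, np.nan)  # the numeric sentinel code becomes NaN

# The median is less sensitive than the mean to any remaining extreme values
df["age"] = df["age"].fillna(df["age"].median())
print(df)
```

When the data comes from a file, the `na_values` parameter of `pd.read_csv` can mark such placeholders as missing at load time.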

4. Removing Duplicate Data

- Drop Duplicates: Duplicate records can easily be removed by using the drop_duplicates() function in Pandas or similar methods in other tools. By default, it removes rows that are identical in every column. Pass the subset parameter to compare only the columns that identify a record.

- Example in Python (Pandas):

# Removing duplicate rows
df = df.drop_duplicates()

- Identify Duplicate Columns: Ensure that there are no duplicate columns in your dataset, which could arise if data is merged incorrectly. Use column names or indexes to identify and remove these duplicates.
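Both kinds of duplication appear in the hypothetical table below. The `customer` column appears twice, and one order was entered twice with different entry times. The sketch removes repeated column names and then deduplicates rows on the columns that identify an order.

```python
import pandas as pd

# Hypothetical result of a careless merge: a repeated column name,
# plus one order entered twice at different times
df = pd.DataFrame(
    [[101, "Alice", "Alice", "09:00"],
     [102, "Bob", "Bob", "09:05"],
     [101, "Alice", "Alice", "09:07"]],
    columns=["order_id", "customer", "customer", "entered_at"],
)

# Drop columns whose names repeat, keeping the first occurrence
df = df.loc[:, ~df.columns.duplicated()]

# Treat rows as duplicates when the identifying columns match, ignoring entry time
df = df.drop_duplicates(subset=["order_id", "customer"], keep="first")
print(df)
```

Columns with identical contents but different names can be found with `df.T.drop_duplicates().T`. Be aware that transposing a table with mixed types can change column dtypes.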

5. Handling Outliers and Noise

- Z-Score or IQR Method: Use statistical methods to detect and handle outliers. The Z-score and Interquartile Range (IQR) methods are popular for identifying extreme values.

- Example in Python (Pandas):

import numpy as np
from scipy import stats

df = df[np.abs(stats.zscore(df['spending'])) < 3]  # Keep rows within 3 standard deviations of the mean

- Visualization: Use visualization tools like box plots or scatter plots to visually identify outliers in your data.
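The IQR method mentioned above can be applied with pandas alone. This sketch uses a small hypothetical `spending` column that includes the $1,000,000 entry from the earlier example. It applies the common rule of thumb that flags values more than 1.5 × IQR beyond the first or third quartile.

```python
import pandas as pd

# Hypothetical spending values with one suspicious entry
df = pd.DataFrame({"spending": [120, 95, 130, 110, 105, 1_000_000]})

q1 = df["spending"].quantile(0.25)
q3 = df["spending"].quantile(0.75)
iqr = q3 - q1

# Rule of thumb: values more than 1.5 * IQR outside the quartiles are flagged
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
outliers = df[(df["spending"] < lower) | (df["spending"] > upper)]
cleaned = df[df["spending"].between(lower, upper)]
print(outliers)
```

On a sample this small, the 3-standard-deviation z-score rule would not flag the 1,000,000 value. The extreme value inflates the standard deviation it is measured against, so its z-score stays below 3. The quartiles are much less affected by a single extreme value.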

6. Merging and Joining Data

- Ensure Matching Columns: Always check that the column names are consistent across datasets before merging. If needed, rename columns using rename() in Pandas.

- Handle Missing Keys: When joining data, ensure that there are no missing or unmatched keys. In case of missing keys, use left join or right join to retain data from one dataset while merging with another.

- Example in Python (Pandas):

# Merging two datasets on 'customer_id'
df = pd.merge(df1, df2, on='customer_id', how='left')
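This sketch applies both tips to the “customer_id” versus “customerID” mismatch described earlier. It uses hypothetical tables `df1` and `df2`. First it renames the key column. Then it runs an outer join with pandas’ `indicator=True` option, which adds a `_merge` column to show which keys did not match, before doing the left join.

```python
import pandas as pd

# Hypothetical tables whose key columns are spelled differently
df1 = pd.DataFrame({"customer_id": [1, 2, 3], "name": ["Ana", "Ben", "Cy"]})
df2 = pd.DataFrame({"customerID": [1, 2, 4], "total": [50.0, 20.0, 75.0]})

# Align the key names before merging
df2 = df2.rename(columns={"customerID": "customer_id"})

# An outer join with indicator=True labels each row both, left_only, or right_only
check = pd.merge(df1, df2, on="customer_id", how="outer", indicator=True)
unmatched = check[check["_merge"] != "both"]
print(unmatched)

# Keep every customer from df1; unmatched rows get NaN in df2's columns
df = pd.merge(df1, df2, on="customer_id", how="left")
```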

Conclusion

While data wrangling in data science can be a challenging task, the right strategies and techniques can help you effectively overcome obstacles like large datasets, inconsistent formats, missing values, and duplicates. By applying the methods outlined here, you can prepare your data for analysis with confidence. Remember, data wrangling is an iterative process. Stay patient, be meticulous in your work, and don’t hesitate to experiment with different approaches to find what works best for your dataset.

FAQs

1. What is Data Wrangling in Data Science?

Data wrangling is the process of cleaning, transforming, and organizing raw data into a structured format for analysis. It involves tasks like handling missing values, removing duplicates, and ensuring consistency across datasets.

2. Why is Data Wrangling Important in Data Science?

Data wrangling is crucial because it ensures the quality and accuracy of the data before analysis. Without proper wrangling, the results from data analysis or machine learning models can be misleading or incorrect.

3. What Are Common Data Wrangling Tasks?

Common tasks include data cleaning (removing duplicates, handling missing values), data transformation (standardizing formats, creating new features), and data integration (merging datasets from different sources).

4. Which Tools Are Used for Data Wrangling?

Popular tools include Python (Pandas), R, Alteryx, and Trifacta. These tools help automate and simplify data wrangling tasks like cleaning, transforming, and merging datasets.

5. How Long Does Data Wrangling Take?

The time required for data wrangling varies depending on the dataset’s size and complexity. It can take anywhere from a few hours to several days or weeks for large, complex datasets with many inconsistencies.

External Resources

Kaggle – Data Cleaning and Wrangling

- Kaggle offers various tutorials and resources related to data wrangling, particularly in Python using libraries like Pandas. Their datasets are also great for practicing real-world data wrangling.

- Link: Kaggle Data Cleaning

Pandas Documentation

- The official Pandas documentation provides in-depth explanations on how to manipulate and wrangle data using the Pandas library in Python. It covers everything from basic operations to advanced techniques.

- Link: Pandas Documentation
