The Engineering History of AI: Why Modern Systems Fail in Predictable Ways

Why I’m Writing This

As the author of Neural Networks and Deep Learning with Python, and founder of EmiTechLogic, I spend my days teaching engineers how to build AI systems.

But there’s a disconnect I keep seeing:

Students learn to train models. They don’t learn why those models fail.

Everyone wants to build the next ChatGPT. Nobody wants to study why expert systems collapsed in 1989, why perceptrons couldn’t solve XOR in 1969, or why symbolic AI required an “AI Winter” to course-correct.

But here’s what I’ve discovered after years of teaching neural networks and deploying LLM tutorials: modern AI systems fail for the exact same architectural reasons that symbolic AI failed 40 years ago. We’ve just moved the failure point.

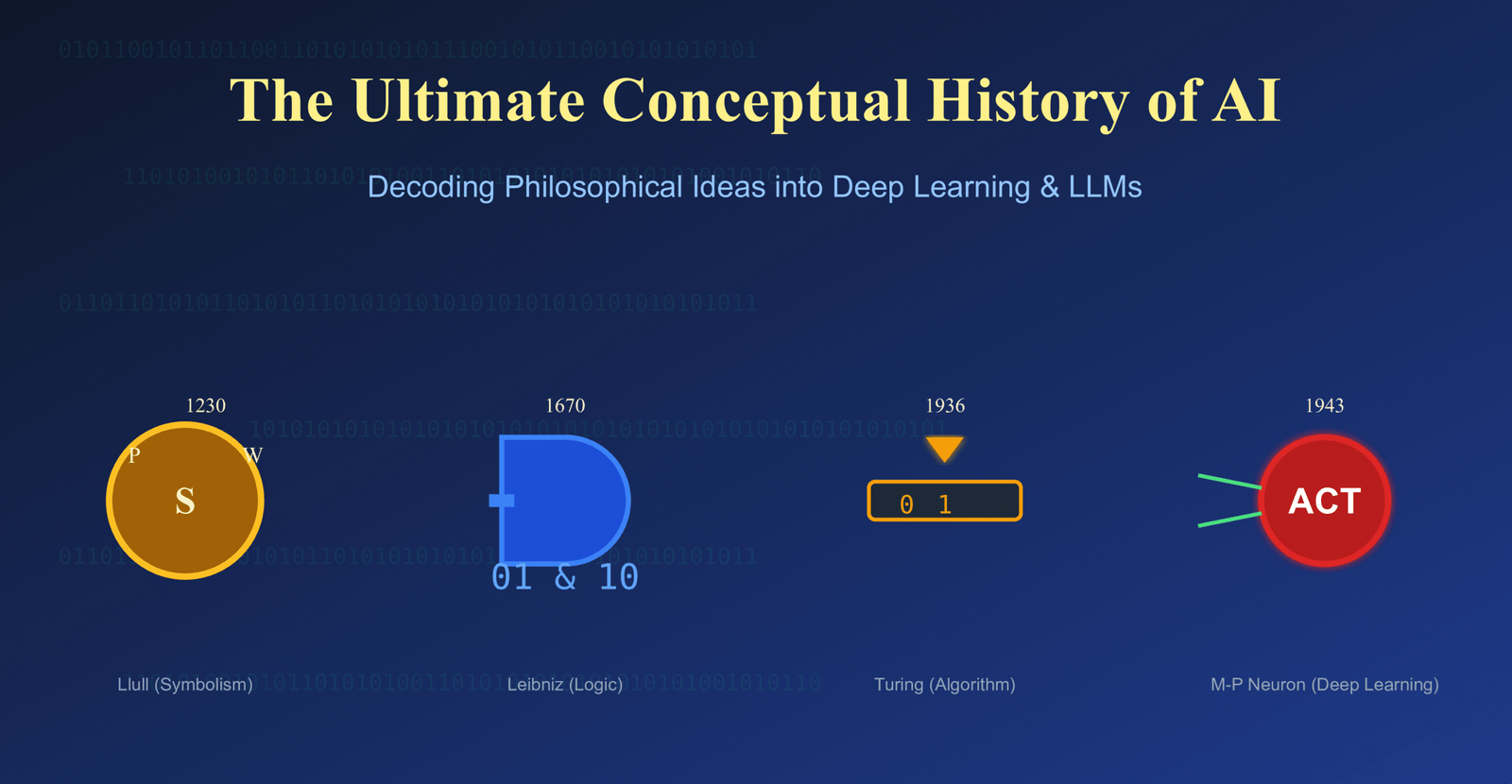

This post traces the engineering constraints that have shaped machine reasoning for centuries, from Ramon Llull’s 13th-century logic machines through 70 years of modern AI to GPT-4’s hallucinations. Understanding these constraints isn’t just academic history. It’s practical knowledge that will help you debug your next production deployment.

Part 1: The Combinatorial Explosion—From Llull (1273) to LLM Token Generation (2024)

Ramon Llull’s Ars Magna: The First “AI” and Its Fatal Flaw

Before computers existed, a 13th-century philosopher named Ramon Llull built something remarkable: a mechanical system for generating knowledge.

His “Ars Magna” used rotating paper discs with fundamental concepts:

- Truth, Goodness, Power, Wisdom, Justice, etc.

By rotating the discs, you could mechanically combine concepts:

- Truth + Wisdom = Enlightened Understanding

- Power + Justice = Righteous Authority

- Goodness + Eternity = Divine Grace

This wasn’t mysticism—it was combinatorial search, the same principle underlying:

- Google’s search algorithms

- Modern knowledge graphs

- LLM token generation

[Interactive demo: The Llullian Combinator, a reconstruction of a 13th-century logic machine with rotating wheels.]

Llull’s insight: If thought is combining basic symbols, then a machine could theoretically generate all truths.

Llull’s problem: Combinatorial explosion.

With 9 concepts and 2-way combinations: 81 possibilities

With 9 concepts and 3-way combinations: 729 possibilities

With 10 concepts and 3-way combinations: 1,000 possibilities

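The search space grows exponentially with the number of concepts combined: n concepts across k positions give n^k combinations. Without intelligent pruning, you generate an unmanageable amount of nonsense mixed with occasional insight.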
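The counts above are ordered selections with repetition, n^k for n concepts and k wheel positions. A minimal sketch that reproduces them, assuming a nine-item concept list (names beyond those mentioned in this post are placeholders):

```python
from itertools import product

def count_combinations(n_concepts: int, k_positions: int) -> int:
    """Number of ordered k-way combinations with repetition: n ** k."""
    return n_concepts ** k_positions

# Illustrative list; "Concept7" to "Concept9" are placeholders, not Llull's terms.
concepts = ["Truth", "Goodness", "Power", "Wisdom", "Justice",
            "Eternity", "Concept7", "Concept8", "Concept9"]

# Enumerate every 2-way pairing explicitly to confirm the formula.
pairs = list(product(concepts, repeat=2))

print(len(pairs))                  # 81
print(count_combinations(9, 3))    # 729
print(count_combinations(10, 3))   # 1000
print(count_combinations(9, 10))   # 3486784401
```

Going from 3 wheel positions to 10 takes the space from hundreds of combinations to billions, which is why exhaustive generation stops being useful almost immediately.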

The Modern Parallel: LLM Token Generation

When I teach neural networks, students often ask: “Why do LLMs hallucinate?”

The answer traces back to Llull’s combinatorial problem.

Modern LLMs like GPT-4 or Claude don’t “understand” text—they predict the next most probable token from a vocabulary of 50,000+ tokens. At each step:

Current context: "The capital of France is"

Possible next tokens: "Paris" (high probability), "London" (low probability), "banana" (extremely low probability)

Selection: Sample from probability distribution

This is combinatorial search with probabilistic pruning. The model learned from training data which combinations are likely, but it has no “truth checker.”
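To make the "sample from a probability distribution" step concrete, here is a minimal sketch. The three-token vocabulary and the logit values are made up for illustration; a real model scores tens of thousands of tokens.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Made-up logits for the context "The capital of France is"
vocab = ["Paris", "London", "banana"]
logits = [9.0, 4.0, -2.0]

probs = softmax(logits)
rng = random.Random(0)  # seeded so the run is repeatable
next_token = rng.choices(vocab, weights=probs, k=1)[0]

for token, p in zip(vocab, probs):
    print(f"{token}: {p:.4f}")
print("sampled:", next_token)
```

Nothing in this loop checks whether the sampled token is true. The only signal is the score the model assigned.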

The architectural constraint:

- Llull had no pruning mechanism (generated everything)

- LLMs have training data bias as crude pruning (generate probable things)

- Neither system verifies truth—they just navigate possibility spaces

Why this matters for practitioners:

When you deploy an LLM in production, you’re not deploying a “knowledge base”—you’re deploying a probabilistic search engine traversing a high-dimensional space of token combinations.

If the statistically most probable next token is factually wrong (due to training data bias or noise), the model will generate it with complete confidence.

Practical implication: Never ask an LLM to generate factual information without external grounding (databases, APIs, retrieval systems). The architecture fundamentally prioritizes plausibility over veracity.

Part 2: Turing’s Universal Machine and the Platform-Independence of Intelligence

1936: Alan Turing Formalizes the Algorithm

Before Turing, computing machines were purpose-built hardware. A loom wove cloth. A calculator computed numbers. Each machine had one function.

Turing proved something profound: you could build a Universal Machine that could simulate any other machine if given the right instructions (software).

This introduced the concept of the Stored Program—the theoretical foundation for why:

- Your GPU can render video games, then train neural networks, then mine cryptocurrency

- The same iPhone runs Spotify, Instagram, and Claude

- Intelligence can be “software” running on biological or silicon “hardware”

The insight: Intelligence is platform-independent. If thought is a series of state transitions, you can abstract it from biology.

This line of thought later led to Newell and Simon’s Physical Symbol System Hypothesis (1976): a physical symbol system has the necessary and sufficient means for general intelligent action.

[Interactive demo: The Finite State Machine. Turing’s “Universal Machine” abstraction, intelligence as symbol manipulation on an infinite tape, running a binary increment algorithm.]

Representation Gap

Notice how the machine doesn’t “know” it’s adding numbers. It simply follows a Physical Symbol System transition table.

To the machine, ‘1’ is not a value; it is a symbol trigger. The intelligence is in the Software, abstracted from the silicon tape.
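The binary increment machine can be sketched in a few lines. This is an illustrative single-tape simulator with one working state, not a reconstruction of the demo's internals; the state names and tape conventions are assumptions.

```python
def run_turing_machine(tape, transitions, state="carry", blank="_"):
    """Run a single-tape machine until it reaches the 'halt' state."""
    cells = list(tape)
    head = len(cells) - 1  # start on the least-significant (rightmost) bit
    while state != "halt":
        if head < 0:  # extend the tape to the left on demand
            cells.insert(0, blank)
            head = 0
        symbol = cells[head]
        write, move, state = transitions[(state, symbol)]
        cells[head] = write
        head += {"L": -1, "R": 1, "N": 0}[move]
    return "".join(cells).strip(blank)

# Transition table: (state, symbol read) -> (symbol to write, head move, next state)
INCREMENT = {
    ("carry", "1"): ("0", "L", "carry"),  # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", "N", "halt"),   # 0 + carry = 1, done
    ("carry", "_"): ("1", "N", "halt"),   # ran off the left edge: write a new digit
}

print(run_turing_machine("1011", INCREMENT))  # 1100
print(run_turing_machine("111", INCREMENT))   # 1000
```

The simulator never references numbers or addition. All of the "arithmetic" lives in the three-row transition table, which is the point of the abstraction.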

The Modern Reality: Abstraction Creates Distance from Truth

In my book “Neural Networks and Deep Learning with Python,” I emphasize that neural networks are universal function approximators: with enough hidden units, they can theoretically approximate any continuous mapping from input to output.

But “theoretically” does a lot of work in that sentence.

The problem with symbolic abstraction:

When you represent reality as symbols (whether LISP code in 1970 or embeddings in 2024), you create a representation gap between:

- The symbols in your system

- The actual reality they’re supposed to represent

Example from teaching neural networks:

I often have students build image classifiers. They train a model on ImageNet that achieves 95% accuracy. They’re thrilled—until they deploy it.

In production, the model:

- Classifies a husky as a wolf (because training images of wolves often had snow backgrounds)

- Fails on slightly rotated images

- Confidently misclassifies adversarial examples

What happened?

The model learned to manipulate symbols (pixel patterns → class labels) without understanding the underlying reality. It learned correlations in training data, not causal relationships in the world.

This is Turing’s abstraction taken to its logical conclusion: when intelligence is pure symbol manipulation, it has no ground truth beyond the symbols themselves.

Practical implication:

Test your models on out-of-distribution data before production. The gap between “works on test set” and “works in reality” is the representation gap—and it’s where most deployed AI fails.

Part 3: Why Symbolic AI Failed (1980s) and Why LLMs Fail Differently (2024)

The Promise of Expert Systems

From the 1950s through 1980s, AI meant symbolic AI—encoding human expertise as IF-THEN rules in languages like LISP and Prolog.

The paradigm: Intelligence is rule-following. Encode expert knowledge, get expert performance.

Success stories:

- MYCIN: Medical diagnosis (matched expert physicians)

- XCON: Computer configuration for Digital Equipment Corporation (saved millions)

- DENDRAL: Chemical structure analysis

The collapse:

By the late 1980s, these systems hit a wall. Three fundamental problems:

1. The Frame Problem

How many rules do you need to “make coffee”?

- Use the coffee maker (not the toaster)

- Use water (not gasoline)

- Don’t start a fire

- Gravity exists

- Cups hold liquids

- Hot surfaces burn

- [… infinite implicit assumptions humans know]

You can’t encode common sense. The number of contextual rules is infinite.

2. The Knowledge Acquisition Bottleneck

Experts can’t articulate their tacit knowledge as rules:

- “How do you diagnose this disease?”

- “Well, it just ‘feels’ like pneumonia based on years of experience…”

You can’t program intuition.

3. Brittleness

If the system encounters anything 1% outside its programmed rules, it doesn’t degrade gracefully—it crashes or outputs nonsense.

There’s no “common sense” to fill gaps between logical nodes.

The Neural Network Solution (Sort Of)

When I teach backpropagation in my courses, I explain that neural networks “solve” these problems by:

- Learning from examples instead of explicit rules (solves knowledge acquisition)

- Generalizing from patterns instead of matching exact cases (reduces brittleness)

- Handling noise and ambiguity through probabilistic outputs

But they introduce opposite problems:

| Symbolic AI (1980s) | Neural Networks (2024) |
|---|---|
| Too rigid: can’t handle anything outside rules | Too fluid: can’t maintain rigid constraints |
| Interpretable: can trace logical reasoning | Black box: can’t explain decisions |
| Deterministic: same input → same output | Stochastic: same input → variable outputs |
| Fails explicitly (crashes) | Fails subtly (hallucinations) |

The modern challenge:

In my work building tutorials for EmiTechLogic, I’ve seen this pattern repeatedly:

Students build a model that works beautifully in Jupyter notebooks. Then they try to deploy it and discover:

- It can’t maintain business rules (too fluid)

- It generates plausible but wrong answers (hallucination)

- It’s inconsistent across runs (stochastic)

- They can’t debug why it failed (black box)

The architectural insight:

We spent 40 years escaping symbolic AI’s rigidity. Now we’re spending the 2020s trying to add structure back into neural systems through:

- Retrieval-Augmented Generation (RAG) – connecting to structured databases

- Function calling – forcing models to use deterministic tools

- Constitutional AI – embedding rules and values

- Chain-of-thought – making reasoning explicit

We’re not moving away from symbolic AI—we’re integrating it with neural systems.

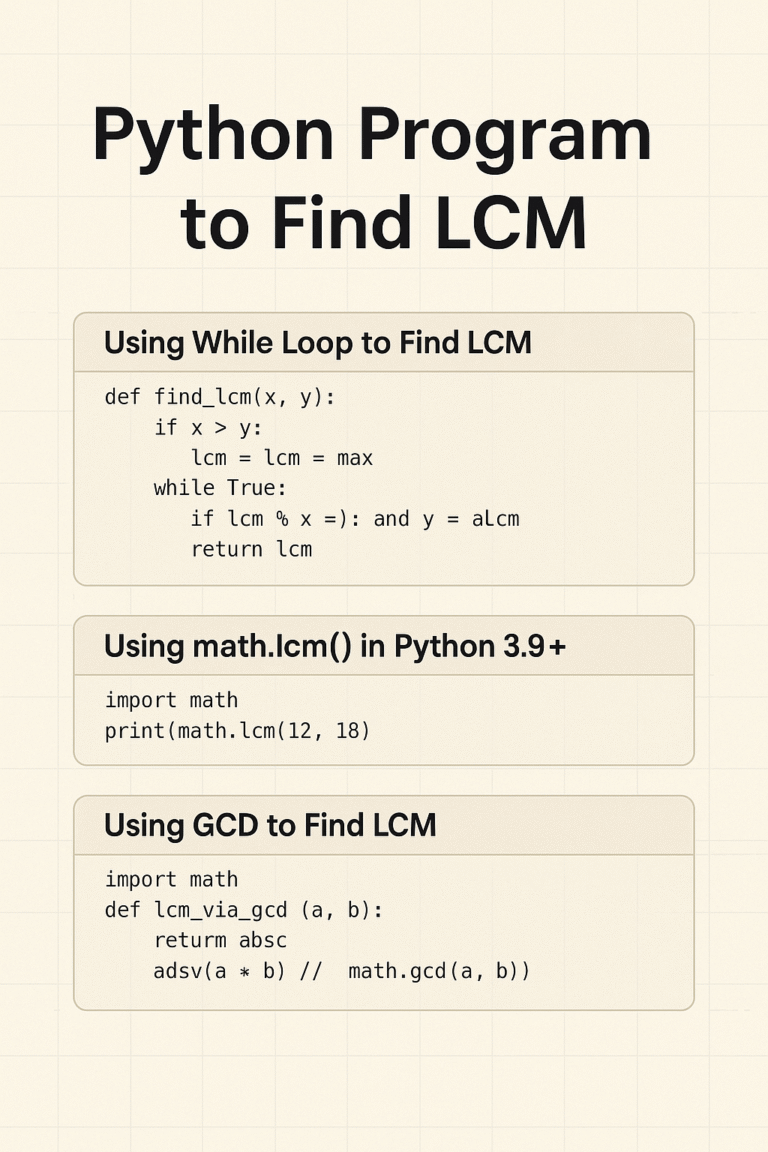

Part 4: The XOR Problem (1969) and Why LLMs Can’t Do Math (2024)

1969: Minsky and Papert’s Proof That Nearly Killed Neural Networks

In 1969, Marvin Minsky and Seymour Papert published “Perceptrons,” proving mathematically that single-layer perceptrons cannot solve XOR (exclusive OR).

The XOR truth table:

| A | B | Output |
|---|---|---|
| 0 | 0 | 0 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 0 |

The problem: XOR is not linearly separable. You can’t draw a single straight line to separate true from false outputs.

The book’s critique contributed to a collapse in neural network funding that lasted the better part of two decades. Many researchers assumed the limitation was fundamental.

What was missing: Multi-layer networks CAN solve XOR. There was just no practical, widely known way to train hidden layers until backpropagation.
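Both halves of this claim can be checked directly. The sketch below uses hand-picked weights (my choice, not learned values) for a two-layer threshold network, and a coarse grid search over single-layer weights. The grid search is an illustration, not a proof. The proof is that a single line cannot separate the XOR outputs.

```python
from itertools import product

def step(z: float) -> int:
    """Threshold activation: fire (1) when the weighted sum is positive."""
    return 1 if z > 0 else 0

def perceptron(x1, x2, w1, w2, b):
    return step(w1 * x1 + w2 * x2 + b)

def xor_two_layer(x1, x2):
    h_or = perceptron(x1, x2, 1, 1, -0.5)         # fires if at least one input is 1
    h_and = perceptron(x1, x2, 1, 1, -1.5)        # fires only if both inputs are 1
    return perceptron(h_or, h_and, 1, -1, -0.5)   # OR but not AND

XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

# Try every single-layer weight setting on a grid from -3.0 to 3.0 in steps of 0.5.
grid = [i / 2 for i in range(-6, 7)]
single_layer_solves = any(
    all(perceptron(a, b, w1, w2, bias) == y for (a, b), y in XOR.items())
    for w1, w2, bias in product(grid, repeat=3)
)

print(single_layer_solves)                       # False
print([xor_two_layer(a, b) for (a, b) in XOR])   # [0, 1, 1, 0]
```

The hidden layer re-represents the inputs as "OR" and "AND", and in that new space XOR becomes linearly separable.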

2024: The Same Linear Limitation Explains LLM Math Failures

When teaching neural networks, I always cover this history because it explains a modern problem students encounter constantly:

“Why is my LLM so bad at basic arithmetic?”

The answer traces back to the XOR problem—not because of linearity, but because of the deeper principle: some operations require fundamentally different computational structures than pattern matching.

How LLMs process numbers:

    # When you give GPT-4: "What is 127 × 384?"
    # It doesn't compute; it tokenizes:
    tokens = ["What", "is", "127", "×", "384", "?"]
    # "127" might tokenize as ["12", "7"] or ["127"] depending on the tokenizer

    # The model then predicts: "What token sequence looks like a plausible answer?"
    # It learned from training data what "reasonable answers" look like
    # It does NOT perform symbolic manipulation: 127 × 384 = 48,768

Math requires:

- Precise sequential operations (multiply, then add, then carry)

- Exact value preservation across steps

- Deterministic symbolic manipulation

- Algorithmic procedures

LLMs are trained for:

- Approximate pattern recognition

- Statistical plausibility

- Continuous value spaces

- Context-dependent generation

These are architecturally incompatible objectives.

Real example from my teaching:

I have students build a simple calculator using GPT-3.5:

    prompt = "Calculate: 1234 + 5678"
    response = call_gpt(prompt)

    # Expected: "6912"
    # Sometimes gets: "6912"
    # Sometimes gets: "6911" or "6913" or "7012"

The model treats numbers as tokens to predict, not quantities to compute.

The solution (learned from XOR):

In 1986, backpropagation made it practical to train hidden layers, and a network with a hidden layer can solve XOR: the architecture changed to match the problem.

In 2024, we solve math by adding deterministic computation—changing the architecture to match the problem:

    # Don't do this:
    result = llm.generate("Calculate 1234 + 5678")

    # Do this (llm is a placeholder for your model client):
    def solve_math(prompt):
        # Use the LLM to parse intent and extract the expression
        parsed = llm.extract_operation(prompt)

        # Use deterministic code for the computation
        # Simplified: never eval() untrusted input in production
        result = eval(parsed.expression)

        # Use the LLM to format a natural language response
        return llm.format_response(result)

This is essentially the approach behind tools like ChatGPT’s Code Interpreter: the model writes code and a real interpreter runs it, which is why that setup is far more reliable for math than raw GPT-4.

The lesson: Just as perceptrons needed hidden layers for XOR, LLMs need external tools for operations that don’t match their architectural strengths.
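For the deterministic step, one safer option than `eval` is to parse the expression with Python's `ast` module and allow only arithmetic nodes. This is a minimal sketch of that idea, with `safe_eval` as a hypothetical helper name. It covers the four basic operators and unary minus, nothing more.

```python
import ast
import operator

# Whitelisted arithmetic operators; anything else is rejected.
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}

def safe_eval(expression: str):
    """Evaluate a plain arithmetic expression without calling eval()."""
    def _eval(node):
        # Plain int/float literals only (bool is excluded by the exact type check)
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
            return _BINARY_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -_eval(node.operand)
        raise ValueError("unsupported expression")
    return _eval(ast.parse(expression, mode="eval").body)

print(safe_eval("1234 + 5678"))  # 6912
print(safe_eval("127 * 384"))    # 48768
```

Function calls, attribute access, and names all fall through to the `ValueError`, so a string like `__import__('os')` is rejected instead of executed.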

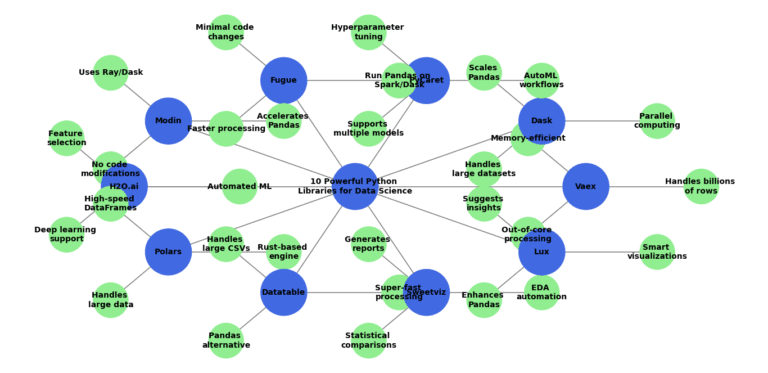

Part 5: Why GPUs Changed Everything (2012) and What It Means for Cost (2024)

The Hardware Lottery: AlexNet and the GPU Revolution

When teaching the history of deep learning, I always emphasize 2012 as the inflection point—not because of algorithmic breakthroughs, but because of hardware.

[Interactive demo: Perceptron Activation & GPU Parallelism. Panels: single inference, y = σ(w₁x₁ + w₂x₂ + b); parallel throughput on a GPU.]

The constraint before 2012:

Backpropagation was popularized in 1986. Multi-layer networks could theoretically solve complex problems. But training was prohibitively slow on CPUs.

The breakthrough:

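Alex Krizhevsky, working with Ilya Sutskever and Geoffrey Hinton, used NVIDIA GPUs (designed for video game rendering) to train AlexNet for ImageNet 2012. Training took about five to six days on two GTX 580 GPUs, a workload that was impractical on the CPUs of the time.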

Why GPUs mattered:

- Neural networks are massive matrix multiplications

- GPUs have thousands of cores for parallel computation

- Video game graphics rely on the same kind of matrix and vector operations

Rich Sutton’s “Bitter Lesson” (2019): Over the long term, general methods that leverage computation tend to win out over methods that build in human knowledge.

We stopped trying to be “smart” with hand-crafted features. We started being “massive” with compute and data.

The Modern Cost Reality: AI Has Marginal Costs Per Inference

As someone who teaches AI implementation, I need to prepare students for a reality that surprises most engineers:

Traditional software:

- Build once, run infinitely at near-zero marginal cost

- Scaling is mostly about infrastructure (servers, bandwidth)

AI systems:

- Every inference costs compute

- A rough estimate: ~$0.10-0.30 in compute per GPT-4 call (exact figures are not public)

- Costs scale roughly linearly with usage

Example from my own work:

When building tutorials for EmiTechLogic, I experimented with AI-generated code explanations. Initial estimate:

- 1,000 students × 10 queries each = 10,000 API calls

- 10,000 × $0.03 = $300/month

Actual usage:

- Students ask way more questions than expected (averaging 40+ queries)

- Longer context windows (including code snippets)

- Retry logic for failed requests

- Actual cost: ~$1,800/month
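The gap between the estimate and the bill is simple arithmetic. In the sketch below, the overhead factor is inferred from the numbers above (longer prompts plus retries), not something I measured separately.

```python
def monthly_cost(users, queries_per_user, cost_per_call, overhead=1.0):
    """Estimated monthly API spend in dollars.

    overhead is a multiplier for what the naive estimate misses
    (longer prompts, retries); 1.0 means no overhead.
    """
    return users * queries_per_user * cost_per_call * overhead

naive = monthly_cost(1_000, 10, 0.03)
# 40 queries per student at the same per-call price
more_queries = monthly_cost(1_000, 40, 0.03)
# Overhead factor implied by the observed ~$1,800 bill (an inference, not a measurement)
implied_overhead = 1_800 / more_queries

print(round(naive))                # 300
print(round(more_queries))         # 1200
print(round(implied_overhead, 2))  # 1.5
```

Query volume alone explains a 4x miss. Context length and retries account for the remaining 1.5x.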

The architectural economics:

The 2012 GPU revolution made AI possible at scale. But in 2024, that same compute dependency means:

- AI has ongoing operational costs, not just development costs

- Inference costs scale with users (unlike traditional SaaS)

- Model size directly impacts profit margins

Practical implications for builders:

- Budget 3-5x your initial API cost estimates

- Implement aggressive caching (semantic similarity search before API calls)

- Use smaller models when possible (GPT-3.5 has been roughly an order of magnitude cheaper per token than GPT-4)

- Control context windows (every extra token costs money)

- Consider self-hosting for high-volume applications (initial investment but lower marginal costs)
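As a starting point for the caching item, here is a sketch of an exact-match cache keyed on a normalized prompt. It is a deliberately simpler stand-in for semantic caching: it catches repeats that differ only in case or whitespace, not paraphrases. `PromptCache` and `fake_model` are hypothetical names, and the model call is stubbed so the example runs offline.

```python
import hashlib

class PromptCache:
    """Exact-match cache keyed on a normalized prompt."""

    def __init__(self, call_model):
        self._call_model = call_model  # any function: prompt -> response
        self._store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str) -> str:
        # Lowercase and collapse whitespace so trivial variations share a key
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def ask(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = self._call_model(prompt)  # only pay for cache misses
        self._store[key] = response
        return response

# Stub standing in for a paid API call.
def fake_model(prompt: str) -> str:
    return f"explanation for: {prompt.strip()}"

cache = PromptCache(fake_model)
cache.ask("Explain list comprehensions")
cache.ask("  explain   LIST comprehensions ")
print(cache.hits, cache.misses)  # 1 1
```

A semantic cache would replace the hash lookup with a nearest-neighbor search over prompt embeddings, at the cost of an embedding call and a similarity threshold to tune.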

Part 6: From RNNs to Transformers—Why Sequential Processing Still Matters

The RNN Era: Sequential Processing and Its Limits

Before 2017, if you wanted to process text with neural networks, you used Recurrent Neural Networks (RNNs) or Long Short-Term Memory networks (LSTMs).

How RNNs work:

Input: "The cat sat on the mat"

Processing:

"The" → hidden state h₁

h₁ + "cat" → hidden state h₂

h₂ + "sat" → hidden state h₃

... (sequential)

In my book, I explain RNNs using the metaphor of reading a book one word at a time, trying to remember everything you’ve read so far.

Two fundamental problems:

1. Parallelization Bottleneck

You can’t compute h₃ until h₂ is complete. This means:

- Can’t leverage GPU parallelism effectively

- Training time scales linearly with sequence length

- Can’t use thousands of GPU cores simultaneously

2. Vanishing Gradients

As sequences get longer, the “memory” of early words fades. By word 100, the network has essentially “forgotten” word 1.
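Both problems show up even in a scalar toy RNN. The weights below are arbitrary illustrative values, not taken from any trained model.

```python
import math

def rnn_forward(inputs, w_in=0.5, w_rec=0.5, h0=0.0):
    """Scalar RNN: each hidden state depends on the previous one."""
    h, states = h0, []
    for x in inputs:  # inherently sequential: step t needs the result of step t-1
        h = math.tanh(w_in * x + w_rec * h)
        states.append(h)
    return states

def gradient_wrt_first_state(states, w_rec=0.5):
    """d h_T / d h_1: product over later steps of w_rec * (1 - h_t ** 2)."""
    grad = 1.0
    for h in states[1:]:
        grad *= w_rec * (1.0 - h * h)
    return grad

short_run = rnn_forward([1.0] * 5)
long_run = rnn_forward([1.0] * 100)

print(abs(gradient_wrt_first_state(short_run)))
print(abs(gradient_wrt_first_state(long_run)))
```

Every factor in the product is at most 0.5 here, so the influence of the first step on step 100 shrinks by dozens of orders of magnitude. That shrinking product is the vanishing gradient.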

2017: Attention Is All You Need

The Transformer architecture solved both problems with self-attention—processing entire sequences simultaneously.

How self-attention works:

Every word generates three vectors:

- Query (Q): “What context am I looking for?”

- Key (K): “What information do I contain?”

- Value (V): “Here’s my actual content”

Example:

Sentence: "The bank approved the loan"

"bank" computes attention scores:

- "bank" ↔ "approved" = 0.8 (high attention - banks approve things)

- "bank" ↔ "loan" = 0.9 (high attention - banks give loans)

- "bank" ↔ "the" = 0.1 (low attention - article)

Result: "bank" understands it's a financial institution, not a riverbank
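The scores in the example are illustrative. In an actual Transformer they come from scaled dot products passed through a softmax, so each word's weights sum to 1. A minimal pure-Python sketch follows, with made-up 2-d embeddings reused as queries, keys, and values (a real model applies learned projections to get Q, K, and V).

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V, one query row at a time."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

tokens = ["The", "bank", "approved", "the", "loan"]
# Made-up embeddings: "bank", "approved", and "loan" point in similar directions.
emb = [[0.1, 0.0], [1.0, 0.8], [0.9, 0.6], [0.1, 0.0], [1.0, 1.0]]

outputs, weights = attention(emb, emb, emb)
for token, w in zip(tokens, weights[1]):  # row 1 is the query for "bank"
    print(f"bank -> {token}: {w:.3f}")
```

Each query row is independent of the others, so on a GPU the loop over `queries` becomes a single matrix multiplication.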

Why this matters:

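- Full parallelization during training: All attention computations for a sequence happen simultaneously, so thousands of GPU cores can work at once

- Shorter gradient paths: Every word directly attends to every other word, which largely sidesteps the vanishing-gradient problem over long distances

- Scalability: Enabled GPT-3 (175B parameters) and GPT-4 (parameter count undisclosed)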

The Trade-off Nobody Mentions: Context Windows Create New Failure Modes

When teaching Transformers, I always emphasize what we gave up:

RNNs:

- Process sequentially (slow)

- But handle unlimited sequence length (theoretically)

- Memory fades gradually

Transformers:

- Process in parallel (fast)

- But hard-limited by context window

- Memory is all-or-nothing (truncation)

The practical problem:

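When your input exceeds the context window (4K, 8K, 32K, 128K tokens), the best case is an explicit error from the API. The dangerous case is a pipeline, library setting, or wrapper that silently truncates the input before the model ever sees it.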

Example from my tutorial work:

I built a code review bot for EmiTechLogic students. It would:

- Take a Python file

- Analyze for bugs and improvements

- Generate feedback

It worked perfectly for files under 500 lines. Then a student submitted a 2,000-line file.

What I expected: Error message (“File too large”)

What happened: The model analyzed the first 1,500 lines, silently ignored the rest, and confidently said “No issues found”—even though the bug was on line 1,800.

The architectural constraint:

Transformers batch-process fixed-size windows. When you exceed the window:

Input: [8,500 tokens]

Context limit: 8,192 tokens

Processing: Truncate to 8,192 tokens (no warning)

Model: Generates based on incomplete input

The fix:

- Always count tokens before sending (use tiktoken library)

- Implement chunking for long inputs

- Add validation to check if output references content from entire input

- Use models with larger context windows when needed (but pay attention to cost)
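The first two items can be sketched as follows. The whitespace tokenizer is a placeholder so the example runs without dependencies. In practice you would pass a counter built on your model's tokenizer (for OpenAI models, `len(tiktoken.get_encoding("cl100k_base").encode(text))` is one option). `chunk_lines` and `check_fits` are hypothetical helper names.

```python
def count_tokens(text: str) -> int:
    """Placeholder tokenizer: whitespace split. Swap in your model's tokenizer."""
    return len(text.split())

def check_fits(text: str, max_tokens: int, counter=count_tokens) -> None:
    """Fail loudly instead of letting the input be truncated."""
    n = counter(text)
    if n > max_tokens:
        raise ValueError(f"input is {n} tokens, limit is {max_tokens}")

def chunk_lines(text: str, max_tokens: int, counter=count_tokens):
    """Split text into chunks of whole lines, each within max_tokens."""
    chunks, current, used = [], [], 0
    for line in text.splitlines():
        n = counter(line)
        if n > max_tokens:
            raise ValueError("single line exceeds the token budget")
        if current and used + n > max_tokens:
            chunks.append("\n".join(current))  # close the chunk before it overflows
            current, used = [], 0
        current.append(line)
        used += n
    if current:
        chunks.append("\n".join(current))
    return chunks

source = "\n".join(f"x{i} = {i}" for i in range(10))  # 10 lines, 3 tokens each
chunks = chunk_lines(source, max_tokens=9)
print(len(chunks))  # 4
```

Splitting on line boundaries keeps each statement intact, but a function that spans two chunks still loses context. For code review, chunking by function or class is usually a better unit.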

Part 7: Why LLMs Hallucinate—The Architectural Explanation

This is the question I get most when teaching: “Why do LLMs confidently generate false information?”

The answer requires understanding the shift from symbolic to probabilistic AI.

Symbolic AI: Closed-World Assumption

Query: "Who won the 1987 World Series?"

System checks database:

- If found: "Minnesota Twins"

- If not found: "I don't know"

The system operates in a closed world—only facts in the database exist. If something isn’t there, the system says “I don’t know.”

Neural Networks: Open-World Assumption

Query: "Who won the 1987 World Series?"

System:

1. Converts query to vector embedding

2. Traverses high-dimensional space

3. Generates most probable token sequence

4. Outputs: "The [Minnesota/Chicago/Boston/...] [Twins/Cubs/Red Sox/...]"

The model lives in a continuous space where every concept is a point in a high-dimensional cloud. It doesn’t “know” facts—it predicts probable continuations.

Why Confidence Doesn’t Mean Accuracy

When I explain this in my courses, I use this metaphor:

LLMs are like a student who:

- Has read 10,000 textbooks (training data)

- Remembers statistical patterns, not specific facts

- When asked a question, generates what “sounds right” based on patterns

- Has the same confident tone whether they know the answer or are guessing

The technical explanation:

    # When you ask: "What is the capital of Bhutan?"
    # The model doesn't query a fact database
    # It predicts tokens:

    P("Thimphu" | context) = 0.85    # High probability
    P("Kathmandu" | context) = 0.08  # Lower probability
    P("Bangkok" | context) = 0.03    # Low probability

    # It samples from this distribution
    # "Confidence" reflects probability distribution, not factual certainty

Why hallucinations happen:

- Training data gaps: If the model never saw accurate information about topic X, it extrapolates from similar patterns

- Conflicting information: Training data contains contradictory facts → model blends them

- Statistical noise: Random variations in the probability distribution

- Prompt ambiguity: Vague queries let the model “drift” into plausible but incorrect territory

The Solution: Neurosymbolic Architecture

After years of teaching and building, I’ve concluded the future is hybrid systems:

Symbolic layer (deterministic):

- Vector databases with verified facts

- APIs for real-time data

- Rule-based constraints for business logic

Neural layer (probabilistic):

- Natural language understanding

- Context synthesis

- Generation and formatting

Integration:

    def answer_query(user_question):
        # Neural: Understand intent
        intent = llm.parse_intent(user_question)

        # Symbolic: Retrieve facts
        facts = database.query(intent)

        # Neural: Generate response
        response = llm.generate_from_facts(facts)

        return response

This is Retrieval-Augmented Generation (RAG)—combining 1980s expert systems with 2024 neural networks.
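A toy version of this pipeline shows where the "I don't know" behavior comes back. Everything here is a stand-in: `FACTS` replaces a real database, the lookup key replaces LLM intent parsing, and `generate` replaces the model call.

```python
# Verified facts table (stand-in for a database or retrieval index)
FACTS = {
    "1987 world series winner": "Minnesota Twins",
}

def retrieve(query_key: str):
    """Symbolic step: exact lookup, returns None when the fact is absent."""
    return FACTS.get(query_key)

def generate(question: str, fact):
    """Stand-in for the neural step: it may only phrase what was retrieved."""
    if fact is None:
        return "I don't know."
    return f"{question} {fact} (source: verified facts table)"

def answer_query(question: str, query_key: str) -> str:
    return generate(question, retrieve(query_key))

print(answer_query("Who won the 1987 World Series?", "1987 world series winner"))
print(answer_query("Who won the 2087 World Series?", "2087 world series winner"))
```

The design choice that matters is the `None` branch: when retrieval comes back empty, the generator is never given the chance to improvise.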

Practical Takeaways: Engineering Lessons Across 70 Years

After teaching neural networks and building AI tutorials, these are the patterns I see repeatedly:

1. Combinatorial Explosion (Llull → LLMs)

Problem: Possibility spaces grow exponentially

Solution: Implement intelligent pruning (temperature, top-p sampling, structured outputs)

2. Abstraction Gap (Turing → Production)

Problem: Symbols drift from reality

Solution: Test on out-of-distribution data, implement validation layers

3. Brittleness vs. Fluidity (Symbolic → Neural)

Problem: Can’t maintain rigid rules with probabilistic systems

Solution: Hybrid neurosymbolic architecture (RAG, function calling)

4. Linear Limitations (XOR → Math)

Problem: Some operations don’t match architectural strengths

Solution: Route to appropriate tools (code execution for math, databases for facts)

5. Hardware Constraints (GPUs → Costs)

Problem: Inference has marginal costs

Solution: Budget 3-5x estimates, aggressive caching, model right-sizing

6. Context Windows (Transformers)

Problem: Silent truncation corrupts data

Solution: Always count tokens, implement chunking, validate outputs

7. Hallucination (Probabilistic Computing)

Problem: Plausibility ≠ truth

Solution: External grounding, retrieval systems, validation

Conclusion: The Pattern Across 750 Years

From Ramon Llull’s rotating discs (1273) to GPT-4 (2024), the same fundamental constraints have shaped machine intelligence:

The core challenge: Building systems that balance exploration (generating novel combinations) with verification (ensuring accuracy).

Symbolic AI leaned too far toward verification (brittle rules).

Neural networks leaned too far toward exploration (creative hallucination).

The future—what I teach at EmiTechLogic and write about in my books—is integration: architecting the boundary between rigid constraints and flexible generation.

This isn’t just history. It’s the engineering foundation you need to build reliable AI systems in 2025.

GitHub Repository: https://github.com/Emmimal/the-engineering-history-of-ai

Recommended Next Steps

For engineers looking to move from theory to implementation, I recommend these high-impact technical deep dives:

- Build a Neural Network from Scratch – Master the underlying backpropagation calculus before you start relying on high-level APIs like PyTorch.

- Optimizing Chunking for RAG Systems – Learn how to structure document retrieval so your LLM stays grounded in reality, solving the “context window” engineering hurdle.

- Python Optimization for AI Workloads – A practical guide to handling the compute-heavy demands of modern data processing and inference.


External Resources for the History of AI

| Pillar / Concept | Resource Title | Description / Relevance | URL / Search Term |
|---|---|---|---|
| 1. Symbolism (Ramón Llull) | Ramon Llull: From the Ars Magna to Artificial Intelligence | A book discussing Llull’s contribution to computer science, focusing on his “Calculus” and “Alphabet of Thought,” foundational to Symbolic AI. | https://www.iiia.csic.es/~sierra/wp-content/uploads/2019/02/Llull.pdf |
| 1. Symbolism (Ramón Llull) | Leibniz, Llull and the Logic of Truth: Precursors of Artificial Intelligence | Academic paper detailing the direct intellectual lineage connecting Llull’s symbolic methods to Leibniz’s work on mechanical calculation. | https://opus4.kobv.de/opus4-oth-regensburg/files/5839/Llull_Leibniz_Artificial_Intelligence.pdf |
| 2. Logic (Hobbes/Leibniz) | Timeline of Artificial Intelligence (Wikipedia) | Provides context on Thomas Hobbes’ “Reasoning is but Reckoning” and Gottfried Wilhelm Leibniz’s development of binary arithmetic and the universal calculus of reasoning. | https://en.wikipedia.org/wiki/Timeline_of_artificial_intelligence |
| 3. Algorithm (Alan Turing) | “Computing Machinery and Intelligence” (1950) | Turing’s seminal paper proposing the ‘Imitation Game’ (Turing Test) and discussing the limits and capabilities of any computation expressible as a machine algorithm. | Search Term: Alan Turing "Computing Machinery and Intelligence" MIND 1950 |
| 4. Activation (McCulloch-Pitts) | “A Logical Calculus of the Ideas Immanent in Nervous Activity” (1943) | The groundbreaking paper that introduced the Formal Neuron Model, reducing the biological neuron to a mathematical “Threshold Gate,” the blueprint for all artificial neural networks. | Search Term: McCulloch and Pitts "A Logical Calculus of the Ideas Immanent in Nervous Activity" 1943 |

Frequently Asked Questions on the History of AI

1. What are the four core concepts that form the intellectual foundation of AI?

The four pillars are:

- Symbolism (Llull): The idea of turning concepts into manipulable tokens.

- Logic (Hobbes/Leibniz): Reducing thought to pure binary calculation (0s and 1s).

- Algorithm (Turing): Defining the universal recipe for computation (sequential steps).

- Activation (McCulloch-Pitts): Modeling the biological decision unit (the neuron).

2. How does Ramón Llull’s Ars Magna relate to modern AI?

Llull’s 13th-century machine was the first systematic attempt to mechanize knowledge by combining concepts (symbols) based on fixed rules. This is the ancestor of Symbolic AI and remains the theoretical basis for modern rule-based expert systems and customer service chatbots.

3. Why is Boolean Logic (0s and 1s) essential if modern AI uses complex math?

Boolean Logic, formalized by George Boole in the 19th century and anticipated by Leibniz’s work on binary arithmetic, is the absolute lowest level of all digital computation. Every complex instruction, from high-level code to deep network calculations, must ultimately be translated into sequences of simple, binary True/False switches. It is the fundamental language of all digital processors.

4. What crucial concept did Alan Turing establish with the Universal Turing Machine (UTM)?

The UTM established the concept of the universal algorithm. It provided the theoretical proof that one single machine could perform any computation that can be expressed as a finite, sequential set of logical instructions, thus defining the limits and possibilities of all future computer programs and AI.

5. How is the McCulloch-Pitts Formal Neuron the building block of Deep Learning?

McCulloch and Pitts modeled the biological neuron as a Threshold Gate. This gate only “fires” (outputs a 1) if the combined input signals exceed a fixed value. Modern networks replace the hard threshold with smooth, trainable activations, but this weighted-sum-then-decide unit remains the conceptual building block of the large, interconnected layers found in modern Neural Network Architectures.
